Natural Language Processing • Ensemble Methods

- Overview

- Ensemble Models

- Ensemble Model’s techniques

- Random Forest

- Gradient Boosting vs Random Forest

- When are tress not useful?

Overview

- Ensemble models leverage the advantages of multiple models to achieve a better solution.

- The underlying idea is that the aggregation of multiple models can often outperform a single model, leading to improved predictive performance.

- Random Forest and Gradient Boosting are popular examples of ensemble decision trees that are highly effective in various domains. Ensemble models present several advantages over individual trees such as improved accuracy, reduced overfitting, increased robustness, insights into feature importance, and flexibility.

Ensemble Models

- Ensemble models combine the predictions of multiple individual models, called base models or weak learners, to make more accurate and robust predictions than individual models alone.

- These ensemble models outperform individual trees by capturing a wider range of patterns and dependencies in the data, leading to more accurate predictions. Their collective decision-making reduces the impact of outliers and noise in the data, leading to better generalization and reduced overfitting.

- Ensemble models are also more robust to changes in the data and less sensitive to individual instances or outliers.

- “Ensembles and cascades are related approaches that leverage the advantages of multiple models to achieve a better solution. Ensembles execute multiple models in parallel and then combine their outputs to make the final prediction.” source

- These models are combine the predictions of multiple individual models, called base models or weak learners, to make more accurate and robust predictions. The idea behind ensemble modeling is that the aggregation of multiple models can often outperform a single model, leading to better predictive performance.

- Random Forest and Gradient Boosting are examples of ensemble decision trees. These ensemble models have proven to be highly effective in various domains and have several advantages over individual trees:

- Improved Accuracy: Ensemble models can achieve higher accuracy compared to individual trees. By combining the predictions of multiple trees, ensemble models can capture a wider range of patterns and dependencies in the data, leading to more accurate predictions. Each individual tree may have its limitations or biases, but the ensemble can overcome these limitations and provide a more comprehensive prediction.

- Reduced Overfitting: Individual decision trees are prone to overfitting, where they memorize the training data and perform poorly on unseen data. Ensemble models help mitigate overfitting by combining the predictions of multiple trees. The ensemble’s collective decision-making reduces the impact of outliers and noise in the data, leading to better generalization and reduced overfitting.

- Increased Robustness: Ensemble models are more robust to changes in the data and less sensitive to individual instances or outliers. While a single decision tree can be highly influenced by a single outlier, an ensemble model considers the collective decisions of multiple trees, making it more resilient to individual data points.

- Feature Importance: Ensemble models provide valuable insights into feature importance. They can measure the contribution of each feature in the ensemble’s decision-making process. This information can be helpful in understanding the underlying patterns in the data and identifying the most influential features.

- Flexibility and Versatility: Ensemble models can be applied to a wide range of problem domains and data types. They can handle both classification and regression tasks and are effective in handling high-dimensional datasets. Ensemble models can also be easily parallelized, allowing for efficient computation on large datasets.

Ensemble Model’s techniques

- Below we will look at the different techniques used by ensemble models.

Bootstrapping

- Before diving into the specifics of bagging and boosting, let’s first understand bootstrapping.

- Bootstrapping is a fundamental technique in ensemble modeling. It involves creating subsets of observations from the original dataset with replacement, enabling a better understanding of the bias and variance within the dataset.

- Each subset has the same size as the original dataset, and random sampling aids in estimating the mean and standard deviation by resampling from the dataset.

- Bootstrapping is a sampling technique that involves creating subsets of observations from the original dataset with replacement.

- Each subset has the same size as the original dataset, and the random sampling allows us to better understand the bias and variance within the dataset. It helps estimate the mean and standard deviation by resampling from the dataset.

Bagging

- Bagging, short for Bootstrap Aggregation, is a straightforward yet powerful ensemble method. It applies the bootstrap procedure to high-variance machine learning algorithms, typically decision trees.

- The idea behind bagging is to combine the results of multiple models, such as decision trees, to obtain a more generalized and robust prediction. It creates subsets (bags) from the original dataset using random sampling with replacement, and each subset is used to train a base model or weak model independently. These models run in parallel and are independent of each other.

- Bagging, short for Bootstrap Aggregation, applies the bootstrap procedure to high-variance machine learning algorithms, typically decision trees. Bagging combines the results of multiple models to obtain a more generalized and robust prediction. It creates subsets (bags) from the original dataset using random sampling with replacement, with each subset used to train a base model or weak model independently. The final prediction is determined by combining the predictions from all the models, often through averaging or majority voting.

- The final prediction is determined by combining the predictions from all the models, often through averaging or majority voting.

Boosting

- Boosting is a sequential process where each subsequent model attempts to correct the errors made by the previous model.

- Boosting involves training learners sequentially, with early learners fitting simple models to the data and subsequent learners analyzing the data for errors. Through this iterative process, boosting aims to convert a collection of weak learners into a stronger and more accurate model. The final model is a weighted combination of all the models.

Bagging vs Boosting

- agging and Boosting are both ensemble learning techniques used to improve the performance of machine learning models. However, their approaches and objectives differ significantly:

- Data Sampling:

- Bagging: In Bagging (short for Bootstrap Aggregating), multiple training datasets are created by randomly sampling from the original dataset with replacement. Each dataset is of the same size as the original dataset.

- Boosting: In Boosting, the training datasets are also created by random sampling with replacement. However, each new dataset gives more weight to the instances that were misclassified by previous models. This allows subsequent models to focus more on difficult cases.

- Model Independence:

- Bagging: In Bagging, each model is built independently of the others. They are trained on different subsets of the data and can be constructed in parallel.

- Boosting: In Boosting, models are built sequentially. Each new model is influenced by the performance of previously built models. Misclassified instances are given higher weights, and subsequent models try to correct those errors.

- Weighting of Models:

- Bagging: In Bagging, all models have equal weight when making predictions. The final prediction is often obtained by averaging the predictions of all models or using majority voting.

- Boosting: In Boosting, models are weighted based on their performance. Models with better classification results are given higher weights. The final prediction is obtained by combining the weighted predictions of all models.

- Objective:

- Bagging: Bagging aims to reduce the variance of a single model. It helps to improve stability and reduce overfitting by combining multiple models trained on different subsets of the data.

- Boosting: Boosting aims to reduce the bias of a single model. It focuses on difficult instances and tries to correct the model’s mistakes by giving more weight to misclassified instances. Boosting can improve the overall accuracy of the model but may be more prone to overfitting.

- Examples:

- Bagging: Random Forest is an extension of Bagging that uses decision trees as base models and combines their predictions to make final predictions.

- Boosting: Gradient Boosting is a popular Boosting algorithm that sequentially adds decision trees to the model, with each new tree correcting the mistakes of the previous ones.

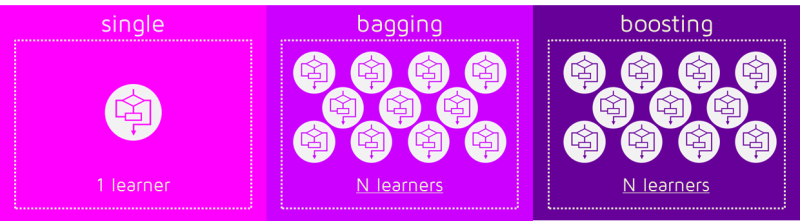

- The image below (source) is an illustrated example of bagging and boosting.

Random Forest

- The motivation behind Random Forests, or ensemble models in general, is the concept of the “Wisdom of the crowd”.

- By aggregating the results of weak learners, specifically Decision Trees, Random Forests generate good predictions by removing dependency on a particular set of features.

- Compared to individual decision trees, Random Forests generalize better on unseen data as they use randomness in feature selection during data sampling, making them less prone to overfitting.

- A random forest is generally better than a decision tree, however, you should note that no algorithm is better than the other it will always depend on the use case & the dataset [Check the No Free Lunch Theorem in the first comment]. Reasons why random forests allow for stronger prediction than individual decision trees: 1) Decision trees are prone to overfit whereas random forest generalizes better on unseen data as it is using randomness in feature selection as well as during sampling of the data. Therefore, random forests have lower variance compared to that of the decision tree without substantially increasing the error due to bias. 2) Generally, ensemble models like Random Forest perform better as they are aggregations of various models (Decision Trees in the case of Random Forest), using the concept of the “Wisdom of the crowd.”

Gradient Boosting vs Random Forest

-

Both Gradient Boosting and Random Forest are decision-tree-based ensemble algorithms and are quite flexible. However, they differ in the way they construct and combine the trees:

- Similarities:

- Both these algorithms are decision-tree based algorithms

- Both these algorithms are ensemble algorithms

- Both are flexible models and do not need much data preprocessing.

- Differences:

- Random forests (Uses Bagging): Trees are arranged in a parallel fashion where the results of all trees are aggregated at the end through averaging or majority vote. Gradient boosting (Uses Boosting): Trees are arranged in a series sequential fashion where every tree tries to minimize the error of the previous tree.

- Radnom forests: Every tree is constructed independently of the other trees. Gradient boosting: Every tree is dependent on the previous tree.

- Advantages of gradient boosting over random forests:

- Gradient boosting can be more accurate than Random forests because we train them to minimize the previous tree’s error.

- Gradient boosting is capable of capturing complex patterns in the data.

- Gradient boosting is better than random forest when used on unbalanced data sets.

- Advantages of random forests over gradient boosting :

- Random forest isless prone to overfit as compared to gradient boosting.

- Random forest has faster training as trees are created parallelly & independent of each other.

- The disadvantage of GB over RF:

- Gradient boosting is more prone to overfitting than random forests due to their focus on mistakes during training iterations and the lack of independence in tree building.

- If the data is noisy the boosted trees might overfit and start modeling the noise.

- In GB training might take longer because every tree is created sequentially.

- Tunning the hyperparameters of gradient boosting is harder than those of random forest.

- Random Forest creates multiple trees in parallel and aggregates their results at the end through averaging or majority voting. Every tree is constructed independently of the others, leading to faster training.

- Gradient Boosting creates trees in a sequential fashion where every tree tries to minimize the error of the previous tree. This often leads to more accurate models but may also lead to overfitting and longer training times.

- When it comes to selecting between the two, the choice depends on the specific problem and dataset at hand.

When are tress not useful?

- When Trees Are Not Useful Tree ensembles like Random Forests and Gradient Boosted Trees are usually a good choice for many machine learning problems.

- However, there are some cases where they may not be the best choice.

- Here are some of the only reasons not to use tree ensembles for your supervised machine learning problem:

- You are working with unstructured data (text, image, audio, video)

- You are doing statistical inference on a parametric model to draw conclusions (for example, causal inference)

- You have strict interpretability requirements from a legal perspective

- You are trying to model a phenomenon with a known relationship in order to extrapolate the relationship (for example, logistic curves to model population growth scenarios)

- You have very restrictive latency and/or memory requirements (sparse linear models and SVMs are superior here)

- Ignoring these, tree ensembles are typically more adaptable and performant. Spend less time trying to beat them, and more time iterating on data quality, feature engineering, and MLOps best practices.