AI Fundamental Concepts

- Overview

- Handwritten ML

- Machine Learning

- Machine Learning

- Optimize Model Performance

- What is the independence assumption for a Naive Bayes classifier?

- Explain the linear regression model and discuss its assumption?

- Explain briefly the K-Means clustering and how can we find the best value of K?

- Explain what is information gain and entropy in the context of decision trees?

- Mention three ways to handle missing or corrupted data in a dataset?

- Strategies for Mitigating the Impact of Outliers in Model Training

- What is an activation function and discuss the use of an activation function? Explain three different types of activation functions?

- Dimensionality reduction techniques

- What do you do when you have a low amount of data and large amount of features

- Sample size

- Define correlation

- What is a Correlation Coefficient?

- Explain Pearson’s Correlation Coefficient

- Explain Spearman’s Correlation Coefficient

- Compare Pearson and Spearman coefficients

- How to choose between Pearson and Spearman correlation?

- Multicollinearity

- Mention three ways to make your model robust to outliers?

- What are L1 and L2 regularization? What are the differences between the two?

- What are the Bias and Variance in a Machine Learning Model and explain the bias-variance trade-off?

- Feature Scaling

- Metrics

- Data

- Randomness

- Sigmoid vs Softmax

- Deep Learning

- Fully Connected Network

- Analogy: A Fully Connected Network as a Highway System 🚗🛣️

- Transformer differences

- Why did the transition happen from RNNs to LSTMs

- What is the difference between self attention and Bahdanau (traditional) attention

- Two Tower

- Why should we use Batch Normalization?

- What is weak supervision?

- Active learning

- What are some applications of RL beyond gaming and self-driving cars?

- You are using a deep neural network for a prediction task. After training your model, you notice that it is strongly overfitting the training set and that the performance on the test isn’t good. What can you do to reduce overfitting?

- A/B Testing

- Small file and big file problem in Big data

- Comparing Group Normalization and Batch Normalization

- Batch Inference vs Online Inference: Methods and Considerations

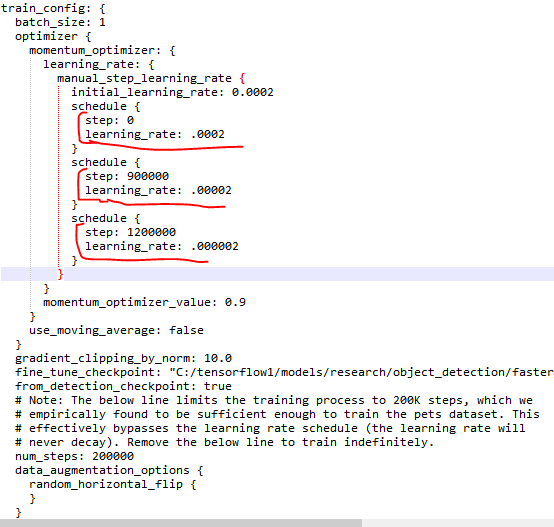

- Learning rate schedules

- How many attention layers do I need if I leverage a Transformer?

- Params, Weights, and Features

- Evaluating Model Architecture Effectiveness

- Generate Embeddings

- What are the differences between a model that minimizes squared error and the one that minimizes the absolute error? and in which cases each error metric would be more appropriate?

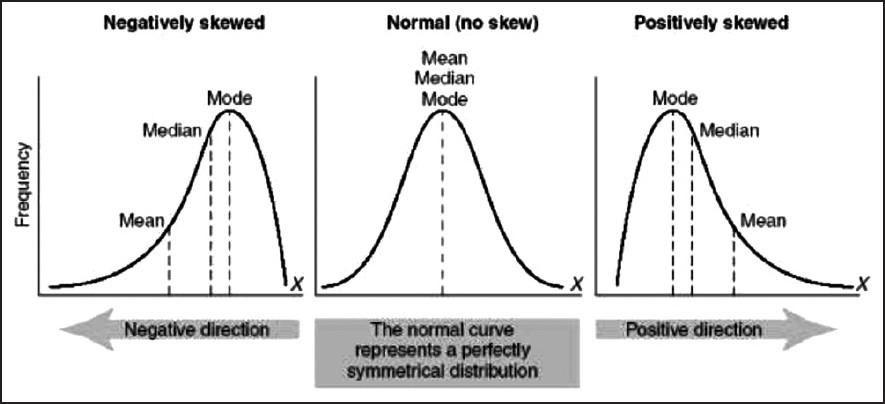

- Given a left-skewed distribution that has a median of 60, what conclusions can we draw about the mean and the mode of the data?

- Can you explain the parameter sharing concept in deep learning?

- What is the meaning of selection bias and how to avoid it?

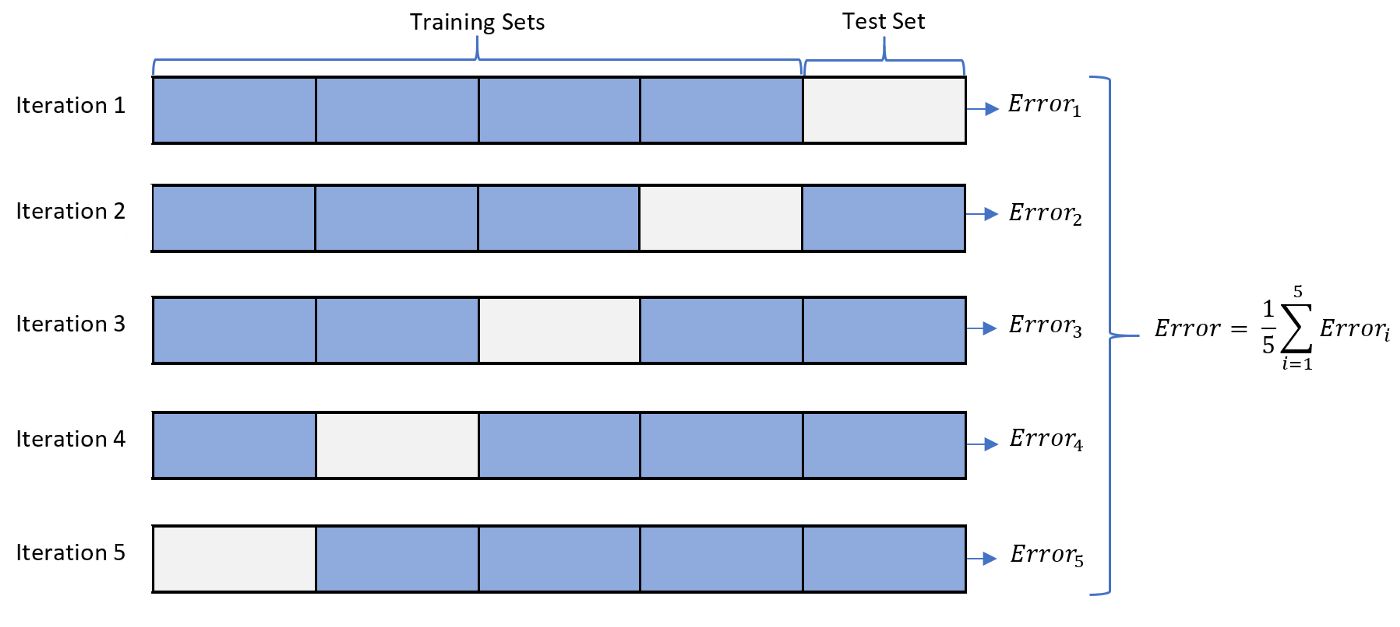

- Define the cross-validation process and the motivation behind using it?

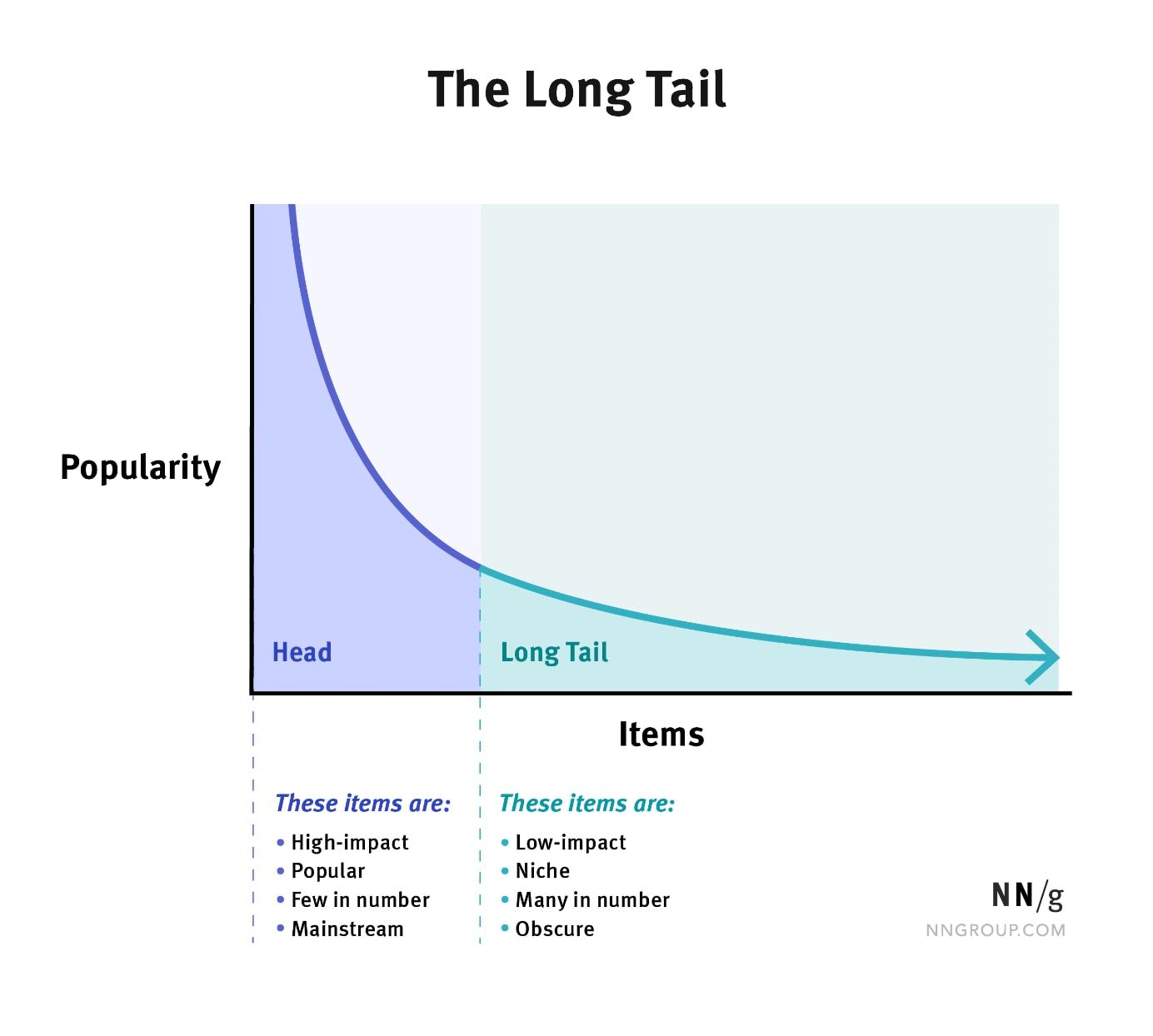

- Explain the long-tailed distribution and provide three examples of relevant phenomena that have long tails. Why are they important in classification and regression problems?

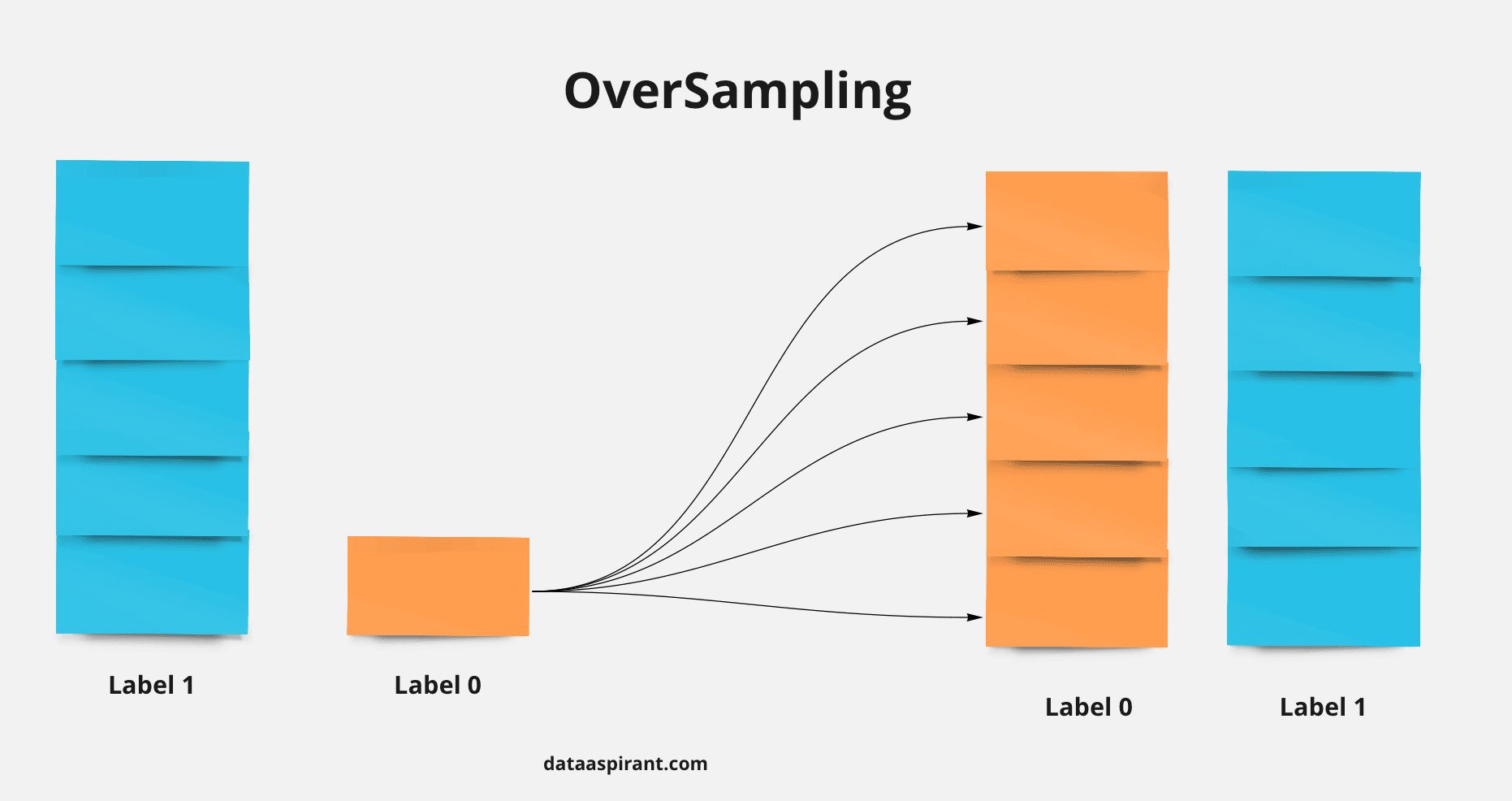

- You are building a binary classifier and found that the data is imbalanced, what should you do to handle this situation?

- What to do with imbalance class

- What is the Vanishing Gradient Problem and how do you fix it?

- What are Residual Networks? How do they help with vanishing gradients?

- How do you run a deep learning model efficiently on-device?

- Evaluating Model Architecture Effectiveness

- Underfitting

- Overfitting

- How do you avoid overfitting? Try one (or more) of the following:

- Data Drift

- Fine-Tuning in Deep Learning

- Libraries for Fine-Tuning

- Code Examples for Each Approach

- Strategies to Manage Data and Semantic Shift

- Detecting Data Drift

- Continuous Training & Testing: Beyond Data Drift

- What is Continuous Training?

- Monitoring and Addressing Drift

- Pretraining vs. Continued Pretraining of Large Language Models (LLMs)

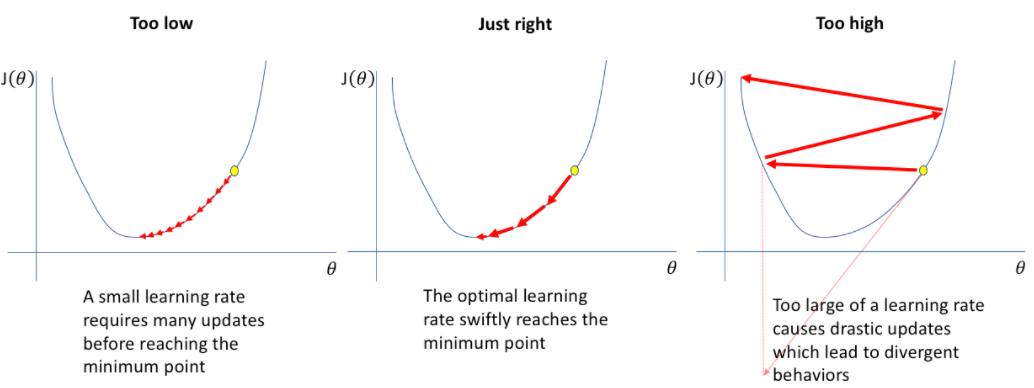

- Describe learning rate schedule/annealing.

- Explain mean/average in terms of attention.

- What is convergence in k-means clustering?

- List some debug steps/reasons for your ML model underperforming on the test data.

- Common Errors and how to solve them

- Not performing one-hot encoding when using categorical_crossentropy

- Small dataset for complex algorithms

- Failure to detect outliers in data

- Failure to verify model assumptions

- Failure to utilize a validation set for hyperparameter tuning

- Less data for training

- Accuracy metric used to evaluate models with data imbalance

- Omitting data normalization

- Using excessively large batch sizes

- Neglecting to apply regularization techniques

- Selecting an incorrect learning rate

- Using an incorrect activation function for the output layer

- How to debug when online and offline results are inconsistent

- Regarding the question about the model file being very large, it could be caused by various factors:

- Why do we initialize weights randomly? / What if we initialize the weights with the same values?

- Misc

- What is the difference between standardization and normalization?

- When do you standardize or normalize features?

- Why is relying on the mean to make a business decision based on data statistics a problem?

- Explain the advantages of the parquet data format and how you can achieve the best data compression with it?

- What is Redis?

- MLOps

- Question and Answers

- References

- Citation

Overview

- From virtual personal assistants to recommendation systems, self-driving cars, and medical diagnostics, AI is powering a new era of intelligent systems that exhibit remarkable capabilities.

- In this article, we’ll go over the fundamental concepts that underpin these remarkable technologies.

Handwritten ML

Machine Learning

- Machine Learning (ML) uses algorithms to parse data, learn from that data, and make informed decisions based on what it has learned. Traditional techniques like decision trees and support vector machines efficiently handle structured data and have applications across various industries such as finance and healthcare. These models excel in environments where relationships in data are quantifiable and predictive accuracy is paramount.

Popular Machine Learning Algorithms: Pros and Cons


| Algorithm | Description | Pros | Cons | Use Cases |
|---|---|---|---|---|
| Linear Regression | Models the relationship between a scalar response and one or more explanatory variables by fitting a linear equation to observed data. |  |  |  |
| Logistic Regression | Used for binary or multi-class classification by modeling the probability that an observation belongs to a certain class. |  |  |  |
| Support Vector Machines | Finds the optimal hyperplane (or set of hyperplanes) in a high-dimensional space to separate different classes or perform regression. |  |  |  |
| Decision Trees | Uses a tree-like structure of decisions and their possible consequences, including chance event outcomes and resource costs. |  |  |  |
| Random Forest | An ensemble of decision trees that combines multiple trees (via bagging) to improve robustness and reduce overfitting. |  |  |  |
| Gradient Boosting | An ensemble method that builds new models in a stage-wise fashion, training each new model to correct the errors of the previous ensemble. |  |  |  |
| k-Nearest Neighbor (k-NN) | Classifies or regresses based on how closely a query data point resembles existing data points in the feature space. |  |  |  |
| k-Means Clustering | Partitions data into K clusters based on similarity, minimizing the within-cluster variance. |  |  |  |
| DBSCAN | Density-Based Spatial Clustering of Applications with Noise (DBSCAN) finds core samples of high density and expands clusters from them. Can discover clusters of arbitrary shape and does not require specifying the number of clusters beforehand. |  |  |  |
| Principal Component Analysis (PCA) | A dimensionality reduction technique that transforms variables into a set of orthogonal components capturing maximal variance in the data. |  |  |  |
| Naive Bayes | A probabilistic classifier based on Bayes’ theorem with the “naive” assumption of conditional independence among features. |  |  |  |
| ANN (Artificial Neural Networks) | Models inspired by biological neural networks that learn from data by adjusting weights in interconnected layers of artificial “neurons.” |  |  |  |
| AdaBoost | An ensemble method that sequentially trains weak learners on misclassified examples, improving performance by combining many such weak models. |  |  |  |

Optimize Model Performance

Maximizing model efficiency involves optimizing training steps, batch sizes, inference speed, and loss reduction techniques. This section highlights key strategies to enhance performance for both machine learning (ML) and deep learning (DL) models.

Optimize Training Efficiency

Step, Batch Size, and Epochs

- A training step involves processing a batch of examples and updating model parameters.

- Batch size influences both computational efficiency and convergence:

- Small batches (e.g., 16, 32): More updates per epoch, improved generalization but slower training.

- Large batches (e.g., 256, 512): Faster training but may require higher learning rates and risk poorer generalization.

- Dynamic Batch Sizes: Gradually increasing batch size during training can improve stability; when the batch size grows, the learning rate is usually scaled up with it (e.g., the linear scaling rule).

- An epoch completes one pass over the full dataset. The number of epochs should be optimized based on validation loss trends and early stopping to prevent overfitting.

Optimization Techniques

- Mixed Precision Training: Uses FP16 where possible to reduce memory consumption and speed up computation.

- Gradient Accumulation: Allows training with larger effective batch sizes when memory is limited.

- Asynchronous Data Loading & Prefetching: Reduce training bottlenecks by overlapping data preprocessing with model computation.

Minimizing Loss & Improving Convergence

- Adaptive Optimizers:

- AdamW: Decouples weight decay from the gradient-based update, which fixes the way Adam’s adaptive learning rates distort a plain L2 penalty.

- Lion Optimizer: Efficient for vision tasks, achieving better convergence with fewer updates.

- Learning Rate Scheduling:

- Cosine Annealing: Reduces learning rate smoothly, improving generalization.

- One-cycle policy: Boosts performance by first increasing, then decreasing the learning rate.

- Regularization Strategies:

- Dropout (for deep learning) and L1/L2 regularization (for ML models) prevent overfitting.

- Label smoothing mitigates overconfidence in classification tasks.

- Gradient Clipping: Prevents exploding gradients in deep networks, stabilizing training.
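Two of the techniques listed above, cosine annealing and gradient clipping, reduce to short formulas. The sketch below is a minimal illustration under stated assumptions: the function names, the default learning rates, and the choice to clip by global L2 norm are all illustrative and are not taken from any particular framework.

```python
import math

def cosine_annealing(step, total_steps, lr_max=1e-3, lr_min=1e-5):
    """Learning rate that decays smoothly from lr_max to lr_min along a half cosine."""
    progress = min(step, total_steps) / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * progress))

def clip_by_global_norm(grads, max_norm):
    """Rescale the gradient vector so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    return [g * max_norm / norm for g in grads]

# Hypothetical 100-step run: the schedule starts at lr_max and ends at lr_min.
schedule = [cosine_annealing(s, 100) for s in range(101)]

# A gradient of norm 5 is scaled down to norm 1; its direction is unchanged.
clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
```

Clipping by norm keeps the direction of the update and only limits its size, which is why it stabilizes training without changing which way the parameters move.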

Optimizing Inference Speed

- Quantization: Converts models to lower-precision formats (e.g., INT8) for faster execution on edge devices.

- Pruning: Removes redundant neurons or weights, reducing model size without major accuracy loss.

- TensorRT / ONNX Runtime: Accelerates deep learning inference on GPUs and specialized hardware.

- Batching & Parallelization: For real-time inference, dynamic batching groups concurrent requests so the hardware is used efficiently, raising throughput while keeping the added queuing latency small.

Advanced Techniques for Deep Learning

Flash Attention

- A cutting-edge improvement over standard attention mechanisms in transformers.

- How it Works: Uses an IO-aware, tiled algorithm that computes exact attention without materializing the full attention matrix, so memory grows linearly rather than quadratically with sequence length.

- Benefits: Improves speed and scalability for large transformer models (e.g., LLaMA, GPT-4).

- Implementation: Available in frameworks like PyTorch (xformers), TensorFlow, and Hugging Face libraries.

Efficient Transformer Variants

- Sparse Attention: Reduces memory footprint by attending to only a subset of tokens (e.g., BigBird, Longformer).

- LoRA (Low-Rank Adaptation): Adapts pretrained transformers efficiently without full retraining.

- FSDP (Fully Sharded Data Parallel): Shards parameters, gradients, and optimizer state across multiple GPUs, so each GPU holds only a slice of a large model and uses less memory than under plain data parallelism.

What is the independence assumption for a Naive Bayes classifier?

- Naive Bayes assumes that the features are conditionally independent given the class \(c\), i.e., once the class is known, the value of one feature tells us nothing more about the value of any other feature.

- This is why the Naive Bayes algorithm is called “naive”.

- Mathematically, the features are independent given class:

\[\begin{aligned} P\left(X_{1}, X_{2} \mid Y\right) &=P\left(X_{1} \mid X_{2}, Y\right) P\left(X_{2} \mid Y\right) \\ &=P\left(X_{1} \mid Y\right) P\left(X_{2} \mid Y\right) \end{aligned}\]

- More generally: \(P\left(X_{1} \ldots X_{n} \mid Y\right)=\prod_{i} P\left(X_{i} \mid Y\right)\)
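The product rule above can be turned into a small classifier. The sketch below is a minimal illustration, assuming categorical features and Laplace smoothing with a hypothetical `alpha` parameter; the toy weather data and the function names are made up for the example.

```python
from collections import Counter

def fit_naive_bayes(rows, labels, alpha=1.0):
    """Count class frequencies and per-class feature-value frequencies."""
    class_counts = Counter(labels)
    n_features = len(rows[0])
    # value_counts[c][i][v] = number of class-c rows whose i-th feature equals v
    value_counts = {c: [Counter() for _ in range(n_features)] for c in class_counts}
    vocab = [set() for _ in range(n_features)]
    for row, c in zip(rows, labels):
        for i, v in enumerate(row):
            value_counts[c][i][v] += 1
            vocab[i].add(v)
    return class_counts, value_counts, vocab, alpha

def posterior(model, row):
    """P(Y | X_1..X_n), proportional to P(Y) * prod_i P(X_i | Y)."""
    class_counts, value_counts, vocab, alpha = model
    total = sum(class_counts.values())
    scores = {}
    for c, n_c in class_counts.items():
        score = n_c / total  # prior P(Y = c)
        for i, v in enumerate(row):
            # The "naive" step: each feature contributes its own factor P(X_i = v | Y = c).
            score *= (value_counts[c][i][v] + alpha) / (n_c + alpha * len(vocab[i]))
        scores[c] = score
    normalizer = sum(scores.values())
    return {c: s / normalizer for c, s in scores.items()}

rows = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"),
        ("rainy", "cool"), ("sunny", "hot"), ("rainy", "cool")]
labels = ["no", "no", "yes", "yes", "no", "yes"]
model = fit_naive_bayes(rows, labels)
probs = posterior(model, ("rainy", "cool"))
```

Because each feature contributes an independent factor, the model needs only one small table per feature and class rather than a table over every combination of feature values.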

Explain the linear regression model and discuss its assumption?

- Linear regression is a supervised statistical model that predicts a continuous dependent variable from one or more independent variables.

- Linear regression is a parametric model: it learns a coefficient for each independent variable (plus an intercept) from the training data, and then predicts the target value given only the independent variables.

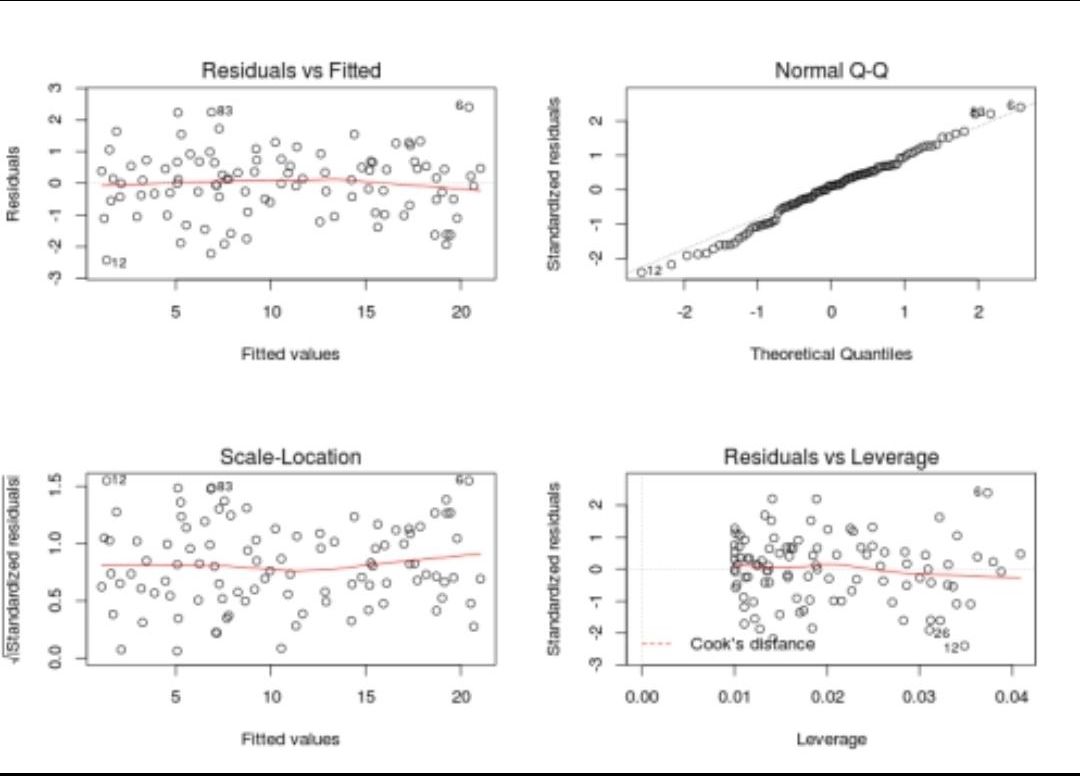

- Some of the linear regression assumptions and how to validate them:

- Linear relationship between independent and dependent variables

- Independent residuals with constant variance at every \(x\) (homoscedasticity): We can check the first two assumptions by plotting the residuals (error terms) against the fitted values (upper left graph). Generally, we should look for a lack of patterns and a consistent variance across the horizontal line.

- Normally distributed residuals: We can check for this using a couple of methods:

- Q-Q plot (upper right graph): If the data is normally distributed, points should roughly align with the 45-degree line.

- Boxplot: It also helps visualize outliers.

- Shapiro–Wilk test: If the p-value is lower than the chosen threshold, then the null hypothesis (the data is normally distributed) is rejected.

- Low multicollinearity

- You can calculate the VIF (Variance Inflation Factor) using your favorite statistical tool. If the value for each covariate is lower than 10 (some say 5), you’re good to go.

- The figure below summarizes these assumptions.
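The checks above can be sketched numerically. The example below is a minimal illustration on synthetic data, assuming a single predictor for the fit and exactly two predictors for the VIF (where the VIF reduces to \(1/(1-R^2)\), with \(R^2\) obtained by regressing one predictor on the other). All names and data are hypothetical; a real analysis would rely on a statistics package.

```python
import random

def mean(values):
    return sum(values) / len(values)

def fit_simple_ols(x, y):
    """Closed-form least squares for y ~ intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

def r_squared(x, y):
    """Share of the variance of y explained by a straight-line fit on x."""
    intercept, slope = fit_simple_ols(x, y)
    my = mean(y)
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - my) ** 2 for yi in y)
    return 1 - ss_res / ss_tot

def vif_two_predictors(x1, x2):
    """VIF of x1 when x2 is the only other predictor in the model."""
    return 1 / (1 - r_squared(x2, x1))

rng = random.Random(0)
x = [i / 10 for i in range(100)]
y = [2.0 + 3.0 * xi + rng.gauss(0, 0.5) for xi in x]  # true intercept 2, slope 3

intercept, slope = fit_simple_ols(x, y)
# Residuals are what the diagnostic plots (residuals vs. fitted, Q-Q) are drawn from.
residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

x_collinear = [2 * xi + rng.gauss(0, 0.1) for xi in x]  # nearly a rescaled copy of x
x_unrelated = [rng.gauss(0, 1) for _ in x]
vif_high = vif_two_predictors(x, x_collinear)
vif_low = vif_two_predictors(x, x_unrelated)
```

A VIF near 1 means the predictor is almost unrelated to the others, while the near-duplicate predictor produces a VIF far above the rule-of-thumb limit of 10.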

Explain briefly the K-Means clustering and how can we find the best value of K?

- K-Means is a well-known clustering algorithm, often used because it is easy to interpret and implement. It starts by selecting \(K\) initial centroids (often arbitrarily, e.g., at random), which partitions the data into \(K\) distinct clusters. It then iteratively updates the partition by first assigning each point to the closest centroid, then recomputing each centroid as the mean of its assigned points, and repeating this process until convergence. The process essentially minimizes the total intra-cluster (within-cluster) variation across all clusters.

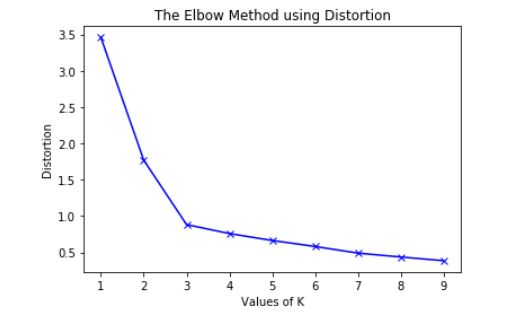

- The elbow method is a well-known method to find the best value of \(K\) in K-means clustering. The intuition behind this technique is that the first few clusters will explain a lot of the variation in the data, but past a certain point, the amount of information added diminishes. Looking at a graph of the explained variation (on the y-axis) versus the number of clusters \(K\) (on the x-axis), there should be a sharp bend (the “elbow”) at some value of \(K\). For example, in the graph the drop-off is at \(k=3\).

- The explained variation is quantified by the within-cluster sum of squared errors. To calculate it, we sum, for each cluster, the squared Euclidean distances between its points and the cluster centroid.

- Another popular method to find the value of \(K\) is the silhouette method, which measures how similar a point is to its own cluster compared to other clusters. It can be calculated with this equation: \((x-y)/max(x,y)\), where \(x\) is the mean distance to the examples of the nearest other cluster, and \(y\) is the mean distance to the other examples in the same cluster. The coefficient varies between -1 and 1 for any given point. A value of 1 implies that the point is in the right cluster and a value of -1 implies that it is in the wrong cluster. By plotting the mean silhouette coefficient on the y-axis versus each \(K\), we can get an idea of the optimal number of clusters. However, it is worth noting that this method is more computationally expensive than the previous one.
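The assign-and-update loop, the within-cluster sum of squared errors used by the elbow method, and the silhouette coefficient can be sketched together. This is a minimal illustration, not a production implementation. It assumes three synthetic, well-separated blobs, and it swaps the arbitrary centroid selection described above for a deterministic farthest-point initialization so that the result is reproducible. All names are hypothetical.

```python
import math
import random

def init_farthest_first(points, k):
    """Deterministic init (an assumption of this sketch): spread centroids apart."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids

def kmeans(points, k, n_iter=100):
    centroids = init_farthest_first(points, k)
    labels = None
    for _ in range(n_iter):
        # Assignment step: each point joins its closest centroid.
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        if new_labels == labels:
            break  # converged: assignments no longer change
        labels = new_labels
        # Update step: each centroid moves to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    # Within-cluster sum of squared errors, the quantity plotted in the elbow method.
    inertia = sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))
    return labels, centroids, inertia

def silhouette(points, labels):
    """Mean of (x - y) / max(x, y) over all points; requires at least two clusters."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)
            continue
        y = sum(math.dist(p, q) for q in own) / (len(own) - 1)  # same cluster
        x = min(sum(math.dist(p, q) for q in other) / len(other)  # nearest other cluster
                for m, other in clusters.items() if m != lab)
        scores.append((x - y) / max(x, y))
    return sum(scores) / len(scores)

rng = random.Random(42)
centers = [(0, 0), (10, 0), (5, 9)]
points = [(cx + rng.gauss(0, 0.5), cy + rng.gauss(0, 0.5))
          for cx, cy in centers for _ in range(20)]

inertias = {k: kmeans(points, k)[2] for k in range(1, 6)}
silhouettes = {k: silhouette(points, kmeans(points, k)[0]) for k in range(2, 6)}
best_k = max(silhouettes, key=silhouettes.get)
```

Plotting `inertias` against \(K\) gives the elbow curve, while `best_k` picks the \(K\) with the highest mean silhouette. Note that the silhouette needs all pairwise distances, which is the extra cost mentioned above.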

Explain what is information gain and entropy in the context of decision trees?

- Entropy and information gain are two key metrics used when constructing a decision tree model: they determine which feature each node should split on and the best way to split.

- The idea of a decision tree is to divide the data set into smaller data sets based on the descriptive features until we reach a small enough set that contains data points that fall under one label.

- Entropy is the measure of impurity, disorder, or uncertainty in a bunch of examples. Entropy controls how a Decision Tree decides to split the data. Information gain calculates the reduction in entropy or surprise from transforming a dataset in some way. It is commonly used in the construction of decision trees from a training dataset, by evaluating the information gain for each variable, and selecting the variable that maximizes the information gain, which in turn minimizes the entropy and best splits the dataset into groups for effective classification.
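Both quantities can be computed directly. The sketch below assumes Shannon entropy in bits (log base 2) and defines information gain as the parent entropy minus the size-weighted entropy of the child groups; the labels and candidate splits are made-up examples.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a collection of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(parent, groups):
    """Entropy of the parent minus the weighted entropy of the groups after a split."""
    n = len(parent)
    weighted = sum(len(g) / n * entropy(g) for g in groups)
    return entropy(parent) - weighted

parent = ["yes"] * 5 + ["no"] * 5

# A split that separates the classes perfectly removes all uncertainty.
perfect_split = [["yes"] * 5, ["no"] * 5]

# A split that leaves both groups evenly mixed removes none.
useless_split = [["yes", "yes", "no", "no"], ["yes", "yes", "yes", "no", "no", "no"]]

gain_perfect = information_gain(parent, perfect_split)
gain_useless = information_gain(parent, useless_split)
```

A tree builder would evaluate every candidate split this way and keep the one with the largest gain.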

Mention three ways to handle missing or corrupted data in a dataset?

- In general, real-world data often has a lot of missing values. The cause can be data corruption or a failure to record data. Handling missing data is very important during preprocessing, as many machine learning algorithms do not support missing values. However, you should start by asking the data owner/stakeholder about the missing or corrupted data. The problem might sit at the data entry level or come from file encoding, etc., in which case fixing it at the source handles it without the need for advanced techniques.

- There are different ways to handle missing data; we will discuss only three of them:

- Deleting the row with missing values

- The first method to handle missing values is to delete the rows or columns that have null values. This is an easy and fast method and can lead to a robust model; however, it loses information in proportion to the amount of missing data, so it should only be applied when the missing data represent a small percentage of the whole dataset.

- Using learning algorithms that support missing values

- Some machine learning algorithms are robust to missing values in the dataset. The K-NN algorithm can ignore a column from a distance measure when a value is missing. Naive Bayes can also support missing values when making a prediction. Tree-based models such as random forest and XGBoost can also handle a dataset with missing or null values, and they work on non-linear and categorical data. The problem with this method is that not every library implementation accepts missing values (in scikit-learn, for example, support depends on the estimator and the version), so you may have to implement the handling yourself.

- Missing value imputation

- Data imputation means substituting estimated values for missing or inconsistent data in your dataset. There are different ways to estimate the replacement values. The simplest is to replace the missing value with the mean, median, or mode (the most repeated value) of the rest of the row or the column. The advantage is that this is an easy and fast way to handle missing data, but it might lead to data leakage (for example, when the statistic is computed over the full dataset, including the test split) and does not factor in the covariance between features. A better way is to use a machine learning model to learn the patterns in the data and predict the missing values. This factors in the covariance between features and, when the model is fit on the training data only, avoids data leakage. The drawback of this method is its computational complexity, especially if your dataset is large.
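Deletion and mean imputation can be sketched in a few lines. The example below is a minimal illustration, assuming missing entries are encoded as `None` and that the column means are learned from the training rows only, which is one way to avoid the leakage mentioned above. The data and function names are hypothetical.

```python
def fit_column_means(rows):
    """Per-column mean over the observed (non-None) values."""
    means = []
    for column in zip(*rows):
        observed = [v for v in column if v is not None]
        means.append(sum(observed) / len(observed))
    return means

def impute(rows, means):
    """Replace every missing entry with the stored mean of its column."""
    return [[means[j] if v is None else v for j, v in enumerate(row)] for row in rows]

train = [[1.0, 10.0], [None, 20.0], [3.0, None], [5.0, 30.0]]
test = [[None, 40.0], [2.0, None]]

# Option 1: deletion. Keep only the rows without any missing value.
complete_rows = [row for row in train if None not in row]

# Option 2: imputation. Statistics come from the training rows only,
# and the same statistics are reused for the test rows.
means = fit_column_means(train)
train_filled = impute(train, means)
test_filled = impute(test, means)
```

Deletion halves this tiny training set, which illustrates why it is only advisable when few rows are affected.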

Strategies for Mitigating the Impact of Outliers in Model Training

- Implementing Regularization Techniques:

- L1 (Lasso) and L2 (Ridge) regularization methods are effective for reducing overfitting, which can be exacerbated by outliers. They work by adding a penalty to the loss function that discourages large weights in the model, thus attenuating the influence of outliers. L1 regularization can also promote sparsity, which may altogether eliminate the impact of some outlier-influenced features.

- Utilizing Tree-Based Algorithms:

- Models like Random Forests and Gradient Boosting Decision Trees inherently possess a higher tolerance to outliers. They don’t rely on the assumption of data being normally distributed since they use hierarchical splitting. Outliers tend to end up in nodes that don’t significantly skew the majority of the data, thereby isolating their influence.

- Applying Log Transformations:

- For datasets where the target variable shows an exponential growth pattern, log transformation can normalize the scale, bringing the data closer to a normal distribution. This can be particularly useful when dealing with right-skewed data, as it dampens the effect of very large values. However, this technique should only be applied when it makes sense for the data distribution and the nature of the variables involved.

- Employing Robust Evaluation Metrics:

- Instead of relying on metrics that are highly sensitive to outliers, such as the Mean Squared Error, switching to more robust alternatives like the Mean Absolute Error or the Median Absolute Error can provide a more reliable measure of model performance in outlier-affected datasets.

- Outlier Detection and Removal:

- In cases where outliers do not contribute to predictive power, especially when they result from errors or noise, it may be justifiable to remove them. This should be done with caution, considering the risk of losing valuable information. Outlier removal should always be backed by a solid rationale that aligns with the overall modeling goals and data understanding.

- By combining these strategies, you can significantly reduce the adverse effects that outliers might have on your predictive models, leading to more robust and reliable outcomes.
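The difference in sensitivity between error metrics is easy to see on a toy example. The sketch below uses made-up predictions in which every error is exactly 1, then adds one outlying target; the median absolute error is included here as one example of a robust metric.

```python
from statistics import median

def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def median_abs_error(y_true, y_pred):
    return median(abs(t - p) for t, p in zip(y_true, y_pred))

y_true = [10, 12, 11, 13, 12]
y_pred = [11, 11, 12, 12, 13]  # every prediction is off by exactly 1

# Add a single outlier: the true value is 100 but the model predicts 12.
y_true_out = y_true + [100]
y_pred_out = y_pred + [12]

clean = (mse(y_true, y_pred), mae(y_true, y_pred), median_abs_error(y_true, y_pred))
with_outlier = (mse(y_true_out, y_pred_out), mae(y_true_out, y_pred_out),
                median_abs_error(y_true_out, y_pred_out))
# clean        -> (1.0, 1.0, 1)
# with_outlier -> (1291.5, 15.5, 1.0)
```

One bad point multiplies the MSE by more than a thousand, the MAE by about fifteen, and leaves the median absolute error unchanged.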

What is an activation function and discuss the use of an activation function? Explain three different types of activation functions?

- In mathematical terms, the activation function serves as a gate between a neuron’s input and the output it passes to the next layer. Basically, it decides whether the neuron should be activated or not. It is used to introduce non-linearity into a model.

- Activation functions are added to introduce non-linearity to the network. Without them, it doesn’t matter how many layers or how many neurons your net has: the output will be a linear combination of the input. In other words, activation functions are what make a neural network different from a linear regression model. We need non-linearity to capture more complex features and model more complex variations that simple linear models cannot capture.

- There are a lot of activation functions:

- Sigmoid function: \(f(x) = 1/(1+exp(-x))\).

- Its output value is between 0 and 1, so we can use it for classification. It has some problems, like the gradient vanishing at the extremes. It is also relatively expensive to compute since it uses exp.

- ReLU: \(f(x) = max(0,x)\).

- It returns 0 if the input is negative and the value of the input if the input is positive. It solves the vanishing gradient problem on the positive side; however, the gradient is still zero on the negative side. It is fast because it is piecewise linear.

- Leaky ReLU: \(f(x) = max(ax, x)\), where \(a\) is a small positive constant (e.g., 0.01).

- It addresses the vanishing gradient on both sides: on the negative side it returns the input scaled by the small slope “a” (so the gradient is “a” rather than 0), and it does the same thing as ReLU on the positive side.

- Softmax: it is usually used at the last layer for a classification problem because it returns a set of probabilities, where the sum of them is 1. Moreover, it is compatible with cross-entropy loss, which is usually the loss function for classification problems.
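The activation functions discussed above fit in a few lines. This is a minimal scalar sketch using only the standard library. The leak slope `a=0.01` is a common but arbitrary choice, and the softmax subtracts the maximum input before exponentiating, a standard trick for numerical stability that is not part of the definition.

```python
import math

def sigmoid(x):
    return 1 / (1 + math.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1 - s)  # peaks at 0.25 for x = 0 and vanishes for large |x|

def relu(x):
    return max(0.0, x)

def leaky_relu(x, a=0.01):
    return x if x > 0 else a * x

def softmax(xs):
    m = max(xs)  # shifting by the max does not change the result
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
grad_center = sigmoid_grad(0.0)
grad_tail = sigmoid_grad(10.0)
```

Comparing `grad_center` with `grad_tail` shows the vanishing gradient of the sigmoid: the slope at an input of 10 is thousands of times smaller than at 0.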

Dimensionality reduction techniques

- Dimensionality reduction techniques help deal with the curse of dimensionality. Some of these are supervised learning approaches whereas others are unsupervised. Here is a quick summary:

- PCA - Principal Component Analysis is an unsupervised learning approach and can handle skewed data easily for dimensionality reduction.

- LDA - Linear Discriminant Analysis is also a dimensionality reduction technique based on eigenvectors, but it also maximizes class separation while doing so. Moreover, it is a supervised learning approach, and it works best when each class is roughly normally distributed with a similar covariance.

- ICA - Independent Component Analysis aims to maximize the statistical independence between variables and is an unsupervised learning approach.

- MDS - Multidimensional Scaling aims to preserve the Euclidean pairwise distances. It is an unsupervised learning approach.

- ISOMAP - Also known as Isometric Mapping, this is another dimensionality reduction technique, which preserves geodesic pairwise distances. It is an unsupervised learning approach. It can handle noisy data well.

- t-SNE - t-distributed Stochastic Neighbor Embedding preserves local structure and is an unsupervised learning approach.
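To make the PCA entry concrete, the sketch below finds the first principal component of a small synthetic dataset. It is an illustration under stated assumptions: the leading eigenvector of the covariance matrix is obtained with plain power iteration (a real project would typically call a linear algebra library), and the data are generated along the line \(y = 2x\) with a little noise.

```python
import math
import random

def pca_first_component(rows, n_iter=200):
    """Return (direction, explained variance ratio, 1-D projection) of the top component."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix of the centered data.
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    # Power iteration: repeatedly apply the covariance matrix and renormalize.
    v = [1.0] * d
    for _ in range(n_iter):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(v[a] * sum(cov[a][b] * v[b] for b in range(d)) for a in range(d))
    total_variance = sum(cov[a][a] for a in range(d))
    projected = [sum(r[j] * v[j] for j in range(d)) for r in centered]
    return v, eigenvalue / total_variance, projected

rng = random.Random(1)
xs = [rng.uniform(-5, 5) for _ in range(200)]
rows = [(x, 2 * x + rng.gauss(0, 0.3)) for x in xs]

direction, explained_ratio, projected = pca_first_component(rows)
```

Here two correlated features collapse into one coordinate (`projected`) while keeping almost all of the variance, which is the sense in which PCA reduces dimensionality.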

What do you do when you have a low amount of data and large amount of features

- When handling a low amount of data with a large number of features:

- Use data augmentation to create more training samples, employing techniques like geometric transformations or noise injection, but avoid excessive augmentation that can lead to misleading patterns.

- Apply dimensionality reduction to address the curse of dimensionality, using feature selection to discard less important features and feature extraction methods like PCA to transform the feature space.

- Reduce overfitting by minimizing the number of features, which can also improve the model’s ability to generalize and increase computational efficiency.

- Ensure data quality, as noisy or inconsistent data can significantly impact model performance, especially when the data is scarce.

- Implement models adept at handling high-dimensional data, like deep neural networks or ensemble methods, but be cautious of overfitting and higher computational demands.

- Decorrelate features using Pearson correlation for linear relationships and Spearman correlation for monotonic relationships, setting a threshold to identify and eliminate redundant features.

- Combine correlation-based feature selection with other methods for a thorough feature engineering process, and choose the correlation measure that best fits the nature of your data and analysis goals.
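The decorrelation step can be sketched as a greedy filter: keep a feature only if its absolute Pearson correlation with every feature kept so far stays below a threshold. The threshold of 0.9, the feature names, and the values below are all hypothetical choices for illustration.

```python
import math

def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def drop_correlated(features, threshold=0.9):
    """Greedy filter: keep a feature unless it is highly correlated with one already kept."""
    kept = []
    for name, values in features.items():
        if all(abs(pearson(values, features[k])) < threshold for k in kept):
            kept.append(name)
    return kept

features = {
    "height_cm": [150, 160, 170, 180, 190],
    "height_in": [59.1, 63.0, 66.9, 70.9, 74.8],  # same information in other units
    "shoe_size": [38, 44, 39, 45, 40],
}

selected = drop_correlated(features)
```

The result depends on iteration order, since the first feature of a correlated pair is the one that survives. Domain knowledge can decide which of the two is more useful to keep.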

Sample size

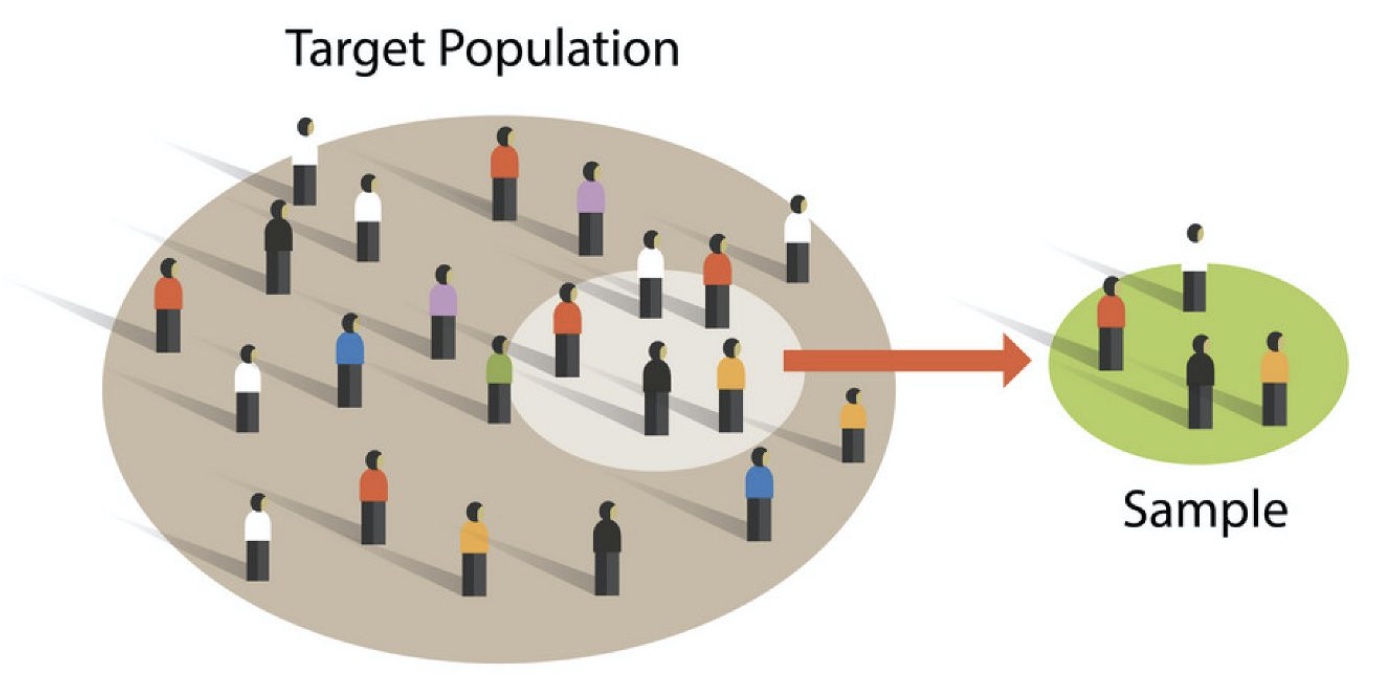

- Sample size refers to the number of data points or observations in the entire dataset. It represents the total amount of data available for training, validation, and testing. The sample size is a characteristic of the dataset itself and remains fixed throughout the training process.

- Population size: Consider the size of the population you are trying to make inferences about. If the population is small, you may need to sample a larger fraction of it to obtain reliable estimates. Conversely, if the population is large, a relatively small fraction might be sufficient, since the required sample size grows only slowly with population size.

- Desired level of precision: Determine the level of precision or margin of error that you are willing to tolerate in your estimates. A smaller margin of error requires a larger sample size.

- Confidence level: Specify the desired level of confidence in your estimates. Commonly used confidence levels are 95% or 99%. Higher confidence levels generally require larger sample sizes.

- Variability of the data: Consider the variability or dispersion of the data you are working with. If the data points are highly variable, you may need a larger sample size to capture the underlying patterns accurately.

- Statistical power: If you are conducting hypothesis tests or performing statistical analyses, you need to consider the statistical power of your study. Higher statistical power often necessitates a larger sample size to detect meaningful effects or differences.

- Available resources: Take into account the resources available to collect and analyze data. If there are limitations in terms of time, cost, or manpower, you may need to make trade-offs and choose a sample size that is feasible within those constraints.

- Prior research or pilot studies: If prior research or pilot studies have been conducted on a similar topic, they can provide insights into the expected effect sizes and variability, which can guide sample size determination.

- Nonlinear algorithms (ANN, SVM, Random Forest), which have the ability to learn complex relationships between input and output features, often require a larger amount of training data compared to linear algorithms. These nonlinear algorithms are more flexible and have higher variance, meaning their predictions can vary based on the specific data used for training.

- For example, if a linear algorithm achieves good performance with a few hundred examples per class, a nonlinear algorithm may require several thousand examples per class to achieve similar performance. Deep learning methods, a type of nonlinear algorithm, can benefit from even larger amounts of data, as they have the potential to further improve their performance with more training examples.

- Also note, more data never hurts!
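The roles of margin of error, confidence level, variability, and population size can be made concrete with the standard formula for estimating a proportion, \(n_0 = z^2 p(1-p)/e^2\), optionally followed by the finite population correction. This formula is an assumption brought in for illustration and is not derived in the text above; it applies to survey-style estimation rather than to model training. The function name and defaults are hypothetical.

```python
import math
from statistics import NormalDist

def sample_size_for_proportion(margin_of_error, confidence=0.95, p=0.5, population=None):
    """Sample size needed to estimate a proportion within +/- margin_of_error."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided critical value
    n0 = z ** 2 * p * (1 - p) / margin_of_error ** 2  # p = 0.5 is the worst case
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)  # finite population correction
    return math.ceil(n0)

n_default = sample_size_for_proportion(0.05)                   # 95% confidence, +/- 5%
n_small_pop = sample_size_for_proportion(0.05, population=1000)
n_stricter = sample_size_for_proportion(0.02)                  # tighter margin of error
n_more_confident = sample_size_for_proportion(0.05, confidence=0.99)
```

With these inputs, the unrestricted case needs 385 observations, while a population of 1000 needs 278, which is a much larger fraction of the population even though the absolute number is smaller.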

Define correlation

- Correlation is the degree to which two variables are linearly related. This is an important step in bi-variate data analysis. In the broadest sense correlation is actually any statistical relationship, whether causal or not, between two random variables in bivariate data.

An important rule to remember is that Correlation doesn’t imply causation.

- Let’s look at two examples to understand what this actually implies.

- The consumption of ice-cream increases during the summer months, so there is a strong correlation between summer temperatures and ice-cream sales. In this particular example there is also a causal relationship, as hot summers do push ice-cream sales up.

- Ice-cream sales also have a strong correlation with shark attacks. Clearly, shark attacks are not caused by ice-cream; both simply rise in summer. So, there is no causation here.

- Hence, we can understand that correlation doesn’t ALWAYS imply causation!

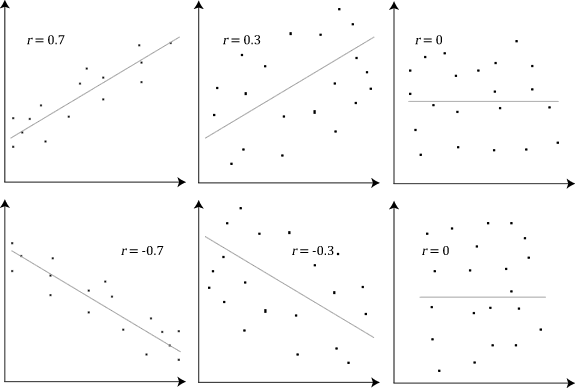

What is a Correlation Coefficient?

- A correlation coefficient is a statistical measure of the strength of the relationship between the relative movements of two variables. The values range between -1.0 and 1.0. A correlation of -1.0 shows a perfect negative correlation, while a correlation of 1.0 shows a perfect positive correlation. A correlation of 0.0 shows no linear relationship between the movement of the two variables.

Explain Pearson’s Correlation Coefficient

- Wikipedia Definition: In statistics, the Pearson correlation coefficient, also referred to as Pearson’s r or the bivariate correlation, is a statistic that measures the linear correlation between two variables X and Y. It has a value between +1 and −1. A value of +1 is a total positive linear correlation, 0 is no linear correlation, and −1 is a total negative linear correlation.

- Important Inference to keep in mind: The Pearson correlation can evaluate ONLY a linear relationship between two continuous variables. (A relationship is linear only when a change in one variable is associated with a proportional change in the other variable.)

- Example use case: We can use the Pearson correlation to evaluate whether an increase in age is associated with an increase in blood pressure.

- Below is an example (source: Wikipedia) of how the Pearson correlation coefficient (r) varies with the strength and the direction of the relationship between the two variables. Note that when no linear relationship could be established (refer to graphs in the third column), the Pearson coefficient yields a value of zero.
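For reference, the sample form of the coefficient can be written as follows. This is the standard textbook formulation, not something stated in the notes above; \(x_i, y_i\) denote the \(n\) paired observations and \(\bar{x}, \bar{y}\) their means:

\[ r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}} \]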

Explain Spearman’s Correlation Coefficient

-

Wikipedia Definition: In statistics, Spearman’s rank correlation coefficient or Spearman’s ρ, named after Charles Spearman is a nonparametric measure of rank correlation (statistical dependence between the rankings of two variables). It assesses how well the relationship between two variables can be described using a monotonic function.

-

Important Inference to keep in mind: The Spearman correlation can evaluate a monotonic relationship between two variables — Continous or Ordinal and it is based on the ranked values for each variable rather than the raw data.

-

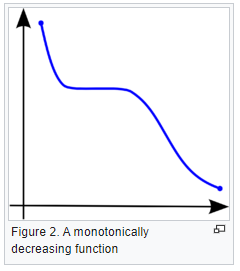

What is a monotonic relationship?

- A monotonic relationship is a relationship that does one of the following:

- As the value of one variable increases, so does the value of the other variable, OR,

- As the value of one variable increases, the other variable value decreases.

- In both cases the rate of change need not be constant, whereas in a linear relationship the rate of increase or decrease is constant.

- Example use case: Whether the order in which employees complete a test exercise is related to the number of months they have been employed, or whether a person’s IQ is correlated with the number of hours spent in front of the TV per week.
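When all \(n\) ranks are distinct (no ties), Spearman’s coefficient reduces to a well-known closed form. It is given here as a reference under that no-ties assumption, with \(d_i\) denoting the difference between the two ranks of observation \(i\):

\[ \rho = 1 - \frac{6 \sum_{i=1}^{n} d_i^2}{n (n^2 - 1)} \]

With ties, the usual approach is to assign average ranks and compute the Pearson correlation of the ranks instead.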

Compare Pearson and Spearman coefficients

- The fundamental difference between the two correlation coefficients is that the Pearson coefficient works with a linear relationship between the two variables whereas the Spearman Coefficient works with monotonic relationships as well.

- One more difference is that Pearson works with raw data values of the variables whereas Spearman works with rank-ordered variables.

- Now, if we feel that a scatterplot is visually indicating a “might be monotonic, might be linear” relationship, our best bet would be to apply Spearman and not Pearson. No harm would be done by switching to Spearman even if the data turned out to be perfectly linear. But, if it’s not exactly linear and we use Pearson’s coefficient then we’ll miss out on the information that Spearman could capture.

- Let’s look at some examples (source: A comparison of the Pearson and Spearman correlation methods):

- Pearson = +1, Spearman = +1

- Pearson = +0.851, Spearman = +1 (This is a monotonically increasing relationship, thus Spearman is exactly +1)

- Pearson = −0.093, Spearman = −0.093

- Pearson = −1, Spearman = −1

- Pearson = −0.799, Spearman = −1 (This is a monotonically decreasing relationship, thus Spearman is exactly −1)

- Note that neither of these coefficients can capture other kinds of non-linear relationships. Thus, if a scatterplot indicates a relationship that cannot be expressed by a linear or monotonic function, neither coefficient should be used to determine the strength of the relationship between the variables.

How to choose between Pearson and Spearman correlation?

- If you want to explore your data it is best to compute both, since the relation between the Spearman (S) and Pearson (P) correlations will give some information. Briefly, \(S\) is computed on ranks and so depicts monotonic relationships while \(P\) is on true values and depicts linear relationships.

- As an example, if you set:

```matlab
x = (1:100)';                  % column vector: corr treats columns as variables
y = exp(x);                    % then,
corr(x, y, 'type', 'Spearman') % will equal 1, and
corr(x, y, 'type', 'Pearson')  % will be about equal to 0.25
```

- This is because \(y\) increases monotonically with \(x\), so the Spearman correlation is perfect, but not linearly, so the Pearson correlation is imperfect.

```matlab
corr(x, log(y), 'type', 'Pearson') % will equal 1
```

- Doing both is interesting because if you have \(S > P\), that means that you have a correlation that is monotonic but not linear. Since it is good to have linearity in statistics (it is easier), you can try to apply a transformation on \(y\) (such as a log).
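The same experiment can be reproduced without MATLAB. The sketch below is a minimal standard-library Python version; the helper names `pearson`, `ranks`, and `spearman` are illustrative, and the ranking helper assumes there are no tied values (true for this data).

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def ranks(values):
    """1-based ranks; assumes all values are distinct (no tie handling)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman(xs, ys):
    """Spearman correlation computed as the Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


x = list(range(1, 101))
y = [math.exp(v) for v in x]

print(round(spearman(x, y), 3))                        # monotonic, so 1.0
print(round(pearson(x, y), 3))                         # roughly 0.25
print(round(pearson(x, [math.log(v) for v in y]), 3))  # linear after the log, so 1.0
```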

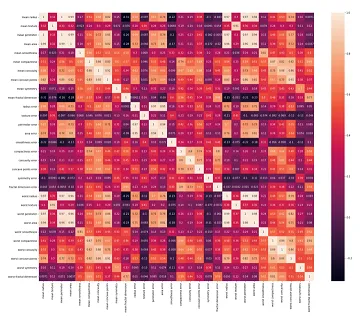

Multicollinearity

- Multicollinearity refers to the high correlation between input features in a dataset, which can adversely affect the performance of machine learning models. To identify multicollinearity, one can calculate the Pearson correlation coefficient or the Spearman correlation coefficient between the input features. The Pearson correlation coefficient measures the linear relationship between variables, while the Spearman correlation coefficient assesses the monotonic relationship between variables.

- Visualizing the correlation coefficients of the input features as a heatmap can effectively reveal multicollinearity. How to read it depends on the color map; in the heatmap referenced here, lighter colors indicate a high correlation, while darker colors indicate a low correlation.

- To mitigate multicollinearity, one approach is to employ Principal Component Analysis (PCA) as a data preprocessing step. PCA leverages the existing correlations among input features to combine them into a new set of uncorrelated features. By applying PCA, multicollinearity can be automatically addressed. After PCA transformation, a new heatmap can be generated to confirm the reduced correlation among the transformed features.

- For a practical demonstration of removing multicollinearity using PCA, you may refer to the article “How do you apply PCA to Logistic Regression to remove Multicollinearity?” to gain hands-on experience in its application.

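As a rough illustration of the detection step, the sketch below flags feature pairs whose absolute Pearson correlation exceeds a threshold. The feature names, the data, and the 0.9 cutoff are all made-up assumptions for the example, not recommendations from the text.

```python
import math
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def correlated_pairs(features, threshold=0.9):
    """Return (name_a, name_b, r) for every feature pair with |r| >= threshold."""
    flagged = []
    for a, b in combinations(features, 2):
        r = pearson(features[a], features[b])
        if abs(r) >= threshold:
            flagged.append((a, b, r))
    return flagged


# Hypothetical housing features: two of them encode the same quantity.
features = {
    "size_m2": [50, 60, 80, 100, 120],
    "size_sqft": [538, 646, 861, 1076, 1292],
    "age_years": [30, 5, 12, 40, 8],
}

for a, b, r in correlated_pairs(features):
    print(f"{a} and {b} are highly correlated (r = {r:.3f})")
```

A pair flagged this way is a candidate for dropping one feature or for combining both through PCA, as described above.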

Mention three ways to make your model robust to outliers?

- Investigating the outliers is always the first step in understanding how to treat them. After you understand why the outliers occurred, you can apply one of the several methods mentioned below.

- Add regularization that will reduce variance, for example, L1 or L2 regularization.

- Use tree-based models (random forest, gradient boosting), which are generally less affected by outliers.

- Winsorize the data. Winsorizing or winsorization is the transformation of statistics by limiting extreme values in the data to reduce the effect of possibly spurious outliers. In numerical data, if the distribution is almost normal, we can detect the outliers using the Z-score and treat them by either removing them or capping them at some value. If the distribution is skewed, we can detect them using the IQR and again either remove or cap them. In categorical data, check the value counts as percentages; if some category has very few records, we can either remove it or merge it into a catch-all category such as “Others”.

- Transform the data; for example, apply a log transformation when the response variable follows an exponential distribution or is right-skewed.

- Use more robust error metrics such as MAE or Huber loss instead of MSE.

- Remove the outliers. Only do this if you are certain that the outliers are true anomalies that are not worth adding to your model. This should be your last consideration, since dropping them means losing information.
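The capping idea can be sketched in a few lines. The example below caps values at the IQR fences (Q1 − 1.5·IQR and Q3 + 1.5·IQR); the 1.5 multiplier and the function name `cap_with_iqr` are assumptions for illustration, and classic winsorization would cap at fixed percentiles instead.

```python
import statistics


def cap_with_iqr(values, k=1.5):
    """Clamp each value into [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, lower), upper) for v in values]


data = [10, 12, 11, 13, 12, 11, 10, 12, 200]  # 200 is the suspicious value
capped = cap_with_iqr(data)
print(capped)  # the last value is pulled down to the upper fence, the rest are unchanged
```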

What are L1 and L2 regularization? What are the differences between the two?

- Regularization is a technique used to avoid overfitting by making the model simpler. One way to apply regularization is to add a penalty on the size of the weights to the loss function, so that minimizing the loss also shrinks unimportant weights. In L1 regularization we add the sum of the absolute values of the weights to the loss function. In L2 regularization we add the sum of the squares of the weights to the loss function.

- So both L1 and L2 regularization are ways to reduce overfitting, but to understand the difference it’s better to know how they are calculated:

- Loss (L2) : Cost function + \(L \sum_i w_i^2\)

- Loss (L1) : Cost function + \(L \sum_i |w_i|\)

- Where \(L\) is the regularization parameter and \(w_i\) are the weights

- L2 regularization adds the squared parameters to the loss. It penalizes large parameters, preventing any single parameter from getting too large and the model from overfitting on any single feature. The weights shrink toward zero but typically never become exactly zero.

- L1 regularization penalizes weights by adding a term to the loss function which is the sum of the absolute values of the weights. This tends to push small parameter values all the way to zero, where they typically stay for the rest of training, removing the corresponding variable from the calculation completely. It therefore helps simplify the model, and it is also helpful for feature selection, as it shrinks to zero the coefficients of features that are not significant in the model.
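To make the contrast concrete, the sketch below computes both penalty terms and applies one shrinkage step of each kind to the same weights, ignoring the data-dependent part of the loss. The names `lam` and `step`, the weight values, and the use of proximal updates (soft-thresholding for L1, rescaling for L2) are illustrative assumptions, not the only way these penalties are optimized.

```python
def l1_penalty(weights, lam):
    """lam * sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """lam * sum of squared weights."""
    return lam * sum(w * w for w in weights)


def l1_shrink(w, step):
    """Soft-thresholding: moves w toward zero by `step` and clips at exactly zero."""
    if w > step:
        return w - step
    if w < -step:
        return w + step
    return 0.0


def l2_shrink(w, step):
    """Proximal step for step * w**2: rescales w toward zero, never reaching it."""
    return w / (1.0 + 2.0 * step)


weights = [0.8, -0.05, 0.02, -1.5]
print(l1_penalty(weights, lam=0.1), l2_penalty(weights, lam=0.1))

after_l1 = [l1_shrink(w, step=0.1) for w in weights]
after_l2 = [l2_shrink(w, step=0.1) for w in weights]
print(after_l1)  # the two small weights become exactly 0.0
print(after_l2)  # every weight is smaller in magnitude, none is zero
```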

What are the Bias and Variance in a Machine Learning Model and explain the bias-variance trade-off?

- The goal of any supervised machine learning model is to estimate the mapping function (f) that predicts the target variable (y) given input (x). The prediction error can be broken down into three parts:

- Bias: Bias is the error introduced by the simplifying assumptions the model makes to make the target function easier to learn. Low bias suggests fewer assumptions made about the form of the target function. High bias suggests more assumptions made about the form of the target function. The smaller the bias error, the better the model is. If the bias error is high, this means that the model is underfitting the training data.

- Variance: Variance is the amount by which the estimate of the target function would change if different training data were used. The target function is estimated from the training data by a machine learning algorithm, so we should expect the algorithm to have some variance. Ideally, it should not change too much from one training dataset to the next, meaning that the algorithm is good at picking out the hidden underlying mapping between the inputs and the output variables. If the variance error is high, this indicates that the model overfits the training data.

- Irreducible error: It is the error introduced from the chosen framing of the problem and may be caused by factors like unknown variables that influence the mapping of the input variables to the output variable. The irreducible error cannot be reduced regardless of what algorithm is used.

- The goal of any supervised machine learning algorithm is to achieve low bias and low variance. In turn, the algorithm should achieve good prediction performance. The parameterization of machine learning algorithms is often a battle to balance out bias and variance.

- For example, suppose you want to predict housing prices given a large set of potential predictors. A model with high bias but low variance, such as linear regression, will be easy to implement, but it will oversimplify the problem. In this context, high bias and low variance mean that the predicted house prices are frequently off from the market value, but the predictions change little when the model is retrained on a different sample of the data.

- On the other side, a model with low bias and high variance, such as a neural network, will lead to predicted house prices closer to the market value, but with predictions that vary widely depending on the particular training data used.
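For squared-error loss, the three-part breakdown above has a standard closed form. It is stated here as a reference under the usual assumptions that \(y = f(x) + \varepsilon\) with zero-mean noise of variance \(\sigma^2\), and that the expectation is taken over training sets and noise; \(\hat{f}\) denotes the fitted model:

\[ \mathbb{E}\big[(y - \hat{f}(x))^2\big] = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2} + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}} + \underbrace{\sigma^2}_{\text{irreducible error}} \]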

Feature Scaling

- Feature scaling is a preprocessing step in machine learning that aims to bring all features or variables to a similar scale or range. It is essential because many machine learning algorithms perform better when the features are on a similar scale. Here are some common techniques for feature scaling:

1) Standardization (Z-score normalization): This technique scales the features to have zero mean and unit variance. It centers and rescales the data without changing the shape of its distribution, so the result is standard normal only if the feature was normally distributed to begin with. Standardization is useful when the features have different scales and the algorithm assumes roughly Gaussian, zero-centered inputs.

2) Normalization (Min-Max scaling): This technique scales the features to a specific range, usually between 0 and 1. It preserves the relative relationships between data points. Normalization is suitable when the data does not follow a Gaussian distribution and the algorithm does not make assumptions about the distribution.

3) Logarithmic Transformation: This technique applies a logarithmic function to the data. It is useful when the data is skewed or has a wide range of values. Logarithmic transformation can help in reducing the impact of outliers and making the data more normally distributed.

4) Robust Scaling: This technique scales the features based on their interquartile range (IQR). It is similar to standardization but uses the median and IQR instead of the mean and standard deviation. Robust scaling is more resistant to outliers compared to standardization.
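The four techniques can be sketched with the standard library alone. The function names and the sample `incomes` data below are illustrative assumptions, and each helper assumes the feature is not constant (otherwise the denominators are zero).

```python
import math
import statistics


def standardize(values):
    """Z-score: subtract the mean, divide by the (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


def min_max(values):
    """Rescale linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def log_transform(values):
    """log(1 + v); assumes non-negative values."""
    return [math.log1p(v) for v in values]


def robust_scale(values):
    """Subtract the median, divide by the interquartile range."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return [(v - median) / (q3 - q1) for v in values]


incomes = [20, 25, 30, 35, 40, 45, 500]  # one extreme value

print([round(v, 2) for v in standardize(incomes)])
print([round(v, 2) for v in min_max(incomes)])
print([round(v, 2) for v in log_transform(incomes)])
print([round(v, 2) for v in robust_scale(incomes)])
```

With the extreme value present, min-max scaling squeezes the other six points close to 0, while robust scaling keeps them spread out, which is the resistance to outliers mentioned above.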

When working with AWS, you can use the following tools for feature scaling:

- Amazon SageMaker Data Wrangler: It provides built-in transformations for feature scaling, including standardization and normalization. You can preprocess your data using Data Wrangler’s visual interface or through its Python SDK.

- AWS Glue: It is a fully managed extract, transform, and load (ETL) service. Glue allows you to create and execute data transformation jobs using Apache Spark. You can leverage Spark’s capabilities to perform feature scaling along with other preprocessing steps.

- Amazon Athena: Athena is an interactive query service that allows you to query data directly from your data lake. You can use SQL queries to perform feature scaling operations within your queries, applying functions like standardization or normalization.

- These tools provide efficient ways to preprocess and scale your features, enabling you to prepare your data for machine learning tasks effectively.

Metrics

Precision

- Definition: Precision is the ratio of true positive predictions to the total predicted positives.

- Formula: Precision = TP / (TP + FP)

- Interpretation: Measures how many of the predicted positive instances are actually positive. High precision indicates a low false positive rate.

Recall (Sensitivity)

- Definition: Recall is the ratio of true positive predictions to the total actual positives.

- Formula: Recall = TP / (TP + FN)

- Interpretation: Measures how many of the actual positive instances are correctly identified. High recall indicates a low false negative rate.

AUC-ROC (Area Under the Receiver Operating Characteristic Curve)

- Definition: AUC-ROC is a performance measurement for classification problems at various threshold settings.

- ROC Curve: Plots the true positive rate (recall) against the false positive rate (1-specificity).

- AUC Value: Represents the probability that the model ranks a randomly chosen positive instance higher than a randomly chosen negative one. A higher AUC indicates better model performance.

- Interpretation: AUC-ROC provides a single metric to compare model performance across different thresholds, with 1 being perfect and 0.5 representing random guessing.
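The definitions above translate directly into code. The sketch below is a minimal standard-library version for binary labels (1 = positive, 0 = negative); the AUC is computed from its ranking interpretation by comparing every positive/negative pair, which is simple but quadratic in the number of samples. The labels and scores are made-up example data.

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels and hard predictions."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fp), tp / (tp + fn)


def roc_auc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.2, 0.4, 0.8, 0.6, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold of 0.5 is an arbitrary choice

precision, recall = precision_recall(y_true, y_pred)
print(precision, recall)        # TP = 2, FP = 1, FN = 1, so both are 2/3
print(roc_auc(y_true, scores))  # 8 of the 9 positive/negative pairs are ordered correctly
```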

Data

Overfitting

- Cross-Validation: Essential for evaluating model performance and ensuring generalization.

- Regularization: Effective for many models and relatively easy to implement (e.g., L1/L2 regularization).

- Early Stopping: Useful in neural networks to prevent over-training.

- Simplify the Model: Reducing complexity is a straightforward way to mitigate overfitting.

- Pruning: Specifically for decision trees and random forests, helps remove overfitted branches.

- Dropout: Specifically for neural networks, helps prevent nodes from co-adapting too much.

- Ensemble Methods: Combines multiple models to improve generalization (e.g., bagging, boosting).

- Train with More Data: If feasible, more data helps the model learn better.

- Data Augmentation: Especially useful in image processing to artificially increase dataset size.

- Feature Selection: Reduces the number of input variables, simplifying the model and reducing overfitting risks.
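Of the techniques listed above, early stopping is easy to show in isolation. The sketch below takes a sequence of per-epoch validation losses and returns the epoch whose weights one would keep; the `patience` rule (stop after that many epochs without improvement) is a common convention assumed here, and the loss values are invented.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch seen before training would stop."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss stopped improving
    return best_epoch


# Validation loss falls, then rises as the model starts to overfit.
val_losses = [1.00, 0.80, 0.70, 0.75, 0.72, 0.90, 0.95]
print(early_stopping_epoch(val_losses))  # 2
```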

Underfitting

- Increase Model Complexity: Use a more complex model or add layers/neurons to a neural network to capture more intricate patterns.

- Feature Engineering: Create new features or transform existing ones to provide more relevant information to the model.

- Decrease Regularization: Reduce the strength of regularization to allow the model to fit the training data better.

- Train Longer: Ensure the model has sufficient training time to learn from the data.

- Use Different Algorithms: Experiment with more complex algorithms that might better capture the data patterns.

- Hyperparameter Tuning: Optimize the model’s hyperparameters to improve its learning capability.

- Remove Noise from Data: Clean the dataset to ensure that irrelevant or incorrect data points do not affect the model’s performance.

- Increase Training Data Quality: Improve the quality of the data rather than quantity, ensuring the data is more representative of the problem.

- Combine Models: Use ensemble methods to combine the predictions of multiple models for a stronger overall model.

- Use Pretrained Models: Leverage transfer learning by using models pretrained on similar tasks and fine-tuning them for your specific problem.

Data Imbalance:

- Data imbalance is a common problem in machine learning where certain classes or outcomes are underrepresented in the training data. This can lead to biased models that perform well on the majority class but poorly on the minority class, simply because the model has not seen enough examples of the minority class to learn from. Data imbalance is especially problematic in applications like fraud detection or disease diagnosis, where the minority class (fraudulent transactions or positive disease cases) is often the most important to detect.

- Strategies to address data imbalance include:

- Resampling Techniques: Adjusting the dataset to balance the class distribution. This can be done through oversampling the minority class, undersampling the majority class, or synthesizing new data with techniques such as SMOTE (Synthetic Minority Over-sampling Technique).

- Cost-Sensitive Learning: Modifying algorithms to make them more sensitive to the minority class by assigning higher misclassification costs to the minority class.

- Anomaly Detection: In cases where the minority class is very rare, anomaly detection techniques might be more appropriate than standard classification methods.

- Ensemble Methods: Using ensemble techniques such as bagging or boosting to improve the robustness of the model against the imbalance.
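For cost-sensitive learning, a common starting point is to weight each class inversely to its frequency. The sketch below uses the heuristic n_samples / (n_classes × class_count), which is the same one scikit-learn documents for `class_weight="balanced"`; the function name and the fraud/legit labels are assumptions for the example.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


labels = ["legit"] * 95 + ["fraud"] * 5
weights = balanced_class_weights(labels)
print(weights)  # the rare class gets a weight of 10.0, the common one about 0.53
```

These weights would then multiply each example's contribution to the loss, so that a misclassified minority example costs more than a misclassified majority example.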

Long Tail Data:

- Long tail data refers to datasets in which a significant portion of the occurrences or events comes from many low-frequency instances. In many real-world datasets, a small number of categories (the “head”) have a high number of instances, and a large number of categories (the “tail”) have a low number of instances.

- The challenges with long tail data include:

- Model Overfitting: The model may overfit to the head of the distribution and perform poorly on the tail instances.

- Underrepresentation: The instances in the long tail are underrepresented, making it difficult for the model to learn from them.

- Addressing long tail issues may involve:

- Tailored Sampling Strategies: Deliberately sampling more instances from the tail to give the model more examples to learn from.

- Specialized Models: Developing models or components of models specifically designed to handle the long tail, such as few-shot learning techniques.

- Transfer Learning: Using transfer learning to leverage information from related domains where data might not be as sparse.

- Meta-Learning: Applying meta-learning approaches which train models on a variety of tasks so they can better adapt to new tasks with limited data.

- In all cases, the key to managing data imbalance, ensuring diversity, and handling long tail data is to be aware of these issues during the dataset construction, model design, and evaluation stages, and to employ strategies that mitigate their potential negative impacts on model performance.

Focal loss for class imbalance

- Focal loss is an alternative loss function to the standard cross-entropy loss used in classification problems, particularly designed to address class imbalance in datasets where there is a large discrepancy between the number of instances in each class. It was introduced by Lin et al. in the paper “Focal Loss for Dense Object Detection,” primarily for improving object detection models where the background class significantly outnumbers the object classes.

- The idea behind focal loss is to modify the cross-entropy loss so that it reduces the relative loss for well-classified examples and focuses more on hard, misclassified examples. This is achieved by adding a modulating factor to the cross-entropy loss, which down-weights the loss assigned to well-classified examples.

- The focal loss function is defined as follows:

\[ FL(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t) \]

Where:

- \(p_t\) is the model’s estimated probability for the true class. If \(p\) is the estimated probability for the class with label \(y = 1\), then \(p_t = p\) when \(y = 1\).

- For the class labeled as \(y = 0\), \(p_t = 1 - p\), reflecting the probability of the negative class.

- \(\alpha_t\) is a weighting factor for the class \(t\), which can be set to inverse class frequency or another vector of values to counteract class imbalance.

- \(\gamma\) is the focusing parameter that smoothly adjusts the rate at which easy examples are down-weighted. When \(\gamma = 0\), focal loss is equivalent to (\(\alpha\)-weighted) cross-entropy loss. As \(\gamma\) increases, the effect of the modulating factor also increases.

- How Focal Loss Helps:

- Balancing the Gradient: In imbalanced datasets, the majority class can dominate the gradient and cause the model to become biased towards it. Focal loss prevents this by reducing the contribution of easy examples, which typically come from the majority class, thereby allowing the model to focus on difficult examples.

- Improving Model Performance: By concentrating on the harder examples, the model is encouraged to learn more complex features that are necessary to classify these examples correctly, often resulting in improved performance on the minority class.

- Flexibility: The hyperparameters \(\alpha_t\) and \(\gamma\) offer flexibility to adjust the focal loss for specific problems and datasets. It allows one to balance the importance of positive/negative samples and the focusing parameter.

- Versatility: While initially proposed for object detection tasks, focal loss has been found beneficial in various other contexts where class imbalance is a significant issue.

- In practice, focal loss has been shown to be particularly effective for training on datasets with extreme class imbalance and has been a critical component in the success of many state-of-the-art object detection models, such as RetinaNet.
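A minimal sketch of the binary case for a single example follows, written in plain Python rather than a deep learning framework. The function names and default values are illustrative assumptions; `alpha=None` disables class weighting so that the effect of the focusing parameter can be seen on its own.

```python
import math


def cross_entropy(p, y):
    """Binary cross-entropy for one example; p is the predicted P(y = 1)."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


def binary_focal_loss(p, y, gamma=2.0, alpha=None):
    """Focal loss for one example: -alpha_t * (1 - p_t)**gamma * log(p_t)."""
    p_t = p if y == 1 else 1.0 - p
    if alpha is None:
        alpha_t = 1.0                              # no class weighting
    else:
        alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = (0.95, 1)  # confident and correct
hard = (0.10, 1)  # confident and wrong

for name, (p, y) in (("easy", easy), ("hard", hard)):
    ratio = binary_focal_loss(p, y) / cross_entropy(p, y)
    print(name, round(ratio, 4))  # fraction of the cross-entropy loss that survives
```

The easy example keeps well under 1% of its cross-entropy loss, while the hard example keeps most of it, which is the down-weighting behaviour described above.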

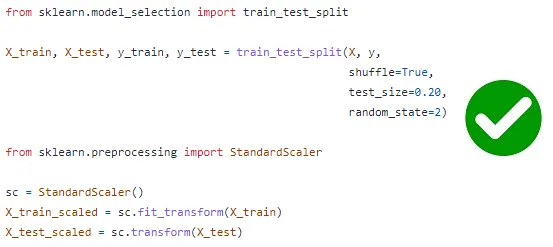

Data leaks

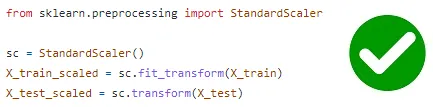

- Data leakage occurs when information from outside the training set, such as statistics of the test set, influences how the model is trained, leading to biased and unreliable results. Two common scenarios where data leakage can occur during preprocessing are feature standardization and other transformations applied to the data.

- In the case of feature standardization, data leakage happens when the entire dataset is standardized before splitting into training and test sets. This is problematic because the test set, which is derived from the full dataset, is used to calculate the mean and standard deviation for standardization. To prevent data leakage, split the data first, compute the mean and standard deviation on the training set only, and then use those training statistics to standardize both the training and test sets.

- Similarly, data leakage can occur when applying transformations to the data, such as using functions like StandardScaler or PCA. If the fit() method of these functions is called twice, once on the training set and again on the test set, new values are computed based on the test set, leading to biased results. To avoid data leakage, it is essential to call the fit() method only on the training set and to apply transform() to the test set.

- By addressing these issues and avoiding data leakage, we can ensure the integrity and reliability of machine learning models.

- Data leakage can compromise the accuracy and generalizability of machine learning models. It is crucial to be cautious during preprocessing and transformation steps to prevent unintentional data leakage. By adhering to best practices and following proper procedures, we can minimize the risk of data leakage and obtain more robust and trustworthy results.
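The fit-on-train-only rule can be illustrated without any library. In the sketch below, `Standardizer` is a hypothetical stand-in that mimics the fit()/transform() pattern mentioned above; it is not the scikit-learn class, and the data is made up.

```python
import statistics


class Standardizer:
    """Toy scaler with a fit/transform interface (illustrative only)."""

    def fit(self, values):
        # Learn the statistics from whatever data is passed in.
        self.mean_ = statistics.fmean(values)
        self.std_ = statistics.pstdev(values)
        return self

    def transform(self, values):
        # Apply the previously learned statistics; nothing is re-estimated here.
        return [(v - self.mean_) / self.std_ for v in values]


train = [1.0, 2.0, 3.0, 4.0, 5.0]
test = [6.0, 7.0]

scaler = Standardizer().fit(train)     # statistics come from the training set only
train_scaled = scaler.transform(train)
test_scaled = scaler.transform(test)   # reuse training statistics; never refit on test

print(scaler.mean_, round(scaler.std_, 3))
print([round(v, 3) for v in test_scaled])
```

Calling `fit` on the full dataset, or again on `test`, would let test-set information shape the preprocessing, which is exactly the leak described above.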

Data Diversity:

- Data diversity refers to the variety and representativeness of the data used to train machine learning models. Lack of diversity can lead to models that do not perform well across different groups or situations. This is a critical issue in areas like facial recognition, where a model trained on non-diverse data might fail to correctly identify faces from underrepresented groups.

- To ensure data diversity, practitioners can:

- Collect More Representative Data: Expand data collection efforts to include a wider range of scenarios, conditions, and demographics.

- Augmentation: Artificially expand the dataset with augmented data that has been modified in ways that are plausible in the real world, such as different lighting conditions for images or different accents in speech recognition.

- Domain Adaptation: Adapt models trained on one domain to work on another domain, helping to generalize better across different conditions.

- Fairness and Bias Evaluation: Use fairness metrics and bias evaluation techniques to actively measure and address issues of fairness in model predictions.

Balancing data:

- Balancing data refers to adjusting the class distribution in a dataset to ensure that each class or category is represented fairly. This is often done when there is a significant class imbalance, meaning some classes have significantly fewer samples compared to others. Balancing the data can help prevent bias and improve the performance of machine learning models.

- Here are some common techniques for balancing data:

- Oversampling: Increase the number of samples in the minority class by randomly replicating existing samples or generating synthetic samples using techniques like SMOTE (Synthetic Minority Over-sampling Technique). This helps to create a more balanced representation of classes.

- Undersampling: Decrease the number of samples in the majority class by randomly removing instances. This method aims to reduce the dominance of the majority class and increase the influence of the minority class.

- Stratified Sampling: During the dataset splitting process (e.g., train-test split or cross-validation), ensure that the ratio of different classes remains consistent in each subset. This helps maintain the class distribution across the training and evaluation phases.

- Ensemble Methods: Utilize ensemble learning techniques that combine multiple models trained on balanced subsets of the data. Each model focuses on a different subset or variation of the data to capture diverse representations.

- Cost-sensitive Learning: Assign different costs or weights to different classes during model training. This gives higher importance to underrepresented classes, forcing the model to pay more attention to them.

- Data Augmentation: Generate additional samples by applying transformations or perturbations to existing data. This technique can help increase the number of samples in the minority class, providing more training data without collecting new data.
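Random oversampling, the simplest of the techniques above, can be sketched as follows. The function name, the seed, and the toy data are assumptions for the example; in practice this would be applied to the training split only, so that duplicated samples do not end up in the evaluation data.

```python
import random
from collections import Counter


def random_oversample(samples, labels, seed=0):
    """Duplicate randomly chosen minority samples until every class matches the largest one."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(group) for group in by_class.values())

    out_samples, out_labels = [], []
    for label, group in by_class.items():
        extra = [rng.choice(group) for _ in range(target - len(group))]
        for sample in group + extra:
            out_samples.append(sample)
            out_labels.append(label)
    return out_samples, out_labels


samples = list(range(10))
labels = ["a"] * 8 + ["b"] * 2

new_samples, new_labels = random_oversample(samples, labels)
print(Counter(new_labels))  # both classes now have 8 samples
```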

Randomness

- Randomness plays a role in machine learning models, and the random state is a parameter used to control the randomness within these models. By using an integer value for the random state, we can ensure consistent results across different executions. However, relying solely on a single random state can be risky, because the measured performance can depend significantly on that particular value.

- For instance, consider the train_test_split() function, which splits a dataset into training and testing sets. The random_state hyperparameter in this function determines the shuffling process prior to the split. Depending on the random state value, different train and test sets will be generated, and the model’s performance is highly influenced by these sets.

- To illustrate this, let’s look at the root mean squared error (RMSE) scores obtained from three linear regression models, where only the random state value in the train_test_split() function was changed:

- Random state = 0 → RMSE: 909.81

- Random state = 35 → RMSE: 794.15

- Random state = 42 → RMSE: 824.33

- As observed, the RMSE values vary significantly depending on the random state.

- To mitigate this issue, it is recommended to run the model multiple times with different random state values and calculate the average RMSE score. However, performing this manually can be tedious. Instead, cross-validation techniques can be employed to automate this process and obtain a more reliable estimate of the model’s performance.

- Relying on a single random state in machine learning models can yield a performance estimate that is not representative, and it is advisable to leverage cross-validation methods to mitigate this issue.
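The idea of averaging over several splits can be sketched with a small k-fold loop. Everything here is illustrative: the helper names, the toy targets, and the "model" (which simply predicts the training mean) are assumptions chosen to keep the example dependency-free.

```python
import math
import random
import statistics


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) for each of k shuffled folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, folds[i]


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


targets = [3.0, 5.0, 4.0, 8.0, 6.0, 7.0, 5.5, 4.5, 9.0, 6.5]

scores = []
for train_idx, test_idx in k_fold_indices(len(targets), k=5):
    prediction = statistics.fmean([targets[i] for i in train_idx])  # toy model: training mean
    fold_truth = [targets[i] for i in test_idx]
    scores.append(rmse(fold_truth, [prediction] * len(fold_truth)))

print([round(s, 2) for s in scores])     # the score varies from fold to fold
print(round(statistics.fmean(scores), 2))  # the average is the more stable estimate
```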

Sigmoid vs Softmax

- Output Range: The sigmoid function outputs a value between 0 and 1 for each input, making it suitable for binary classification. Softmax outputs a probability distribution over multiple classes, with each value between 0 and 1 summing up to 1.

- Use Case: Sigmoid is used for binary classification tasks, where each input needs to be classified into one of two classes. Softmax is used for multi-class classification tasks, where each input is assigned to one of several classes.

- Mathematical Formulation: Sigmoid is defined as \(\sigma(x) = \frac{1}{1 + e^{-x}}\), applying independently to each input. Softmax is defined as \(\text{softmax}(x_i) = \frac{e^{x_i}}{\sum_{j} e^{x_j}}\), normalizing inputs into a probability distribution.

- Gradient Properties: Sigmoid can suffer from vanishing gradients, particularly for extreme values, which can slow down learning. Softmax, while also susceptible to gradient issues, is generally more stable for multi-class classification and allows gradients to propagate effectively across all classes.
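Both functions are short enough to write out. The sketch below follows the formulas above, with two implementation details added as assumptions for numerical safety: the sigmoid branches on the sign of its input, and the softmax subtracts the maximum logit before exponentiating (which leaves the result unchanged).

```python
import math


def sigmoid(x):
    """1 / (1 + exp(-x)), written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softmax(logits):
    """exp(x_i) / sum_j exp(x_j), shifted by max(logits) for stability."""
    shift = max(logits)
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


print(sigmoid(0.0))                                     # 0.5
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs], round(sum(probs), 6))  # three probabilities that sum to 1

# With two classes and logits [x, 0], softmax reduces to the sigmoid of x.
print(round(softmax([1.5, 0.0])[0], 6), round(sigmoid(1.5), 6))
```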

Deep Learning

- Deep Learning (DL) is a specialized branch of machine learning that employs deep neural networks to process and make sense of vast amounts of data automatically. By learning to recognize patterns directly from data, deep learning excels in managing unstructured data such as images and text. This capability has significantly advanced fields like computer vision and natural language processing, pushing the boundaries of applications such as real-time translation and autonomous driving.

Fully Connected Network

- A fully connected layer is a neural network layer where each neuron connects to all neurons in the previous layer. It performs a linear transformation using a weight matrix and bias, followed by an activation function to introduce non-linearity.

- A fully connected network (FCN) consists entirely of FC layers and is commonly used in MLPs (Multilayer Perceptrons).

- Activation functions act as filters, determining which neurons activate, but all connections remain intact, maintaining full connectivity.
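A single fully connected layer can be sketched in plain Python. The layout `weights[j][i]` (weight from input `i` to output neuron `j`), the choice of ReLU, and the numbers are assumptions for the example.

```python
def relu(z):
    """Rectified linear unit: passes positive values, outputs 0 otherwise."""
    return max(0.0, z)


def dense_forward(inputs, weights, biases, activation=relu):
    """Every output neuron takes a weighted sum of ALL inputs, adds a bias, then activates."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


x = [2.0, 1.0]                 # two inputs
W = [[1.0, -1.0], [0.5, 0.5]]  # two output neurons, each connected to both inputs
b = [0.0, -2.0]

out = dense_forward(x, W, b)
print(out)  # [1.0, 0.0]
```

The second neuron outputs 0 because its pre-activation is negative, yet its weights are still part of the layer, which matches the point above that connectivity is unaffected by which neurons happen to activate.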

Analogy: A Fully Connected Network as a Highway System 🚗🛣️

Imagine a highway system where:

- Neurons = Intersections

- Connections (Weights) = Roads between intersections

- Activation Function = Traffic lights controlling traffic flow

- Each intersection (neuron) connects to all intersections in the next city (layer) via roads (weights), ensuring full connectivity. However, activation functions act as traffic lights: some roads (connections) may have a red light (inactive neuron), preventing traffic (signals), while others have a green light (active neuron), allowing data to pass.

- Even if some roads are temporarily blocked, they still exist and can be used later if the activation changes, ensuring that the network remains fully connected at all times.

Transformer differences

- Encoder-Only Models:

- Purpose: Primarily used for tasks requiring understanding or representation of input data, such as text classification and embeddings generation (e.g., BERT).

- Function: The encoder transforms the input into a dense representation (embedding) without generating sequential outputs.

- Encoder-Decoder Models:

- Purpose: Designed for sequence-to-sequence tasks, such as machine translation and text summarization, where input needs to be converted into a different sequence.

- Function: The encoder processes the input into a sequence of contextual representations (embeddings), and the decoder generates the output sequence token by token while attending to those representations through cross-attention, ensuring meaningful transformations.

- Decoder-Only Models:

- Purpose: Used for generative tasks where the model generates sequences from initial input, such as text generation and language modeling (e.g., GPT).

- Function: The decoder autoregressively generates each token in the output sequence based on the previous tokens and initial input, without an explicit encoder phase.
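One concrete way these families typically differ is the self-attention mask: encoders usually let every position attend to every other position, while decoders use a causal mask so a token only sees earlier tokens. The notes above do not spell this out, so treat the sketch below as an illustration under that assumption; `attention_mask` is a hypothetical helper.

```python
import numpy as np

def attention_mask(seq_len, causal):
    """Boolean (seq_len, seq_len) matrix; entry [i, j] is True when
    position i is allowed to attend to position j."""
    if causal:
        # Lower-triangular: position i sees positions 0..i only
        return np.tril(np.ones((seq_len, seq_len), dtype=bool))
    # Bidirectional: every position sees every position
    return np.ones((seq_len, seq_len), dtype=bool)

encoder_mask = attention_mask(4, causal=False)  # encoder-style (e.g., BERT)
decoder_mask = attention_mask(4, causal=True)   # decoder-style (e.g., GPT)

print(encoder_mask.astype(int))
print(decoder_mask.astype(int))
```

In an encoder-decoder model, the encoder side would use the bidirectional mask and the decoder side the causal one, with cross-attention connecting the two.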

Why did the transition happen from RNNs to LSTMs

- Long-term Dependencies: LSTMs effectively capture long-term dependencies in sequences, addressing RNNs’ limitations in handling long-term information due to vanishing gradients.

- Gradient Issues: LSTMs mitigate the vanishing gradient problem that plain RNNs suffer from, which makes training over long sequences more stable. Exploding gradients can still occur and are usually handled with gradient clipping.

- Memory Cells: LSTMs use memory cells and gates (input, forget, and output) to control the flow of information, allowing selective retention and forgetting, which enhances learning efficiency.

- Performance: LSTMs generally outperform RNNs in tasks involving complex temporal patterns, such as language modeling, speech recognition, and time-series prediction.
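To make the gates and memory cell concrete, here is a sketch of a single LSTM time step in NumPy. It follows the standard formulation; the stacked weight layout (input, forget, output, candidate) and the dimensions are assumptions made for this example.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x_t: (input_dim,), h_prev and c_prev: (hidden_dim,)
    W: (4*hidden_dim, input_dim), U: (4*hidden_dim, hidden_dim), b: (4*hidden_dim,)
    Rows are stacked in the order: input gate, forget gate, output gate, candidate.
    """
    n = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:n])          # input gate: how much new information to write
    f = sigmoid(z[n:2 * n])      # forget gate: how much old memory to keep
    o = sigmoid(z[2 * n:3 * n])  # output gate: how much memory to expose
    g = np.tanh(z[3 * n:])       # candidate values for the memory cell
    c_t = f * c_prev + i * g     # additive update of the memory cell
    h_t = o * np.tanh(c_t)       # new hidden state
    return h_t, c_t

rng = np.random.default_rng(0)
input_dim, hidden_dim = 3, 4
W = rng.normal(scale=0.1, size=(4 * hidden_dim, input_dim))
U = rng.normal(scale=0.1, size=(4 * hidden_dim, hidden_dim))
b = np.zeros(4 * hidden_dim)

h, c = np.zeros(hidden_dim), np.zeros(hidden_dim)
for x_t in rng.normal(size=(5, input_dim)):  # a toy sequence of 5 steps
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h, c)
```

The additive cell update `c_t = f * c_prev + i * g` is the part that lets gradients flow across many time steps without being repeatedly squashed.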

What is the difference between self attention and Bahdanau (traditional) attention

- Self-attention computes attention scores within a single sequence, allowing each element to focus on all other elements, enabling the model to capture dependencies regardless of their distance.

- Bahdanau attention (additive attention) is used in sequence-to-sequence models, where the decoder focuses on different parts of the input sequence to generate each output element, using a learned alignment mechanism to determine relevant input parts. Self-attention is typically used in models like Transformers, while Bahdanau attention is common in earlier sequence-to-sequence models built on RNNs and LSTMs.

Bahdanau Attention

- Query (Q): Decoder hidden state at the current time step.

- Key (K) and Value (V): Encoder hidden states.

- Process: Compute attention scores by applying a neural network to (Q, K), use softmax to get weights, and produce a context vector by weighted sum of V.

Self-Attention

- Query (Q), Key (K), and Value (V): All derived from the same input sequence.

- Process: Compute attention scores using dot-product of Q and K, scale, apply softmax to get weights, and produce output by weighted sum of V.

- These methods differ in their source of Q, K, and V and their application context within sequence models.
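The two processes can be placed side by side in NumPy. This is a sketch with randomly initialized parameters: the projection matrices, the attention dimension, and the function names are assumptions for illustration, and the additive score uses the usual form v · tanh(W_q q + W_k k).

```python
import numpy as np

def softmax(x):
    shifted = x - np.max(x, axis=-1, keepdims=True)
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=-1, keepdims=True)

def additive_attention(query, keys, values, W_q, W_k, v):
    """Bahdanau-style attention for one decoder step.

    query: (d_dec,) decoder hidden state; keys, values: (src_len, d_enc) encoder states.
    """
    scores = np.tanh(query @ W_q + keys @ W_k) @ v  # small network scores each (Q, K) pair
    weights = softmax(scores)                       # (src_len,)
    return weights @ values, weights                # context vector, alignment weights

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention: Q, K, V all come from the same sequence X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    weights = softmax(scores)                       # (seq_len, seq_len)
    return weights @ V, weights

rng = np.random.default_rng(0)
enc_states = rng.normal(size=(6, 8))  # 6 source positions, encoder dim 8
dec_state = rng.normal(size=5)        # decoder dim 5

context, bah_w = additive_attention(
    dec_state, enc_states, enc_states,
    W_q=rng.normal(size=(5, 4)), W_k=rng.normal(size=(8, 4)), v=rng.normal(size=4),
)
self_out, self_w = self_attention(
    enc_states,
    W_q=rng.normal(size=(8, 4)), W_k=rng.normal(size=(8, 4)), W_v=rng.normal(size=(8, 4)),
)
print(context.shape, bah_w.shape, self_out.shape, self_w.shape)
```

Bahdanau attention yields one context vector per decoder step, while self-attention yields one output per position of the same sequence.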

Two Tower

- Separate Towers for Users and Items: Two-tower architectures in recommendation systems consist of two neural network models, one for encoding user features and another for item features, allowing for separate and specialized processing of each type.

- Embedding Generation: Each tower generates embeddings for users and items independently, capturing their respective characteristics and preferences.

- Similarity Computation: The embeddings from the user and item towers are then compared using a similarity measure, like dot product or cosine similarity, to generate recommendations.

- Scalability and Flexibility: This architecture allows for efficient retrieval in large-scale systems, as embeddings can be precomputed and indexed, and supports flexible integration of diverse feature types for both users and items.

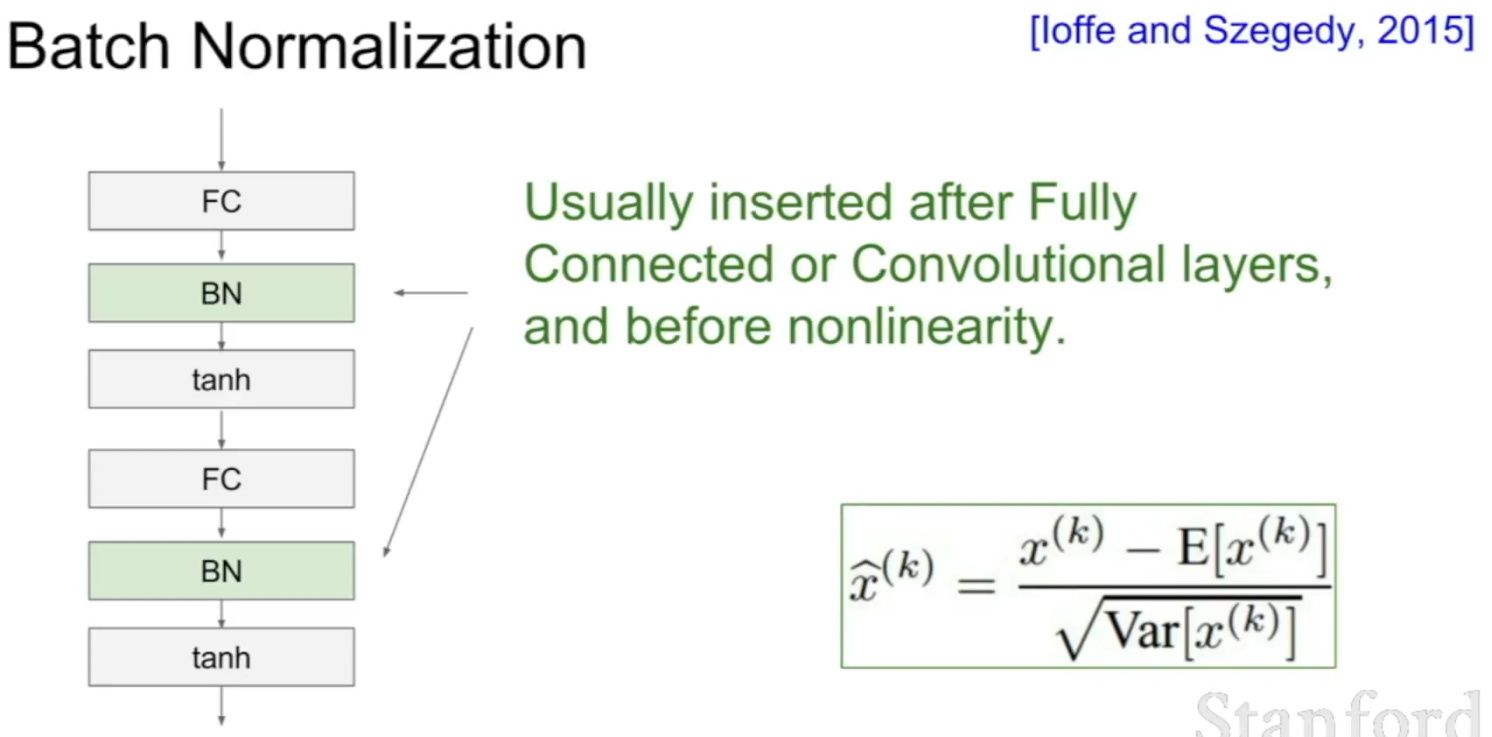
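A minimal sketch of the retrieval flow described above, assuming each tower is a single linear projection followed by L2 normalization. Real towers are deeper networks trained on interaction data; the feature sizes, random weights, and the `tower` helper here are purely illustrative.

```python
import numpy as np

def tower(features, W):
    """Hypothetical one-layer tower: linear projection, then L2-normalize
    so that a dot product between embeddings equals cosine similarity."""
    emb = features @ W
    return emb / np.linalg.norm(emb, axis=-1, keepdims=True)

rng = np.random.default_rng(0)
user_W = rng.normal(size=(6, 4))    # user tower: 6 raw features -> 4-dim embedding
item_W = rng.normal(size=(10, 4))   # item tower: 10 raw features -> 4-dim embedding

# Item embeddings can be computed once, offline, and indexed
item_features = rng.normal(size=(100, 10))
item_embeddings = tower(item_features, item_W)

# At request time only the user tower runs
user_features = rng.normal(size=(1, 6))
user_embedding = tower(user_features, user_W)

scores = (user_embedding @ item_embeddings.T).ravel()  # similarity to every item
top_k = np.argsort(-scores)[:5]                        # indices of the 5 best items
print(top_k, scores[top_k])
```

At scale, the exhaustive `argsort` would typically be replaced by an approximate nearest-neighbor index over the precomputed item embeddings.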

Why should we use Batch Normalization?

- Batch normalization is a technique for training very deep neural networks that standardizes the inputs to a layer for each mini-batch.

- Usually, a dataset is fed into the network in mini-batches, and the distribution of the data differs from batch to batch. As a result, the distribution of each layer’s inputs keeps shifting during training, which can contribute to vanishing or exploding gradients during backpropagation. To combat these issues, we can add a BN layer, most commonly after a fully connected (or convolutional) layer and before its activation function.

- Batch Normalization has the following effects on the Neural Network:

- Robust Training of the deeper layers of the network.

- Makes the network more robust to internal covariate shift.

- Has a slight regularization effect.

- Centered and controlled values of Activation.

- Tries to prevent exploding/vanishing gradient.

- Faster training/convergence.
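The standardization step can be sketched as follows. This covers only the training-mode forward pass using the current mini-batch statistics; `gamma`, `beta`, and `eps` are the usual learnable scale, learnable shift, and stability constant, and the input data is made up.

```python
import numpy as np

def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization for inputs of shape (batch, features)."""
    mean = x.mean(axis=0)                    # per-feature mean over the mini-batch
    var = x.var(axis=0)                      # per-feature variance over the mini-batch
    x_hat = (x - mean) / np.sqrt(var + eps)  # standardize each feature
    return gamma * x_hat + beta              # learnable scale and shift

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(32, 4))  # badly centered, badly scaled activations

out = batch_norm_forward(x, gamma=np.ones(4), beta=np.zeros(4))
print("before:", x.mean(axis=0), x.std(axis=0))
print("after: ", out.mean(axis=0), out.std(axis=0))
```

At inference time, implementations normally replace the batch statistics with running averages collected during training, which this sketch omits.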

What is weak supervision?

- Weak Supervision (which most people know through the Snorkel framework) is an approach designed to help annotate data at scale, and it’s a pretty clever one too.

- Imagine that you have to build a content moderation system that can flag LinkedIn posts that are offensive. Before you can build a model, you’ll first have to get some data. So you’ll scrape posts. A lot of them, because content moderation is particularly data-greedy. Say, you collect 10M of them. That’s when trouble begins: you need to annotate each and every one of them - and you know that’s gonna cost you a lot of time and a lot of money!

- So you want to use autolabeling (basically, you want to apply a pre-trained model) to generate ground truth. The problem is that such a model doesn’t just lie around, as this isn’t your vanilla object detection for autonomous driving use case, and you can’t just use YOLO v5.

- Rather than seek the budget to annotate all that data, you reach out to subject matter experts you know on LinkedIn, and you ask them to give you a list of rules of what constitutes, according to each one of them, an offensive post.

Person 1's rules:

- The post is in all caps

- There is a mention of Politics

Person 2's rules:

- The post is in all caps

- It uses slang

- The topic is not professional

...

Person 20's rules:

- The post is about religion

- The post mentions death

- You then combine all rules into a mega processing engine that functions as a voting system: if a post is flagged as offensive by at least X% of those 20 rule sets, then you label it as offensive. You apply the same logic to all 10M records and are able to annotate them in minutes, at almost no cost.

- You just used a weakly supervised algorithm to annotate your data.

- You can of course replace people’s inputs by embeddings, or some other automatically generated information, which comes in handy in cases where no clear rules can be defined (for example, try coming up with rules to flag a cat in a picture).
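The voting engine can be sketched in plain Python. This is a simplification: each function below stands in for one rule rather than one expert's full rule set, the keyword checks are naive placeholders, and the 50% threshold is an arbitrary stand-in for X%. Frameworks such as Snorkel go further and learn how much to trust each rule instead of taking a plain vote.

```python
def rule_all_caps(post):
    """Flags posts whose letters are all uppercase."""
    letters = [ch for ch in post if ch.isalpha()]
    return bool(letters) and all(ch.isupper() for ch in letters)

def rule_mentions_politics(post):
    """Naive keyword check standing in for 'there is a mention of politics'."""
    return "politics" in post.lower()

def rule_mentions_death(post):
    """Naive keyword check standing in for 'the post mentions death'."""
    return "death" in post.lower()

RULES = [rule_all_caps, rule_mentions_politics, rule_mentions_death]

def weak_label(post, rules=RULES, threshold=0.5):
    """Label a post offensive when at least `threshold` of the rules flag it."""
    votes = sum(1 for rule in rules if rule(post))
    return votes / len(rules) >= threshold

posts = [
    "I HATE POLITICS",
    "Excited to share my new role!",
    "Thoughts on politics in the office",
]
labels = [weak_label(p) for p in posts]
print(list(zip(posts, labels)))
```

Only the first post is flagged, since two of the three rules fire on it; the third post triggers a single rule and stays below the threshold.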

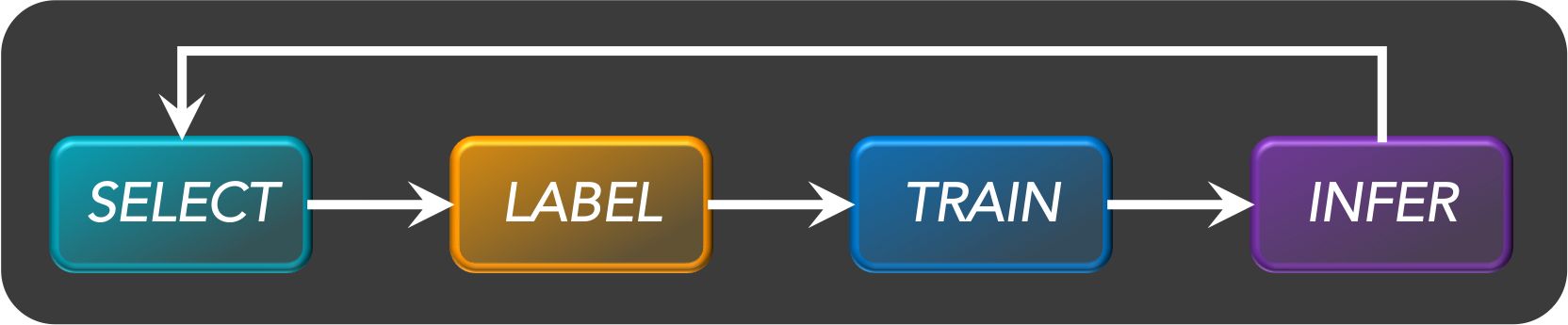

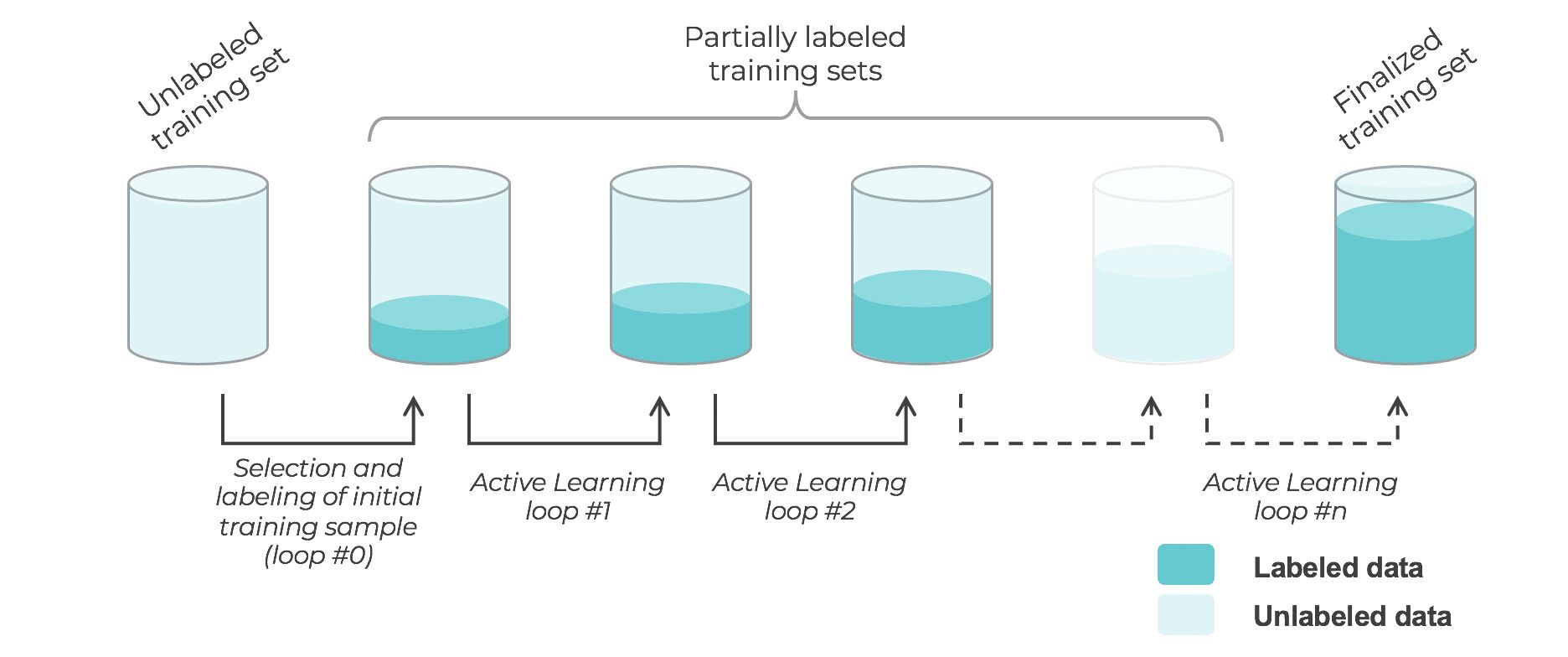

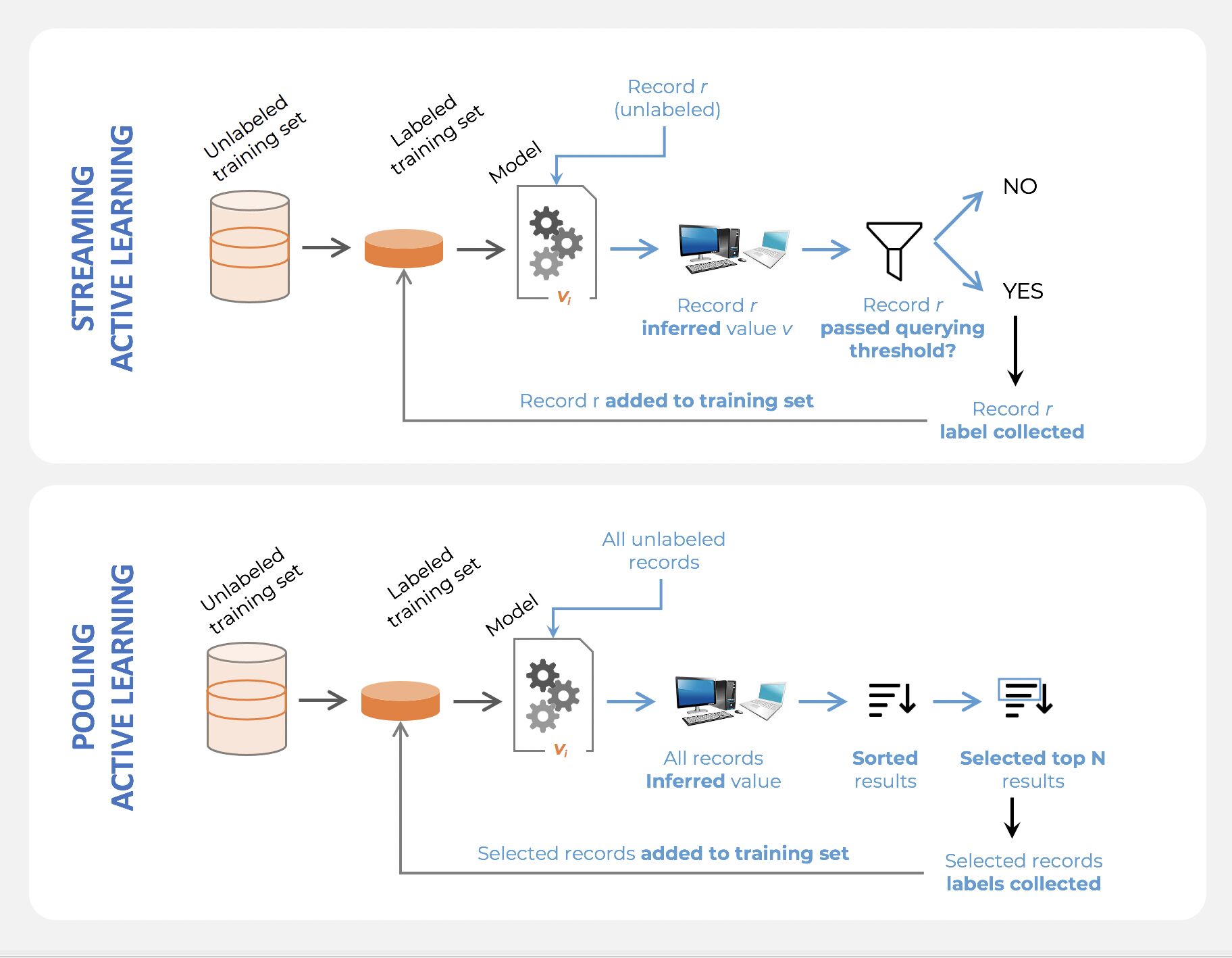

Active learning

- Active learning is a semi-supervised ML training paradigm which, like all semi-supervised learning techniques, relies on the usage of partially labeled data.

- Active Learning consists of dynamically selecting the most relevant data by sequentially:

- selecting a sample of the raw (unannotated) dataset (the algorithm used for that selection step is called a querying strategy).

- getting the selected data annotated.

- training the model with that sample of annotated training data.

- running inference on the remaining (unannotated) data.

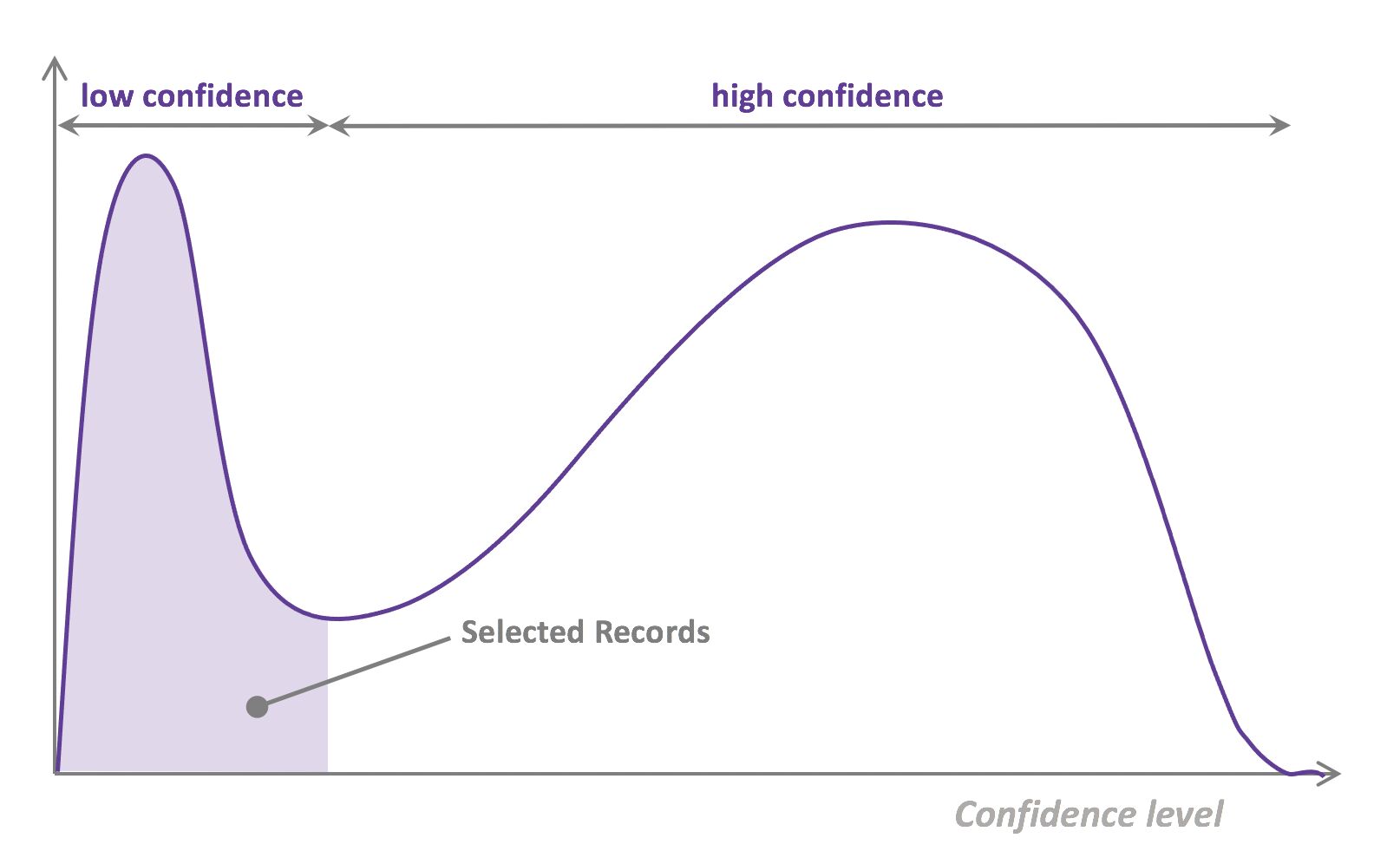

- That last step is used to evaluate which records should then be selected for the next iteration (called a loop). However, since there is no ground truth for the data used in the inference step, one cannot simply select the data where the model failed to make the correct prediction, and must instead use metadata (such as the confidence level of the prediction) to make that decision.

- The easiest and most common querying strategy used for selecting the next batch of useful data consists of picking the records with the lowest confidence level; this is called the least-confidence querying strategy, which is one of many possible querying strategies.
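The least-confidence strategy described above can be sketched as follows, assuming the model outputs one probability per class for each unlabeled record. The probabilities and the helper name `least_confidence_query` are made up for illustration.

```python
import numpy as np

def least_confidence_query(probs, batch_size):
    """Return indices of the `batch_size` records whose top predicted
    probability is lowest, i.e. the least confident predictions.

    probs: (n_unlabeled, n_classes) predicted class probabilities.
    """
    confidence = probs.max(axis=1)              # confidence = probability of predicted class
    return np.argsort(confidence)[:batch_size]  # lowest confidence first

# Made-up predictions for four unlabeled records (binary classifier)
probs = np.array([
    [0.95, 0.05],
    [0.55, 0.45],
    [0.80, 0.20],
    [0.51, 0.49],
])

selected = least_confidence_query(probs, batch_size=2)
print(selected)  # records to send for annotation in the next loop
```

Here records 3 and 1 are selected, because their predictions sit closest to a coin flip.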

What is active learning?

- When you don’t have enough labeled data and it’s expensive and/or time consuming to label new data, active learning is the solution. Active Learning helps to select the unlabeled samples whose labels will be most beneficial for the model once it is retrained with them.

- Technically, querying strategies such as least-confidence are usually simple hand-written heuristics; for more sophistication, they can be replaced by actual ML models trained on metadata generated during the training and inference phases.

- Thus, the most important criterion is selecting samples with maximum prediction uncertainty. You can use the model’s prediction confidence to identify uncertain samples. Entropy is another way to measure such uncertainty. Another criterion could be the diversity of the new sample with respect to the existing training data. You could also select samples close to labeled training samples on which the model performs poorly. Another option is selecting samples from regions of the feature space where better performance is desired. You can combine all of these strategies in your active learning decision-making process.

- Training is an iterative process. With active learning you select a new sample to label, label it, and retrain the model. Adding one labeled sample at a time and retraining the model could be expensive, so there are techniques for selecting a batch of samples to label. For deep learning, the most popular active learning technique is entropy computed from prediction probabilities obtained with Monte Carlo dropout.

- The process of deciding which samples to label could also be implemented as a multi-armed bandit. The reward function could be defined in terms of prediction uncertainty, diversity, etc.
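As a rough illustration of the entropy criterion combined with Monte Carlo dropout: run several stochastic forward passes with dropout left on, average the predicted probabilities, and rank samples by the entropy of that average. The sketch below assumes the stochastic passes have already been collected into an array; the numbers are made up and `predictive_entropy` is a hypothetical helper.

```python
import numpy as np

def predictive_entropy(mc_probs, eps=1e-12):
    """Entropy of the mean prediction across stochastic forward passes.

    mc_probs: (n_passes, n_samples, n_classes) class probabilities, one slice
    per forward pass with dropout enabled.
    """
    mean_probs = mc_probs.mean(axis=0)  # average over passes
    return -np.sum(mean_probs * np.log(mean_probs + eps), axis=1)

# Two made-up dropout passes over two unlabeled samples (binary classifier)
mc_probs = np.array([
    [[0.9, 0.1], [0.6, 0.4]],
    [[0.9, 0.1], [0.4, 0.6]],
])

entropy = predictive_entropy(mc_probs)
most_uncertain = int(np.argmax(entropy))
print(entropy, most_uncertain)
```

The second sample gets the higher entropy because the passes disagree and average out to a 50/50 prediction, so it would be queried first.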

- Let’s go deeper and explain why the vanilla form of Active Learning, “uncertainty-based” or “least-confidence” Active Learning, actually performs poorly on real-life datasets:

- Let’s take the example of a binary classification model identifying toxic content in tweets, and let’s say we have 100,000 tweets as our dataset.

- Here is how uncertainty-based AL would work:

- We pick 1,000 (or another number, depending on how we tune the process) records - at that stage, randomly.

- We annotate that data as toxic / not-toxic.

- We train our model with it and get a (not-so-good) model.

- We use the model to infer the remaining 99,000 (unlabeled) records.

- We don’t have ground truth for those 99,000, so we can’t select which records are incorrectly predicted, but we can use metadata, such as the confidence level, as a proxy to detect bad predictions. With least confidence Active Learning, we would pick the 1,000 records predicted with the lowest confidence level as our next batch.