Paper Reviews

Introduction

- Here I summarize, in my own words, the research papers and blog articles I read. Each title links to the original paper or article, and I recommend checking them out!

Blogs

Google AI Blog

- Check out Google’s AI research blog here: Google AI.

- Below are the links to specific articles I’ve really enjoyed:

MURAL: Multimodal, Multi-task Retrieval Across Languages

- Authors: Aashi Jain and Yinfei Yang

- This article covers the ambiguity that can arise when we translate sentences from one language to another, and how the cultural context behind the source sentence is often lost along the way.

- The proposed solution is to use neural machine translation along with image recognition. With text and images paired together, the authors are able to reduce the ambiguity.

- Models like CLIP and ALIGN already embed images and text in the same vector space, but they do not scale to languages other than English because of a lack of training data.

- MURAL (Multimodal, Multitask Retrieval Across Languages) addresses this problem by applying multitask learning to image-text pairs together with translation pairs covering over 100 languages.

- This is important and powerful because it could mean we can go directly from the source language to the target language using images as the bridge, rather than relying on text alone.
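To make the "same vector space" idea concrete, here is a minimal sketch of cross-modal retrieval by cosine similarity. It is not MURAL's code: the embeddings are hand-made toy values, and the names `cosine`, `retrieve`, `image_embeddings`, `caption_en`, and `caption_hi` are all hypothetical. In a real system the vectors would come from trained image and text encoders.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def retrieve(query_vec, candidates):
    """Return the key of the candidate embedding closest to query_vec."""
    return max(candidates, key=lambda name: cosine(query_vec, candidates[name]))

# Toy image embeddings (made-up values, standing in for an image encoder's output).
image_embeddings = {
    "photo_of_wedding": [0.9, 0.1, 0.0],
    "photo_of_river_bank": [0.1, 0.9, 0.1],
    "photo_of_money_bank": [0.0, 0.2, 0.9],
}

# Toy text embeddings for the same caption in two languages (also made up).
# If the text encoder is multilingual, both should land near the same image.
caption_en = [0.05, 0.85, 0.2]   # "the bank of the river"
caption_hi = [0.1, 0.8, 0.15]    # the same caption written in Hindi

print(retrieve(caption_en, image_embeddings))
print(retrieve(caption_hi, image_embeddings))
```

Because both captions sit close to the river-bank image in this toy space, they retrieve the same photo, which is how an image can disambiguate a word like "bank" across languages.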


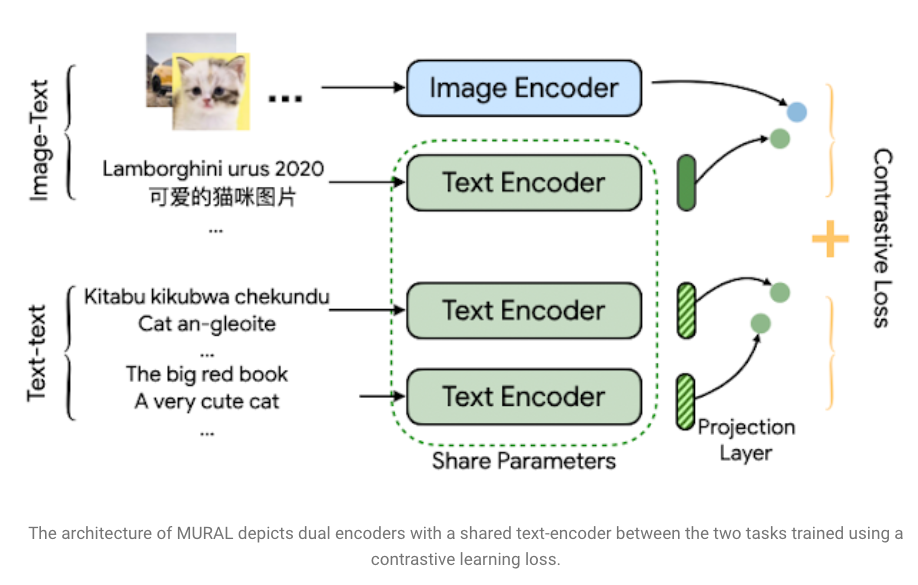

The MURAL architecture (shown above) is based on the architecture of ALIGN but employed in a multitask fashion.

- Key achievements:

- By training jointly on both images and text, it is possible to overcome the scarcity of data for low-resource languages.

- Training jointly also increases cross-modal performance.
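As a rough illustration of what "training jointly" could look like, here is a minimal sketch of a multitask objective that adds an image-text contrastive loss to a text-text (translation pair) contrastive loss. This is my own simplification under stated assumptions, not the authors' implementation: the function names, the `temperature` value, and the task weights are hypothetical, and the inputs are assumed to be unit-length embeddings that some encoders have already produced.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross_entropy(logits, target_index):
    """Softmax cross-entropy for one row of logits (numerically stable)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target_index]

def contrastive_loss(left, right, temperature=0.1):
    """Symmetric in-batch contrastive loss: left[i] should match right[i]."""
    n = len(left)
    sims = [[dot(l, r) / temperature for r in right] for l in left]
    # Each left item must pick out its partner among all right items...
    left_to_right = sum(cross_entropy(sims[i], i) for i in range(n)) / n
    # ...and each right item must pick out its partner among all left items.
    right_to_left = sum(
        cross_entropy([sims[j][i] for j in range(n)], i) for i in range(n)
    ) / n
    return left_to_right + right_to_left

def multitask_loss(image_vecs, caption_vecs, source_vecs, target_vecs,
                   image_text_weight=1.0, text_text_weight=1.0):
    """Weighted sum of the image-text task and the translation-pair task."""
    return (image_text_weight * contrastive_loss(image_vecs, caption_vecs)
            + text_text_weight * contrastive_loss(source_vecs, target_vecs))

# Toy unit-length embeddings: matched pairs versus swapped pairs.
matched = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(matched < swapped)
```

The loss is low when each image (or source sentence) is most similar to its own caption (or translation) and high when the pairs are mismatched. Summing the two tasks is what lets translation pairs help languages that have few image-caption examples.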