Models • Generative Pre-trained Transformer (GPT)

- Introduction

- GPT-1: Improving Language Understanding by Generative Pre-Training

- GPT-2: Language Models are Unsupervised Multitask Learners

- GPT-3: Language Models are Few-Shot Learners

- Other models in the family

- References

Introduction

- The Generative Pre-trained Transformer (GPT) by OpenAI is a family of autoregressive language models.

- GPT utilizes the decoder architecture from the standard Transformer network (with a few engineering tweaks) as a independent unit. This is coupled with an unprecedented size of 2048 as the number of tokens as input and 175 billion parameters (requiring ~800 GB of storage).

- The training method is “generative pretraining”, meaning that it is trained to predict what the next token is. The model demonstrated strong few-shot learning on many text-based tasks.

- The end result is the ability to generate human-like text with swift response time and great accuracy. Owing to the GPT family of models having been exposed to a reasonably large dataset and number of parameters (175B), these language models require few or in some cases no examples to fine-tune the model (a process that is called “prompt-based” fine-tuning) to fit the downstream task. The quality of the text generated by GPT-3 is so high that it can be difficult to determine whether or not it was written by a human, which has both benefits and risks (source).

- Before GPT, language models (LMs) were typically trained on a large amount of accurately labelled data, which was hard to come by. These LMs offered great performed on the supervised task that they were trained to do, but were unable to be domain-adapted to other tasks.

- Microsoft announced on September 22, 2020, that it had licensed “exclusive” use of GPT-3; others can still use the public API to receive output, but only Microsoft has access to GPT-3’s underlying model.

- Let’s look below at each GPT1, GPT2, and GPT3 (with a little more emphasis to the latter as its more widely used today) and how they were able to make a dent in how Natural Language Processing tasks would be done.

GPT-1: Improving Language Understanding by Generative Pre-Training

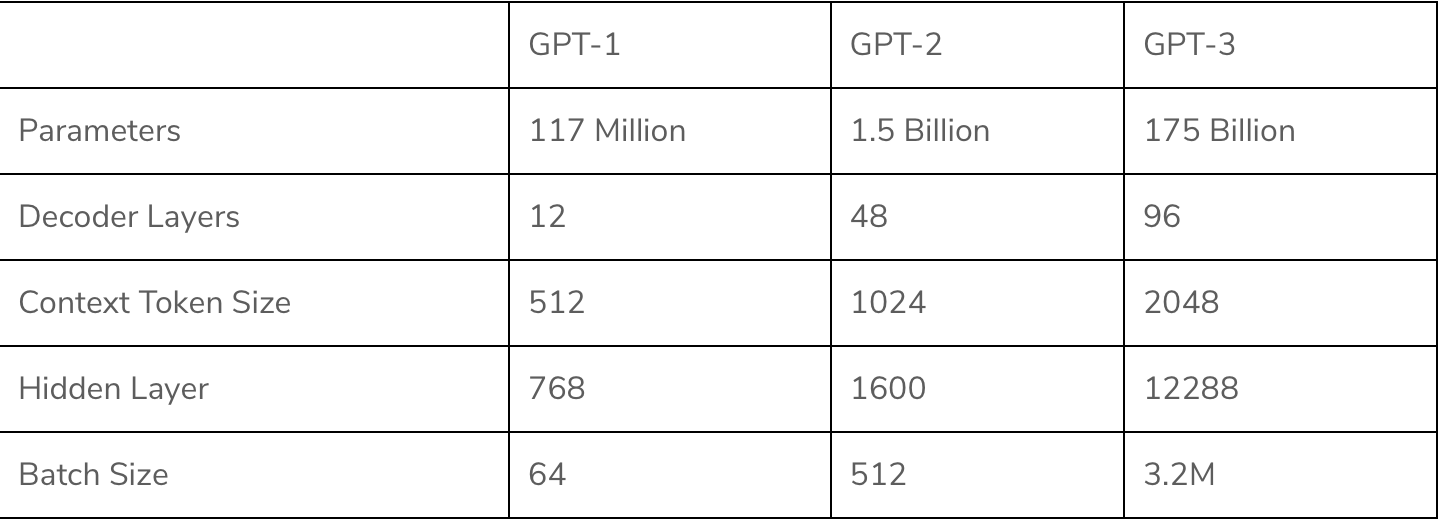

- GPT-1 was released in 2018 by OpenAI. It contained 117 million parameters.

- Trained on an enormous BooksCorpus dataset, this generative language model was able to learn large range dependencies and acquire vast knowledge on a diverse corpus of contiguous text and long stretches. (source)

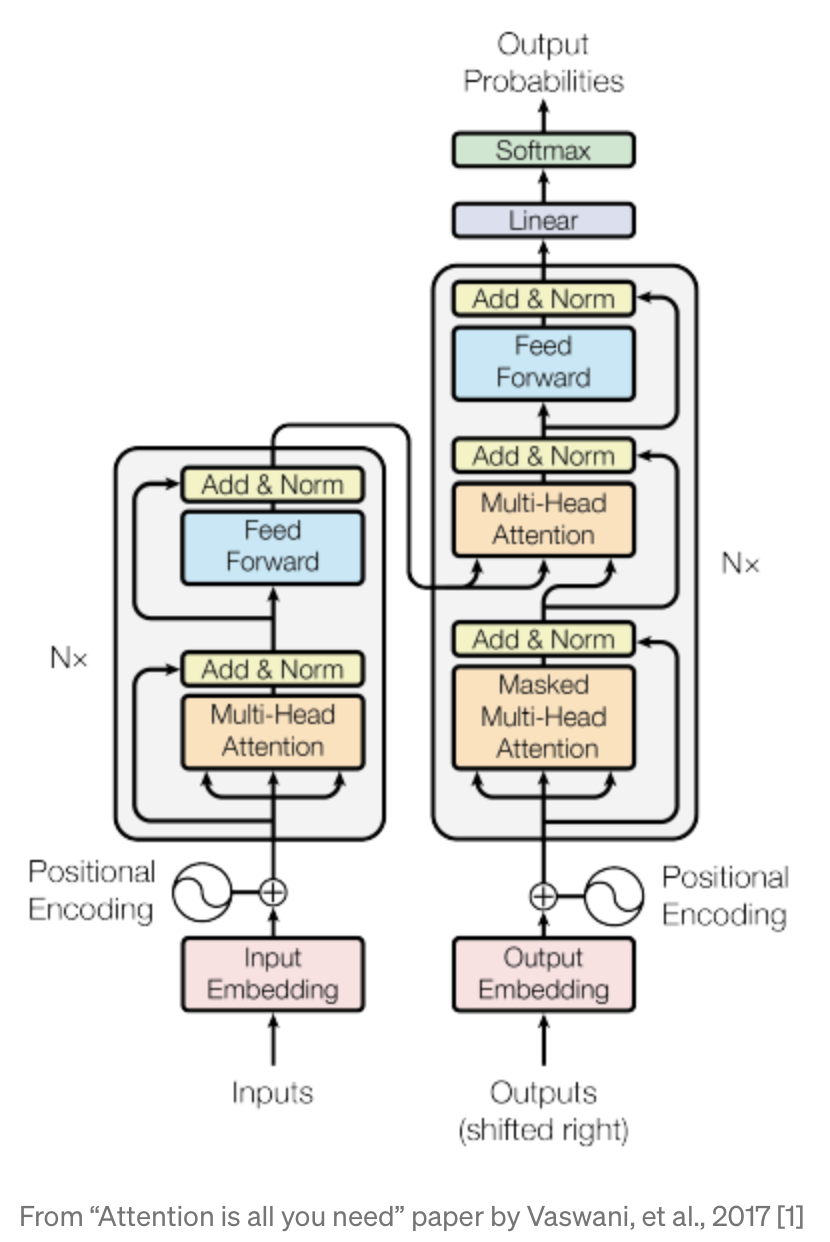

- GPT-1 uses the 12-layer decoder from the original transformer architecture that contains self attention.

- GPT was able to use transfer learning and thus, was able to perform many NLP tasks with very little fine-tuning.

- The right side of the image below, from the original transformer paper, “Attention is all you need”, representing the decoder model:

GPT-2: Language Models are Unsupervised Multitask Learners

- GPT-2 was released in February 2019 by OpenAI and it used a larger dataset while also adding additional parameters to build a more robust language model.

- GPT-2 became 10 times larger than GPT-1 with 1.5 billion parameters and had 10 times the data compared to GPT-1.

- Write With Transformer is a webapp created and hosted by Hugging Face showcasing the generative capabilities of several models. GPT-2 is one of them and is available in five different sizes: small, medium, large, XL and a distilled version of the small checkpoint: distilgpt-2.

- GPT-2 is an unsupervised deep learning transformer-based language model created by OpenAI to help in predicting the next word or words in a sentence.

- Language tasks such as reading, summarizing and translation can be learned by GPT-2 from raw text without using domain specific training data.

- GPT-2 (Generative Pre-trained Transformer 2), layer normalization is applied after each multi-head attention and feedforward layer in the transformer block.

- This helps to stabilize the training process and improve the model’s generalization ability.

- Layer Normalization normalizes the activations of each layer in the network. It is similar to batch normalization, but instead of normalizing over a batch of inputs, it normalizes over the features or channels of each individual sample.

Code for GPT-2

- Source for the code below

- Let’s look at the code used for the feedforward layer:

class Conv1D(nn.Module):

def __init__(self, nx, nf):

super().__init__()

self.nf = nf

w = torch.empty(nx, nf)

nn.init.normal_(w, std=0.02)

self.weight = nn.Parameter(w)

self.bias = nn.Parameter(torch.zeros(nf))

def forward(self, x):

size_out = x.size()[:-1] + (self.nf,)

x = torch.addmm(self.bias, x.view(-1, x.size(-1)), self.weight)

x = x.view(*size_out)

return x

GPT-3: Language Models are Few-Shot Learners

- It is a massive language prediction and generation model developed by OpenAI capable of generating long sequences of the original text. GPT-3 became the OpenAI’s breakthrough AI language program. (source)

- GPT-3 is able to generate paragraphs and texts to almost sound like a person has generated them instead.

- GPT-3 contains 175 billion parameters and is 100 times larger than GPT-2. Its trained on 500 billion word data set known as “Common Crawl”.

- GPT-3 is also able to write code snippets, like SQL queries, and perform other intelligent tasks. However, it is expensive and inconvenient to perform inference owing to its 175B-parameter size.

- GPT-3 eliminates the finetuning step that was needed for its predecessors as well as for encoder models such as BERT.

- GPT-3 is capable of responding to any text by generating a new piece of text that is both creative and appropriate to its context.

- Here is a working use case of GPT-3 you can try out: debuild.io where GPT will give you the code to build the application you define.

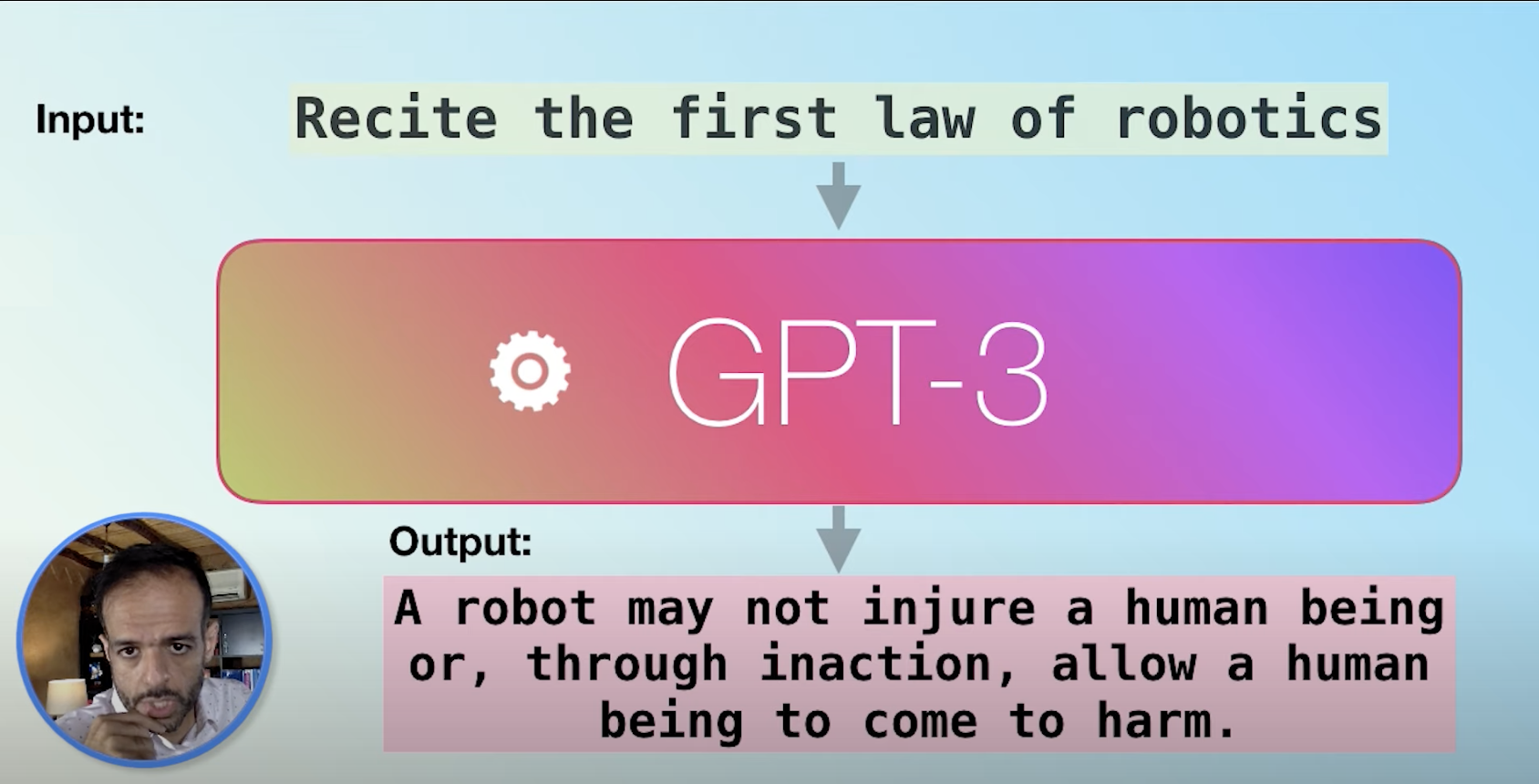

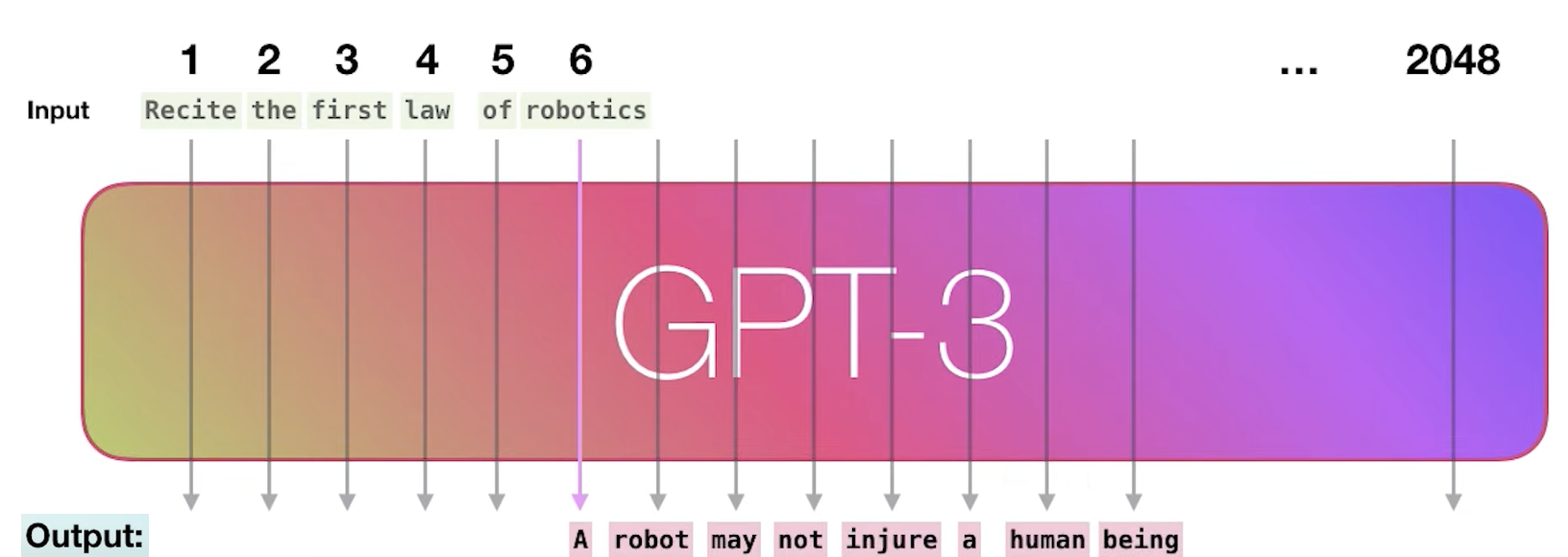

- Just like its predecessors, GPT-3 is an API served by OpenAI, lets look at the image below to see a visual representation of how this black box works:

- The output here is generated by what GPT has learned during the training phase. GPT-3’s dataset was quite massive with 300 billion tokens or words that it was trained on.

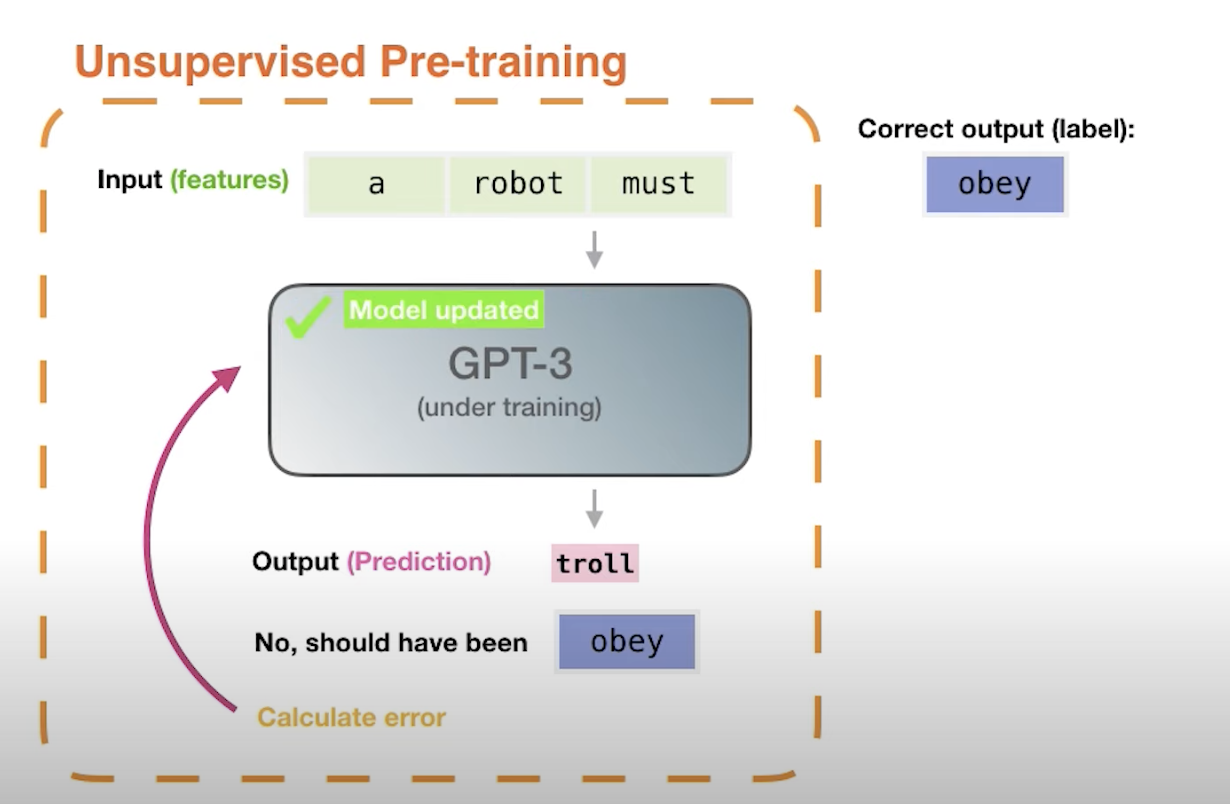

- It was trained on only one specific task which was predicting the next word, and thus, is an unsupervised pre-trained model.

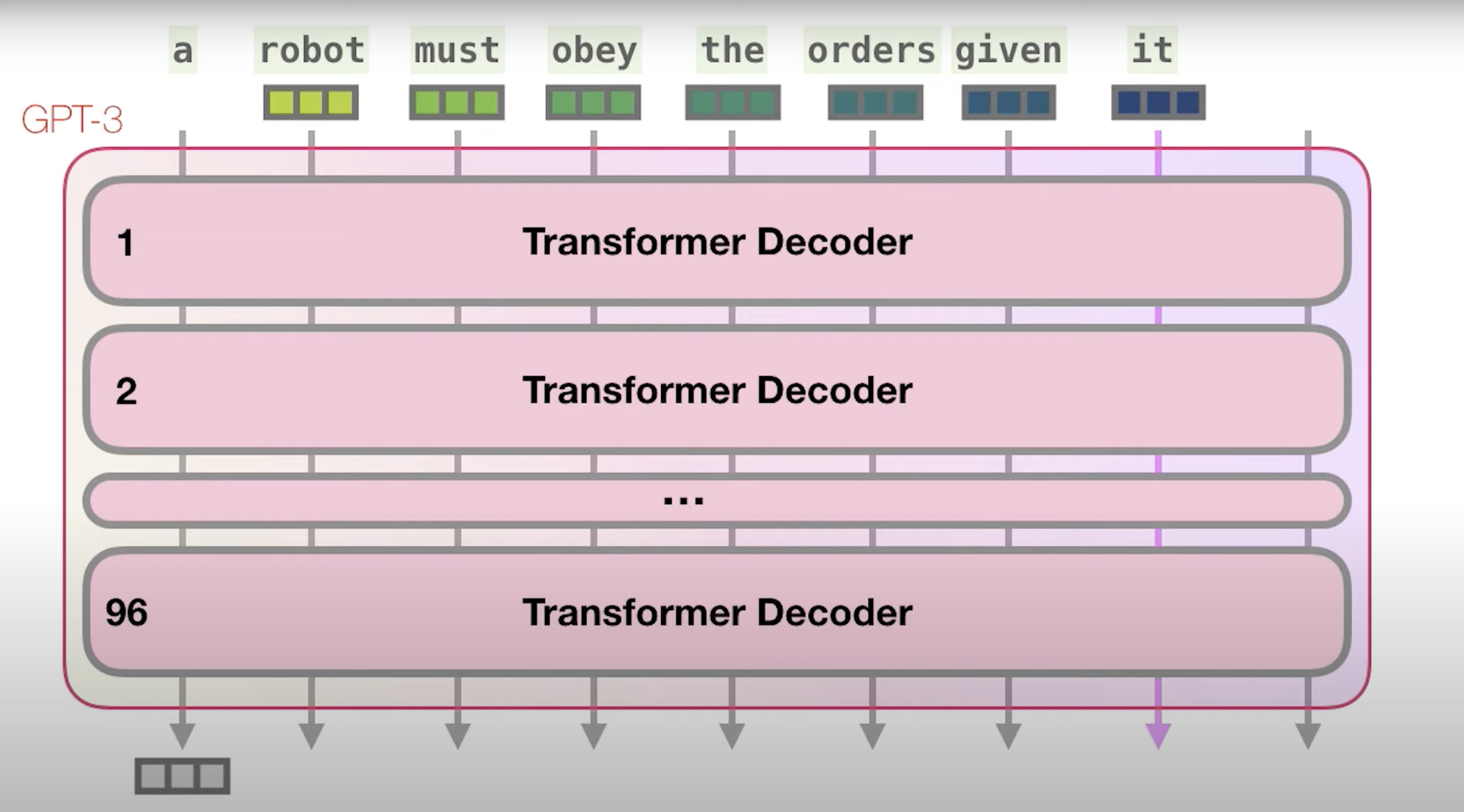

- In the image below, we can see what the training process looks like for GPT-3, sourced from Jay Alammar.

- We feed words into our model.

- We expect an output.

- We then check if the output matches the expected output.

- If not, we calculate the error or loss and update the model and ask for a new prediction.

- Thus, the next time it comes across this example, it knows what the output should look like.

- This is the general loop used in supervised training, nothing out of the ordinary, lets look below.

- Let’s visualize another way of looking at the model in the image below sourced from Jay Alammar.

- Each word goes through its own track and the model has a context window of say 2048 tokens. Thus the input and output have to fit within this range. Note, there are ways to expand and adjust this number but we will use this number for now.

- Each token is only processed in its own track.

- Let’s look at it even deeper. Each token/word is a vector on its own, so when we process a word, we process a vector.

- This vector will then go through many layers of Transformer Decoders, GPT-3 has 96 layers, which are all stacked one on top of another. This is what the depth is in deep learning and its able to make predictions that are a little more nuanced using the high number of layers where the computation flows through.

- The last token is the response or the next word prediction from the model.

Other models in the family

- The source for this whole section with a catalog of related models and their metadata is linked here.

Anthropic Assistant • Reference:23 see also24 [13, 14] • Family: GPT • Pretraining Architecture: Decoder • Pretraining Task: Protein folding prediction*ion of BERT using parameter sharing, which is much more efficient given the same number of parameters • Extension:These models do not introduce novelties at the architecture/pretraining level and they are based on GPT-3 but rather focuses on how to improve alignment through fine-tuning and prompting. Note that the Anthropic Assistant includes several models optimized for different tasks. Latest versions of this work focus on the benefits of RLHF • Application: Different models with different applications from general dialog to code assistant. • Date (of first known publication): 12/2021 • Num. Params:10M to 52B • Corpus:400B tokens from filtered Common Crawl and Books. They also create several Dialogue Preference datasets for the RLHF training. • Lab:Anthropic

References

- Jay Alammar: How GPT3 Works - Easily Explained with Animations

- Improving Language Understanding by Generative Pre-Training

- Language Models are Unsupervised Multitask Learners

- Language Models are Few-Shot Learners

- Priya Shree: The Journey of Open AI GPT models

- 360digitmg: GPT-1, GPT-2 and GPT-3

- dzone: GPT-2 (GPT2) vs. GPT-3 (GPT3): The OpenAI Showdown