NLP • Retrieval Augmented Generation

- Overview

- Motivation

- Lexical Retrieval

- Semantic Retrieval

- Hybrid Retrieval (Lexical + Semantic)

- The Retrieval Augmented Generation (RAG) Pipeline

- Benefits of RAG

- Ensemble of RAG

- Choosing a Vector DB using a Feature Matrix

- Building a RAG pipeline

- Ingestion

- Retrieval

- Re-ranking

- Response Generation / Synthesis

- RAG in Multi-Turn Chatbots: Embedding Queries for Retrieval

- Component-Wise Evaluation

- Multimodal Input Handling

- Multimodal RAG

- Agentic Retrieval-Augmented Generation

- How Agentic RAG Works

- Agentic Decision-Making in Retrieval

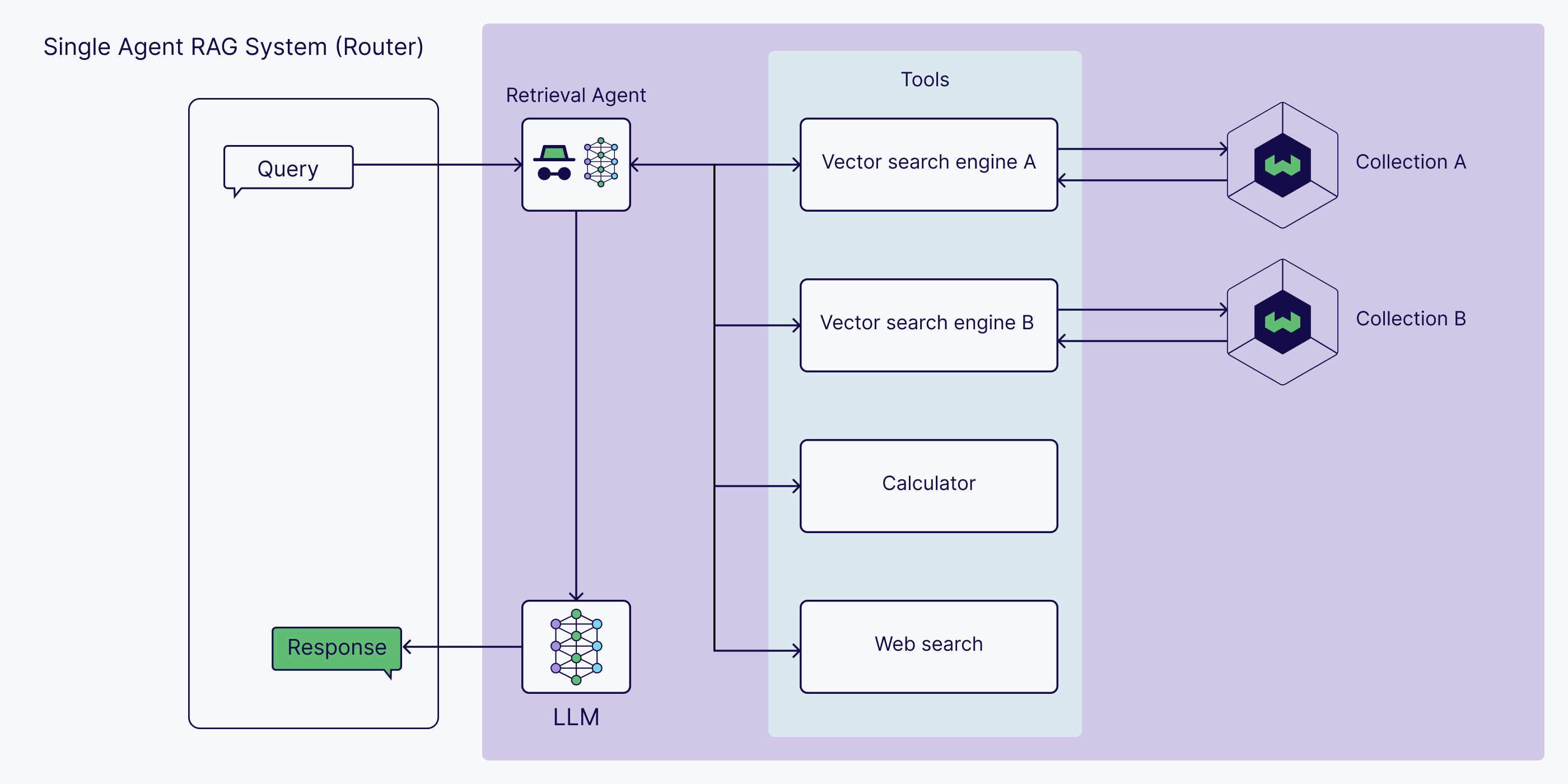

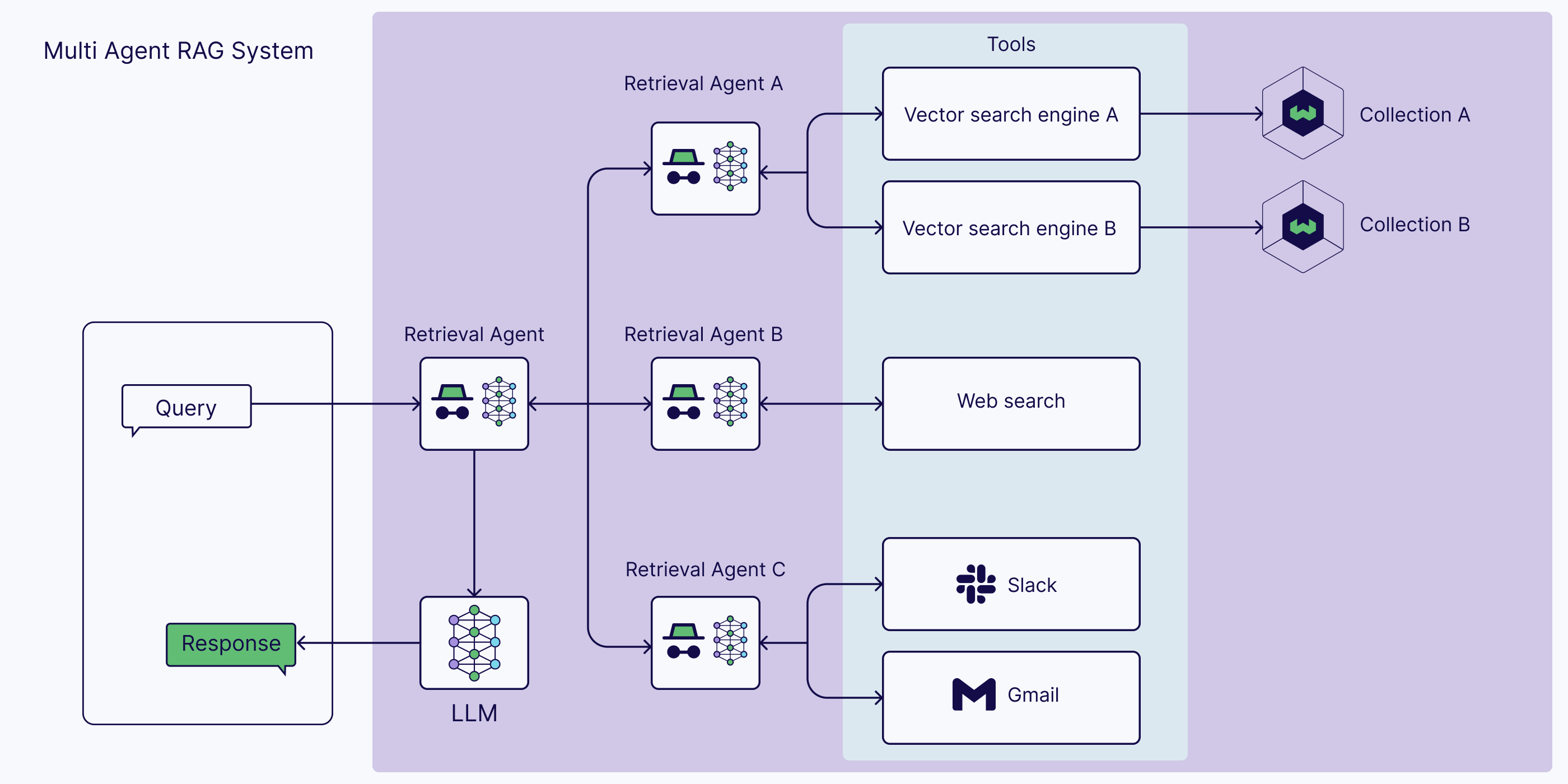

- Agentic RAG Architectures: Single-Agent vs. Multi-Agent Systems

- Beyond Retrieval: Expanding Agentic RAG’s Capabilities

- Agentic RAG vs. Vanilla RAG: Key Differences

- Implementing Agentic RAG: Key Approaches

- Enterprise-driven Adoption

- Benefits

- Limitations

- Code

- Disadvantages of Agentic RAG

- Summary

- RAG vs. Long Context Windows

- Improving RAG Systems

- RAG 2.0

- Selected Papers

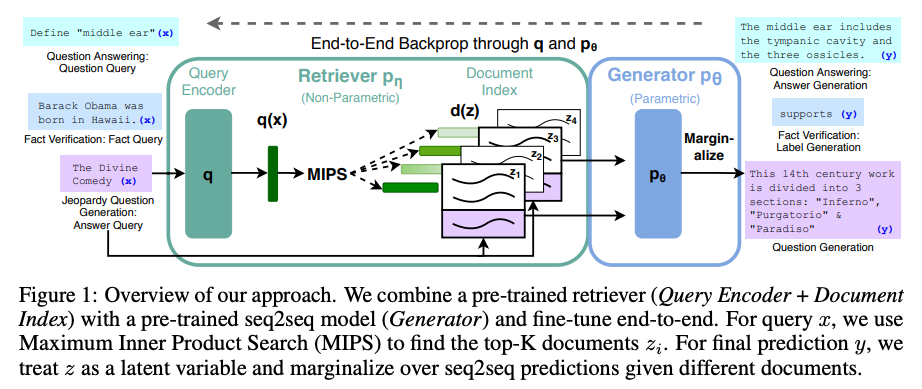

- Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks

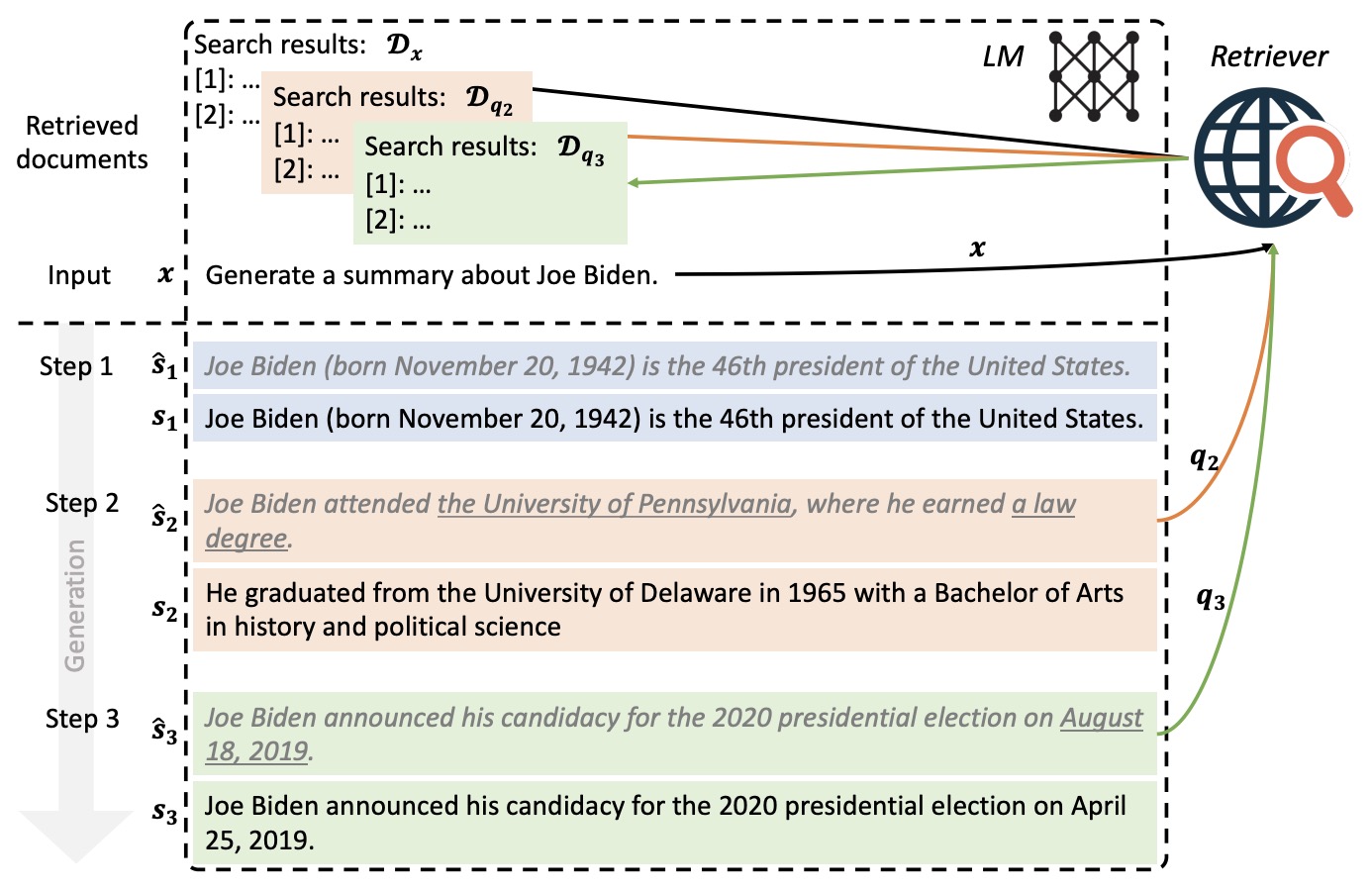

- Active Retrieval Augmented Generation

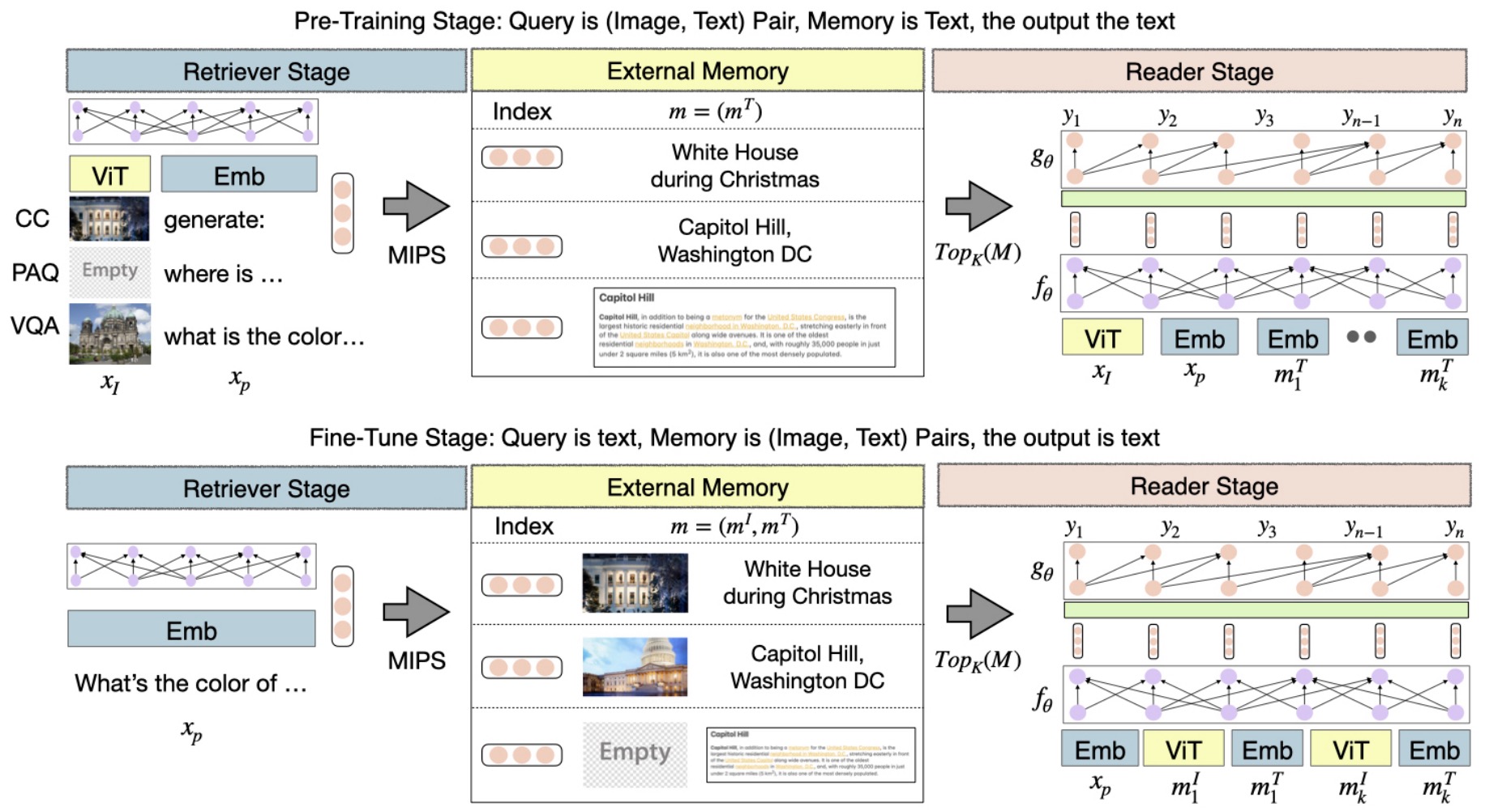

- MuRAG: Multimodal Retrieval-Augmented Generator

- Hypothetical Document Embeddings (HyDE)

- RAGAS: Automated Evaluation of Retrieval Augmented Generation

- Fine-Tuning or Retrieval? Comparing Knowledge Injection in LLMs

- Dense X Retrieval: What Retrieval Granularity Should We Use?

- ARES: An Automated Evaluation Framework for Retrieval-Augmented Generation Systems

- Seven Failure Points When Engineering a Retrieval Augmented Generation System

- RAPTOR: Recursive Abstractive Processing for Tree-Organized Retrieval

- The Power of Noise: Redefining Retrieval for RAG Systems

- MultiHop-RAG: Benchmarking Retrieval-Augmented Generation for Multi-Hop Queries

- RAG vs Fine-tuning: Pipelines, Tradeoffs, and a Case Study on Agriculture

- RAFT: Adapting Language Model to Domain Specific RAG

- Corrective Retrieval Augmented Generation

- Fine Tuning vs. Retrieval Augmented Generation for Less Popular Knowledge

- HGOT: Hierarchical Graph of Thoughts for Retrieval-Augmented In-Context Learning in Factuality Evaluation

- How faithful are RAG models? Quantifying the tug-of-war between RAG and LLMs’ internal prior

- Adaptive-RAG: Learning to Adapt Retrieval-Augmented Large Language Models through Question Complexity

- RichRAG: Crafting Rich Responses for Multi-faceted Queries in Retrieval-Augmented Generation

- HiQA: A Hierarchical Contextual Augmentation RAG for Massive Documents QA

- References

- Citation

Overview

- Retrieval-Augmented Generation (RAG) is an advanced technique designed to enhance the output of Language Models (LMs) by incorporating external knowledge sources.

- RAG is achieved by retrieving relevant information from a large corpus of documents and utilizing that information to guide and inform the generative process of the model. The subsequent sections provide a detailed examination of this methodology.

Motivation

- In many real-world scenarios, organizations maintain extensive collections of proprietary documents, such as technical manuals, from which precise information must be extracted. This challenge is often analogous to locating a needle in a haystack, given the sheer volume and complexity of the content.

- While recent advancements, such as OpenAI’s introduction of GPT-4 Turbo, offer improved capabilities for processing lengthy documents, they are not without limitations. Notably, these models exhibit a tendency known as the “Lost in the Middle” phenomenon, wherein information positioned near the center of the context window is more likely to be overlooked or forgotten. This issue is akin to reading a comprehensive text such as the Bible, yet struggling to recall specific content from its middle chapters.

- To address this shortcoming, the RAG approach has been introduced. This method involves segmenting documents into discrete units—typically paragraphs—and creating an index for each. Upon receiving a query, the system efficiently identifies and retrieves the most relevant segments, which are then supplied to the language model. By narrowing the input to only the most pertinent information, this strategy mitigates cognitive overload within the model and substantially improves the relevance and accuracy of its responses.

Lexical Retrieval

-

Lexical retrieval is the traditional approach to information retrieval based on exact word matches and term frequency. Two commonly used methods in this category are TF-IDF and BM25.

- TF-IDF (Term Frequency-Inverse Document Frequency):

- TF-IDF evaluates the importance of a word in a document relative to a corpus. It increases proportionally with the number of times a word appears in the document but is offset by how frequently the word appears across all documents.

- While TF-IDF is simple and effective for many scenarios, it does not take into account the saturation of term frequency and lacks the ability to differentiate between rare and common words beyond the basic IDF scaling.

- BM25 (Best Matching 25):

- BM25 is a more refined version of TF-IDF. It introduces term frequency saturation and document length normalization, improving relevance scoring.

- One of the key advantages of BM25 over TF-IDF is its ability to handle multiple occurrences of a term in a more nuanced way. It prevents overly frequent terms from dominating the score, making retrieval results more balanced.

- BM25 also scales better with document length, giving fair chances to both short and long documents.

- TF-IDF (Term Frequency-Inverse Document Frequency):

- Advantages of Lexical Retrieval:

- Fast and computationally efficient.

- Easy to interpret and implement.

- Works well when exact keyword matching is important.

- Limitations:

- Cannot handle synonyms or paraphrased queries effectively.

- Limited ability to capture semantic meaning.

Semantic Retrieval

-

Semantic retrieval, previously referred to as neural retrieval, is a more recent approach that relies on machine learning models to understand the meaning behind queries and documents.

-

These systems use neural networks to embed both queries and documents into a shared vector space, where semantic similarity can be calculated using metrics like cosine similarity.

- How it works:

- Vector Encoding:

- Both queries and documents are transformed into dense vectors using pre-trained or fine-tuned encoders.

- These encoders are typically trained on large datasets, enabling them to capture semantic nuances beyond surface-level keyword overlap.

- Semantic Matching:

- Vectors are compared to identify the most semantically relevant documents, even if they don’t share explicit terms with the query.

- Vector Encoding:

- Advantages of Semantic Retrieval:

- Handles paraphrasing, synonyms, and conceptual similarity effectively.

- Supports more natural and conversational queries.

- Multilingual capabilities are often built-in.

- Challenges and Considerations:

- Requires significant computational resources.

- Retrieval quality is sensitive to training data and may reflect biases.

- Updating document embeddings for dynamic content can be complex.

Hybrid Retrieval (Lexical + Semantic)

-

A hybrid retrieval system combines the strengths of lexical and semantic methods to deliver more accurate and robust results.

-

One popular technique for hybrid retrieval is Reciprocal Rank Fusion (RRF). RRF merges the rankings from different retrieval models (e.g., BM25 and a neural retriever) by assigning higher scores to documents that consistently rank well across systems.

- How RRF works:

- Each document receives a score based on its position in the ranked lists from multiple retrieval methods.

- The scores are combined using the following reciprocal formula:

where:

- \(d\) is the document,

- \(\text{rank}_i(d)\) is the rank position of document \(d\) in the \(i^{\text{th}}\) ranked list,

- \(k\) is a constant (typically set to 60),

- \(n\) is the number of retrieval systems.

- Example:

-

Suppose two retrieval systems return the following top-5 rankings for a given query:

- BM25: [DocA, DocB, DocC, DocD, DocE]

-

Neural Retriever: [DocF, DocC, DocA, DocG, DocB]

-

RRF scores are calculated as follows:

-

For DocA (rank 1 in BM25, rank 3 in Neural Retriever):

\[\text{RRF Score}(\text{DocA}) = \frac{1}{60 + 1} + \frac{1}{60 + 3}\] -

For DocC (rank 3 in BM25, rank 2 in Neural Retriever):

\[\text{RRF Score}(\text{DocC}) = \frac{1}{60 + 3} + \frac{1}{60 + 2}\] -

For DocB (rank 2 in BM25, rank 5 in Neural Retriever):

\[\text{RRF Score}(\text{DocB}) = \frac{1}{60 + 2} + \frac{1}{60 + 5}\] -

After computing scores for all documents, the final RRF ranking is determined by sorting them in descending order of their cumulative scores.

-

-

- Benefits of Hybrid Retrieval:

- Increases recall by retrieving relevant documents that either lexical or semantic methods might miss individually.

- Balances precision and coverage.

- Makes the retrieval system more resilient to query variations and noise.

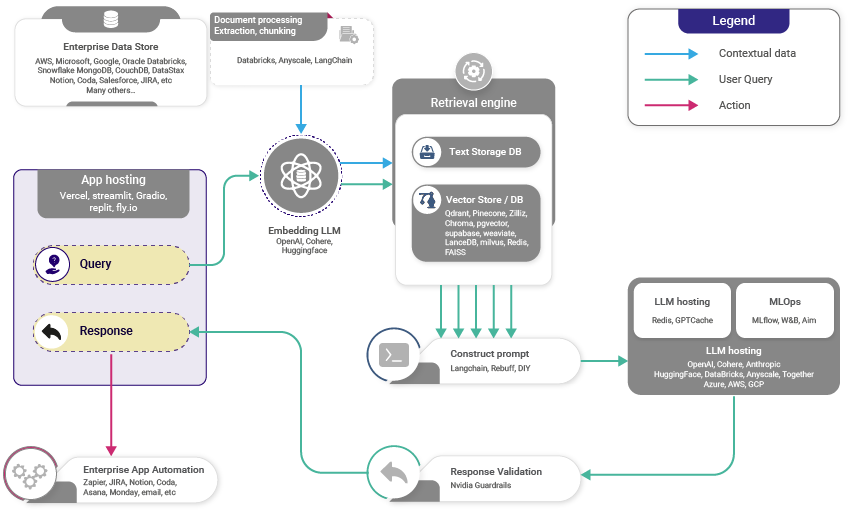

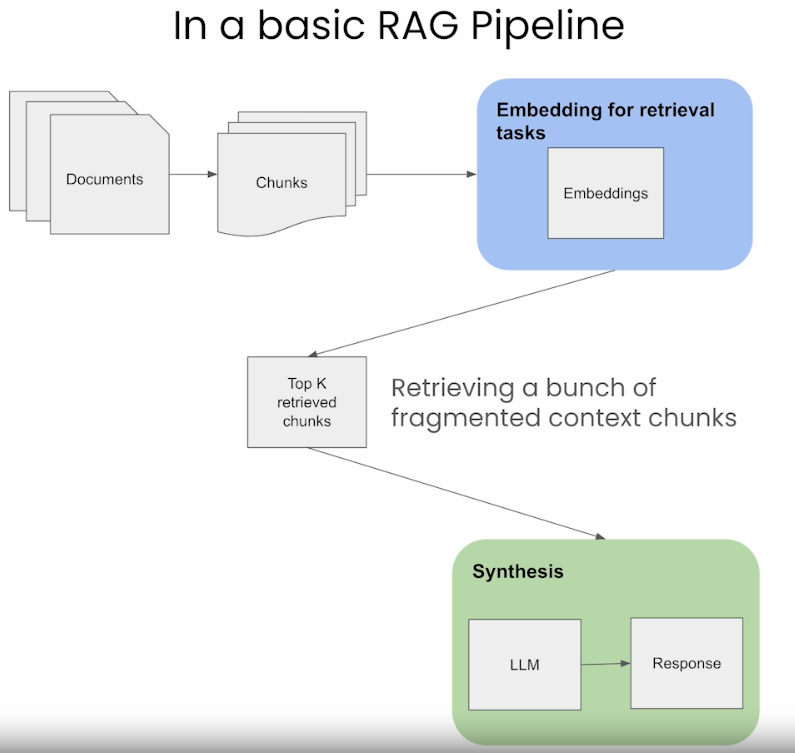

The Retrieval Augmented Generation (RAG) Pipeline

- With RAG, the LLM is able to leverage knowledge and information that is not necessarily in its weights by providing it access to external knowledge sources such as databases.

- It leverages a retriever to find relevant contexts to condition the LLM, in this way, RAG is able to augment the knowledge-base of an LLM with relevant documents.

- The retriever here could be any of the following depending on the need for semantic retrieval or not:

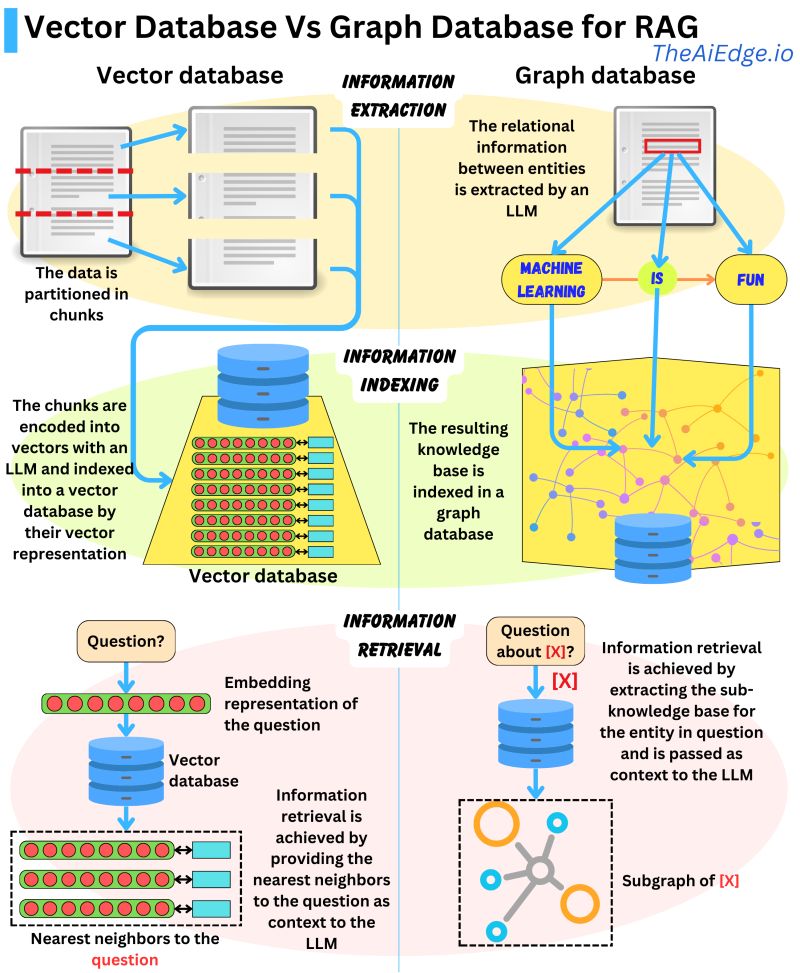

- Vector database: Typically, queries are embedded using models like BERT for generating dense vector embeddings. Alternatively, traditional methods like TF-IDF can be used for sparse embeddings. The search is then conducted based on term frequency or semantic similarity.

- Graph database: Constructs a knowledge base from extracted entity relationships within the text. This approach is precise but may require exact query matching, which could be restrictive in some applications.

- Regular SQL database: Offers structured data storage and retrieval but might lack the semantic flexibility of vector databases.

- The image below from Damien Benveniste, PhD talks a bit about the difference between using Graph vs Vector database for RAG.

- In his post linked above, Damien states that Graph Databases are favored for Retrieval Augmented Generation (RAG) when compared to Vector Databases. While Vector Databases partition and index data using LLM-encoded vectors, allowing for semantically similar vector retrieval, they may fetch irrelevant data.

- Graph Databases, on the other hand, build a knowledge base from extracted entity relationships in the text, making retrievals concise. However, it requires exact query matching which can be limiting.

-

A potential solution could be to combine the strengths of both databases: indexing parsed entity relationships with vector representations in a graph database for more flexible information retrieval. It remains to be seen if such a hybrid model exists.

- After retrieving, you may want to look into filtering the candidates further by adding ranking and/or fine ranking layers that allow you to filter down candidates that do not match your business rules, are not personalized for the user, current context, or response limit.

- Let’s succinctly summarize the process of RAG and then delve into its pros and cons:

- Vector Database Creation: RAG starts by converting an internal dataset into vectors and storing them in a vector database (or a database of your choosing).

- User Input: A user provides a query in natural language, seeking an answer or completion.

- Information Retrieval: The retrieval mechanism scans the vector database to identify segments that are semantically similar to the user’s query (which is also embedded). These segments are then given to the LLM to enrich its context for generating responses.

- Combining Data: The chosen data segments from the database are combined with the user’s initial query, creating an expanded prompt.

- Generating Text: The enlarged prompt, filled with added context, is then given to the LLM, which crafts the final, context-aware response.

- The image below (source) displays the high-level working of RAG.

Benefits of RAG

- So why should you use RAG for your application?

- With RAG, the LLM is able to leverage knowledge and information that is not necessarily in its weights by providing it access to external knowledge bases.

- RAG doesn’t require model retraining, saving time and computational resources.

- It’s effective even with a limited amount of labeled data.

- However, it does have its drawbacks, namely RAG’s performance depends on the comprehensiveness and correctness of the retriever’s knowledge base.

- RAG is best suited for scenarios with abundant unlabeled data but scarce labeled data and is ideal for applications like virtual assistants needing real-time access to specific information like product manuals.

- Scenarios with abundant unlabeled data but scarce labeled data: RAG is useful in situations where there is a lot of data available, but most of it is not categorized or labeled in a way that’s useful for training models. As an example, the internet has vast amounts of text, but most of it isn’t organized in a way that directly answers specific questions.

- Furthermore, RAG is ideal for applications like virtual assistants: Virtual assistants, like Siri or Alexa, need to pull information from a wide range of sources to answer questions in real-time. They need to understand the question, retrieve relevant information, and then generate a coherent and accurate response.

- Needing real-time access to specific information like product manuals: This is an example of a situation where RAG models are particularly useful. Imagine you ask a virtual assistant a specific question about a product, like “How do I reset my XYZ brand thermostat?” The RAG model would first retrieve relevant information from product manuals or other resources, and then use that information to generate a clear, concise answer.

- In summary, RAG models are well-suited for applications where there’s a lot of information available, but it’s not neatly organized or labeled.

- Below, let’s take a look at the publication that introduced RAG and how the original paper implemented the framework.

RAG vs. Fine-tuning

- The table below (source) compares RAG vs. fine-tuning.

- To summarize the above table:

- RAG offers Large Language Models (LLMs) access to factual, access-controlled, timely information. This integration enables LLMs to fetch precise and verified facts directly from relevant databases and knowledge repositories in real-time. While fine-tuning can address some of these aspects by adapting the model to specific data, RAG excels at providing up-to-date and specific information without the substantial costs associated with fine-tuning. Moreover, RAG enhances the model’s ability to remain current and relevant by dynamically accessing and retrieving the latest data, thus ensuring the responses are accurate and contextually appropriate. Additionally, RAG’s approach to leveraging external sources can be more flexible and scalable, allowing for easy updates and adjustments without the need for extensive retraining.

- Fine-tuning adapts the style, tone, and vocabulary of LLMs so that your linguistic “paint brush” matches the desired domain and style. RAG does not provide this level of customization in terms of linguistic style and vocabulary.

- Focus on RAG first. A successful LLM application typically involves connecting specialized data to the LLM workflow. Once you have a functional application, you can add fine-tuning to enhance the style and vocabulary of the system.

Ensemble of RAG

- Leveraging an ensemble of RAG systems offers a substantial upgrade to the model’s ability to produce rich and contextually accurate text. Here’s an enhanced breakdown of how this procedure could work:

- Knowledge sources: RAG models retrieve information from external knowledge stores to augment their knowledge in a particular domain. These can include passages, tables, images, etc. from domains like Wikipedia, books, news, databases.

- Combining sources: At inference time, multiple retrievers can pull relevant content from different corpora. For example, one retriever searches Wikipedia, another searches news sources. Their results are concatenated into a pooled set of candidates.

- Ranking: The model ranks the pooled candidates by their relevance to the context.

- Selection: Highly ranked candidates are selected to condition the language model for generation.

- Ensembling: Separate RAG models specialized on different corpora can be ensembled. Their outputs are merged, ranked, and voted on.

- Multiple knowledge sources can augment RAG models through pooling and ensembles. Careful ranking and selection helps integrate these diverse sources for improved generation.

- One thing to keep in mind when using multiple retrievers is to rank the different outputs from each retriever before merging them to form a response. This can be done in a variety of ways, using LTR algorithms, multi-armed bandit framework, multi-objective optimization, or according to specific business use cases.

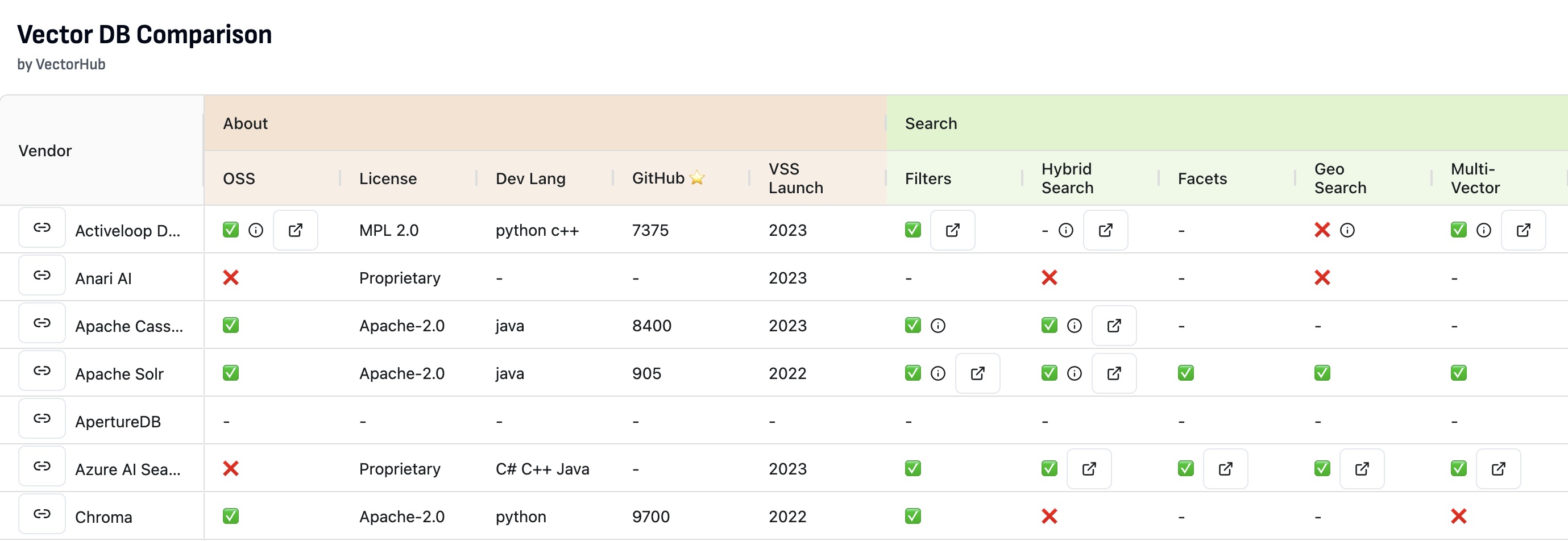

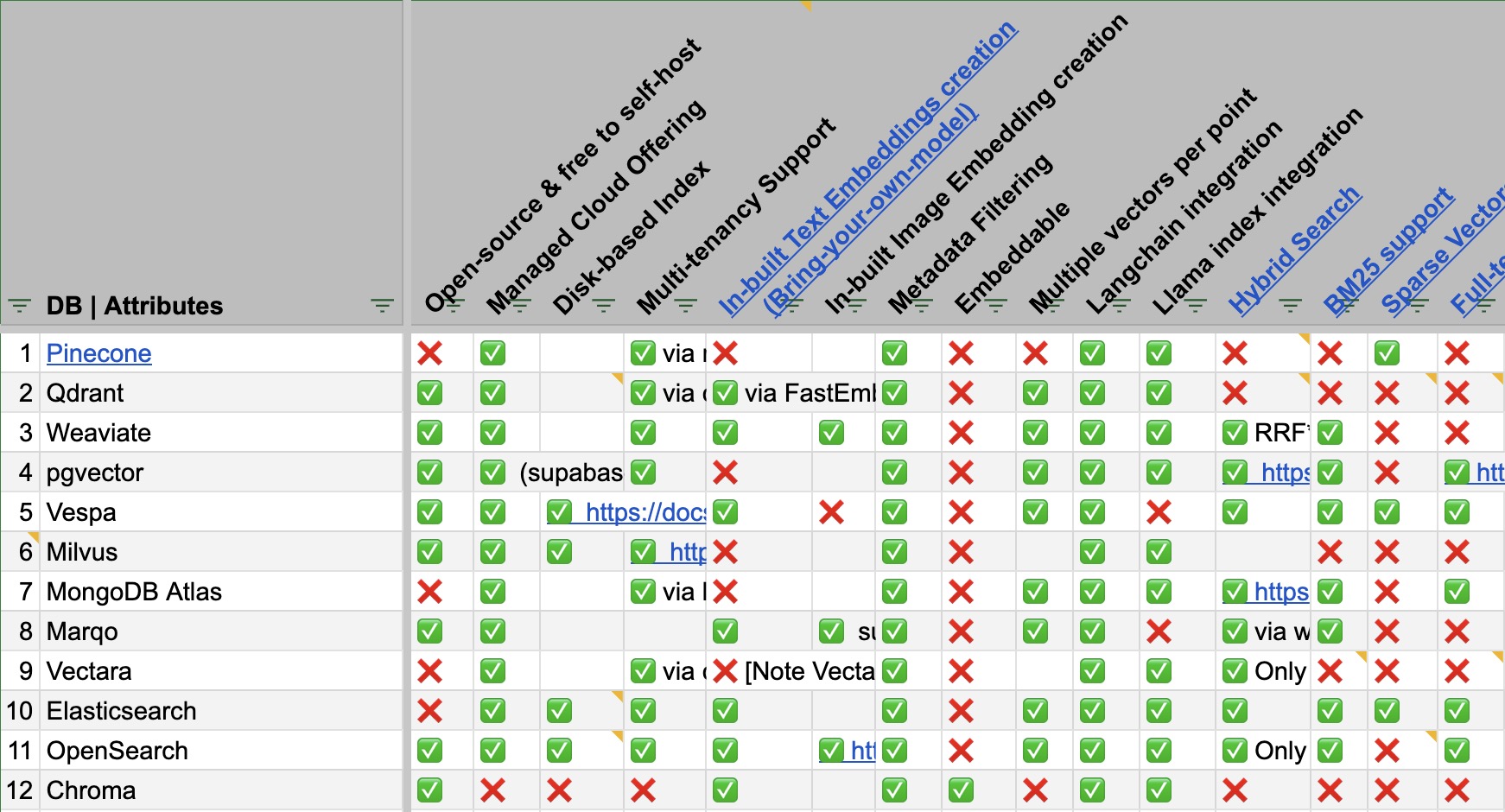

Choosing a Vector DB using a Feature Matrix

- To compare the plethora of Vector DB offerings, a feature matrix that highlights the differences between Vector DBs and which to use in which scenario is essential.

- Vector DB Comparison by VectorHub offers a great comparison spanning 37 vendors and 29 features (as of this writing).

- As a secondary resource, the following table (source) shows a comparison of some of the prevalent Vector DB offers along various feature dimensions:

- Access the full spreadsheet here.

Building a RAG pipeline

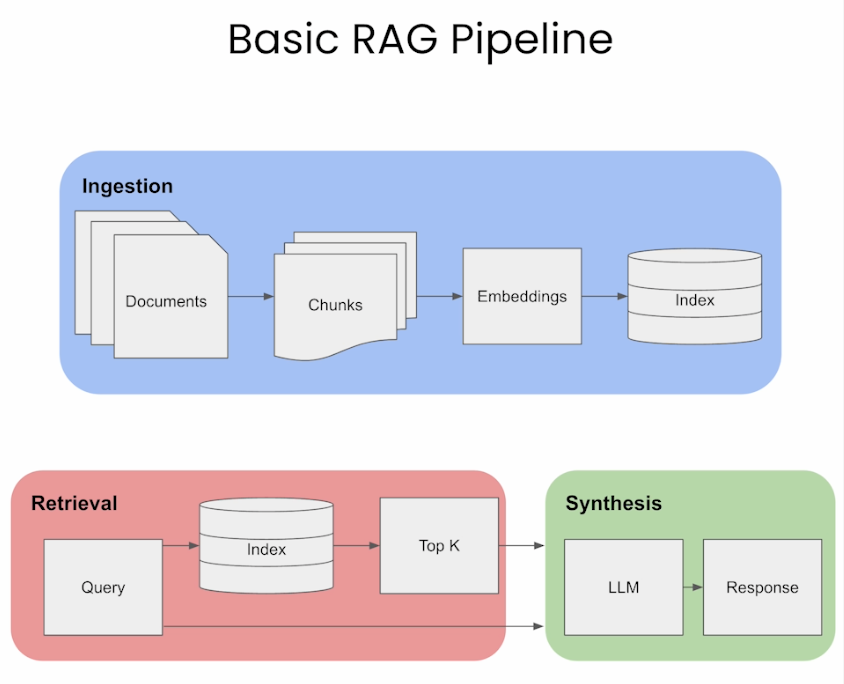

- The image below (source), gives a visual overview of the three different steps of RAG: Ingestion, Retrieval, and Synthesis/Response Generation.

- In the sections below, we will go over these key areas.

Ingestion

Chunking

- Chunking is the process of dividing the prompts and/or the documents to be retrieved, into smaller, manageable segments or chunks. These chunks can be defined either by a fixed size, such as a specific number of characters, sentences, or paragraphs. The choice of chunking strategy plays a critical role in determining both the performance and efficiency of your RAG system.

- Each chunk is encoded into an embedding vector for retrieval. Smaller, more precise chunks lead to a finer match between the user’s query and the content, enhancing the accuracy and relevance of the information retrieved.

- Larger chunks might include irrelevant information, introducing noise and potentially reducing the retrieval accuracy. By controlling the chunk size, RAG can maintain a balance between comprehensiveness and precision.

- So the next natural question that comes up is, how do you choose the right chunk size for your use case? The choice of chunk size in RAG is crucial. It needs to be small enough to ensure relevance and reduce noise but large enough to maintain the context’s integrity. Let’s look at a few methods below referred from Pinecone:

- Fixed-size Chunking: Simply decide the number of tokens in our chunk along with whether there should be overlap between them or not. Overlap between chunks guarantees there to be minimal semantic context loss between chunks. This option is computationally cheap and simple to implement.

text = "..." # your text from langchain.text_splitter import CharacterTextSplitter text_splitter = CharacterTextSplitter( separator = "\n\n", chunk_size = 256, chunk_overlap = 20 ) docs = text_splitter.create_documents([text]) - Context-aware Chunking: Content-aware chunking leverages the intrinsic structure of the text to create chunks that are more meaningful and contextually relevant. Here are several approaches to achieving this:

- Sentence Splitting: This method aligns with models optimized for embedding sentence-level content. Different tools and techniques can be used for sentence splitting:

- Naive Splitting: A basic method where sentences are split using periods and new lines. Example:

text = "..." # Your text docs = text.split(".")- This method is quick but may overlook complex sentence structures.

- NLTK (Natural Language Toolkit): A comprehensive Python library for language processing. NLTK includes a sentence tokenizer that effectively splits text into sentences. Example:

text = "..." # Your text from langchain.text_splitter import NLTKTextSplitter text_splitter = NLTKTextSplitter() docs = text_splitter.split_text(text) - spaCy: An advanced Python library for NLP tasks, spaCy offers efficient sentence segmentation. Example:

text = "..." # Your text from langchain.text_splitter import SpacyTextSplitter text_splitter = SpacyTextSplitter() docs = text_splitter.split_text(text)

- Naive Splitting: A basic method where sentences are split using periods and new lines. Example:

- Recursive Chunking: Recursive chunking is an iterative method that splits text hierarchically using various separators. It adapts to create chunks of similar size or structure by recursively applying different criteria. Example using LangChain:

text = "..." # Your text from langchain.text_splitter import RecursiveCharacterTextSplitter text_splitter = RecursiveCharacterTextSplitter( chunk_size = 256, chunk_overlap = 20 ) docs = text_splitter.create_documents([text]) - Structure-based Chunking: For formatted content like Markdown or LaTeX, specialized chunking can be applied to maintain the original structure:

- Markdown Chunking: Recognizes Markdown syntax and divides content based on structure. Example:

from langchain.text_splitter import MarkdownTextSplitter markdown_text = "..." markdown_splitter = MarkdownTextSplitter(chunk_size=100, chunk_overlap=0) docs = markdown_splitter.create_documents([markdown_text]) - LaTeX Chunking: Parses LaTeX commands and environments to chunk content while preserving its logical organization.

- Markdown Chunking: Recognizes Markdown syntax and divides content based on structure. Example:

- Semantic Chunking: Segment text based on semantic similarity. This means that sentences with the strongest semantic connections are grouped together, while sentences that move to another topic or theme are separated into distinct chunks. Notebook.

- Semantic chunking can be summarized in four steps:

- Split the text into sentences, paragraphs, or other rule-based units.

- Vectorize a window of sentences or other units.

- Calculate the cosine distance between the embedded windows.

- Merge sentences or units until the cosine similarity value reaches a specific threshold.

- The following figure (source) visually summarizes the overall process:

- Semantic chunking can be summarized in four steps:

- Sentence Splitting: This method aligns with models optimized for embedding sentence-level content. Different tools and techniques can be used for sentence splitting:

- Fixed-size Chunking: Simply decide the number of tokens in our chunk along with whether there should be overlap between them or not. Overlap between chunks guarantees there to be minimal semantic context loss between chunks. This option is computationally cheap and simple to implement.

- “As a rule of thumb, if the chunk of text makes sense without the surrounding context to a human, it will make sense to the language model as well. Therefore, finding the optimal chunk size for the documents in the corpus is crucial to ensuring that the search results are accurate and relevant.” (source)

Figuring out the ideal chunk size

- Choosing the right chunk size is foundational to building an effective RAG system. It directly influences retrieval quality, model efficiency, and how well the system captures relevant context for downstream tasks. Poor chunking can lead to fragmented information or excessive context loss, undermining overall performance.

-

Building a RAG system involves determining the ideal chunk sizes for the documents that the retriever component will process. The ideal chunk size depends on several factors:

-

Data Characteristics: The nature of your data is crucial. For text documents, consider the average length of paragraphs or sections. If the documents are well-structured with distinct sections, these natural divisions might serve as a good basis for chunking.

-

Retriever Constraints: The retriever model you choose (like BM25, TF-IDF, or a neural retriever like DPR) might have limitations on the input length. It’s essential to ensure that the chunks are compatible with these constraints.

-

Memory and Computational Resources: Larger chunk sizes can lead to higher memory usage and computational overhead. Balance the chunk size with the available resources to ensure efficient processing.

-

Task Requirements: The nature of the task (e.g., question answering, document summarization) can influence the ideal chunk size. For detailed tasks, smaller chunks might be more effective to capture specific details, while broader tasks might benefit from larger chunks to capture more context.

-

Experimentation: Often, the best way to determine the ideal chunk size is through empirical testing. Run experiments with different chunk sizes and evaluate the performance on a validation set to find the optimal balance between granularity and context.

-

Overlap Consideration: Sometimes, it’s beneficial to have overlap between chunks to ensure that no important information is missed at the boundaries. Decide on an appropriate overlap size based on the task and data characteristics.

-

- To summarize, determining the ideal chunk size for a RAG system is a balancing act that involves considering the characteristics of your data, the limitations of your retriever model, the resources at your disposal, the specific requirements of your task, and empirical experimentation. It’s a process that may require iteration and fine-tuning to achieve the best results.

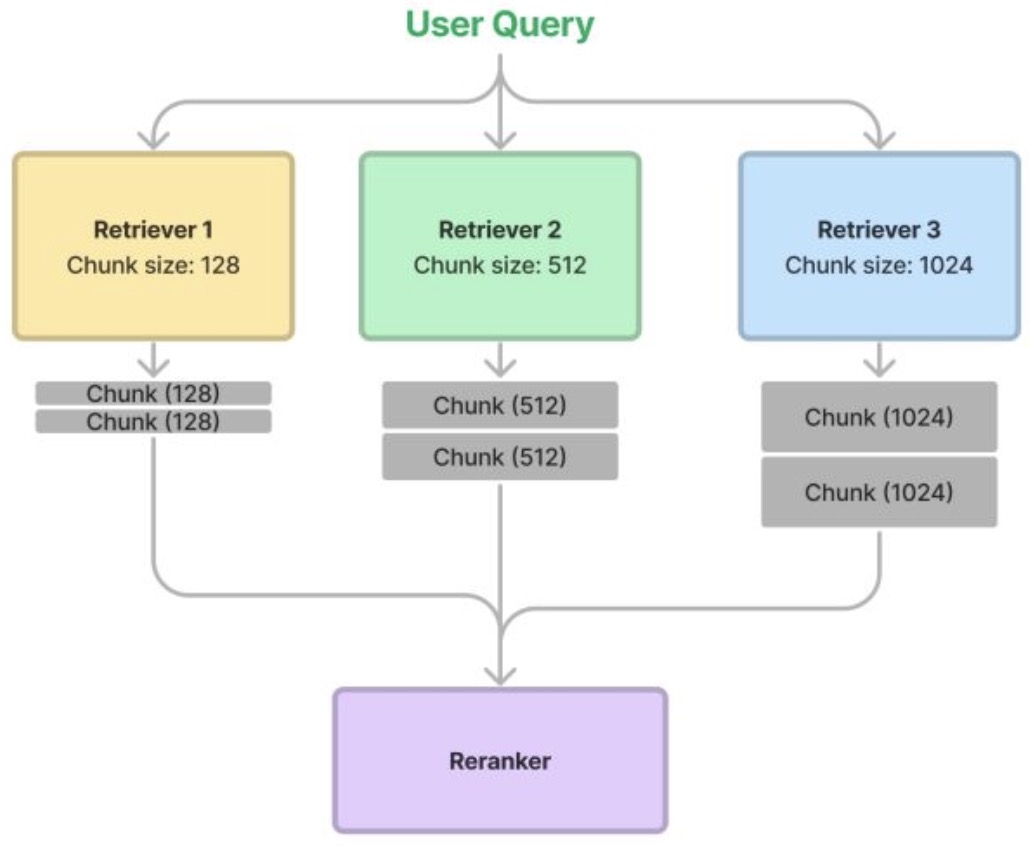

Retriever Ensembling and Reranking

- In some scenarios, it may be beneficial to simultaneously utilize multiple chunk sizes and apply a re-ranking mechanism to refine the retrieved results. A detailed discourse on re-ranking is available in the Re-ranking section.

- This approach serves two primary purposes:

- It potentially improves the quality of retrieved content—albeit at increased computational cost—by aggregating outputs from multiple chunking strategies, provided the re-ranker performs with a reasonable degree of accuracy.

- It enables systematic comparison of different retrieval methods relative to the re-ranker’s effectiveness.

-

The methodology proceeds as follows:

- Segment the same source document using various chunk sizes, for example: 128, 256, 512, and 1024 tokens.

- During the retrieval phase, extract relevant segments from each retrieval method, thereby forming an ensemble of retrievers.

- Apply a re-ranking model to prioritize and filter the aggregated results.

- The following diagram (source) illustrates the process.

- According to evaluation data provided by LlamaIndex, the ensemble retrieval strategy leads to a modest improvement in faithfulness metrics, suggesting slightly enhanced relevance of retrieved content. However, pairwise comparisons show equal preference between the ensembled and baseline approaches, thereby leaving the superiority of ensembling open to debate.

- It is important to note that this ensembling methodology is not limited to variations in chunk size. It can also be extended to other dimensions of a RAG pipeline, including vector-based, keyword-based, and hybrid search strategies.

Embeddings

- Once you have your prompt chunked appropriately, the next step is to embed it. Embedding prompts and documents in RAG involves transforming both the user’s query (prompt) and the documents in the knowledge base into a format that can be effectively compared for relevance. This process is critical for RAG’s ability to retrieve the most relevant information from its knowledge base in response to a user query. Here’s how it typically works:

- One option to help pick which embedding model would be best suited for your task is to look at HuggingFace’s Massive Text Embedding Benchmark (MTEB) leaderboard. There is a question of whether a dense or sparse embedding can be used so let’s look into benefits of each below:

- Sparse embedding: Sparse embeddings such as TF-IDF are great for lexical matching the prompt with the documents. Best for applications where keyword relevance is crucial. It’s computationally less intensive but may not capture the deeper semantic meanings in the text.

- Semantic embedding: Semantic embeddings, such as BERT or SentenceBERT lend themselves naturally to the RAG use-case.

- BERT: Suitable for capturing contextual nuances in both the documents and queries. Requires more computational resources compared to sparse embeddings but offers more semantically rich embeddings.

- SentenceBERT: Ideal for scenarios where the context and meaning at the sentence level are important. It strikes a balance between the deep contextual understanding of BERT and the need for concise, meaningful sentence representations. This is usually the preferred route for RAG.

Naive Chunking vs. Late Chunking vs. Late Interaction (ColBERT and ColPali)

-

The choice between naive chunking, late chunking, and late interaction (ColBERT/ColPali) depends on the specific requirements of the retrieval task:

- Naive Chunking is suitable for scenarios with strict resource constraints but where retrieval precision is less critical.

- Late Chunking, introduced by JinaAI, offers an attractive middle ground, maintaining context and providing improved retrieval accuracy without incurring significant additional costs. Put simply, late chunking balances the trade-offs between cost and precision, making it an excellent option for building scalable and effective RAG systems, particularly in long-context retrieval scenarios.

- Late Interaction (ColBERT/ColPali) is best suited for applications where retrieval precision is paramount and resource costs are less of a concern.

-

Let’s explore the differences between three primary strategies: Naive Chunking, Late Chunking, and Late Interaction (ColBERT and ColPali), focusing on their methodologies, advantages, and trade-offs.

Overview

- Long-context retrieval presents a challenge when balancing precision, context retention, and cost efficiency. Solutions range from simple and low-cost, like Naive Chunking, to more sophisticated and resource-intensive approaches, such as Late Interaction ([ColBERT](https://arxiv.org/abs/2004.12832)). Late Chunking, a novel approach by JinaAI, offers a middle ground, preserving context with efficiency comparable to Naive Chunking.

Naive/Vanilla Chunking

What is Naive/Vanilla Chunking?

- As discussed in the Chunking section, naive/vanilla chunking divides a document into fixed-size chunks based on metrics like sentence boundaries or token count (e.g., 512 tokens per chunk).

- Each chunk is independently embedded into a vector without considering the context of neighboring chunks.

Example

-

Consider the following paragraph: Alice went for a walk in the woods one day and on her walk, she spotted something. She saw a rabbit hole at the base of a large tree. She fell into the hole and found herself in a strange new world.

-

If chunked by sentences:

- Chunk 1: “Alice went for a walk in the woods one day and on her walk, she spotted something.”

- Chunk 2: “She saw a rabbit hole at the base of a large tree.”

- Chunk 3: “She fell into the hole and found herself in a strange new world.”

Advantages and Limitations

- Advantages:

- Efficient in terms of storage and computation.

- Simple to implement and integrate with most retrieval pipelines.

- Limitations:

- Context Loss: Each chunk is processed independently, leading to a loss of contextual relationships. For example, the connection between “she” and “Alice” would be lost, reducing retrieval accuracy for context-heavy queries like “Where did Alice fall?”.

- Fragmented Meaning: Splitting paragraphs or semantically related sections can dilute the meaning of each chunk, reducing retrieval precision.

Late Chunking

What is Late Chunking?

- Late Chunking flips the order of vectorizing (i.e., embedding generation) and chunking compared to naive/vanilla chunking. In other words, it delays the chunking process until after the entire document has been embedded into token-level representations. This allows chunks to retain context from the full document, leading to richer, more contextually aware embeddings.

How Late Chunking Works

- Embedding First: The entire document is embedded into token-level representations using a long context model.

- Chunking After: After embedding, the token-level representations are pooled into chunks based on a predefined chunking strategy (e.g., 512-token chunks).

- Context Retention: Each chunk retains contextual information from the full document, allowing for improved retrieval precision without increasing storage costs.

Example

- Using the same paragraph:

- The entire paragraph is first embedded as a whole, preserving the relationships between all sentences.

- The document is then split into chunks after embedding, ensuring that chunks like “She fell into the hole…” are contextually aware of the mention of “Alice” from earlier sentences.

Advantages and Trade-offs

- Advantages:

- Context Preservation: Late chunking ensures that the relationship between tokens across different chunks is maintained.

- Efficiency: Late chunking requires the same amount of storage as naive chunking while significantly improving retrieval accuracy.

- Trade-offs:

- Requires Long Context Models: To embed the entire document at once, a model with long-context capabilities (e.g., supporting up to 8192 tokens) is necessary.

- Slightly Higher Compute Costs: Late chunking introduces an extra pooling step after embedding, although it’s more efficient than late interaction approaches like ColBERT.

Late Interaction

What is Late Interaction?

- Late Interaction refers to a retrieval approach where token embeddings for both the document and the query are computed separately and compared at the token level, without any pooling operation. The key advantage is fine-grained, token-level matching, which improves retrieval accuracy.

ColBERT: Late Interaction in Practice

- ColBERT (Contextualized Late Interaction over BERT) by Khattab et al. (2020) uses late interaction to compare individual token embeddings from the query and document using a MaxSim operator. This allows for granular, token-to-token comparisons, which results in highly precise matches but at a significantly higher storage cost.

MaxSim: A Key Component of ColBERT

- MaxSim (Maximum Similarity) is a core component of the ColBERT retrieval framework. It refers to a specific way of calculating the similarity between token embeddings of a query and document during retrieval.

- Here’s a step-by-step breakdown of how MaxSim works:

- Token-level Embedding Comparisons:

- When a query is processed, it is tokenized and each token is embedded separately (e.g., “apple” and “sweet”).

- The document is already indexed at the token level, meaning that each token in the document also has its own embedding.

- Similarity Computation:

- At query time, the system compares each query token embedding to every token embedding in the document. The similarity between two token embeddings is often measured using a dot product or cosine similarity.

- For example, given a query token

"apple"and a document containing tokens like"apple","banana", and"fruit", the system computes the similarity of"apple"to each of these tokens.

- Selecting Maximum Similarity (MaxSim):

- The system selects the highest similarity score between the query token and the document tokens. This is known as the MaxSim operation.

- In the above example, the system compares the similarity of

"apple"(query token) with all document tokens and selects the highest similarity score, say between"apple"and the corresponding token"apple"in the document.

- MaxSim Aggregation:

The MaxSim scores for each token in the query are aggregated (usually summed) to calculate a final relevance score for the document with respect to the query.

- This approach allows for token-level precision, capturing subtle nuances in the document-query matching that would be lost with traditional pooling methods.

- Token-level Embedding Comparisons:

Example

-

Consider the query

"sweet apple"and two documents:- Document 1: “The apple is sweet and crisp.”

- Document 2: “The banana is ripe and yellow.”

-

Each query token,

"sweet"and"apple", is compared with every token in both documents:- For Document 1,

"sweet"has a high similarity with"sweet"in the document, and"apple"has a high similarity with"apple". - For Document 2,

"sweet"does not have a strong match with any token, and"apple"does not appear.

- For Document 1,

-

Using MaxSim, Document 1 would have a higher relevance score for the query than Document 2 because the most similar tokens in Document 1 (i.e.,

"sweet"and"apple") align more closely with the query tokens.

Advantages and Trade-offs

- Advantages:

- High Precision: ColBERT’s token-level comparisons, facilitated by MaxSim, lead to highly accurate retrieval, particularly for specific or complex queries.

- Flexible Query Matching: By calculating similarity at the token level, ColBERT can capture fine-grained relationships that simpler models might overlook.

- Trade-offs:

- Storage Intensive: Storing all token embeddings for each document can be extremely costly. For example, storing token embeddings for a corpus of 100,000 documents could require upwards of 2.46 TB.

- Computational Complexity: While precise, MaxSim increases computational demands at query time, as each token in the query must be compared to all tokens in the document.

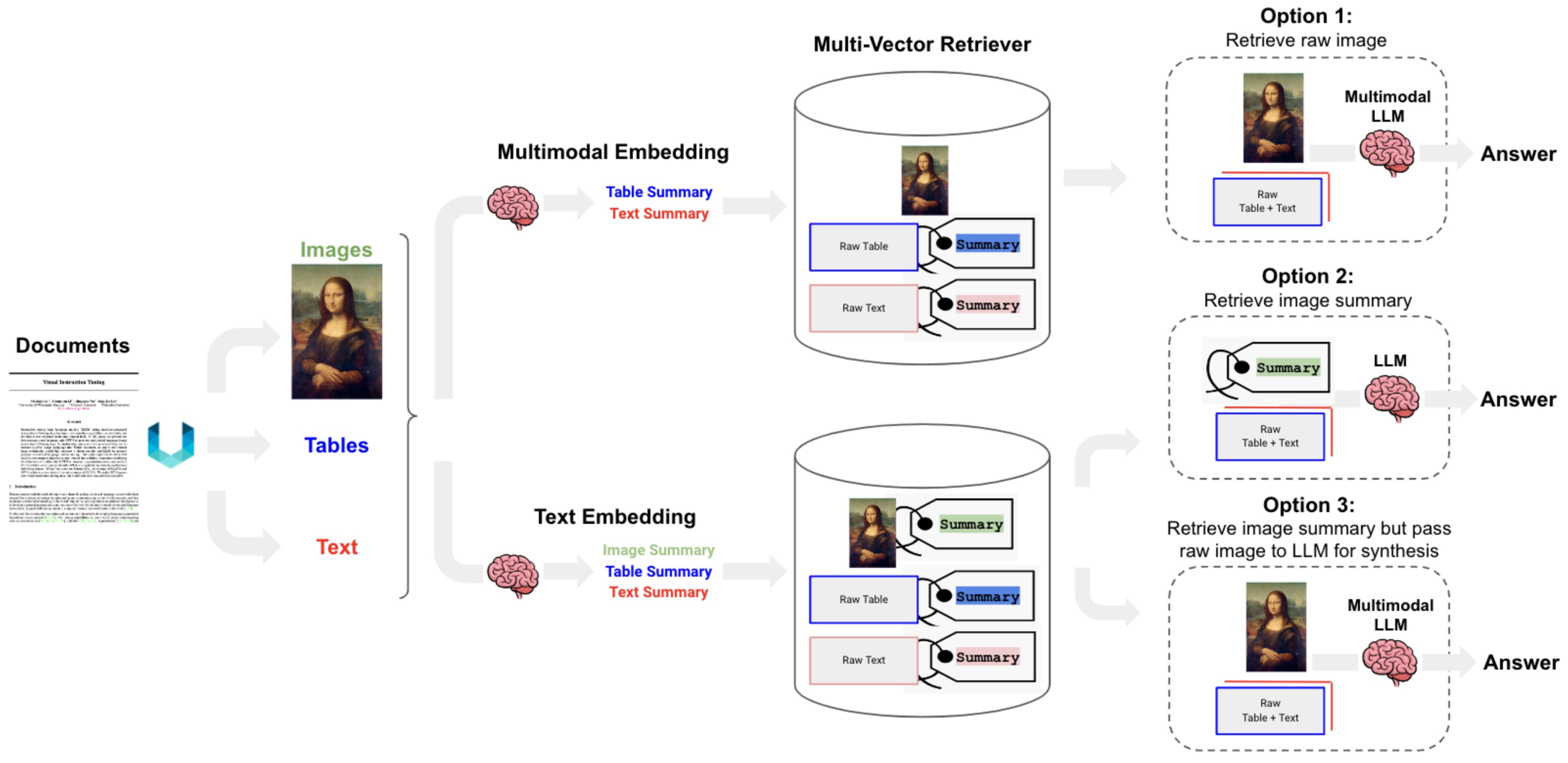

ColPali: Expanding to Multimodal Retrieval

- ColPali by Faysse et al. (2024) integrates the late interaction mechanism from ColBERT with a Vision Language Model (VLM) called PaliGemma to handle multimodal documents, such as PDFs with text, images, and tables. Instead of relying on OCR and layout parsing, ColPali uses screenshots of PDF pages to directly embed both visual and textual content. This enables powerful multimodal retrieval in complex documents.

Example

- Consider a complex PDF with both text and images. ColPali treats each page as an image and embeds it using a VLM. When a user queries the system, the query is matched with embedded screenshots via late interaction, improving the ability to retrieve relevant pages based on both visual and textual content.

.jpg)

Comparative Analysis

| Metric | Naive Chunking | Late Chunking | Late Interaction ([ColBERT](https://arxiv.org/abs/2004.12832)) |

|---|---|---|---|

| Storage Requirements | Minimal storage, ~4.9 GB for 100,000 documents | Same as naive chunking, ~4.9 GB for 100,000 documents | Extremely high storage, ~2.46 TB for 100,000 documents |

| Retrieval Precision | Lower precision due to context fragmentation | Improved precision by retaining context across chunks | Highest precision with token-level matching |

| Complexity and Cost | Simple implementation, minimal resources | Moderately more complex, efficient in compute and storage | Highly complex, resource-intensive in both storage and computation |

Sentence Embeddings: The What and Why

Background: Differences compared to Token-Level Models like BERT

- As an overview, let’s look into how sentence transformers differ compared to token-level embedding models such as BERT.

- Sentence Transformers are a modification of the traditional BERT model, tailored specifically for generating embeddings of entire sentences (i.e., sentence embeddings). The key differences in their training approaches are:

- Objective: BERT is trained to predict masked words in a sentence and next sentence prediction. It’s optimized for understanding words and their context within a sentence. Sentence Transformers, on the other hand, are trained specifically to understand the meaning of entire sentences. They generate embeddings where sentences with similar meanings are close in the embedding space.

- Level of Embedding: The primary difference lies in the level of embedding. BERT provides embeddings for each token (word or subword) in a sentence, whereas sentence transformers provide a single embedding for the entire sentence.

- Training Data and Tasks: While BERT is primarily trained on large text corpora with tasks focused on understanding words in context, Sentence Transformers are often trained on data sets that include sentence pairs. This training focuses on similarity and relevance, teaching the model how to understand and compare the meanings of entire sentences.

- Siamese and Triplet Network Structures: Sentence Transformers often use Siamese or Triplet network structures. These networks involve processing pairs or triplets of sentences and adjusting the model so that similar sentences have similar embeddings, and dissimilar sentences have different embeddings. This is different from BERT’s training, which does not inherently involve direct comparison of separate sentences.

- Fine-Tuning for Specific Tasks: Sentence Transformers are often fine-tuned on specific tasks like semantic similarity, paraphrase identification, or information retrieval. This fine-tuning is more focused on sentence-level understanding as opposed to BERT, which might be fine-tuned for a wider range of NLP tasks like question answering, sentiment analysis, etc., focusing on word or phrase-level understanding.

- Applicability: BERT and similar models are more versatile for tasks that require understanding at the token level (like named entity recognition, question answering), whereas sentence transformers are more suited for tasks that rely on sentence-level understanding (like semantic search, sentence similarity).

- Efficiency in Generating Sentence Embeddings or Similarity Tasks: In standard BERT, generating sentence embeddings usually involves taking the output of one of the hidden layers (often the first token,

[CLS]) as a representation of the whole sentence. However, this method is not always optimal for sentence-level tasks. Sentence Transformers are specifically optimized to produce more meaningful and useful sentence embeddings and are thus more efficient for tasks involving sentence similarity computations. Since they produce a single vector per sentence, computing similarity scores between sentences is computationally less intensive compared to token-level models.

- In summary, while BERT is a general-purpose language understanding model with a focus on word-level contexts, Sentence Transformers are adapted specifically for understanding and comparing the meanings of entire sentences, making them more effective for tasks that require sentence-level semantic understanding.

Related: Training Process for Sentence Transformers vs. Token-Level Embedding Models

- Let’s look into how sentence transformers trained differently compared to token-level embedding models such as BERT.

- Sentence transformers are trained to generate embeddings at the sentence level, which is a distinct approach from token-level embedding models like BERT. Here’s an overview of their training and how it differs from token-level models:

- Model Architecture: Sentence transformers often start with a base model similar to BERT or other transformer architectures. However, the focus is on outputting a single embedding vector for the entire input sentence, rather than individual tokens.

- Training Data: They are trained on a variety of datasets, often including pairs or groups of sentences where the relationship (e.g., similarity, paraphrasing) between the sentences is known.

- Training Objectives: BERT is pre-trained on objectives like masked language modeling (predicting missing words) and next sentence prediction, which are focused on understanding the context at the token level. Sentence transformers, on the other hand, are trained specifically to understand the context and relationships at the sentence level. Their training objective is typically to minimize the distance between embeddings of semantically similar sentences while maximizing the distance between embeddings of dissimilar sentences. This is achieved through contrastive loss functions like triplet loss, cosine similarity loss, etc.

- Output Representation: In BERT, the sentence-level representation is typically derived from the embedding of a special token (like

[CLS]) or by pooling (i.e., averaging) token embeddings. Sentence transformers are designed to directly output a meaningful sentence-level representation. - Fine-tuning for Downstream Tasks: Sentence transformers can be fine-tuned on specific tasks, such as semantic text similarity, where the model learns to produce embeddings that capture the nuanced meaning of entire sentences.

- In summary, sentence transformers are specifically optimized for producing representations at the sentence level, focusing on capturing the overall semantics of sentences, which makes them particularly useful for tasks involving sentence similarity and clustering. This contrasts with token-level models like BERT, which are more focused on understanding and representing the meaning of individual tokens within their wider context.

Applying Sentence Transformers for RAG

- Now, let’s look into why sentence transformers are the numero uno choice of models to generate embeddings for RAG.

- RAG leverages Sentence Transformers for their ability to understand and compare the semantic content of sentences. This integration is particularly useful in scenarios where the model needs to retrieve relevant information before generating a response. Here’s how Sentence Transformers are useful in a RAG setting:

- Improved Document Retrieval: Sentence Transformers are trained to generate embeddings that capture the semantic meaning of sentences. In a RAG setting, these embeddings can be used to match a query (like a user’s question) with the most relevant documents or passages in a database. This is critical because the quality of the generated response often depends on the relevance of the retrieved information.

- Efficient Semantic Search: Traditional keyword-based search methods might struggle with understanding the context or the semantic nuances of a query. Sentence Transformers, by providing semantically meaningful embeddings, enable more nuanced searches that go beyond keyword matching. This means that the retrieval component of RAG can find documents that are semantically related to the query, even if they don’t contain the exact keywords.

- Contextual Understanding for Better Responses: By using Sentence Transformers, the RAG model can better understand the context and nuances of both the input query and the content of potential source documents. This leads to more accurate and contextually appropriate responses, as the generation component of the model has more relevant and well-understood information to work with.

- Scalability in Information Retrieval: Sentence Transformers can efficiently handle large databases of documents by pre-computing embeddings for all documents. This makes the retrieval process faster and more scalable, as the model only needs to compute the embedding for the query at runtime and then quickly find the closest document embeddings.

- Enhancing the Generation Process: In a RAG setup, the generation component benefits from the retrieval component’s ability to provide relevant, semantically-rich information. This allows the language model to generate responses that are not only contextually accurate but also informed by a broader range of information than what the model itself was trained on.

- In summary, Sentence Transformers enhance the retrieval capabilities of RAG models with LLMs by enabling more effective semantic search and retrieval of information. This leads to improved performance in tasks that require understanding and generating responses based on large volumes of text data, such as question answering, chatbots, and information extraction.

Retrieval

- Let’s look at three different types of retrieval: standard, sentence window, and auto-merging. Each of these approaches has specific strengths and weaknesses, and their suitability depends on the requirements of the RAG task, including the nature of the dataset, the complexity of the queries, and the desired balance between specificity and contextual understanding in the responses.

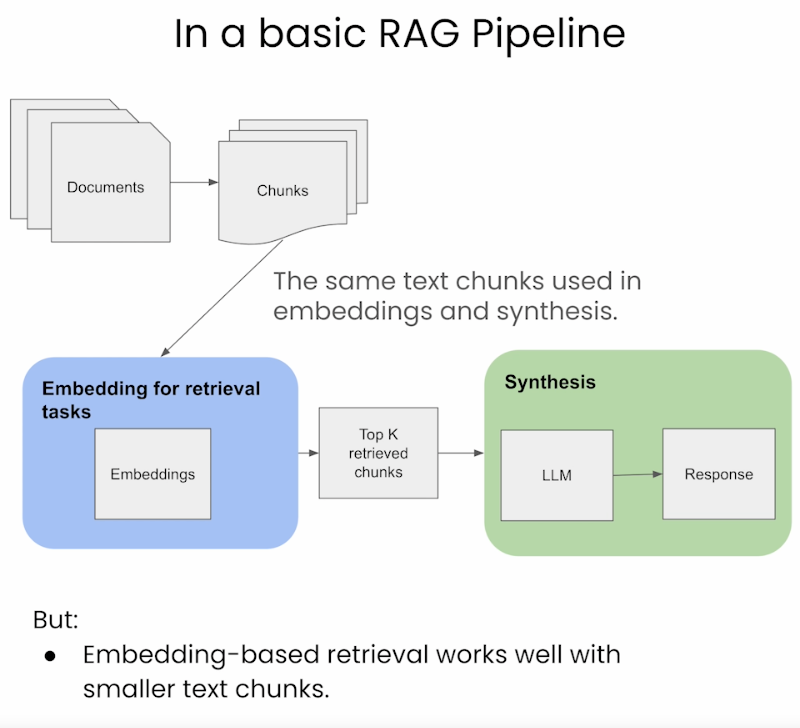

Standard/Naive approach

- As we see in the image below (source), the standard pipeline uses the same text chunk for indexing/embedding as well as the output synthesis.

- In the context of RAG in LLMs, here are the advantages and disadvantages of the three approaches:

Advantages

- Simplicity and Efficiency: This method is straightforward and efficient, using the same text chunk for both embedding and synthesis, simplifying the retrieval process.

- Uniformity in Data Handling: It maintains consistency in the data used across both retrieval and synthesis phases.

Disadvantages

- Limited Contextual Understanding: LLMs may require a larger window for synthesis to generate better responses, which this approach may not adequately provide.

- Potential for Suboptimal Responses: Due to the limited context, the LLM might not have enough information to generate the most relevant and accurate responses.

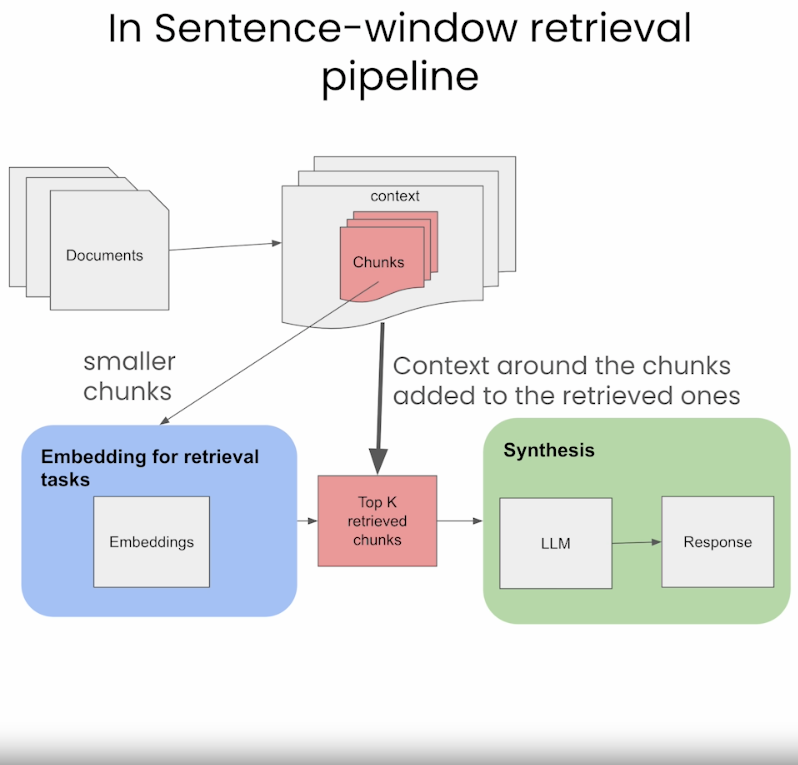

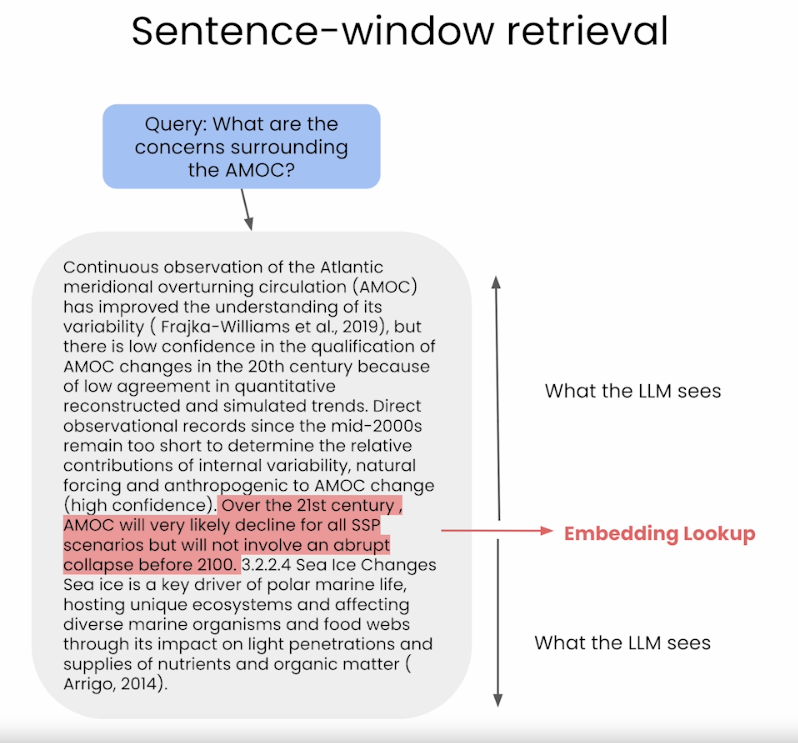

Sentence-Window Retrieval / Small-to-Large Retrieval

- The sentence-window approach breaks down documents into smaller units, such as sentences or small groups of sentences.

- It decouples the embeddings for retrieval tasks (which are smaller chunks stored in a Vector DB), but for synthesis it adds back in the context around the retrieved chunks, as seen in the image below (source).

- During retrieval, we retrieve the sentences that are most relevant to the query via similarity search and replace the sentence with the full surrounding context (using a static sentence-window around the context, implemented by retrieving sentences surrounding the one being originally retrieved) as shown in the figure below (source).

Advantages

- Enhanced Specificity in Retrieval: By breaking documents into smaller units, it enables more precise retrieval of segments directly relevant to a query.

- Context-Rich Synthesis: It reintroduces context around the retrieved chunks for synthesis, providing the LLM with a broader understanding to formulate responses.

- Balanced Approach: This method strikes a balance between focused retrieval and contextual richness, potentially improving response quality.

Disadvantages

- Increased Complexity: Managing separate processes for retrieval and synthesis adds complexity to the pipeline.

- Potential Contextual Gaps: There’s a risk of missing broader context if the surrounding information added back is not sufficiently comprehensive.

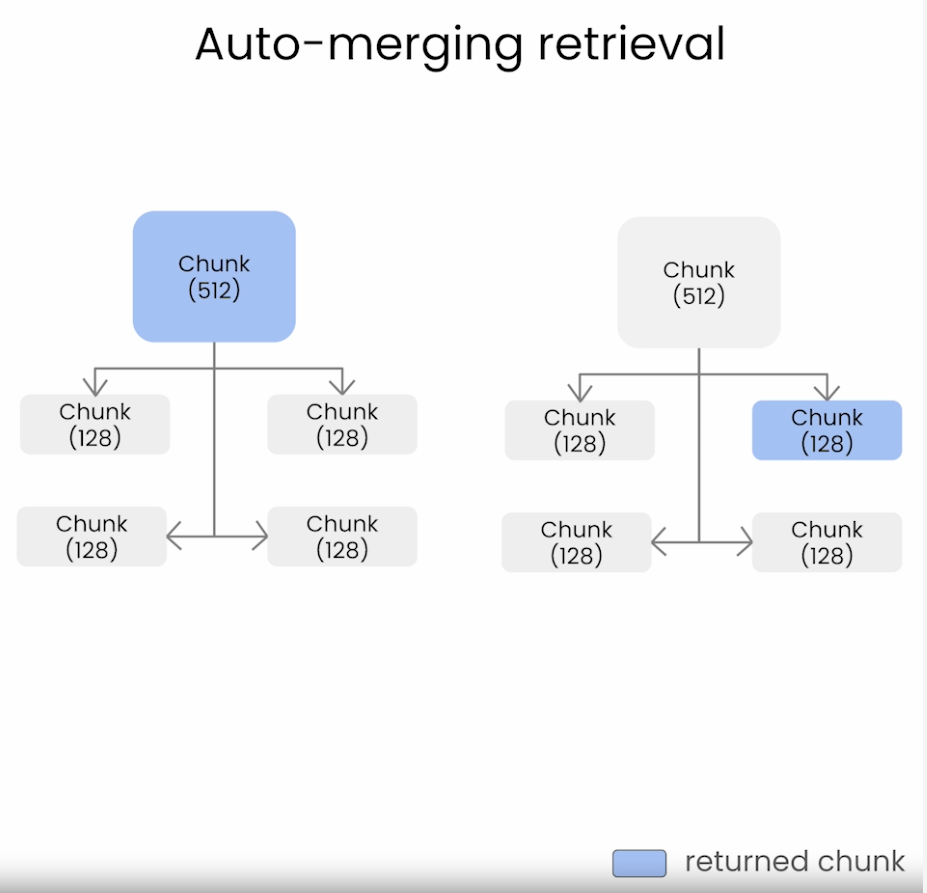

Auto-merging Retriever / Hierarchical Retriever

- The image below (source), illustrates how auto-merging retrieval can work where it doesn’t retrieve a bunch of fragmented chunks as would happen with the naive approach.

- The fragmentation in the naive approach would be worse with smaller chunk sizes as shown below (source).

- Auto-merging retrieval aims to combine (or merge) information from multiple sources or segments of text to create a more comprehensive and contextually relevant response to a query. This approach is particularly useful when no single document or segment fully answers the query but rather the answer lies in combining information from multiple sources.

- It allows smaller chunks to be merged into bigger parent chunks. It does this via the following steps:

- Define a hierarchy of smaller chunks linked to parent chunks.

- If the set of smaller chunks linking to a parent chunk exceeds some threshold (say, cosine similarity), then “merge” smaller chunks into the bigger parent chunk.

- The method will finally retrieve the parent chunk for better context.

Advantages

- Comprehensive Contextual Responses: By merging information from multiple sources, it creates responses that are more comprehensive and contextually relevant.

- Reduced Fragmentation: This approach addresses the issue of fragmented information retrieval, common in the naive approach, especially with smaller chunk sizes.

- Dynamic Content Integration: It dynamically combines smaller chunks into larger, more informative ones, enhancing the richness of the information provided to the LLM.

Disadvantages

- Complexity in Hierarchy and Threshold Management: The process of defining hierarchies and setting appropriate thresholds for merging is complex and critical for effective functioning.

- Risk of Over-generalization: There’s a possibility of merging too much or irrelevant information, leading to responses that are too broad or off-topic.

- Computational Intensity: This method might be more computationally intensive due to the additional steps in merging and managing the hierarchical structure of text chunks.

Contextual Retrieval

- For LLMs to deliver relevant and accurate responses, they must retrieve the right information from a knowledge base. Traditional RAG improves model accuracy by fetching relevant text chunks and appending them to the prompt. However, such methods often remove crucial context when encoding information, leading to failed retrievals and suboptimal outputs.

- Contextual Retrieval, introduced by Anthropic, is an advanced technique designed to improve this process by ensuring that retrieved chunks maintain their original context. It employs contextual embeddings – embeddings that incorporate chunk-specific background information and contextual BM25 – an enhanced BM25 ranking that considers the broader document context.

-

By prepending contextual metadata to each document chunk before embedding, Contextual Retrieval significantly enhances search accuracy. This approach reduces failed retrievals by 49% and, when combined with reranking, by 67%.

- Why Context Matters in Retrieval:

- Traditional RAG solutions divide documents into small chunks for efficient retrieval. However, these fragments often lose critical context. For example, the statement “The company’s revenue grew by 3% over the previous quarter” lacks information about which company or quarter it refers to. Contextual Retrieval solves this by embedding relevant metadata into each chunk.

- Implementation of Contextual Retrieval:

- To implement Contextual Retrieval, a model like Claude 3 Haiku can generate concise context for each chunk. This context is then prepended before embedding and indexing, ensuring more precise retrieval. Developers can automate this process at scale using specialized retrieval pipelines.

- Prompt Used for Contextual Retrieval:

- Anthropic’s method involves using Claude to generate a short, document-specific context for each chunk using the following prompt:

<document> </document> Here is the chunk we want to situate within the whole document: <chunk> </chunk> Please give a short, succinct context to situate this chunk within the overall document for the purposes of improving search retrieval of the chunk. Answer only with the succinct context and nothing else. - This process automatically generates a concise contextualized description that is prepended to the chunk before embedding and indexing.

- Anthropic’s method involves using Claude to generate a short, document-specific context for each chunk using the following prompt:

- Combining Contextual Retrieval with Reranking:

- For maximum performance, Contextual Retrieval can be paired with reranking models, which filter and reorder retrieved chunks based on their relevance. This additional step enhances retrieval precision, ensuring only the most relevant chunks are passed to the LLM.

- The following flowchart from Anthropic’s blog shows the combined contextual retrieval and reranking stages which seek to maximize retrieval accuracy.

- Key Takeaways:

- Contextual Embeddings improve retrieval accuracy by preserving document meaning.

- BM25 + Contextualization enhances exact-match retrieval.

- Combining Contextual Retrieval with reranking further boosts retrieval effectiveness.

- Developers can implement Contextual Retrieval using prompt-based preprocessing and automated pipelines.

- With Contextual Retrieval, LLM-powered knowledge systems can achieve greater accuracy, scalability, and relevance, unlocking new levels of performance in real-world applications.

Using Approximate Nearest Neighbors (ANN) for Retrieval

- The next step is to consider which approximate nearest neighbors (ANN) library to choose from indexing. One option to pick the best option is to look at ANN-Benchmarks.

- A detailed discourse on the concept of ANN can be found in our ANN primer.

Re-ranking

- Re-ranking is an optional yet critical component in RAG pipelines, functioning as the refinement stage where an initially retrieved candidate set—usually limited to dozens of documents or passages—is reordered based on their relevance to the input query. This step ensures that the most pertinent content is prioritized for inclusion in the final prompt presented to the language model.

- As the candidate set is small, computationally intensive but accurate re-ranking techniques are feasible, with neural Learning-to-Rank (LTR) models being the most commonly used.

Neural Re-rankers: Types and Architectures

-

Neural re-rankers are broadly classified into three methodological paradigms based on how they evaluate relevance: pointwise, pairwise, and listwise. Each of these paradigms corresponds to specific models: monoBERT exemplifies the pointwise approach, duoBERT represents the pairwise method, and ListBERT along with ListT5 embody the listwise strategy, as detailed below:

-

monoBERT, proposed by Nogueira et al. (2019) in Multi-Stage Document Ranking with BERT, scores each document-query pair independently using BERT as a cross-encoder. Each document is concatenated with the query, and a relevance score is predicted for that pair alone. This makes monoBERT a pointwise model, offering high-quality relevance estimation but at a high inference cost when applied to many pairs.

-

duoBERT, also proposed by Nogueira et al. (2019) in Multi-Stage Document Ranking with BERT, extends monoBERT by comparing pairs of documents relative to a given query. It predicts which of two documents is more relevant, allowing for more nuanced and direct ranking decisions. This pairwise approach helps resolve fine-grained distinctions in relevance that monoBERT might overlook.

-

ListBERT, proposed by Kumar et al. (2022) in ListBERT: Learning to Rank E-commerce products with Listwise BERT, brings a listwise learning paradigm to transformer-based ranking. Instead of scoring documents independently or in pairs, ListBERT considers a full list of documents simultaneously. It uses listwise loss functions tailored for ranking tasks (e.g., ListMLE, Softmax Cross Entropy) and was originally applied in the context of e-commerce to rank products effectively.

-

ListT5, proposed by Yoon et al. (2024) in ListT5: Listwise Reranking with Fusion-in-Decoder Improves Zero-shot Retrieval, advances the listwise approach further by employing a Fusion-in-Decoder (FiD) architecture adapted from T5. This model jointly attends to multiple candidate documents during both training and inference, making it particularly suitable for zero-shot retrieval scenarios. It has shown state-of-the-art performance in contexts requiring the ranking of passages with minimal labeled data.

-

-

While monoBERT and duoBERT utilize cross-encoder architectures, ListBERT and ListT5 employ different mechanisms. ListBERT uses a listwise approach with BERT, and ListT5 is based on a Fusion-in-Decoder (FiD) architecture adapted from T5, which is not a traditional cross-encoder.

Domain-Specific Adaptations

- Reranking models can also be fine-tuned for specific domains to improve performance in specialized applications. For instance, Legal-BERT has been adapted for tasks like legal document classification and contract clause retrieval, demonstrating that domain-specific pretraining significantly boosts accuracy in re-ranking. Similar adaptations exist in finance, healthcare, and technical fields where vocabulary and relevance judgments differ markedly from general-purpose datasets.

Instruction-Following Re-ranking: Precision and Control in RAG

-

A growing frontier in reranking is instruction-following re-ranking, which introduces the ability to control ranking behavior using natural language instructions. This approach addresses the limitations of static relevance criteria by allowing dynamic, context-specific customization, which is particularly beneficial in enterprise RAG systems where documents may conflict or vary in trustworthiness and recency.

-

Examples of Natural Language Instructions:

- “Prioritize internal documentation over third-party sources. Favor the most recent information.”

- “Disregard news summaries. Emphasize detailed technical reports from trusted analysts.”

-

Advantages:

- Dynamic Relevance Modeling: Instructions enable runtime tuning of what “relevance” means, depending on the user’s intent or business context.

- Conflict Resolution: They allow systems to resolve contradictory or overlapping sources by enforcing prioritization rules.

- Prompt Optimization: Ensuring higher-quality content appears earlier in the prompt helps maximize the utility of the limited context window, where the LLM’s attention is most focused.

-

Implementation and Deployment:

- These rerankers are often deployed as standalone APIs or integrated modules that rescore a shortlist of candidate documents after the initial retrieval phase. They can be combined with other techniques such as late chunking or retriever ensembling, effectively acting as the final curation layer before the documents are fed into the language model.

- A notable example of this is Contextual AI’s system, presented in their post on the world’s first instruction-following reranker, which demonstrates real-world integration of this technology.

Response Generation / Synthesis

- The last step of the RAG pipeline is to generate responses back to the user. In this step, the model synthesizes the retrieved information with its pre-trained knowledge to generate coherent and contextually relevant responses. This process involves integrating the insights gleaned from various sources, ensuring accuracy and relevance, and crafting a response that is not only informative but also aligns with the user’s original query, maintaining a natural and conversational tone.

- Note that while creating the expanded prompt (with the retrieved top-\(k\) chunks) for an LLM to make an informed response generation, a strategic placement of vital information at the beginning or end of input sequences could enhance the RAG system’s effectiveness and thus make the system more performant. This is summarized in the paper below.

Lost in the Middle: How Language Models Use Long Contexts

- While recent language models have the ability to take long contexts as input, relatively little is known about how well the language models use longer context.

- This paper by Liu et al. from Percy Liang’s lab at Stanford, UC Berkeley, and Samaya AI analyzes language model performance on two tasks that require identifying relevant information within their input contexts: multi-document question answering and key-value retrieval. Put simply, they analyze and evaluate how LLMs use the context by identifying relevant information within it.

- They tested open-source (MPT-30B-Instruct, LongChat-13B) and closed-source (OpenAI’s GPT-3.5-Turbo and Anthropic’s Claude 1.3) models. They used multi-document question-answering where the context included multiple retrieved documents and one correct answer, whose position was shuffled around. Key-value pair retrieval was carried out to analyze if longer contexts impact performance.

- They find that performance is often highest when relevant information occurs at the beginning or end of the input context, and significantly degrades when models must access relevant information in the middle of long contexts. In other words, their findings basically suggest that Retrieval-Augmentation (RAG) performance suffers when the relevant information to answer a query is presented in the middle of the context window with strong biases towards the beginning and the end of it.

- A summary of their learnings is as follows:

- Best performance when the relevant information is at the beginning.

- Performance decreases with an increase in context length.

- Too many retrieved documents harm performance.

- Improving the retrieval and prompt creation step with a ranking stage could potentially boost performance by up to 20%.

- Extended-context models (GPT-3.5-Turbo vs. GPT-3.5-Turbo (16K)) are not better if the prompt fits the original context.

- Considering that RAG retrieves information from an external database – which most commonly contains longer texts that are split into chunks. Even with split chunks, context windows get pretty large very quickly, at least much larger than a “normal” question or instruction. Furthermore, performance substantially decreases as the input context grows longer, even for explicitly long-context models. Their analysis provides a better understanding of how language models use their input context and provides new evaluation protocols for future long-context models.

- “There is no specific inductive bias in transformer-based LLM architectures that explains why the retrieval performance should be worse for text in the middle of the document. I suspect it is all because of the training data and how humans write: the most important information is usually in the beginning or the end (think paper Abstracts and Conclusion sections), and it’s then how LLMs parameterize the attention weights during training.” (source)

- In other words, human text artifacts are often constructed in a way where the beginning and the end of a long text matter the most which could be a potential explanation to the characteristics observed in this work.

- You can also model this with the lens of two popular cognitive biases that humans face (primacy and recency bias), as in the following figure (source).

- The final conclusion is that combining retrieval with ranking (as in recommender systems) should yield the best performance in RAG for question answering.

- The following figure (source) shows an overview of the idea proposed in the paper: “LLMs are better at using info at beginning or end of input context”.

- The following figure from the paper illustrates the effect of changing the position of relevant information (document containing the answer) on multidocument question answering performance. Lower positions are closer to the start of the input context. Performance is generally highest when relevant information is positioned at the very start or very end of the context, and rapidly degrades when models must reason over information in the middle of their input context.

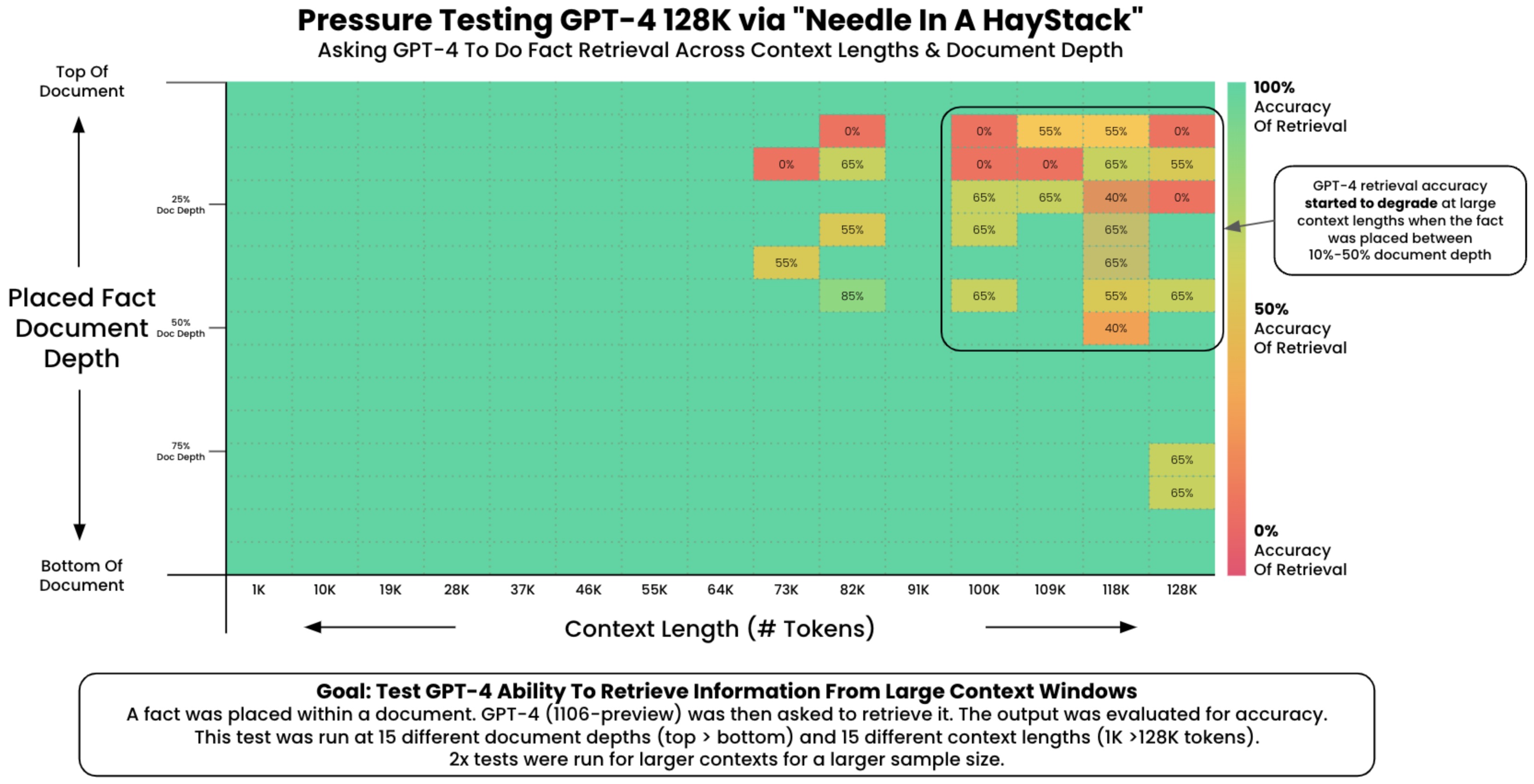

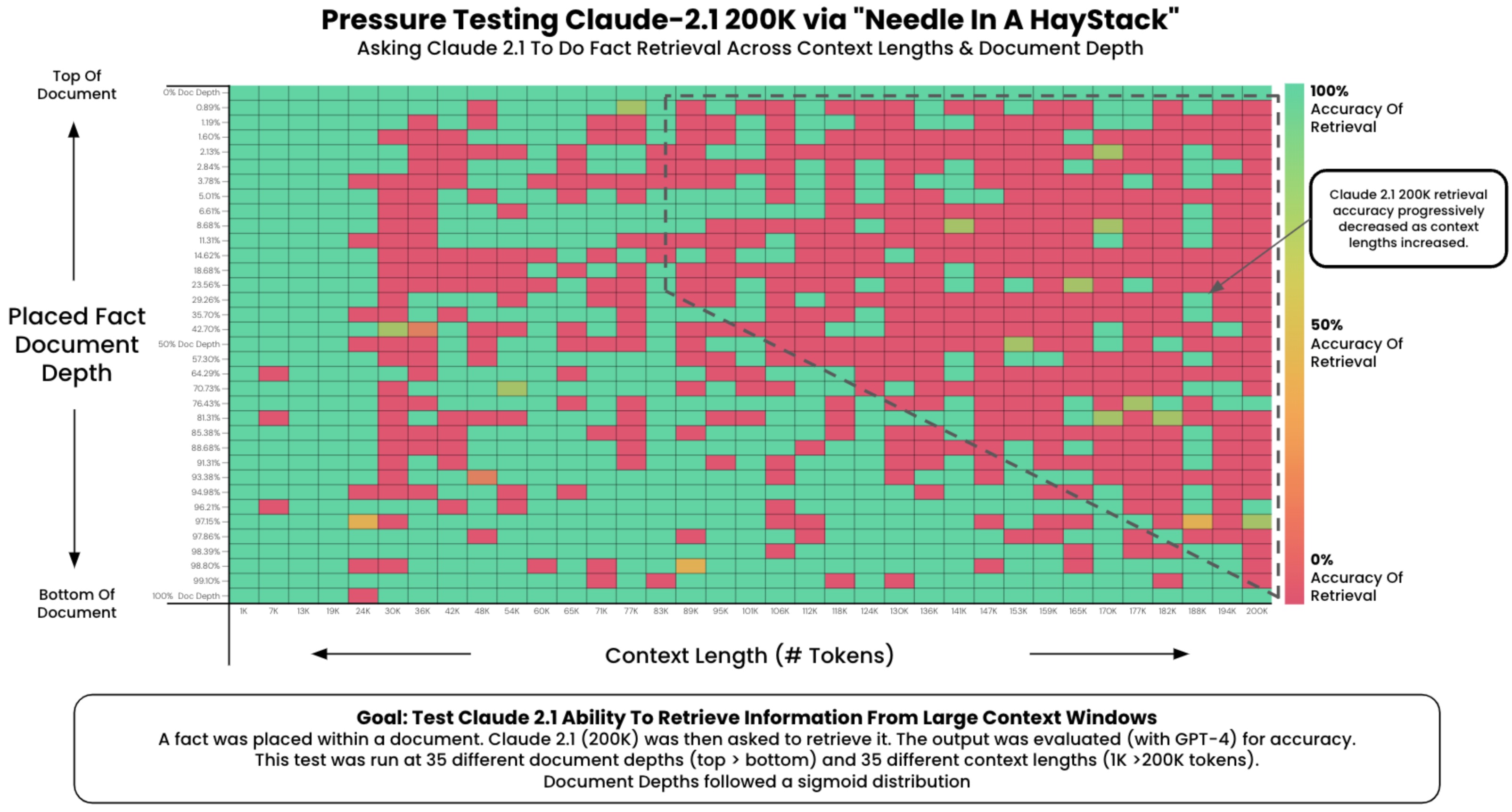

The “Needle in a Haystack” Test

- To understand the in-context retrieval ability of long-context LLMs over various parts of their prompt, a simple ‘needle in a haystack’ analysis could be conducted. This method involves embedding specific, targeted information (the ‘needle’) within a larger, more complex body of text (the ‘haystack’). The purpose is to test the LLM’s ability to identify and utilize this specific piece of information amidst a deluge of other data.

- In practical terms, the analysis could involve inserting a unique fact or data point into a lengthy, seemingly unrelated text. The LLM would then be tasked with tasks or queries that require it to recall or apply this embedded information. This setup mimics real-world situations where essential details are often buried within extensive content, and the ability to retrieve such details is crucial.

- The experiment could be structured to assess various aspects of the LLM’s performance. For instance, the placement of the ‘needle’ could be varied—early, middle, or late in the text—to see if the model’s retrieval ability changes based on information location. Additionally, the complexity of the surrounding ‘haystack’ can be modified to test the LLM’s performance under varying degrees of contextual difficulty. By analyzing how well the LLM performs in these scenarios, insights can be gained into its in-context retrieval capabilities and potential areas for improvement.

- This can be accomplished using the Needle In A Haystack library. The following plot shows OpenAI’s GPT-4-128K’s (top) and (bottom) performance with varying context length.

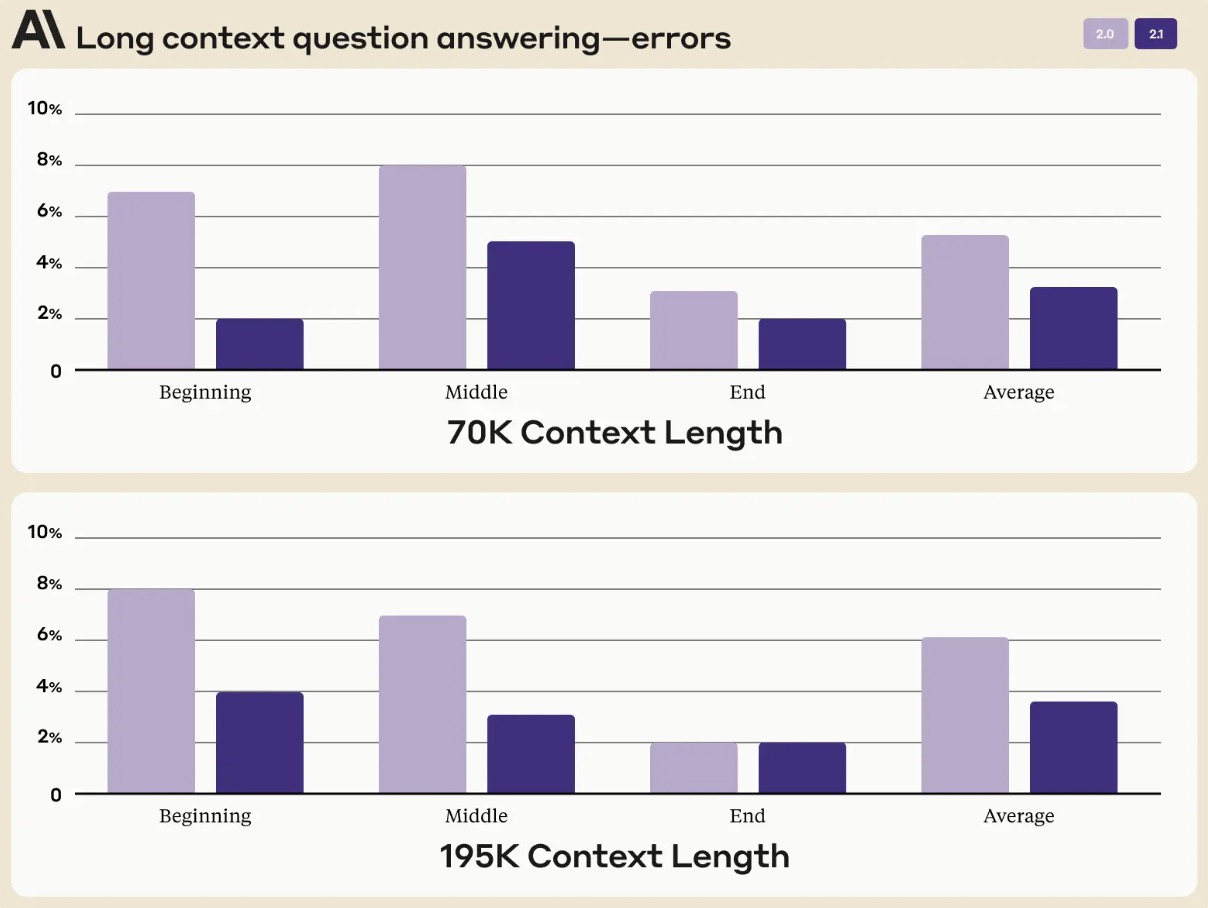

- The following figure (source) shows Claude 2.1’s long context question answering errors based on the areas of the prompt context length. On an average, Claude 2.1 demonstrated a 30% reduction in incorrect answers compared to Claude 2.

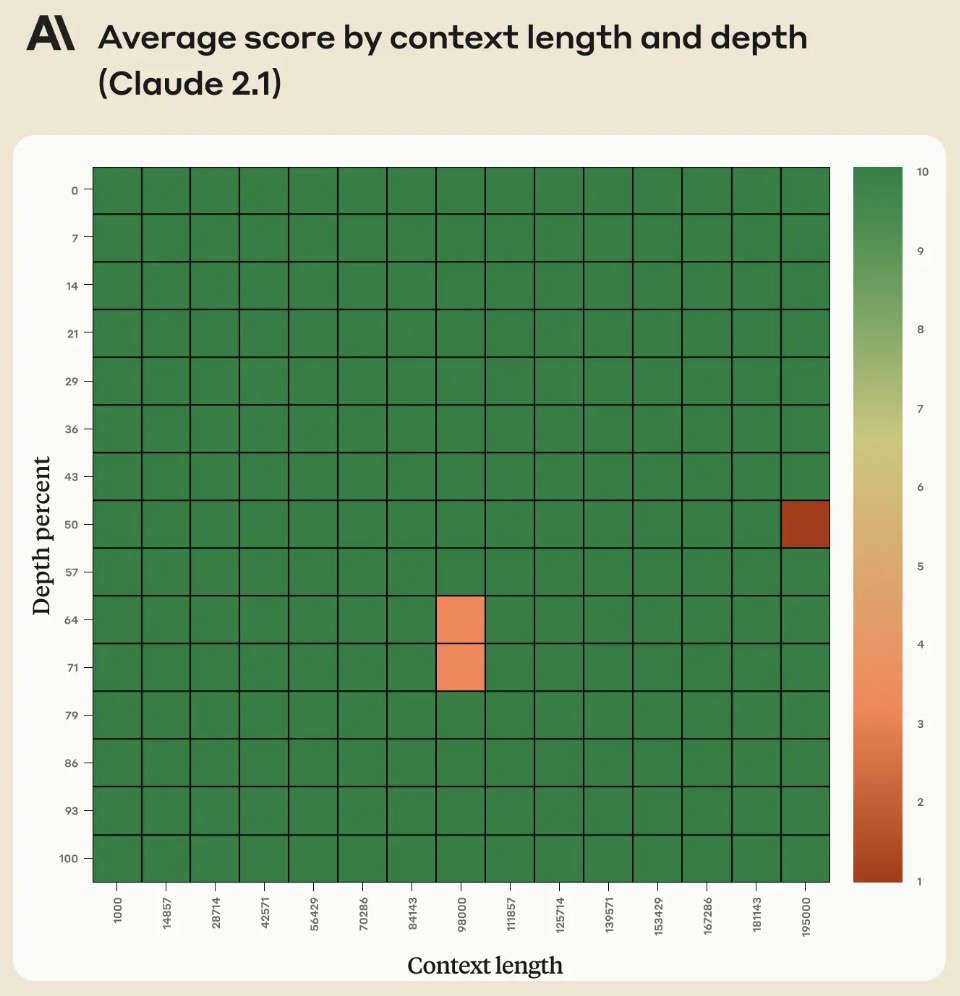

- However, in Anthropic’s Long context prompting for Claude 2.1 blog, Anthropic noted that adding “Here is the most relevant sentence in the context:” to the start of Claude’s response raised the score from 27% to 98% on the original evaluation! The figure below from the blog shows that Claude 2.1’s performance when retrieving an individual sentence across its full 200K token context window. This experiment uses the aforementioned prompt technique to guide Claude in recalling the most relevant sentence.

RAG in Multi-Turn Chatbots: Embedding Queries for Retrieval

-

In multi-turn chatbot environments, RAG must extend beyond addressing isolated, single-turn queries. Conversations are inherently dynamic—context accumulates, user objectives evolve, and intent may shift subtly across multiple interactions. This dynamic nature renders one design decision particularly critical: determining which input text should be embedded during the retrieval phase. This decision has a direct impact on both the relevance of the retrieved content and the overall quality of the generated response.

-

In contrast to single-turn systems, where embedding the current user input may suffice, multi-turn RAG systems face a more fluid and complex challenge. Limiting retrieval inputs to only the most recent user message is computationally efficient but often insufficient for capturing the nuances of ongoing discourse. Incorporating recent conversational turns offers improved contextual grounding, while advanced techniques such as summarization and query rewriting can significantly enhance retrieval precision.

-

There is no universally optimal approach—the most suitable strategy depends on factors such as the application’s specific requirements, available computational resources, and tolerance for system complexity. Nevertheless, the most robust implementations often adopt a layered methodology: integrating recent dialogue context, monitoring evolving user intent, and utilizing reformulated or enriched queries. This composite approach typically results in more accurate, contextually appropriate retrieval and, consequently, more coherent and effective responses.

-

The following sections outlines the key strategies and considerations for query embedding in multi-turn RAG chatbot systems.

Embedding the Latest User Turn Only

- The simplest approach is to embed just the latest user message. For example, if a user says, “What are the symptoms of Lyme disease?”, that exact sentence is passed to the retriever for embedding.

- Pros:

- Fast and computationally cheap.

- Reduces the risk of embedding irrelevant or stale context.

- Cons:

- Ignores conversational context and prior turns, which may contain critical disambiguating details (e.g., “Is it common in dogs?” following a discussion about pets).

Embedding Concatenated Recent Turns (Truncated Dialogue History)

- A more nuanced approach involves embedding the current user message along with a sliding window of recent turns (usually alternating user and assistant messages).

- For example:

User: My dog has been acting strange lately. Assistant: Can you describe the symptoms? User: He’s tired, limping, and has a fever. Could it be Lyme disease?- The retriever input would include all or part of the above.

- Pros:

- Preserves immediate context that can significantly improve retrieval relevance.

- Especially useful for resolving pronouns and follow-up queries.

- Cons:

- Can dilute the focus of the query if too many irrelevant prior turns are included.

- Risk of exceeding input length limits for embedding models.

Embedding a Condensed or Summarized History

- In this strategy, prior turns are summarized into a condensed form before concatenation with the current turn. This reduces token count while preserving key context.

- Can be achieved using simple heuristics, hand-written rules, or another lightweight LLM summarization pass.

- For example:

Condensed history: The user is concerned about their dog's health, showing signs of fatigue and limping. Current query: Could it be Lyme disease?- Embed the concatenated string: “The user is concerned… Could it be Lyme disease?”

- Pros:

- Retains relevant prior context while minimizing noise.

- Helps improve retrieval accuracy for ambiguous follow-up questions.

- Cons:

- Requires additional processing and potential summarization latency.

- Summarization quality can affect retrieval quality.

Embedding Structured Dialogue State

- This approach formalizes the conversation history into a structured format (like intent, entities, or user goals), which is then appended to the latest query before embedding.

- For instance:

[Intent: Diagnose pet illness] [Entity: Dog] [Symptoms: fatigue, limping, fever] Query: Could it be Lyme disease? - Pros:

- Allows precision targeting of relevant documents, especially in domain-specific applications.

- Supports advanced reasoning by aligning with KBs or ontology-driven retrieval.

- Cons:

- Requires reliable NLU and state-tracking pipelines.

- Adds system complexity.

Task-Optimized Embedding via Query Reformulation

- Some systems apply a query rewriting model that reformulates the latest turn into a fully self-contained question, suitable for retrieval.

- For example, turning “What about dogs?” into “What are the symptoms of Lyme disease in dogs?”

- These reformulated queries are then embedded for retrieval.

- Pros:

- Ensures clarity and focus in queries passed to the retriever.

- Significantly boosts retrieval performance in ambiguous or shorthand follow-ups.

- Cons:

- Introduces dependency on a high-quality rewrite model.

- Risk of introducing hallucination or incorrect reformulations.

Best Practices and Considerations

- Window Size: Most systems use a sliding window of 1-3 previous turns depending on token limits and task specificity.

- Query Length vs. Clarity Tradeoff: Longer queries with more context may capture nuance but risk introducing noise. Condensed or reformulated queries can help mitigate this.

- Personalization: In some advanced setups, user profiles or long-term memory can be injected into the retrieval query, but this must be carefully curated to avoid privacy or relevance pitfalls.

- System Goals: If the chatbot is task-oriented (e.g., booking travel), structured state may be best. If it is open-domain (e.g., a virtual assistant), concatenated dialogue or rewrite strategies tend to perform better.

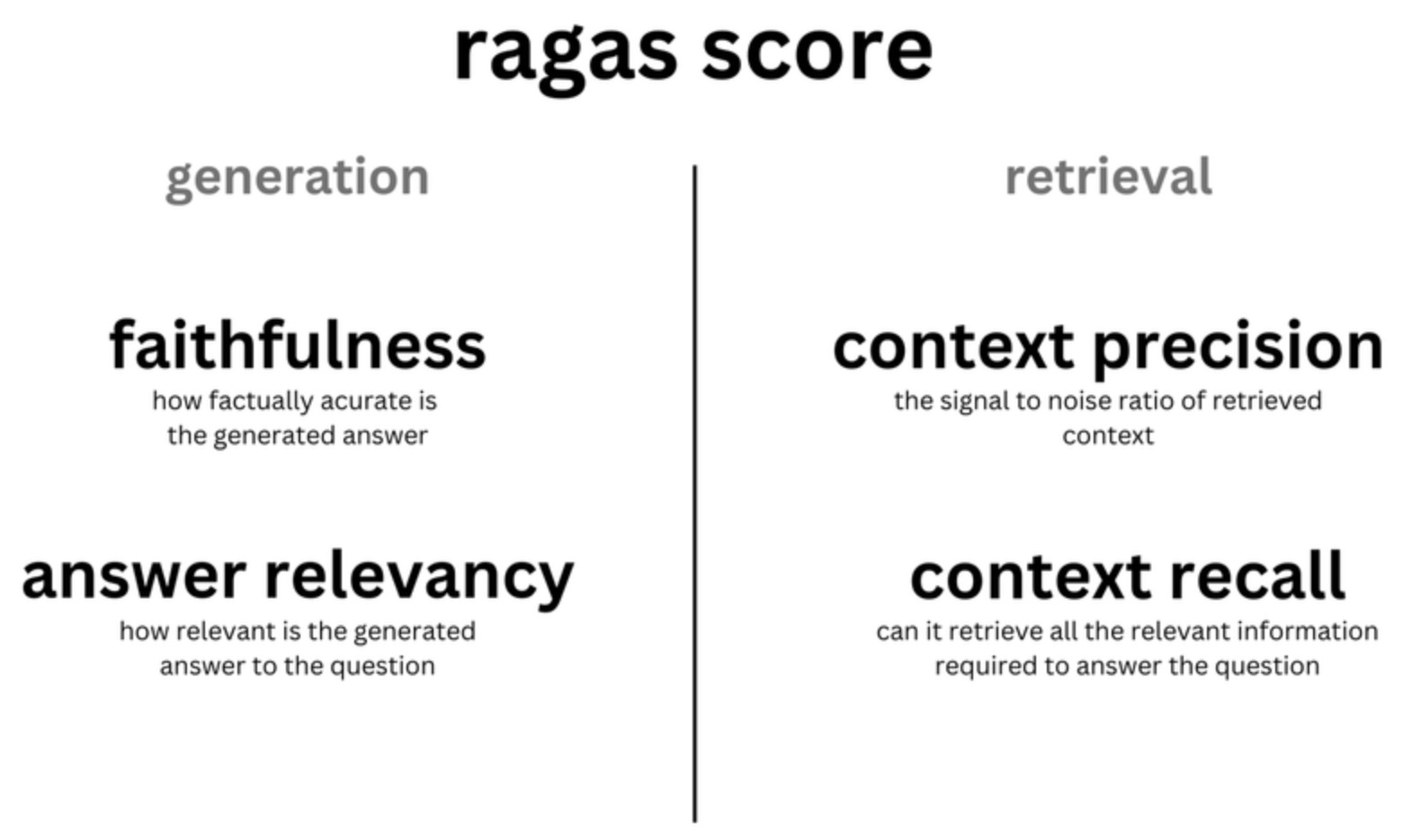

Component-Wise Evaluation

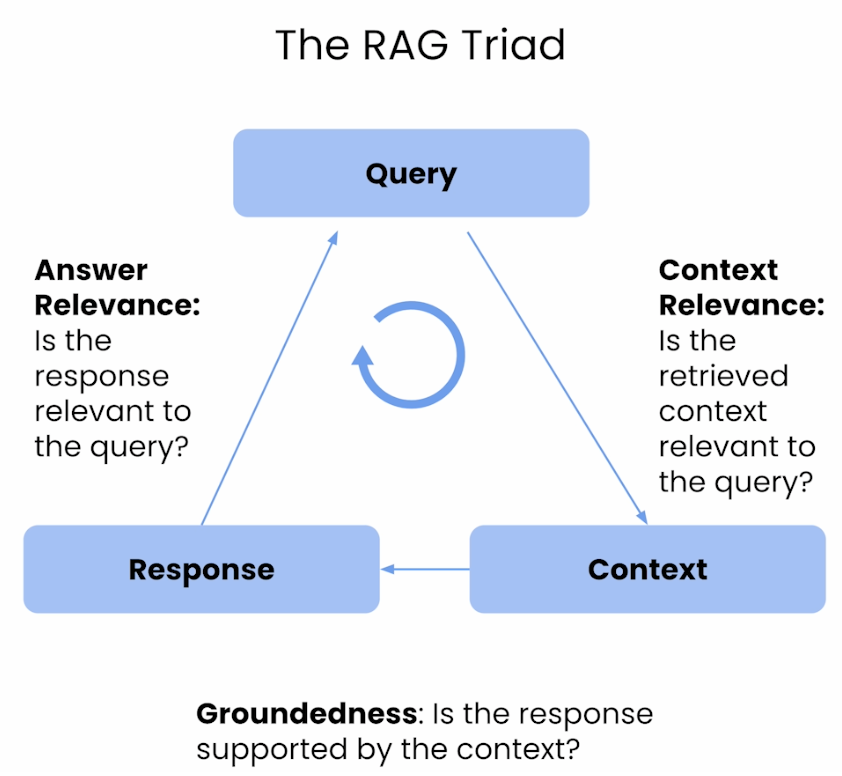

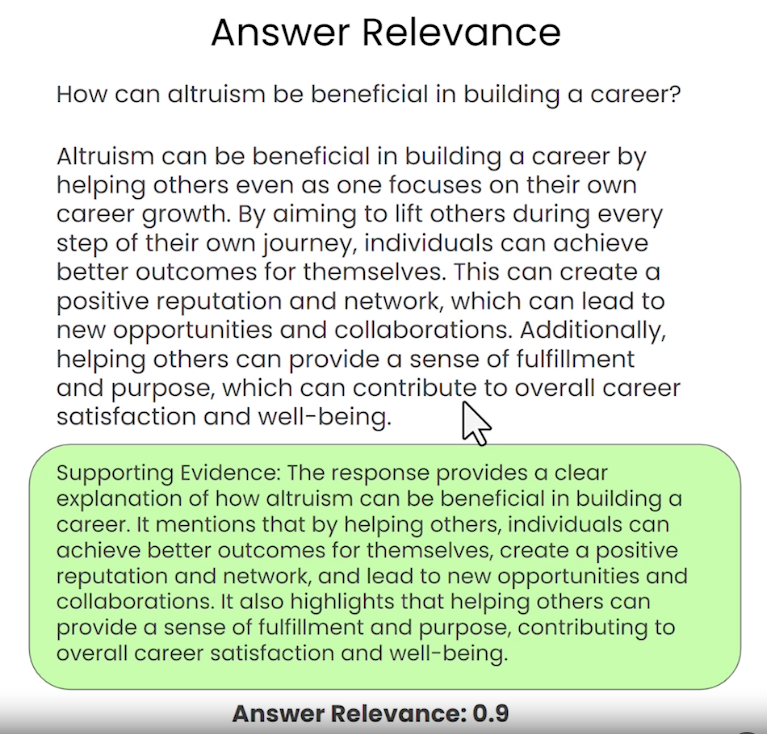

- Component-wise evaluation in RAG systems for LLMs involves assessing individual components of the system separately. This approach typically examines the performance of the retrieval component, which fetches relevant information from a database or corpus, and the generation component, which synthesizes responses based on the retrieved data. By evaluating these components individually, researchers can identify specific areas for improvement in the overall RAG system, leading to more efficient and accurate information retrieval and response generation in LLMs.

- While metrics such as Context Precision, Context Recall, and Context Relevance provide insights into the performance of the retrieval component of the RAG system, Groundedness, and Answer Relevance offer a view into the quality of the generation.

- Specifically,