Overview

- Hypernetworks (or hypernets) are neural networks that produce the weights for another neural network, which is termed the target network.

- They provide several advantages in deep learning, such as flexibility, adaptability, speedier training, sharing information between tasks, and reducing the model size.

- Hypernets have been effective across a range of deep learning challenges, from continual learning (where the model needs to learn tasks sequentially without forgetting) to zero-shot learning (where the model predicts classes it hasn’t seen during training) and even complex tasks in NLP and reinforcement learning.

- Hypernets and HyperDNNs (which we will discuss below as well) are related in spirit to PEFT but they address the challenge in a distinct manner.

Key features of Hypernets

- Soft Weight Sharing: Hypernets can train to produce weights for many DNNs, especially when tasks are related. This is different from traditional weight sharing and allows the transfer of information between tasks.

- Dynamic Architectures: Hypernets can create a neural network whose structure (like the number of layers) might change during training or when making predictions. This is helpful when you’re uncertain about the best network design beforehand.

- Data-Adaptive DNNs: Unlike typical DNNs that have set weights once training is done, HyperDNNs can tweak the primary network based on the input data.

- Uncertainty Quantification: Hypernets can be used to train networks that are aware of their prediction uncertainty. This is done by creating multiple variations of the main network. This feature is essential for critical applications, such as in healthcare.

- Parameter Efficiency: HyperDNNs may require fewer parameters compared to traditional DNNs, leading to faster training and potentially reduced computational needs.

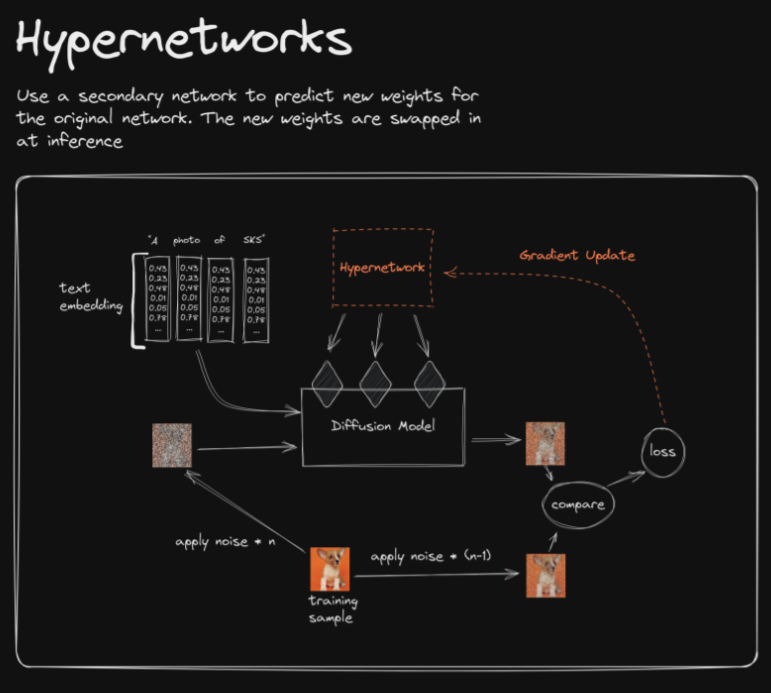

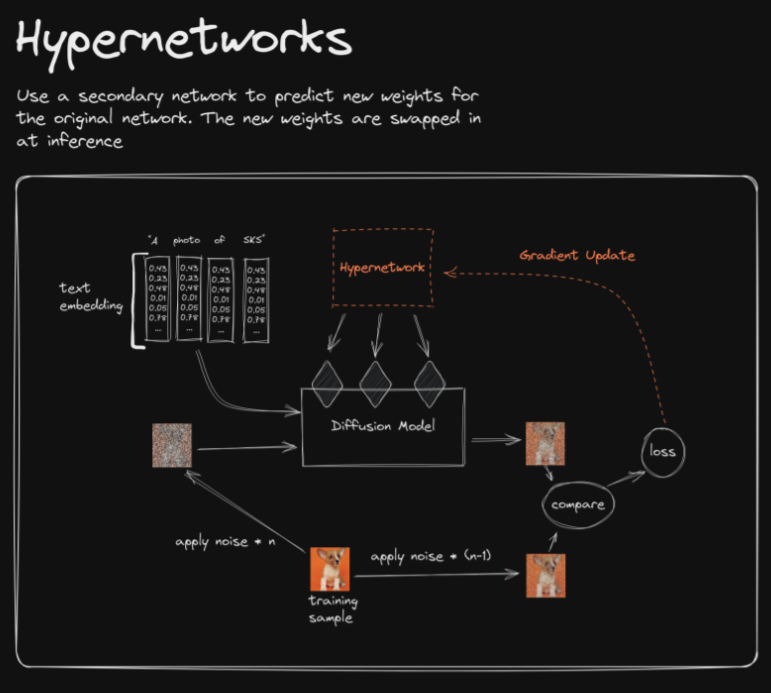

- The image below, source, displays hypernetworks in action for a diffusion model.

HyperDNN

- As previously described, hypernets generate the weights for a primary or target network. This means that instead of storing a full set of weights for different tasks or conditions, a hypernet can generate them dynamically.

- However, HyperDNNs, which are systems composed of hypernets and their target networks, can adapt to different tasks or conditions without necessarily increasing the number of parameters linearly with the number of tasks.

- While a hypernetwork is like a “controller” that decides the behavior of another network by determining its weights.

- A HyperDNN refers to the combined system of a hypernetwork (that generates weights) and its associated target neural network (that utilizes these weights for some task).

- The HyperDNN is the actual deep neural network that performs tasks, but its weights are dynamically generated or modulated by a hypernet. This means the behavior and performance of the HyperDNN can be adaptive and flexible, depending on how the hypernet is conditioned or designed.

- A HyperDNN is the full system that includes both the controller (hypernetwork) and the network being controlled (target network).

- Let’s look at an example by using the analogy of a radio:

- The hypernetwork is like the tuner dial, determining which frequency (or station) the radio will tune into.

- The HyperDNN is the entire radio system, including the tuner dial and the speaker that produces the sound for the chosen station.

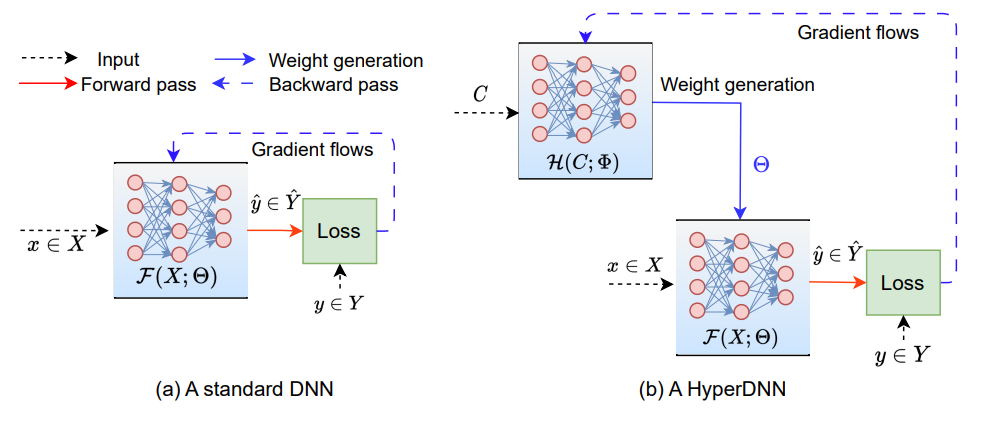

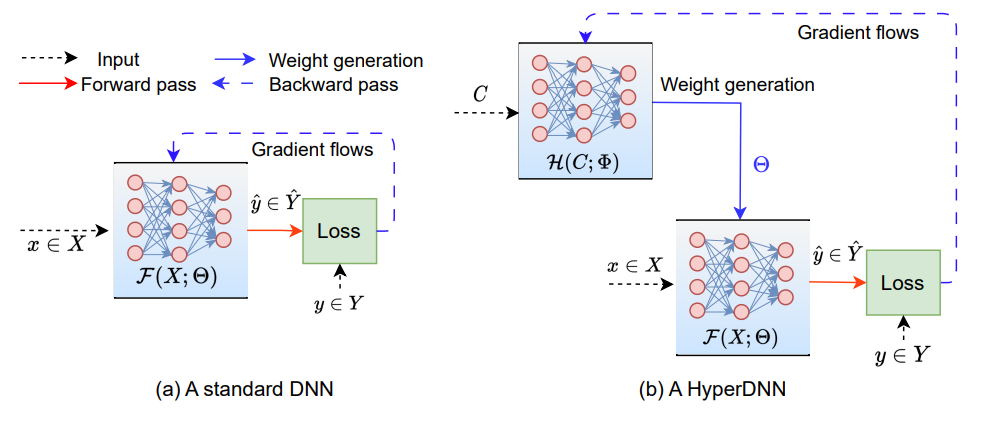

- The image below, source, displays a standard DNN learns weights \(\Theta\) directly through gradient flows, whereas a HyperDNN uses a hypernet with weights \(\Phi\) to dynamically generate the DNN’s weights \(\Theta\) during training.

Architectures of Hypernets

- Hypernetworks can be classified based on their architectures into four main types:

- MLPs (Multi-Layer Perceptrons): These use a fully connected structure where every input neuron connects to every output neuron, enabling extensive weight generation by utilizing the full input information.

- CNNs (Convolutional Neural Networks): These utilize convolutional layers to identify local and spatial patterns, making them ideal for tasks that involve spatial data like image or video analysis.

- RNNs (Recurrent Neural Networks): RNN hypernetworks contain recurrent connections, allowing them to process sequential information. This makes them apt for tasks like natural language processing or time series analysis.

- Attention-based Networks: These hypernetworks integrate attention mechanisms, enabling them to focus on relevant input features selectively. This helps in handling long-range dependencies and enhances the quality of the generated outputs.

- Each architecture has distinct advantages, allowing hypernetworks to generate weights in tune with the specific requirements of the target network and the data at hand.

- That being said, let’s look at a few pros anc cons of hypernets below.

Hypernets Pros and Cons

- Hypernets (Hypernetworks) Pros:

- Flexibility and Adaptability: They can generate weights dynamically, allowing for flexible and adaptable model designs.

- Soft Weight Sharing: Hypernets can be conditioned to generate weights for multiple related DNNs, enabling information sharing among tasks and promoting transfer learning.

- Dynamic Architectures: Capable of generating weights for a network whose architecture might change during training or inference.

- Data-adaptive: Unlike standard DNNs, which have fixed weights at inference, HyperDNNs can adapt to input data, making the model more versatile.

- Uncertainty Quantification: They can produce an ensemble of models by generating different sets of weights, aiding in estimating model uncertainty.

- Hypernets Cons:

- Increased Complexity: Introducing a network to generate weights for another network adds a layer of complexity to the model design.

- Training Stability: Training hypernets can be more challenging due to the indirect weight generation, potentially leading to instability.

- Resource Intensive: Despite potential weight compression, the introduction of hypernets may increase the overall computational requirements.

- Harder to Interpret: The added layer of abstraction might make the model harder to understand and interpret.

HyperDNNs Pros and Cons

- HyperDNNs Pros:

- Parameter Efficiency: HyperDNNs can achieve weight compression, potentially resulting in fewer parameters than standard DNNs.

- Adaptability: Since the weights are generated dynamically, HyperDNNs can better adapt to varying input data or tasks.

- Potential for Better Performance: By tailoring weights based on input data or conditions, HyperDNNs might achieve better performance on certain tasks compared to traditional DNNs.

- Versatility Across Tasks: HyperDNNs can be employed across a wide range of deep learning problems, from ensemble learning to neural architecture search.

- HyperDNNs Cons:

- Training Challenges: The indirect weight generation might make the training process more intricate and challenging.

- Possible Overfitting: Due to the added complexity and adaptability, there’s a potential risk of overfitting if not managed properly.

- Performance Variability: Since weights are generated dynamically, there might be variability in performance across different inputs or conditions.

- Resource Considerations: Even though they might have fewer weights, the computational cost of dynamically generating these weights can be resource-intensive.

- It’s essential to note that the choice between hypernets, HyperDNNs, and traditional DNNs would depend on the specific problem at hand, the available resources, and the desired outcomes.

References