Natural Language Processing • Tokenizer

- Overview

- Tokenization: The Specifics

- Sub-word Tokenization

- WordPiece

- Byte Pair Encoding

- Unigram Sub-word Tokenization

- SentencePiece

- Comparative Analysis Summary

- References

Overview

- This section focuses on the significance of tokenization in Natural Language Processing (NLP) and how it enables machines to understand language.

- Teaching machines to comprehend language is a complex task. The objective is to enable machines to read and comprehend the meaning of text.

- To facilitate language learning for machines, text needs to be divided into smaller units called tokens, which are then processed.

- Tokenization is the process of breaking text into tokens, and it serves as the input for language models like BERT.

- The extent of semantic understanding achieved by models is still not fully understood, although it is believed that they acquire syntactic knowledge at lower levels of the neural network and semantic knowledge at higher levels (source).

- Instead of representing text as a continuous string, it can be represented as a vector or list of its constituent vocabulary words. This transformation is known as tokenization, where each vocabulary word in a text becomes a token.

Tokenization: The Specifics

- Let’s take a closer look at how tokenization works in practice.

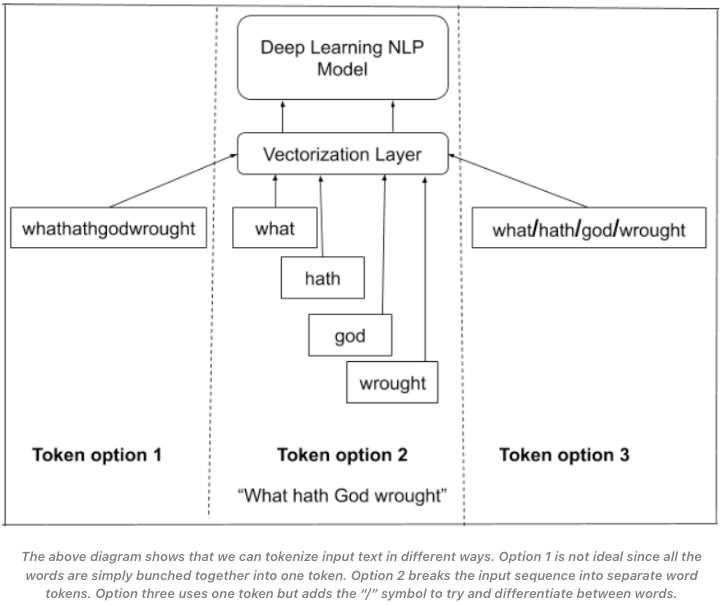

- To start off, there are many ways to tokenize text. You can split on spaces, insert a split character between words, or simply break the input sequence into separate words.

- This can be visualized by the image above (source).

- As we stated earlier, we use one of these options as a way to split the larger text into a smaller unit, a token, to serve as input to the model.

- Additionally, in order for the model to learn relationships between words in a sequence of text, we need to represent it as a vector.

- We do this in lieu of hard-coding grammatical rules into the system, since the complexity of doing so would be prohibitive and the rules would differ for every language.

- Instead, with a vector representation, the model can encode meaning across the dimensions of the vector.
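As a rough illustration of the two steps described above (splitting text into tokens, then turning tokens into something numeric), here is a minimal sketch. The toy vocabulary and the id assignment are assumptions made for the example; real tokenizers use a vocabulary learned from a large corpus.

```python
text = "she walked. he is a dog walker. i walk"

# Option 1: split on whitespace (punctuation stays attached to the word).
word_tokens = text.split()

# Option 2: split into individual characters.
char_tokens = list(text)

# Toy vocabulary: every distinct word token gets an integer id.
vocab = {tok: idx for idx, tok in enumerate(sorted(set(word_tokens)))}

# Encode the text as a list of ids. A model would look these ids up
# in an embedding table to obtain one vector per token.
ids = [vocab[tok] for tok in word_tokens]

print(word_tokens)
print(ids)
```

Note that ‘walked.’, ‘walker.’ and ‘walk’ receive unrelated ids here, which is the limitation that sub-word tokenization addresses.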

Sub-word Tokenization

- Sub-word tokenization is a method used to break words down into smaller sub-tokens. It is based on the concept that many words in a language share common prefixes or suffixes, and by breaking words into smaller units, we can handle rare and out-of-vocabulary words more effectively.

- By employing sub-word tokenization, we can better handle out-of-vocabulary (OOV) words by composing them from sub-words that are already in the vocabulary. For example, “anyplace” can be broken down into “any” and “place”. This approach also keeps the vocabulary, and therefore the model’s embedding table, smaller than a word-level vocabulary with the same coverage would require.

- Sub-word tokenizers are specifically designed to address the challenge of OOV words in this way, which enables the model to handle a broader range of vocabulary.

- There are several algorithms available for performing sub-word tokenization, each with its own strengths and characteristics. These algorithms offer different strategies for breaking down words into sub-tokens, and their selection depends on the specific requirements and goals of the NLP task at hand.
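To make the “anyplace” example concrete, the sketch below splits a word using greedy longest-match-first lookup against a small vocabulary. The function name `segment` and the toy vocabulary are hypothetical, and greedy matching is only one possible segmentation strategy; the algorithms in the following sections differ in how the vocabulary is learned and applied.

```python
def segment(word, vocab):
    """Greedy longest-match-first split of `word` into pieces from `vocab`.

    Returns None if some part of the word cannot be covered.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it matches a vocabulary entry.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            return None  # no vocabulary entry starts at this position
        pieces.append(word[start:end])
        start = end
    return pieces


toy_vocab = {"any", "place", "where", "some"}
print(segment("anyplace", toy_vocab))   # ['any', 'place']
print(segment("somewhere", toy_vocab))  # ['some', 'where']
print(segment("nowhere", toy_vocab))    # None
```

The last call fails because the toy vocabulary has no entry covering ‘n’ or ‘no’; practical sub-word vocabularies avoid this by always including the individual characters.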

WordPiece

- Initial Inventory: WordPiece starts with an inventory of all individual characters in the text.

- Build Language Model: A language model is created using this initial inventory.

- New Word Unit Creation: The algorithm iteratively creates new word units by combining existing units in the inventory. The selection criterion for the new word unit is based on which combination most increases the likelihood of the training data when added to the model.

- Iterative Process: This process continues until reaching a predefined number of word units or the likelihood increase falls below a certain threshold.

- WordPiece Tokenization Example

- Initial Inventory:

- WordPiece starts with individual characters as the initial inventory: ‘s’, ‘h’, ‘e’, ‘ ‘, ‘w’, ‘a’, ‘l’, ‘k’, ‘d’, ‘.’, ‘i’, ‘o’, ‘g’, ‘r’

- Building the Vocabulary:

- First Iteration:

- Suppose the most significant likelihood increase comes from combining ‘w’ and ‘a’ to form ‘wa’.

- New Inventory: ‘s’, ‘h’, ‘e’, ‘ ‘, ‘w’, ‘a’, ‘l’, ‘k’, ‘d’, ‘.’, ‘i’, ‘o’, ‘g’, ‘r’, ‘wa’ (a merge adds a unit; the single characters stay available, since ‘a’ is still needed for the word ‘a’)

- Second Iteration:

- Next, the combination ‘l’ and ‘k’ gives a good likelihood boost, forming ‘lk’.

- New Inventory: ‘s’, ‘h’, ‘e’, ‘ ‘, ‘w’, ‘a’, ‘l’, ‘k’, ‘d’, ‘.’, ‘i’, ‘o’, ‘g’, ‘r’, ‘wa’, ‘lk’

- Third Iteration:

- Let’s say combining ‘wa’ and ‘lk’ into ‘walk’ is the next most beneficial addition.

- New Inventory: ‘s’, ‘h’, ‘e’, ‘ ‘, ‘w’, ‘a’, ‘l’, ‘k’, ‘d’, ‘.’, ‘i’, ‘o’, ‘g’, ‘r’, ‘wa’, ‘lk’, ‘walk’

- Further Iterations:

- The process continues, adding more combinations like ‘er’, ‘ed’, etc., based on what increases the likelihood the most.

Tokenizing the Text:

- “she walked. he is a dog walker. i walk”

- With the current state of the vocabulary, the algorithm would tokenize the text as follows:

- ‘she’, ‘walk’, ‘ed’, ‘.’, ‘he’, ‘is’, ‘a’, ‘dog’, ‘walk’, ‘er’, ‘.’, ‘i’, ‘walk’

- Here, ‘she’, ‘he’, ‘is’, and ‘dog’ appear as single tokens on the assumption that further iterations also merged their characters into whole-word units, while ‘a’ and ‘i’ are single characters that were already in the initial inventory.

- ‘walked’ is broken down into ‘walk’ and ‘ed’, ‘walker’ into ‘walk’ and ‘er’, as these subwords (‘walk’, ‘ed’, ‘er’) are present in the expanded inventory.

- Note:

- In a real-world scenario, the WordPiece algorithm would perform many more iterations, and the decision to combine tokens depends on complex statistical properties of the training data.

- The examples given are simplified and serve to illustrate the process. The actual tokens generated would depend on the specific training corpus and the parameters set for the vocabulary size and likelihood thresholds.
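The “likelihood increase” criterion is often approximated by scoring each adjacent pair as count(ab) / (count(a) × count(b)), which rewards pairs whose parts rarely occur apart. The sketch below applies that score to the example text; the scoring formula is an assumption taken from common descriptions of WordPiece rather than from this article, and the function name `pair_scores` is hypothetical.

```python
from collections import Counter

corpus = "she walked. he is a dog walker. i walk".split()
# Every word starts out as a list of single-character units.
words = [list(w) for w in corpus]


def pair_scores(words):
    """Score each adjacent pair as count(ab) / (count(a) * count(b))."""
    unit_counts = Counter(u for w in words for u in w)
    pair_counts = Counter(p for w in words for p in zip(w, w[1:]))
    return {
        (a, b): n / (unit_counts[a] * unit_counts[b])
        for (a, b), n in pair_counts.items()
    }


scores = pair_scores(words)
best = max(scores, key=scores.get)
print(best, scores[best])
print(scores[("w", "a")])
```

On this tiny corpus the highest-scoring pair is (‘o’, ‘g’) with a score of 1.0, not (‘w’, ‘a’) at 0.25 as the illustrative walkthrough supposes: ‘o’ and ‘g’ only ever occur together, whereas ‘a’ also appears on its own. This is the main practical difference from a purely frequency-based criterion.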

Byte Pair Encoding

- Initial Inventory: Similar to WordPiece, BPE begins with all individual characters that occur in the training text.

- Frequency-Based Merges: Instead of using likelihood to guide its merges, BPE iteratively combines the most frequently occurring pairs of units in the current inventory.

- Fixed Number of Merges: This process is repeated for a predetermined number of merges.

- Consider the input text: “she walked. he is a dog walker. i walk”

- BPE Merges:

- First Merge: If ‘w’ and ‘a’ are the most frequent pair, they merge to form ‘wa’.

- Second Merge: Then, if ‘l’ and ‘k’ frequently occur together, they merge to form ‘lk’.

- Third Merge: Next, ‘wa’ and ‘lk’ might merge to form ‘walk’.

- Vocabulary: At this stage, the vocabulary includes all individual characters, plus ‘wa’, ‘lk’, and ‘walk’.

- Handling Rare/Out-of-Vocabulary (OOV) Words

- Subword Segmentation: Any word not in the vocabulary is broken down into subword units based on the vocabulary.

- Example: For a rare word like ‘walking’, if ‘walking’ is not in the vocabulary but ‘walk’ and ‘ing’ are, it gets segmented into ‘walk’ and ‘ing’.

- Benefits

- Common Subwords: This method ensures that words with common roots or morphemes, like ‘walked’, ‘walker’, ‘walks’, are represented using common subwords (e.g., ‘walk@@’, where ‘@@’ is a marker that some BPE implementations append to a piece that does not end the word). This helps the model to better understand and process these variations, as ‘walk@@’ would appear more frequently in the training data than any single inflected form.
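The merge loop described above can be sketched in a few lines. This is a minimal illustration, not a production implementation: it pre-splits on whitespace, omits any end-of-word or continuation marker, and breaks frequency ties in favour of the pair seen first. The names `train_bpe`, `merge_pair` and `encode` are hypothetical.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one unit."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merges from whitespace-split `text`."""
    word_freqs = Counter(tuple(w) for w in text.split())
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in word_freqs.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        # Most frequent pair; ties go to the pair encountered first.
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        new_freqs = Counter()
        for symbols, freq in word_freqs.items():
            new_freqs[merge_pair(symbols, best)] += freq
        word_freqs = new_freqs
    return merges


def encode(word, merges):
    """Segment `word` by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


merges = train_bpe("she walked. he is a dog walker. i walk", num_merges=3)
print(merges)
print(encode("walking", merges))
```

With this tie-breaking rule the three merges are ‘w’+‘a’, ‘wa’+‘l’, and ‘wal’+‘k’, so the intermediate units differ from the ‘lk’ path in the walkthrough but the result is still ‘walk’. Because ‘ing’ was never learned in three merges, ‘walking’ is encoded as ‘walk’, ‘i’, ‘n’, ‘g’, falling back to single characters for the unseen suffix.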

Unigram Sub-word Tokenization

- The algorithm starts from a large candidate vocabulary of sub-words (for example, all characters plus the most frequent substrings) and assigns each candidate a probability.

- It then iteratively prunes this vocabulary, rather than growing it through merges, until a target number of sub-words is reached.

- Unigram: A fully probabilistic model that does not select tokens by raw pair frequency. Instead, it trains a unigram language model over the candidate tokens, removes the tokens whose removal reduces the overall likelihood the least, and repeats until it reaches the final token limit. (source)
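Under a unigram model, each token has its own probability and a segmentation is scored by the product of its token probabilities, so segmenting a word means finding the highest-scoring split. The sketch below does this with a Viterbi-style dynamic program. The probabilities are made up for illustration (they are not normalized or learned), and the function name `viterbi_segment` is hypothetical.

```python
import math


def viterbi_segment(word, log_probs):
    """Return the most probable split of `word` into tokens from `log_probs`."""
    n = len(word)
    # best[i] = (best log-probability of word[:i], start of its last token)
    best = [(-math.inf, None)] * (n + 1)
    best[0] = (0.0, None)
    for end in range(1, n + 1):
        for start in range(end):
            piece = word[start:end]
            if piece in log_probs and best[start][0] > -math.inf:
                score = best[start][0] + log_probs[piece]
                if score > best[end][0]:
                    best[end] = (score, start)
    if best[n][0] == -math.inf:
        return None  # the word cannot be covered by the vocabulary
    # Walk the back-pointers from the end of the word to the beginning.
    pieces, end = [], n
    while end > 0:
        start = best[end][1]
        pieces.append(word[start:end])
        end = start
    return pieces[::-1]


# Made-up probabilities, for illustration only.
toy_probs = {"walk": 0.2, "ed": 0.1, "er": 0.1}
toy_probs.update({ch: 0.05 for ch in "waledr"})
toy_probs["k"] = 0.05
log_probs = {tok: math.log(p) for tok, p in toy_probs.items()}

print(viterbi_segment("walked", log_probs))  # ['walk', 'ed']
print(viterbi_segment("xyz", log_probs))     # None
```

The split ‘walk’ + ‘ed’ wins because two moderately likely tokens multiply to a higher probability than six individually unlikely characters. Training repeats this kind of scoring over the whole corpus to decide which candidate tokens can be pruned at the least cost.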

SentencePiece

- Unsegmented Text Input: Unlike WordPiece and BPE, which typically operate on pre-tokenized or pre-segmented text (like words), SentencePiece works directly on raw, unsegmented text (including spaces). This approach allows it to model the text and spaces as equal citizens, essentially learning to tokenize from scratch without any assumption about word boundaries.

Initial Process:

- Initial Inventory: SentencePiece starts with an inventory of individual characters, including spaces, from the text.

- No Pre-defined Word Boundaries: Since it operates on raw text, there are no pre-existing word boundaries. Every character, including space, is treated as a distinct symbol.

Example:

Consider the input text: “she walked. he is a dog walker. i walk”

Vocabulary Building:

- First Iteration:

- SentencePiece might begin by combining frequent pairs of characters, including spaces. For example, ‘e’ and ‘ ‘ (space) might be a frequent combination.

- New Inventory: ‘s’, ‘h’, ‘e’, ‘ ‘, ‘e ‘, ‘w’, ‘a’, ‘l’, ‘k’, ‘d’, ‘.’, ‘i’, ‘o’, ‘g’, ‘r’

- Subsequent Iterations:

- The process continues, combining characters and character sequences into longer pieces.

- For example, ‘w’, ‘a’, ‘l’, ‘k’ might combine to form ‘walk’.

- New Inventory: ‘s’, ‘h’, ‘e’, ‘ ‘, ‘e ‘, ‘w’, ‘a’, ‘l’, ‘k’, ‘d’, ‘.’, ‘i’, ‘o’, ‘g’, ‘r’, ‘walk’, …

- Until Vocabulary Size is Reached:

- This iterative process of combining continues until a predefined vocabulary size is reached.

Tokenizing the Text:

- “she walked. he is a dog walker. i walk”

- SentencePiece might tokenize this as: ‘she’, ‘walk’, ‘ed.’, ‘he’, ‘is’, ‘a’, ‘dog’, ‘walk’, ‘er.’, ‘i’, ‘walk’

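- Notice how punctuation can be absorbed into a piece (‘ed.’ and ‘er.’), because no pre-tokenization step separates it from the preceding letters. This listing omits the spaces for readability; SentencePiece itself keeps them, encoded as the meta symbol ‘▁’ (U+2581) inside pieces such as ‘▁walk’, so that the original text can be restored exactly.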

Handling Rare/Out-of-Vocabulary (OOV) Words:

- Robust to OOV: Like WordPiece and BPE, any word not in the vocabulary is broken down into subword units. However, since SentencePiece does not rely on pre-segmented text, its approach to handling OOV words is more flexible and can capture nuances in how spaces and punctuation are used.

Benefits:

- No Need for Pre-tokenization: SentencePiece eliminates the need for language-specific pre-tokenization. This makes it particularly useful for languages where word boundaries are not clear or in multilingual contexts.

- Uniform Treatment of Characters: By treating spaces and other characters equally, SentencePiece can capture a broader range of linguistic patterns, which is beneficial for modeling diverse languages and text types.

In summary, SentencePiece offers a unique approach to tokenization by working directly on raw text and treating all characters, including spaces, on an equal footing. This method is particularly beneficial for languages with complex word structures and for multilingual models.
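The idea of treating spaces as ordinary symbols can be sketched without the library itself. In the example below, spaces are replaced by the meta symbol ‘▁’ (U+2581), which is the symbol SentencePiece uses for whitespace, and a leading ‘▁’ is added so that every word starts with it. The piece vocabulary, the greedy longest-match segmentation, and the function names are assumptions made for illustration; the library learns its pieces with BPE or a unigram model rather than greedy lookup.

```python
SPACE = "\u2581"  # the "▁" meta symbol that stands in for a space


def to_pieces(text, vocab):
    """Escape spaces, then split greedily into the longest known pieces."""
    escaped = SPACE + text.replace(" ", SPACE)
    pieces, start = [], 0
    while start < len(escaped):
        end = len(escaped)
        # Shrink the candidate; fall back to a single character if needed.
        while end > start + 1 and escaped[start:end] not in vocab:
            end -= 1
        pieces.append(escaped[start:end])
        start = end
    return pieces


def from_pieces(pieces):
    """Concatenate pieces, restore spaces, and drop the leading one."""
    return "".join(pieces).replace(SPACE, " ")[1:]


# Hypothetical piece vocabulary for the running example.
toy_vocab = {SPACE + w for w in ("she", "walk", "he", "is", "a", "dog", "i")}
toy_vocab |= {"ed", "er", "."}

text = "she walked. he is a dog walker. i walk"
pieces = to_pieces(text, toy_vocab)
print(pieces)
print(from_pieces(pieces) == text)
```

Because the whitespace is stored inside the pieces, detokenization is a plain concatenation followed by one replacement, with no language-specific rules about where spaces belong.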

Comparative Analysis Summary

- Below is a comparative analysis of the aforementioned four algorithms.

Unigram Subword Tokenization

- It starts with a large set of candidate sub-words and iteratively removes the candidates that contribute least to the likelihood of the training data.

- It assigns each sub-word a probability estimated from the training text and segments a word into its most probable sequence of sub-words.

- It is used less often than BPE, and in practice it is usually encountered through SentencePiece, which can be trained with it.

- It has been reported to have good performance in some NLP tasks such as language modeling and text-to-speech.

Byte Pair Encoding (BPE)

- How It Works: BPE is a data compression technique that has been adapted for use in NLP. It starts with a base vocabulary of individual characters and iteratively merges the most frequent pair of tokens to form new, longer tokens. This process continues for a specified number of merge operations.

- Advantages: BPE is effective in handling rare or unknown words, as it can decompose them into subword units that it has seen during training. It also strikes a balance between the number of tokens (length of the input sequence) and the size of the vocabulary.

- Use in NLP: BPE is widely used in models like GPT-2 and GPT-3.

WordPiece

- How It Works: WordPiece is similar to BPE but differs slightly in its approach to creating new tokens. Instead of just merging the most frequent pairs, WordPiece looks at the likelihood of the entire vocabulary and adds the token that increases the likelihood of the data the most.

- Advantages: This method often leads to a more efficient segmentation of words into subwords compared to BPE. It’s particularly good at handling words not seen during training.

- Use in NLP: WordPiece is used in models like BERT and Google’s neural machine translation system.

SentencePiece

- How It Works: SentencePiece is a tokenization method that does not rely on pre-tokenized text. It can directly process raw text (including spaces and special characters) into tokens. SentencePiece can be trained to use either the BPE or unigram language model methodologies.

- Advantages: One of the key benefits of SentencePiece is its ability to handle multiple languages and scripts without needing pre-tokenization or language-specific logic. This makes it particularly useful for multilingual models.

- Use in NLP: SentencePiece is used in models like ALBERT and T5.

Comparison

- Granularity: BPE and WordPiece focus on subword units, while SentencePiece includes spaces and other non-text symbols in its vocabulary, allowing it to tokenize raw text directly.

- Handling of Rare Words: All three methods are effective in handling rare or unknown words by breaking them down into subword units.

- Training Data Dependency: BPE and WordPiece rely more heavily on the specifics of the training data, while SentencePiece’s ability to tokenize raw text makes it more flexible and adaptable to various languages and scripts.

- Use Cases: The choice of tokenization method depends on the specific requirements of the language model and the nature of the text it will process. For example, SentencePiece’s ability to handle raw text without pre-processing makes it a good choice for models dealing with diverse languages and scripts.

Each of these tokenization methods plays a crucial role in the preprocessing step of NLP model development, impacting the model’s performance, particularly in terms of its ability to generalize and handle diverse linguistic phenomena.