Recsys - Embeddings

- Overview

- Factorization Machines vs. Matrix Factorization

- Representing Demographic Data

- Content-Based Filtering

- Comparative Analysis

Overview

- This article covers different methods for generating embeddings in recommender systems.

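- Embeddings are a key component in many recommender systems. They provide low-dimensional vector representations of users and items that capture latent characteristics (e.g. a user's taste or an item's genre), so that user-item affinity can be scored with a simple operation such as a dot product. Here are some common embedding techniques used in recommenders: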

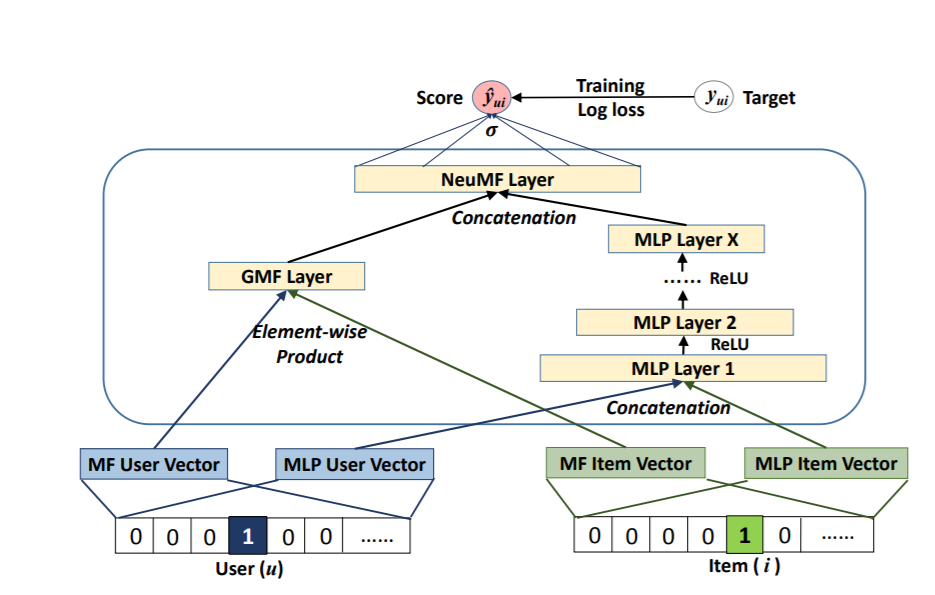

Neural Collaborative Filtering (NCF)

- Input: User-item interaction data (e.g. ratings, clicks)

- Computation: Trains a neural network on the interaction data to learn user and item embeddings that predict interactions. The NeuMF variant combines a generalized matrix factorization (GMF) branch with a multi-layer perceptron (MLP) branch.

- Output: Learned user and item embeddings.

- Advantages: Captures complex non-linear patterns. Performs well on sparse data.

- Limitations: Requires large amounts of training data. Computationally expensive.
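To make the two-branch idea concrete, here is a minimal, untrained sketch of an NCF-style (NeuMF) forward pass in plain Python. It assumes one GMF branch (element-wise product of embeddings) and an MLP branch with a single ReLU hidden layer, fused by a sigmoid output. The class name `NeuMFSketch`, the sizes `dim` and `hidden`, and the random initialization are all hypothetical; training (typically a log loss over observed and sampled negative interactions) is omitted.

```python
import math
import random


class NeuMFSketch:
    """Untrained NeuMF-style scorer: a GMF branch plus an MLP branch (hypothetical sizes)."""

    def __init__(self, n_users, n_items, dim=4, hidden=8, seed=0):
        rng = random.Random(seed)

        def rand_vec(n):
            return [rng.gauss(0.0, 0.1) for _ in range(n)]

        # Separate embedding tables for the two branches, as in NeuMF.
        self.gmf_user = [rand_vec(dim) for _ in range(n_users)]
        self.gmf_item = [rand_vec(dim) for _ in range(n_items)]
        self.mlp_user = [rand_vec(dim) for _ in range(n_users)]
        self.mlp_item = [rand_vec(dim) for _ in range(n_items)]
        # One hidden layer mapping the concatenated embeddings (2 * dim) to `hidden` units.
        self.w_hidden = [rand_vec(2 * dim) for _ in range(hidden)]
        self.b_hidden = [0.0] * hidden
        # Output layer over the concatenation [GMF vector, MLP hidden vector].
        self.w_out = rand_vec(dim + hidden)
        self.b_out = 0.0

    def predict(self, u, i):
        # GMF branch: element-wise product of user and item embeddings.
        gmf = [a * b for a, b in zip(self.gmf_user[u], self.gmf_item[i])]
        # MLP branch: concatenate the embeddings, then apply a ReLU hidden layer.
        x = self.mlp_user[u] + self.mlp_item[i]
        h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w_hidden, self.b_hidden)]
        # Fuse both branches and squash to an interaction probability.
        z = sum(w * f for w, f in zip(self.w_out, gmf + h)) + self.b_out
        return 1.0 / (1.0 + math.exp(-z))


model = NeuMFSketch(n_users=3, n_items=5)
print(model.predict(0, 2))  # a probability in (0, 1); arbitrary until the weights are trained
```

Keeping separate embedding tables per branch lets the GMF and MLP parts learn different representations; after training, either table (or their concatenation) can serve as the user and item embeddings.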

Matrix Factorization (MF)

- Input: User-item interaction matrix.

- Computation: Decomposes the matrix into low-rank user and item embedding matrices using techniques such as singular value decomposition (SVD) or alternating least squares (ALS).

- Output: User and item embeddings.

- Advantages: Simple and interpretable.

- Limitations: Struggles with very sparse data (e.g. users or items with few interactions) and captures only linear, dot-product interactions.
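The sketch below illustrates the factorization with a swapped-in technique: instead of SVD or ALS, it fits rank-`k` user and item matrices by stochastic gradient descent (SGD) on the observed entries only. The toy ratings and the hyperparameters (`k`, `lr`, `reg`, `epochs`) are made up for illustration.

```python
import random


def factorize(ratings, n_users, n_items, k=2, lr=0.05, reg=0.01, epochs=200, seed=0):
    """Learn user/item embeddings P, Q so that dot(P[u], Q[i]) ~ r for observed (u, i, r)."""
    rng = random.Random(seed)
    P = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    data = list(ratings)
    for _ in range(epochs):
        rng.shuffle(data)
        for u, i, r in data:
            err = r - sum(p * q for p, q in zip(P[u], Q[i]))
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on the squared error with L2 regularization on both factors.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


def rmse(ratings, P, Q):
    """Root-mean-squared error of the reconstruction on the given (u, i, r) triples."""
    sq = [(r - sum(p * q for p, q in zip(P[u], Q[i]))) ** 2 for u, i, r in ratings]
    return (sum(sq) / len(sq)) ** 0.5


# Toy (user, item, rating) triples; unobserved cells are simply absent.
ratings = [(0, 0, 5), (0, 1, 3), (1, 0, 4), (1, 2, 1), (2, 1, 1), (2, 2, 5)]
P, Q = factorize(ratings, n_users=3, n_items=3)
print(round(rmse(ratings, P, Q), 3))  # training RMSE on the observed entries
```

Fitting only the observed cells is why SGD or ALS is usually preferred over a plain SVD for sparse interaction data, since a classical SVD needs every cell of the matrix filled in.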

Factorization Machines (FM)

- Input: User features, item features, interactions.

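- Computation: Models every pairwise feature interaction as the dot product of two learned latent vectors (a factorized interaction matrix), on top of a linear term. Captures both linear effects and second-order feature interactions.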

- Output: A latent vector for every feature, including the user and item ID features, which serve as the user and item embeddings.

- Advantages: Handles sparse and high-dimensional data well. Flexible modeling of feature interactions.

- Limitations: Standard FMs model only second-order interactions, so they are less capable on highly complex data.
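For a feature vector $x$, a second-order FM scores $\hat{y}(x) = w_0 + \sum_j w_j x_j + \sum_{j<l} \langle v_j, v_l \rangle x_j x_l$, and the pairwise term can be computed in $O(kn)$ time via the identity $\tfrac{1}{2}\sum_f \big[(\sum_j v_{j,f} x_j)^2 - \sum_j v_{j,f}^2 x_j^2\big]$. The sketch below implements both the fast form and a naive all-pairs loop to show they agree; the feature layout and parameter values are hypothetical.

```python
def fm_predict(x, w0, w, V):
    """Second-order FM score for a dense feature vector x.

    w0: global bias, w: per-feature linear weights,
    V: one latent vector (embedding) per feature.
    """
    k = len(V[0])
    linear = w0 + sum(wj * xj for wj, xj in zip(w, x))
    # Pairwise term via the O(k * n) identity:
    # sum_{j<l} <v_j, v_l> x_j x_l = 0.5 * sum_f [(sum_j v_jf x_j)^2 - sum_j (v_jf x_j)^2]
    pairwise = 0.0
    for f in range(k):
        s = sum(V[j][f] * x[j] for j in range(len(x)))
        s_sq = sum((V[j][f] * x[j]) ** 2 for j in range(len(x)))
        pairwise += 0.5 * (s * s - s_sq)
    return linear + pairwise


def fm_predict_naive(x, w0, w, V):
    """Same score, summing every feature pair explicitly (O(k * n^2)), for checking."""
    score = w0 + sum(wj * xj for wj, xj in zip(w, x))
    for j in range(len(x)):
        for l in range(j + 1, len(x)):
            score += sum(a * b for a, b in zip(V[j], V[l])) * x[j] * x[l]
    return score


# Hypothetical layout: [user_0, user_1, item_0, item_1, age_scaled]
x = [1.0, 0.0, 0.0, 1.0, 0.3]
w0, w = 0.1, [0.2, -0.1, 0.05, 0.3, 0.4]
V = [[0.1, 0.2], [0.0, -0.3], [0.5, 0.1], [0.2, 0.4], [-0.1, 0.3]]
print(fm_predict(x, w0, w, V), fm_predict_naive(x, w0, w, V))  # equal up to float rounding
```

The linear-time form is what makes FMs practical on sparse, high-dimensional inputs: only non-zero features contribute, so a real implementation would loop over the non-zero entries instead of the dense vector used here.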

Graph Neural Networks (GNNs)

- Input: User-item interaction graph.

- Computation: Propagates embeddings over the graph using neighbor aggregation, graph convolutions, etc.

- Output: User and item node embeddings.

- Advantages: Captures graph relations and structure.

- Limitations: Requires the data to be modeled as a graph. Computationally intensive, especially on large graphs.
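One concrete instance of neighbor aggregation is LightGCN-style propagation, used here as an assumption since the section does not name a specific GNN. Each layer sets a node's embedding to the symmetric-normalized sum of its neighbors' embeddings, with no weight matrices or non-linearities, and the final embedding averages all layers. The toy graph and `num_layers` are hypothetical; the layer-0 embeddings would normally be learned, e.g. with a BPR loss.

```python
import math


def lightgcn_propagate(edges, user_emb, item_emb, num_layers=2):
    """Propagate layer-0 embeddings over a bipartite user-item graph, LightGCN-style.

    edges: (user, item) interaction pairs. Returns the layer-averaged
    user and item embeddings.
    """
    dim = len(user_emb[0])
    user_nbrs = [[] for _ in user_emb]
    item_nbrs = [[] for _ in item_emb]
    for u, i in edges:
        user_nbrs[u].append(i)
        item_nbrs[i].append(u)

    def layer(src_nbrs, dst_nbrs, dst_emb):
        # Each source node sums its neighbors' embeddings, each scaled by
        # 1 / sqrt(deg(source) * deg(neighbor)); isolated nodes get zeros.
        out = []
        for nbrs in src_nbrs:
            acc = [0.0] * dim
            for n in nbrs:
                c = 1.0 / math.sqrt(len(nbrs) * len(dst_nbrs[n]))
                for f in range(dim):
                    acc[f] += c * dst_emb[n][f]
            out.append(acc)
        return out

    users, items = user_emb, item_emb
    user_sum = [list(row) for row in users]
    item_sum = [list(row) for row in items]
    for _ in range(num_layers):
        # Both sides are updated from the previous layer's embeddings.
        users, items = layer(user_nbrs, item_nbrs, items), layer(item_nbrs, user_nbrs, users)
        for total, row in zip(user_sum + item_sum, users + items):
            for f in range(dim):
                total[f] += row[f]
    scale = 1.0 / (num_layers + 1)
    final_users = [[v * scale for v in row] for row in user_sum]
    final_items = [[v * scale for v in row] for row in item_sum]
    return final_users, final_items


# Toy graph: user 0 interacted with items 0 and 1, user 1 with item 1.
edges = [(0, 0), (0, 1), (1, 1)]
user_emb = [[1.0, 0.0], [0.0, 1.0]]
item_emb = [[1.0, 1.0], [0.0, 2.0]]
users, items = lightgcn_propagate(edges, user_emb, item_emb, num_layers=1)
print(users[1])  # ≈ [0.0, 1.207]: mean of user 1's own vector and item 1's normalized vector
```

Stacking more layers lets information flow from multi-hop neighbors (e.g. other users who liked the same items), which is how GNNs capture the relational structure described above.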

Factorization Machines vs. Matrix Factorization

Key differences:

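- Modeling approach: MF directly factorizes the user-item interaction matrix. FM models interactions between arbitrary features.

- Handling features: MF uses only user and item IDs, with no side features. FM can include any features (e.g. demographics, context, item attributes) and factorizes their pairwise interactions.

- Data representation: MF uses the interaction matrix. FM uses feature vectors.

- Flexibility: MF captures only user-item interactions. FM also captures pairwise (second-order) interactions among all features.

- Applications: MF suits pure collaborative filtering. FM suits tasks with rich side features, such as click-through-rate prediction.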
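One way to see how the two relate: if the FM feature vector contains only a one-hot user ID and a one-hot item ID, the only non-zero pairwise term is the user-item pair, so the FM score collapses to a biased MF score $w_0 + w_u + w_i + \langle v_u, v_i \rangle$. The sketch below checks this numerically; all parameter values and helper names (`fm_score`, `one_hot_pair`, `biased_mf_score`) are hypothetical.

```python
import random


def fm_score(x, w0, w, V):
    """Naive second-order FM: bias + linear terms + all pairwise latent interactions."""
    score = w0 + sum(wj * xj for wj, xj in zip(w, x))
    for j in range(len(x)):
        for l in range(j + 1, len(x)):
            score += sum(a * b for a, b in zip(V[j], V[l])) * x[j] * x[l]
    return score


n_users, n_items, k = 3, 4, 2
n = n_users + n_items
rng = random.Random(42)
w0 = 0.5
w = [rng.uniform(-1, 1) for _ in range(n)]  # user biases first, then item biases
V = [[rng.uniform(-1, 1) for _ in range(k)] for _ in range(n)]  # user vectors, then item vectors


def one_hot_pair(u, i):
    # Feature vector with only the user ID and the item ID switched on.
    x = [0.0] * n
    x[u] = 1.0
    x[n_users + i] = 1.0
    return x


def biased_mf_score(u, i):
    # Biased MF: global bias + user bias + item bias + dot(user vector, item vector).
    pu, qi = V[u], V[n_users + i]
    return w0 + w[u] + w[n_users + i] + sum(a * b for a, b in zip(pu, qi))


print(fm_score(one_hot_pair(1, 2), w0, w, V), biased_mf_score(1, 2))  # equal up to rounding
```

Adding more columns to the feature vector (demographics, context) is exactly what lets FM go beyond MF while keeping the same factorized form.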

Representing Demographic Data

Approaches for generating user embeddings from demographics:

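- One-hot encoding: Simple, but produces sparse, high-dimensional vectors.

- Embedding layers: Map each categorical attribute value to a learned dense, low-dimensional vector; trained jointly with the model, they can capture non-linear relationships between attribute values.

- Pretrained embeddings: Leverage semantic relationships learned from large corpora (e.g. for text-valued attributes such as occupation).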

- Autoencoders: Learn compressed representations via neural networks.

- Choose based on data characteristics and availability of training data.
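The contrast between one-hot encoding and embedding layers can be sketched as follows. The demographic schema, vocabularies, and embedding sizes are hypothetical, and the embedding tables are randomly initialized here, whereas in practice they would be learned jointly with the recommender.

```python
import random

# Hypothetical demographic schema: attribute -> (vocabulary, embedding size).
SCHEMA = {
    "age_bucket": (["<18", "18-24", "25-34", "35-44", "45-54", "55+"], 3),
    "gender": (["f", "m", "other"], 2),
    "occupation": (["student", "engineer", "artist", "doctor", "other"], 4),
}


def one_hot_user(profile):
    """Concatenate one one-hot block per attribute: sparse, one slot per category value."""
    vec = []
    for attr, (vocab, _) in SCHEMA.items():
        block = [0.0] * len(vocab)
        block[vocab.index(profile[attr])] = 1.0
        vec.extend(block)
    return vec


def make_embedding_tables(seed=0):
    """One dense table per attribute; randomly initialized here, normally learned in training."""
    rng = random.Random(seed)
    return {
        attr: [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in vocab]
        for attr, (vocab, dim) in SCHEMA.items()
    }


def embed_user(profile, tables):
    """Look up each attribute's row and concatenate them into a dense user embedding."""
    vec = []
    for attr, (vocab, _) in SCHEMA.items():
        vec.extend(tables[attr][vocab.index(profile[attr])])
    return vec


user = {"age_bucket": "25-34", "gender": "f", "occupation": "engineer"}
tables = make_embedding_tables()
print(len(one_hot_user(user)), len(embed_user(user, tables)))  # 14 sparse dims vs 9 dense dims
```

A table lookup gives the same result as multiplying the attribute's one-hot block by its embedding table, which is why an embedding layer can be viewed as a learned projection of one-hot inputs into a smaller dense space.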

Content-Based Filtering

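- Represents items via content features such as text, attributes, and metadata.

- Encodes that content with TF-IDF, word embeddings, etc., and computes user-item similarity (e.g. cosine similarity).

- Recommends items similar to the user's profile (e.g. an aggregate of the items the user has liked).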
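The three steps above can be sketched with TF-IDF vectors and cosine similarity. The item descriptions are hypothetical, the IDF uses one common smoothed variant, $\log\frac{1+N}{1+\mathrm{df}(t)} + 1$, and the user profile is assumed to be the mean TF-IDF vector of the items the user liked.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return one sparse TF-IDF vector (dict term -> weight) per document."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(docs)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        # Raw term frequency times smoothed inverse document frequency.
        vectors.append({t: c * (math.log((1 + n_docs) / (1 + df[t])) + 1.0) for t, c in tf.items()})
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def recommend(item_texts, liked, top_n=2):
    """Profile = mean TF-IDF vector of liked items; rank unseen items by cosine to the profile."""
    vecs = tfidf_vectors(item_texts)
    profile = Counter()
    for i in liked:
        for t, w in vecs[i].items():
            profile[t] += w / len(liked)
    candidates = [i for i in range(len(item_texts)) if i not in liked]
    candidates.sort(key=lambda i: cosine(profile, vecs[i]), reverse=True)
    return candidates[:top_n]


items = [
    "space opera with epic battles",
    "romantic comedy in paris",
    "epic space adventure and alien battles",
    "documentary about paris cafes",
]
print(recommend(items, liked=[0], top_n=1))  # [2]: shares "space", "epic", "battles"
```

Because it relies only on item content, this approach can recommend new items with no interaction history, though it tends to suggest items very close to what the user already likes.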

Comparative Analysis

The choice of embedding technique depends on the characteristics and requirements of the recommender system:

- Use NCF or Deep Matrix Factorization (DMF) for systems involving complex non-linear relationships and abundant training data.

- Prefer MF when interpretability is critical and data is limited.

- FM excels on sparse data with rich side features.

- GNNs are suitable for graph-structured interaction data.

- Here’s a table summarizing the different methods and their characteristics to help you decide which approach to choose for your recommendation system:

| Method | Use Case | Input | Output | Computation | Advantages | Limitations |
|---|---|---|---|---|---|---|
| Neural Collaborative Filtering (NCF) | Collaborative filtering with deep learning | User-item interaction data | User and item embeddings | Training neural networks | Captures complex patterns in data | Requires large amounts of training data |
| Matrix Factorization (MF) | Traditional collaborative filtering | User-item interaction matrix | User and item embeddings | Matrix factorization techniques | Simplicity and interpretability | Struggles with handling sparse data |
| Factorization Machines (FM) | General-purpose recommender system | User and item features, interaction data | User and item embeddings | Factorization of feature interactions | Handles high-dimensional and sparse data | Limited modeling capability for complex data |
| Deep Matrix Factorization (DMF) | Matrix factorization with deep learning | User and item features, interaction data | User and item embeddings | Deep neural networks with factorization | Captures non-linear interactions | Requires more computational resources |
| Graph Neural Networks (GNN) | Graph-based recommender systems | User-item interaction graph | User and item embeddings | Graph propagation algorithms | Captures relational dependencies in data | Requires graph-based data and computation |