Recommendation Systems • Content Moderation

Overview

How to handle a recommendation system that is driving high engagement with questionable videos on the platform

- First, understand the platform's and the company's policies, and determine whether the right action is to remove these videos entirely, restrict them to certain age groups, or allow them for everyone.

- Content Moderation: Implement robust content moderation mechanisms to filter and remove inappropriate or questionable videos from the platform. This can involve employing human moderators, using automated algorithms for content analysis, and providing reporting mechanisms for users to flag problematic content.

- User Feedback and Reporting: Encourage users to provide feedback and report any problematic videos they come across. Create a user-friendly reporting system that lets users easily flag inappropriate content. Investigate user reports promptly and take the necessary action.

- Machine Learning Algorithms: Leverage machine learning algorithms to identify and classify questionable content. Train models to detect patterns, explicit content, hate speech, or other forms of harmful or misleading information. Continuously improve these algorithms by incorporating user feedback and expert annotations.

- Recommendation Algorithm Adjustments: Modify your recommendation algorithms to consider factors beyond engagement metrics alone. Include content quality, credibility, user preferences, and diversity in the recommendations. Balance engagement with responsible content curation to ensure a healthy and trustworthy user experience.

- Continuous Monitoring and Evaluation: Regularly monitor the platform’s content and user feedback to identify emerging trends or loopholes that allow questionable content to surface. Keep refining your strategies and algorithms based on insights and user behavior patterns.

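The recommendation-algorithm adjustment described above can be sketched as a re-ranking step. This is a minimal Python sketch, not a production ranker: the `Candidate` fields, the weights in `WEIGHTS`, and the per-topic cap are hypothetical assumptions, and the engagement, quality, and credibility scores are assumed to come from upstream models already normalized to [0, 1].

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    video_id: str
    engagement: float   # predicted engagement, assumed normalized to [0, 1]
    quality: float      # hypothetical content-quality score in [0, 1]
    credibility: float  # hypothetical source-credibility score in [0, 1]
    topic: str


# Hypothetical weights: engagement is one signal among several, not the whole objective.
WEIGHTS = {"engagement": 0.5, "quality": 0.3, "credibility": 0.2}


def blended_score(c: Candidate) -> float:
    """Weighted sum of engagement, quality, and credibility."""
    return (WEIGHTS["engagement"] * c.engagement
            + WEIGHTS["quality"] * c.quality
            + WEIGHTS["credibility"] * c.credibility)


def rerank(candidates: list[Candidate], k: int, max_per_topic: int = 2) -> list[Candidate]:
    """Greedily pick the top-k by blended score, capping items per topic for diversity."""
    per_topic: dict[str, int] = {}
    slate: list[Candidate] = []
    for c in sorted(candidates, key=blended_score, reverse=True):
        if per_topic.get(c.topic, 0) >= max_per_topic:
            continue  # this topic already fills its share of the slate
        slate.append(c)
        per_topic[c.topic] = per_topic.get(c.topic, 0) + 1
        if len(slate) == k:
            break
    return slate


slate = rerank([
    Candidate("a", engagement=0.95, quality=0.1, credibility=0.1, topic="prank"),
    Candidate("b", engagement=0.60, quality=0.9, credibility=0.9, topic="science"),
    Candidate("c", engagement=0.70, quality=0.6, credibility=0.7, topic="cooking"),
], k=2)
print([c.video_id for c in slate])  # ['b', 'c']
```

With these made-up numbers, video `a` has the highest engagement but ranks last once quality and credibility are weighted in (0.525 versus 0.75 for `b` and 0.67 for `c`). A linear blend is only one option; the weights encode a policy trade-off and would need tuning and evaluation on real data.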

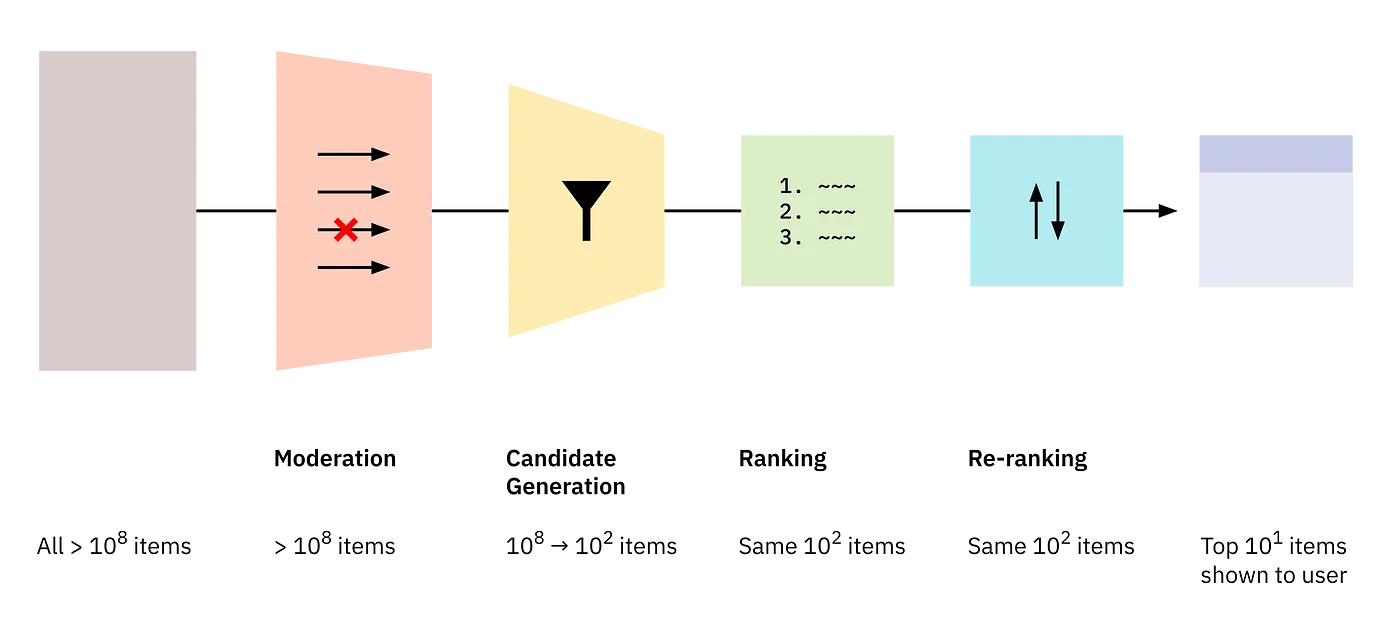

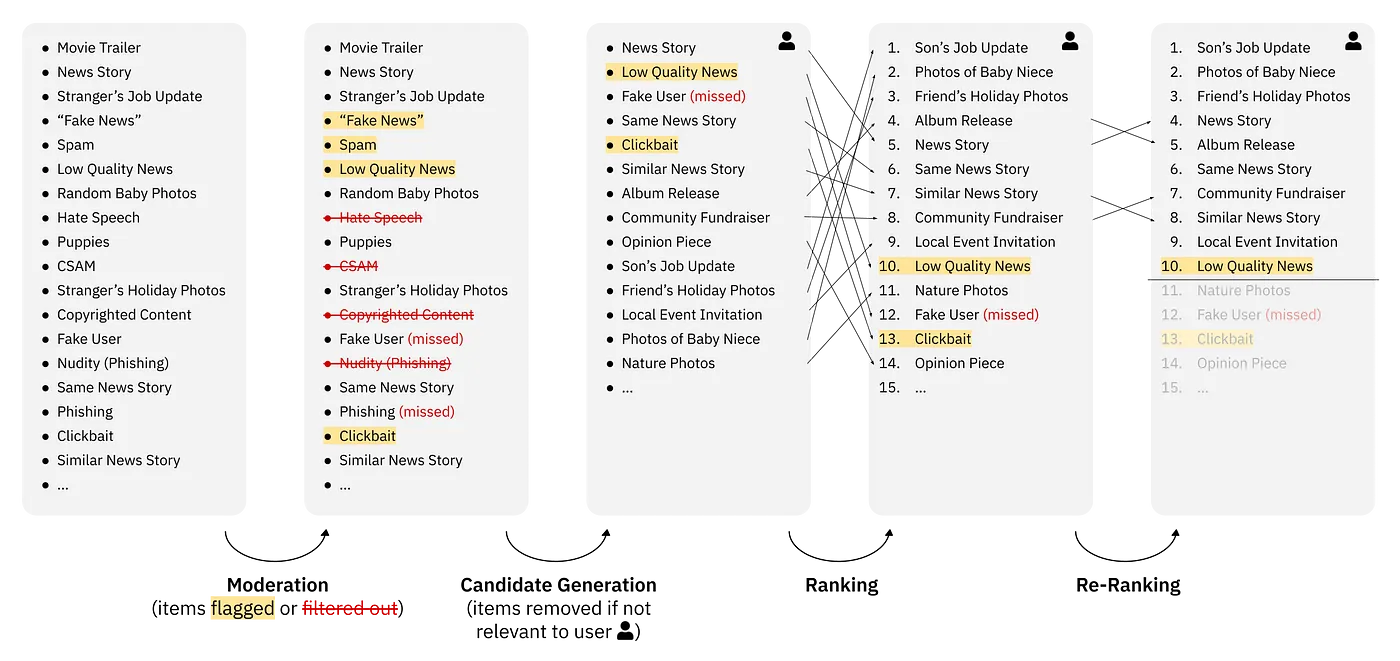

The first stage of content moderation removes or flags undesirable items in the pool of candidate content. Moderation as a whole is a complex process that includes policy-making, human content raters, automated classifiers, and an appeals process. In this context, “moderation” refers specifically to the automated processes that remove content from consideration for recommendation. Companies can be held responsible for hosting certain content, such as copyright infringement, defamation, child sexual abuse material (CSAM), or hate speech. Platforms also have policies to filter out other harmful content, such as nudity, coordinated inauthentic behavior, or public health misinformation. Automated filters do much of this work, with different filters catching different categories of undesirable content. What happens to a filtered item depends on its category and on platform policy: it may be removed from consideration for recommendation, or flagged for down-ranking at a later stage.
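The category-dependent actions described above can be sketched as a policy table applied to classifier scores. This minimal Python sketch assumes each automated filter emits a per-category score in [0, 1]; the category names, thresholds, and the `POLICY` table are hypothetical and do not reflect any real platform's policy.

```python
from enum import IntEnum


class Action(IntEnum):
    # Ordered by severity so max() picks the strictest triggered action.
    KEEP = 0
    DOWNRANK = 1  # flagged for down-ranking at a later stage
    REMOVE = 2    # removed from consideration for recommendation


# Hypothetical policy: category -> (score threshold, action when score >= threshold).
POLICY = {
    "copyright": (0.8, Action.REMOVE),
    "hate_speech": (0.7, Action.REMOVE),
    "nudity": (0.8, Action.REMOVE),
    "health_misinfo": (0.6, Action.DOWNRANK),
}


def moderate(scores: dict[str, float]) -> Action:
    """Return the most severe action triggered by any per-category filter score."""
    action = Action.KEEP
    for category, score in scores.items():
        rule = POLICY.get(category)
        if rule is None:
            continue  # no policy defined for this category
        threshold, rule_action = rule
        if score >= threshold:
            action = max(action, rule_action)
    return action


def first_stage(pool: dict[str, dict[str, float]]) -> tuple[list[str], list[str]]:
    """Split a pool into items still eligible for recommendation and the subset flagged for down-ranking."""
    eligible: list[str] = []
    flagged: list[str] = []
    for item_id, scores in pool.items():
        action = moderate(scores)
        if action == Action.REMOVE:
            continue
        eligible.append(item_id)
        if action == Action.DOWNRANK:
            flagged.append(item_id)
    return eligible, flagged


pool = {
    "v1": {"hate_speech": 0.9},
    "v2": {"health_misinfo": 0.75, "nudity": 0.1},
    "v3": {"copyright": 0.2},
}
eligible, flagged = first_stage(pool)
print(eligible, flagged)  # ['v2', 'v3'] ['v2']
```

Taking the most severe triggered action means an item that trips both a removal filter and a down-ranking filter is removed. A real pipeline would also route borderline scores to human raters and support an appeals process, which this sketch omits.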


Also write about last-stage moderation:

- Content moderation at input and output