Recommendation Systems • Multi-Armed Bandits

- Overview

- Why Contextual Bandits?

- Use-cases

- Introduction to Bandit Algorithms

- Algorithms

- Comparative Analysis of Multi-Armed Bandit Algorithms

- Advantages

- Disadvantages

- Simplicity and Assumptions

- Exploration vs. Exploitation Balance

- Suboptimal Performance in Complex Environments

- Scalability Issues

- Cold Start Problem

- Poor Handling of Variability

- Incompatibility with Complex Reward Structures

- Suboptimal Performance in Collaborative Environments

- Dependence on Reward Model

- Limited Applicability to Large-Scale Sequential Decision Problems

- Contextual Bandits

- How Contextual Bandits Work

- Algorithms

- Challenges and Considerations

- Advantages

- Personalization and Adaptation to Context

- Improved Learning Efficiency

- Reduced Exploration Costs

- Better Handling of Dynamic Environments

- Higher Overall Rewards

- Scalability to Complex Decision Spaces

- Applicable to a Wide Range of Applications

- Natural Extension of Reinforcement Learning

- Incorporation of Feature-Based Models

- Disadvantages

- High Dimensionality of Contexts

- Exploration vs. Exploitation Trade-off Complexity

- Non-Stationarity of Contexts

- Data Sparsity

- Scalability Issues

- Dependence on Context Quality

- Delayed Rewards or Feedback

- Algorithmic Complexity

- Cold-Start Problem

- Assumption of Independence of Contextual Information

- Lack of Robustness

- Constrained Contextual Bandits

- Adversarial bandits

- Off-Policy Evaluation (OPE)

- Challenges of Using Reinforcement Learning in Recommendation Systems

- Use-cases

- Content Recommendation Systems

- Ad Optimization

- E-commerce Personalization

- Email Marketing Optimization

- Game Design & Personalized Gaming Experiences

- Healthcare Personalization

- Education & Learning Platforms

- Website Personalization

- Music Streaming

- Customer Support & Chatbots

- Why Bandits Excel in Personalization

- Industrial Deployments of Contextual Bandits

- Spotify: Multi-Objective Optimization in Music Streaming

- Yahoo: News Recommendation with Contextual Bandits

- Netflix: Personalizing Movie Images

- Instacart: Personalized Item Retrieval and Ranking

- DoorDash: Contextual Bandits for Cuisine Recommendations

- Spotify’s Recsplanations: Optimizing Recommendation Explanations

- Offline Evaluation Replay

- Industry examples of Bandit Deployments

- FAQs

- How are MABs implemented? What state do they track during runtime to make effective explore-exploit tradeoffs?

- How do MABs (and derivative approaches such as Contextual MABs) make real-time decisions?

- Are bandits used for non-personalized search?

- In the context of \(\epsilon\)-greedy bandit algorithm, what are some strategies/schedules to decay \(\epsilon\) for exploration?

- Do contextual bandits perform better on the cold start problem compared to traditional recommender system architectures?

- Why do Contextual Bandits Perform Better in Cold Start Scenarios?

- Are contextual bandits used in personalized search?

- Are contextual bandits used in a specific layer of the ranking pipeline?

- How do you add a new arm to a contextual bandit?

- Why do contextual bandits not require large-scale data unlike deep learning models?

- Can we do recommendations in batches with bandits (or contextual bandits)?

- What are slate and combinatorial bandits?

- What is slate evaluation?

- References

- Citation

Overview

- Bandit algorithms, or simply “bandits,” are inspired by the “Multi-Armed Bandit (MAB) problem,” a concept rooted in decision-making under uncertainty. The problem is analogous to a gambler facing a row of slot machines, often referred to as “one-armed bandits” (or simply “bandits”). Each machine (or “arm”) has an unknown probability distribution of rewards, and the gambler’s goal is to maximize cumulative reward over time (i.e., over multiple iterations) by choosing which arm to pull (i.e., which machine to play). The key challenge lies in balancing two competing objectives: exploitation, where the gambler selects the machine that has provided the highest average reward so far to maximize immediate returns, and exploration, where the gambler tries different machines to gather more information, as some under-explored machines may yield better rewards.

- The MAB problem has become a foundational model in fields such as statistics, machine learning, Reinforcement Learning (RL), operations research, and economics. It is especially relevant in applications that must balance exploration (gathering information about uncertain actions) and exploitation (leveraging known actions to maximize reward). Examples of real-world applications include online advertising (selecting which ads to show), clinical trials (deciding which treatments to allocate to patients), and recommendation systems (choosing which content to recommend). For a refresher on RL, please refer to our RL primer.

- More formally, the MAB problem can be framed as a sequential decision-making process where a decision-maker (the gambler) must choose an action \(a_t\) from a set of available actions (arms) \(A\) at each time step \(t\). Each action \(a_t\) provides a stochastic reward \(r(a_t)\), sampled from a distribution with an unknown mean \(\mu(a_t)\). The objective of the decision-maker is to maximize the expected cumulative reward over \(T\) time steps: \(\max \mathbb{E} \left[ \sum_{t=1}^T r(a_t) \right].\)

- Simultaneously, the decision-maker aims to minimize regret, defined as the difference between the cumulative reward that could have been obtained by always choosing the optimal action and the actual cumulative reward obtained. Regret serves as a measure of the opportunity cost of not knowing the optimal action in advance.
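- The formulation and the regret definition above can be made concrete with a minimal sketch. It assumes Bernoulli rewards (each arm pays 1 with a fixed probability, else 0); the class `BernoulliBandit`, the function `expected_regret`, and the mean values are hypothetical names and numbers chosen for illustration, not part of any library. Regret is computed here in expectation, as the sum of gaps between the best mean and the mean of each chosen arm.

```python
import random


class BernoulliBandit:
    """Toy environment: arm a pays 1 with probability means[a], else 0."""

    def __init__(self, means, seed=0):
        self.means = list(means)
        self.rng = random.Random(seed)

    def pull(self, arm):
        return 1.0 if self.rng.random() < self.means[arm] else 0.0


def expected_regret(means, actions):
    """Sum over rounds of (best mean - mean of the arm actually chosen)."""
    best = max(means)
    return sum(best - means[a] for a in actions)


means = [0.2, 0.5, 0.7]  # assumed true means, unknown to the learner
env = BernoulliBandit(means)

# A learner that ignores feedback and picks arms uniformly at random.
chooser = random.Random(1)
actions = [chooser.randrange(len(means)) for _ in range(1000)]
rewards = [env.pull(a) for a in actions]

regret_uniform = expected_regret(means, actions)
regret_oracle = expected_regret(means, [2] * 1000)  # always the best arm
print(sum(rewards), regret_uniform, regret_oracle)
```

- The oracle that always plays the best arm has zero regret, while the feedback-blind learner accumulates regret linearly in the number of rounds. A good bandit algorithm sits between these two extremes.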

- The trade-off between exploration and exploitation is a key challenge in solving MAB problems. Exploration involves selecting less-known arms to gather information about their reward distributions, which may reveal better options. Exploitation, on the other hand, involves selecting the arm that currently seems to have the highest expected reward based on available information. A naive strategy that always exploits may converge to a suboptimal solution due to insufficient exploration, while excessive exploration can lead to slower accumulation of rewards. An optimal strategy must carefully balance these two competing objectives.

- In RL, MABs are often considered a special case of the more complex decision-making problems modeled by Markov Decision Processes (MDPs). In an MDP, actions yield immediate rewards and also affect future states and rewards; in a MAB, actions do not influence future states, which makes the problem simpler yet still highly relevant in practice. Bandits can nevertheless serve applications that care about long-term outcomes when the reward itself is defined over a longer horizon. For instance, Netflix leverages bandits to make predictions that span multiple user visits, recommending content based on delayed rewards, as members may only provide feedback after watching a series over several sessions or viewing multiple movies.

- In the context of personalization of online platforms, bandit algorithms play a critical role in optimizing user satisfaction and performance metrics, such as click-through rates (CTR) and engagement. These algorithms must balance two key objectives:

- Exploration: Testing different search results or content options to learn more about their performance.

- Exploitation: Showing the results or content options that are already known to perform well based on past user interactions.

- By dynamically adjusting the balance between exploration and exploitation, bandit algorithms continuously improve overall performance, aiming to ensure user satisfaction while learning which options yield the highest rewards.

- Bandit algorithms, when applied to search engines or recommendation systems, operate through specific elements to balance exploration and exploitation effectively.

- Arms: These represent different actions, such as presenting a particular search result or recommendation option to users.

- Rewards: These are the user’s responses to the presented options, such as clicks, conversions, or the amount of time spent on a webpage.

- Goal: The algorithm seeks to maximize the expected reward (e.g., engagement, satisfaction) by deciding when to explore less frequently selected options or exploit those that are known to perform well.

- By optimizing these elements (arms, rewards, and goal), the system continuously learns and adapts, improving user engagement and satisfaction by striking an effective balance between exploration and exploitation.

- Beyond personalization, bandit algorithms provide a more efficient approach to online A/B or multivariate testing than traditional methods. In conventional A/B testing, traffic is divided equally between different variants to assess their performance. This approach can be inefficient, as it allocates traffic to underperforming variants. Bandit algorithms address this by dynamically adjusting traffic allocation, directing more users to higher-performing options in real time. By balancing exploration (testing new options) and exploitation (favoring known successful options), bandit algorithms optimize both user satisfaction and platform performance. Their adaptive nature enables platforms to continuously learn from user behavior, leading to better content and experience optimization across platforms.

- Below, we will explore the various aspects and challenges of the MAB problem further, including its extensions to contextual bandits, where the decision-making process incorporates external information or “context” to dynamically adjust action choices, and non-stationary bandits, where reward distributions may change over time.

Why Contextual Bandits?

- Contextual bandit techniques offer significant advantages for improving recommender systems by addressing key challenges such as feedback loops, biased training data, short-term optimization pitfalls, and establishing causal relationships in user behavior. By enabling the collection of unbiased data and emphasizing long-term user satisfaction, these techniques provide a robust, adaptive, and user-focused approach to building effective recommendation systems.

- Below are the main benefits contextual bandits bring to recommender systems:

- Breaking Feedback Loops: In real-world recommender systems, biases in user-item interaction data, such as presentation and position biases, can create a feedback loop. These loops arise when the system is trained on observed user actions from a previous time-step, which are biased due to the recommendations shown at that time. For example, users are more likely to interact with items that are prominently displayed, creating a cycle that amplifies these biases. Contextual bandits mitigate this by introducing randomness into recommendations, helping to break (or dampen) the feedback loop and train models on less biased data. This reduces the mismatch between offline and online performance metrics, which is a persistent issue in recommender systems.

- Optimization for Long-Term Rewards: Unlike traditional recommender systems that optimize for immediate rewards (e.g., clicks or short-term engagement), contextual bandits align with reinforcement learning (RL) principles to optimize for long-term user satisfaction. RL frameworks have been highly effective in applications like robotics (e.g., making robots walk) and games (e.g., learning Go), which require strategies for sustained performance. Integrating RL with contextual bandits in recommender systems enables models to prioritize long-term user engagement and satisfaction, offering a significant improvement over purely myopic models.

- Causal Recommender Systems: By incorporating exploration and recording propensities for different actions, contextual bandits allow for more robust causal inference in recommender systems. This helps identify the actual impact of recommendations on user behavior, separating correlation from causation. Such causal insights improve the interpretability and effectiveness of recommendations by focusing on what truly drives user satisfaction.

- Bias Removal Through Exploration: Contextual bandit techniques are particularly effective at reducing biases in data (e.g., Wang et al. 2020). By incorporating randomness into the recommendations, they enable the system to collect cleaner training data while keeping track of propensities for the shown recommendations. Although this randomness may cause slight short-term degradation in user experience, it ultimately improves the long-term quality of recommendations. Online tests have shown that, with careful design of the exploration strategy, the impact of initial randomness is negligible and within the noise floor of the algorithm.

- Complementary Data Exploration with Search Data: In addition to introducing randomness, contextual bandits can leverage multiple discovery pathways, such as search data, to further reduce feedback loops. For example, if an item is recommended, the user does not need to search for it; conversely, if it is not recommended, it may prompt a search. Training the system on both recommended and searched items helps reduce bias without randomizing displayed recommendations, thereby avoiding short-term degradation of the user experience. While this method requires careful tuning to determine the importance of different data sources, it is highly effective in breaking feedback loops in environments where users have multiple ways to discover items.

- Balancing Exploration and User Experience: Contextual bandits offer the flexibility to balance exploration (to collect unbiased training data) and exploitation (to optimize user satisfaction). By employing adaptive exploration strategies and hybrid approaches (e.g., combining bandit techniques with search data), contextual bandits gather cleaner training data while minimizing user dissatisfaction during the exploration phase. This balance is crucial for improving the long-term effectiveness of recommender systems without compromising short-term user experience.


Use-cases

- MABs are widely applied across recommendation systems, online advertising, medical trials, and dynamic pricing. They are especially valuable for applications where decisions are made sequentially over time, and feedback is available on the outcomes of those decisions.

Personalization

- In personalization contexts, MAB approaches often outperform traditional A/B testing due to their ability to adapt continuously to incoming data in real-time. Traditional A/B testing involves splitting users into predefined groups, exposing each group to a different version (control or treatment), and comparing aggregate performance metrics after a fixed period. In contrast, MAB algorithms dynamically adjust resource allocation based on observed rewards from different versions, enabling them to prioritize high-performing variations more rapidly.

- One of the core advantages of MABs in personalization is their capacity to manage a larger number of arms (variations) compared to A/B testing, which typically compares only two or a few versions. MABs effectively explore multiple arms simultaneously and converge on the best-performing arm for each user segment based on feedback. This is achieved through algorithms such as Epsilon-Greedy, Thompson Sampling, and Upper Confidence Bound (UCB). For example, Thompson Sampling handles uncertainty by maintaining a probability distribution over each arm’s expected reward and selecting each arm according to the probability that it is the best one.

- Additionally, MABs can more effectively handle non-stationary environments, where user preferences or behavior may evolve. Since MAB algorithms continuously learn and update their understanding of the system, they can adjust recommendations as user behavior shifts, unlike A/B tests, which are static and must be reset to accommodate such changes.

- MABs are especially advantageous in situations where different user segments exhibit distinct preferences. For example, an MAB algorithm may allocate more exposure to a specific variation for users likely to respond positively to it (e.g., younger users might prefer a different UI design than older users). This dynamic allocation fosters personalized experiences at an individual or segment level, maximizing user engagement and conversion rates.

- However, the choice between A/B testing and MAB often depends on the context. A/B testing may be more suitable for simpler scenarios where only a few versions are tested, the population is homogeneous, or when statistical certainty and interpretability are of primary concern. Conversely, MAB excels in scenarios requiring faster adaptation, testing of multiple variations, or where user preferences evolve over time.

- The sophistication level required also factors into the decision. MAB algorithms are typically more complex to implement and require more computational resources than A/B testing. A/B testing is simpler and more transparent, offering clearer statistical results with confidence intervals and p-values. MAB, while faster and more adaptive, requires more advanced statistical understanding to properly tune algorithms and evaluate performance.

Testing

- MAB-based online testing is a widely used methodology for experimenting with and optimizing user experiences in domains such as website design, online advertising, and recommendation systems, as an alternative to A/B testing. Both A/B testing and MAB-based online testing aim to identify the most effective version of a product or service by comparing different variants, but they differ significantly in methodology, efficiency, and suitability for various contexts.

Background: A/B Testing

- Method: A/B testing, also known as split testing, is a classical experimental framework where the audience is randomly divided into two or more groups (commonly A and B). Each group is shown a different version (or “variant”) of the product or service, and the performance of each version is evaluated against predefined metrics. For example, in an A/B test for a website, one version might use a new button color (variant A), while the other keeps the original color (variant B). Common metrics include click-through rates, conversion rates, bounce rates, and time on site. The experiment is run for a predetermined duration or until a statistically significant result is achieved, after which the best-performing version is selected.

- Advantages:

- Simplicity and Transparency: A/B testing is straightforward to implement and interpret. With proper randomization and statistical design (e.g., calculating adequate sample size and using appropriate significance levels), it provides clear and unbiased comparisons between different versions.

- Statistical Robustness: A/B tests rely on established statistical methodologies such as hypothesis testing and confidence intervals. The results are typically more interpretable due to the simplicity of the experimental setup.

- Definitive Results: A/B tests can yield strong, definitive conclusions as to which variant performs better across the whole population if the test is well-designed and runs for an adequate amount of time.

- Limitations:

- Inefficiency and Cost: A/B testing can be resource-intensive, particularly in cases where the test exposes a large segment of users to suboptimal variants for an extended period. While the experiment runs, a significant portion of traffic is diverted to versions that may not perform well, leading to potential revenue or user experience loss.

- Static Allocation: A/B testing is static in nature, meaning that once the audience is split and the test begins, there is no adjustment or learning during the test. It waits until the end of the test to declare a winner, which can be slow in rapidly changing environments.

- Limited Scalability to Personalization: A/B tests assume homogeneity across user segments, meaning they test whether a particular variant is superior for the entire user base. This makes it challenging to use A/B testing for personalization, where different variants may work better for different segments of users (e.g., based on geographic region, behavior, or device type).
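- The hypothesis-testing step behind a classical A/B test can be sketched as follows. This is a minimal illustration assuming a pooled two-proportion z-test on conversion counts, which is one common choice rather than the only valid analysis; the function name `two_proportion_z_test` and the traffic numbers are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of a standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Assumed example: 1,000 users per variant, 100 vs. 130 conversions.
z, p_value = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=130, n_b=1000)
print(round(z, 2), round(p_value, 3))
```

- Note that the split (here 50/50) is fixed before the test starts and the decision is made only once, after all data is collected. This is the static allocation that bandit-based testing relaxes.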

MAB-based Online Testing

- Method: MAB algorithms address the trade-off between exploration (testing different options to gather information) and exploitation (leveraging the currently best-performing option). Named after the analogy of choosing among multiple slot machines (“bandits”) with unknown payouts, MAB approaches dynamically adjust the traffic allocation to each variant as more performance data is gathered. Popular MAB algorithms include epsilon-greedy, Upper Confidence Bound (UCB), and Thompson Sampling, each of which governs how much traffic to allocate to each variant based on current estimates of performance.

- Exploration: Initially, the algorithm explores by assigning users to different variants to gather data on their performance.

- Exploitation: As data accumulates, the algorithm begins to favor the better-performing variants, directing more traffic to those while still leaving some traffic for continued exploration, thus refining its understanding in real-time.

- Advantages:

- Dynamic Learning and Efficiency: MAB-based methods continuously learn from incoming data, allowing for real-time adjustment of traffic allocation. This reduces the exposure of users to suboptimal variants, potentially improving overall performance during the experiment itself. This approach can be especially beneficial in fast-moving environments such as online ads where results need to be optimized continuously.

- Handling Non-Stationarity: Unlike A/B testing, which assumes performance metrics are static over the experiment, MAB algorithms can adapt to time-varying changes in user behavior or external conditions, such as seasonality or shifts in user preferences.

- Suitability for Personalization: MAB methods excel in scenarios requiring personalization. By continuously learning and adapting to different user segments, MABs can optimize individual experiences more effectively than a one-size-fits-all A/B test.

- Limitations:

- Complexity: Implementing and managing MAB algorithms can be complex, requiring expertise in machine learning and algorithm design. Tuning the exploration-exploitation balance and handling edge cases (e.g., convergence to suboptimal solutions due to noise) can require advanced skills.

- Sensitivity to Noise: MAB methods can be more sensitive to short-term fluctuations or noise in the data. For instance, a short-term spike in performance (due to random chance or external factors) may cause the algorithm to prematurely favor one variant, leading to potential instability.

- Interpretability: Because MABs constantly adjust based on real-time data, interpreting the final outcome can be less straightforward than A/B testing. Results are not expressed as a single, clean comparison but rather as a time-varying process, which may require more sophisticated statistical analysis to explain.
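- The dynamic traffic allocation described under Method above can be sketched with Thompson Sampling on binary conversions. This is a simplified simulation under stated assumptions: each variant has a fixed, unknown conversion rate, feedback arrives immediately, and each variant starts from a uniform Beta(1, 1) prior. The function `thompson_allocate` and the conversion rates are hypothetical.

```python
import random


def thompson_allocate(true_rates, n_users, seed=0):
    """Simulate per-user variant assignment; returns users shown each variant."""
    rng = random.Random(seed)
    k = len(true_rates)
    successes = [0] * k
    failures = [0] * k
    shown = [0] * k
    for _ in range(n_users):
        # Draw one plausible conversion rate per variant from its Beta posterior.
        samples = [
            rng.betavariate(successes[i] + 1, failures[i] + 1) for i in range(k)
        ]
        arm = max(range(k), key=lambda i: samples[i])
        shown[arm] += 1
        # Simulated user response (assumption: immediate binary feedback).
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return shown


shown = thompson_allocate([0.05, 0.10, 0.30], n_users=5000)
print(shown)
```

- Early on, the posteriors are wide and all variants receive traffic; as evidence accumulates, most users are routed to the variant with the highest conversion rate, while the weaker variants still receive an occasional visit.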

Use Cases and Hybrid Approaches

- A/B Testing: A/B testing is often used when the environment is relatively stable, when interpretability is paramount, or when the stakes of running suboptimal variants for a short time are low. For example, it suits testing a new layout on an e-commerce site where there is no immediate need for dynamic adaptation.

- MAB Testing: MAB testing is preferable in situations where rapid learning and adaptation are critical, such as online advertising, recommendation systems, and personalization algorithms. MAB testing can quickly identify the best-performing content or product recommendations in real-time, improving user experience as the experiment runs.

- Hybrid Approaches: In some cases, hybrid approaches that combine A/B testing and MAB algorithms can be employed. For instance, A/B testing can be used initially to screen out poor variants, followed by MAB optimization for the remaining high-performing variants. This can offer the best of both worlds by providing interpretability and robust conclusions while maximizing efficiency and adapting to changing conditions.

Introduction to Bandit Algorithms

- Bandit algorithms are a class of reinforcement learning (RL) algorithms designed to solve decision-making problems where the goal is to maximize cumulative reward by sequentially selecting actions based on past observations. These algorithms are particularly valuable in situations where each action yields uncertain rewards, requiring the learner to balance two competing objectives: exploration (trying new actions to gather information) and exploitation (choosing known actions that provide higher rewards).

- Several prominent algorithms have been developed to address this exploration-exploitation trade-off:

- Epsilon-Greedy: This simple and effective algorithm exploits the current best option with a probability of \(1-\epsilon\), while with a probability of \(\epsilon\), it explores a randomly chosen option. The parameter \(\epsilon\) controls the balance between exploration and exploitation.

- Thompson Sampling: A Bayesian approach that samples from a posterior probability distribution over potential rewards for each action. It updates beliefs about each action’s reward distribution based on observed outcomes, thus automatically balancing exploration and exploitation through probabilistic sampling.

- Upper Confidence Bound (UCB): This algorithm selects the action with the highest upper confidence bound on its estimated reward, ensuring that underexplored actions are given a chance. As an action is selected more frequently, its confidence bound decreases, leading to a natural balance between exploration and exploitation.

- Each of these algorithms – detailed below – approaches the exploration-exploitation challenge differently, offering various ways to optimize decision-making in uncertain environments.
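- To illustrate how an upper confidence bound shrinks as an action is selected more often, here is a minimal sketch of the UCB1 index (estimated mean plus \(\sqrt{2 \ln t / n_a}\), where \(t\) is the total number of pulls and \(n_a\) the pulls of arm \(a\)). UCB1 is one specific member of the UCB family, chosen here as an assumption; the function names and the example statistics are hypothetical.

```python
import math


def ucb1_index(mean_estimate, pulls, total_pulls):
    """Optimistic score for one arm: estimated mean plus exploration bonus."""
    if pulls == 0:
        return float("inf")  # untried arms are always selected first
    return mean_estimate + math.sqrt(2.0 * math.log(total_pulls) / pulls)


def ucb1_select(mean_estimates, pulls):
    """Pick the arm with the highest UCB1 index."""
    total = sum(pulls)
    indices = [ucb1_index(m, n, total) for m, n in zip(mean_estimates, pulls)]
    return max(range(len(indices)), key=lambda i: indices[i])


# Arm 1 looks worse on average but has barely been tried: its bonus dominates.
under_explored = ucb1_select([0.6, 0.5], pulls=[100, 2])
# Once both arms are well sampled, the bonuses are equal and the mean decides.
well_explored = ucb1_select([0.6, 0.5], pulls=[100, 100])
print(under_explored, well_explored)
```

- The bonus term depends only on how often an arm has been tried, so exploration is directed at uncertain arms rather than spread uniformly at random.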

Bandit Framework

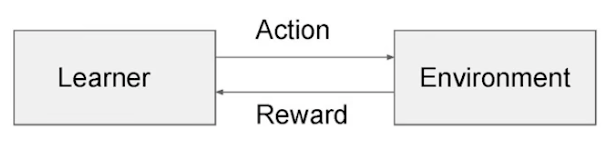

- As illustrated in the image below (source), a learner (also called the agent) interacts with the environment in a sequential decision-making process. Each action, chosen from a finite set of actions (often referred to as arms in the context of MAB problems), elicits a reward from the environment. The learner’s objective is to maximize the cumulative reward over time.

- The interaction between the learner and the environment can be mathematically represented as follows:

- Action Space \(\mathcal{A}\): A finite set of arms or actions \(\{a_1, a_2, \dots, a_k\}\), where \(k\) is the total number of available actions.

- Reward Function: At each time step \(t\), the learner selects an arm/action \(a_t \in \mathcal{A}\). The environment then generates a stochastic reward \(r_t \in \mathbb{R}\), typically sampled from an unknown probability distribution associated with that action, denoted by \(\mathbb{P}(r \mid a)\).

- Objective: The learner’s goal is to maximize the expected cumulative reward over \(n\) rounds: \(\text{Maximize } \mathbb{E}\left[\sum_{t=1}^{n} r_t\right].\)

- Equivalently, the learner seeks to minimize the cumulative regret. Regret is defined as the difference between the reward that would have been obtained by always selecting the optimal action and the reward actually obtained by following the learner’s strategy. Formally, regret \(R(n)\) over \(n\) rounds is given by \(R(n) = \sum_{t=1}^{n} \left(r^* - r_t\right)\), where \(r^*\) is the expected reward of the optimal action, i.e., the action that has the highest expected reward.
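- As a worked illustration of the regret definition above, take expectations and group the rounds by arm. Writing \(\mu(a)\) for the mean reward of arm \(a\) (as in the Overview) and \(N_a(n)\) for the number of times arm \(a\) is pulled in \(n\) rounds (a symbol introduced here purely for illustration), the expected regret decomposes as \(\mathbb{E}[R(n)] = \sum_{a \in \mathcal{A}} \left(r^* - \mu(a)\right) \mathbb{E}[N_a(n)].\)

- For example, with two arms whose mean rewards are assumed to be \(0.7\) and \(0.5\), we have \(r^* = 0.7\). If a strategy pulls the weaker arm 20 times in expectation over \(n = 100\) rounds, its expected regret is \((0.7 - 0.5) \times 20 = 4\); pulls of the optimal arm contribute nothing. Keeping regret low therefore amounts to limiting how often each suboptimal arm is pulled.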


Bandit Algorithm Workflow

- The bandit algorithm operates in the following manner for each round \(t\):

- The learner/agent selects an arm/action \(a_t\) from the action space \(\mathcal{A}\). This selection is based on a strategy that balances the trade-off between exploration (trying different actions to gather more information about their reward distributions) and exploitation (choosing actions that are known to provide high rewards based on previous observations).

- The learner communicates this action to the environment.

- The environment generates a stochastic reward \(r_t\) from the unknown reward distribution corresponding to the selected action and returns it to the learner.

- The learner updates its knowledge of the environment based on the received reward, typically by updating estimates of the expected rewards of each action or by adjusting the selection probabilities.

- The primary goal of the learner is to optimize its decision-making strategy over time to maximize cumulative rewards or, equivalently, minimize cumulative regret.
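- The four steps of the workflow above can be sketched as a single loop. This is a minimal illustration assuming Bernoulli rewards and a sample-average estimate per arm, updated incrementally; `run_bandit` and `uniform_policy` are hypothetical names, and the selection strategy is passed in as a function so that any policy can be plugged in.

```python
import random


def run_bandit(true_means, policy, n_rounds, seed=0):
    """Run the select / act / observe / update loop for n_rounds."""
    rng = random.Random(seed)
    k = len(true_means)
    counts = [0] * k        # number of pulls per arm
    estimates = [0.0] * k   # running mean reward per arm
    total_reward = 0.0
    for _ in range(n_rounds):
        # Steps 1-2: the learner selects an action and sends it to the environment.
        arm = policy(estimates, counts, rng)
        # Step 3: the environment returns a stochastic reward (simulated here).
        reward = 1.0 if rng.random() < true_means[arm] else 0.0
        # Step 4: the learner updates its estimate with an incremental mean.
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward
    return estimates, counts, total_reward


def uniform_policy(estimates, counts, rng):
    """Placeholder strategy: pure exploration, ignores all estimates."""
    return rng.randrange(len(estimates))


estimates, counts, total_reward = run_bandit([0.2, 0.8], uniform_policy, 4000)
print(estimates, counts, total_reward)
```

- The incremental update avoids storing past rewards: the only state kept per arm is a pull count and a running mean. Swapping `uniform_policy` for a strategy that uses `estimates` and `counts` changes the explore-exploit behavior without touching the loop.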

Exploration vs. Exploitation Trade-off

- The exploration-exploitation trade-off is a fundamental dilemma in RL, especially within the context of MAB problems. This trade-off represents the challenge of balancing two competing objectives: gathering new information (exploration) and leveraging existing knowledge to maximize immediate rewards (exploitation).

- Exploration allows the agent to learn and adapt to new opportunities by trying out different actions, even if they are not currently believed to be the best. This helps the agent discover potentially higher-rewarding options. Exploitation, on the other hand, focuses on capitalizing on the agent’s current knowledge by selecting actions that are expected to provide the highest immediate payoff. By exploiting known information, the agent can maximize short-term success.

- Achieving the right balance between these two strategies is essential for long-term success in decision-making algorithms. Excessive exploration can lead to suboptimal short-term performance, while over-exploitation may cause the agent to miss out on discovering better options. Sophisticated approaches, such as Upper Confidence Bound (UCB) and Thompson Sampling, are designed to strike a balance between exploration and exploitation, allowing the system to adapt to the evolving nature of real-world environments and ensure sustained optimal performance.

Key Concepts

- Exploration: This refers to the agent trying out different actions to gather more information about the environment. Even if an action is not currently believed to be the best, exploration allows the learner to discover potentially better alternatives in the long run. For example, in the context of recommendation systems, exploration might involve suggesting new or less popular content to learn more about user preferences.

- Exploitation: This refers to the agent using its current knowledge to select the action that maximizes immediate reward. The agent relies on past experiences or accumulated data to make decisions that are expected to yield the highest payoff. In the recommendation system scenario, exploitation would mean recommending content that is known to have a high probability of being well-received by the user.

- The challenge arises because excessive exploitation can lead to missing out on potentially better options (actions that could offer higher rewards but haven’t been tried enough). On the other hand, too much exploration can result in suboptimal choices in the short term, where better-known actions are not fully utilized.

Exploitation Strategies

- Exploitation focuses on selecting the most promising option based on current data, leveraging all the information that has been gathered so far. Below are some key strategies commonly used for exploitation:

-

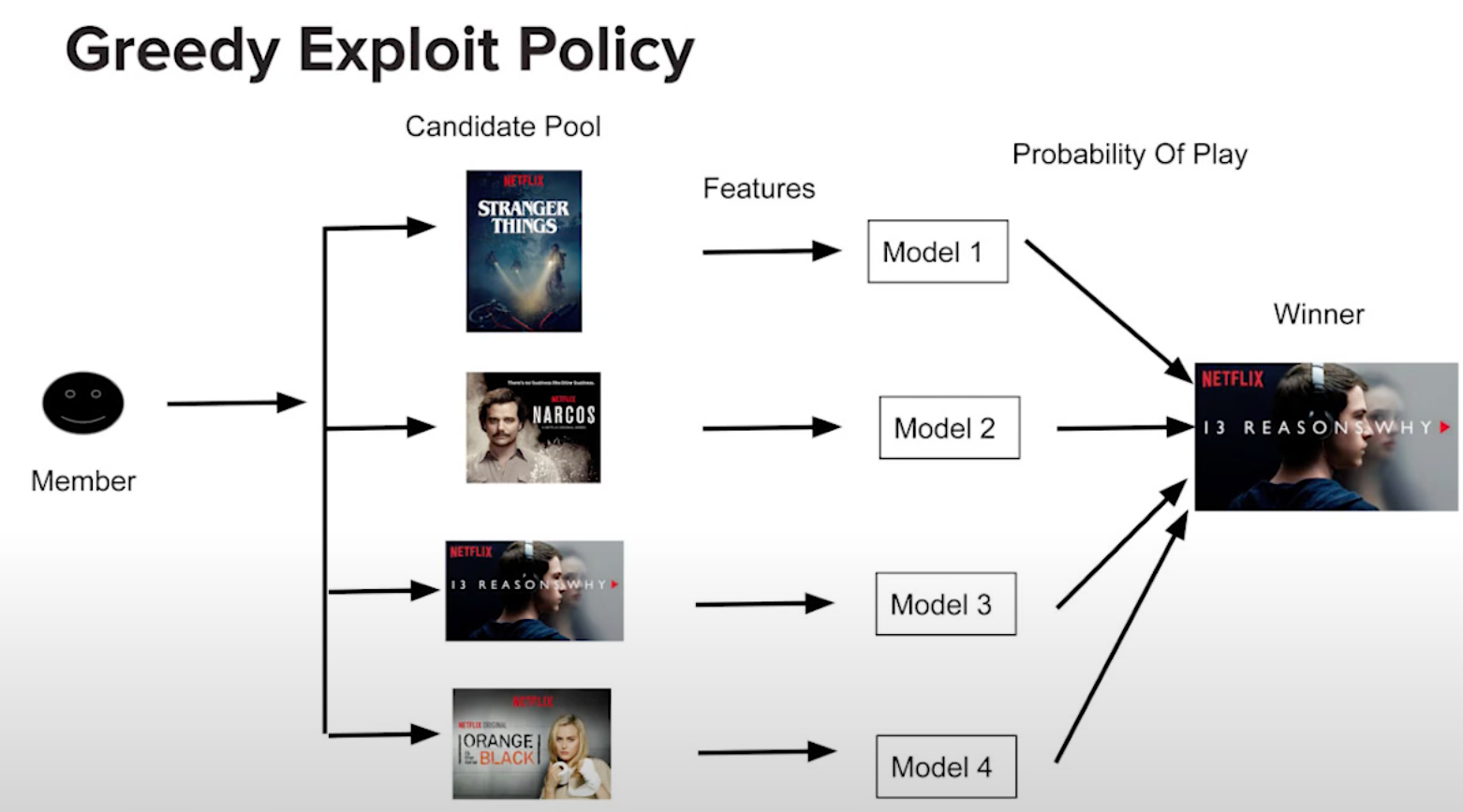

Greedy Exploit Policy: In this approach, the agent always selects the action (or arm) with the highest estimated reward. It assumes that the current estimates are accurate, meaning the system is confident that the chosen action is optimal based on the available data. This strategy doesn’t explore other arms, which means it could miss out on potentially higher rewards. However, it maximizes short-term gains by acting on the information at hand.

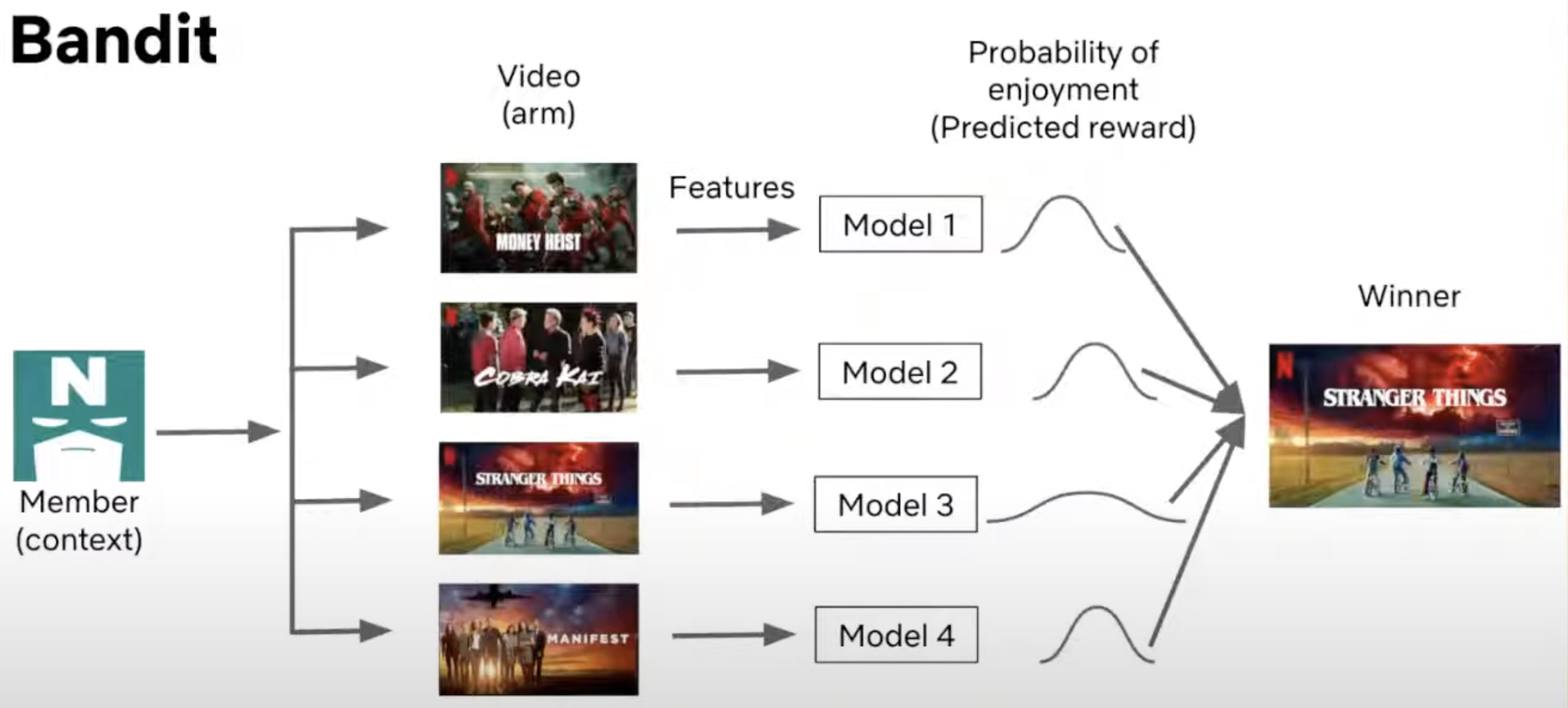

- Example (Netflix Recommendation System): The accompanying image illustrates how Netflix uses a greedy exploit policy to recommend content. When a user arrives at the homepage, the system extracts both user features (such as viewing history) and content features (such as genre or popularity). These features are fed into pre-trained models that score each candidate title. The title with the highest predicted probability of engagement is chosen, so the recommendation is the best option according to the data available.

- In this case, Netflix relies heavily on exploitation by selecting the title that the model predicts the user is most likely to watch, based on previous data. This maximizes the user’s engagement in the short term by leveraging known preferences.
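As a minimal sketch of the greedy exploit policy described above: the titles and scores below are made-up illustrative values (not Netflix's actual models or data), and the policy simply picks the candidate with the highest predicted engagement.

```python
import numpy as np

# Hypothetical candidates and model scores (predicted probability of engagement).
titles = ["Title A", "Title B", "Title C"]
predicted_engagement = np.array([0.12, 0.31, 0.27])

# Greedy exploit: always pick the highest-scoring candidate, with no exploration.
best_idx = int(np.argmax(predicted_engagement))
recommended_title = titles[best_idx]
print(recommended_title)
```

Because the choice depends only on the current scores, a title whose score is underestimated is never shown and so never gets a chance to correct its estimate; this is the gap that the exploration strategies address.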

Exploration Strategies

- Exploration, while not immediately focused on maximizing rewards, helps gather more information to improve future decisions. It often involves sacrificing short-term performance for potential long-term gains. There are various exploration strategies:

- Naive Exploration (Random Exploration): This method involves selecting actions randomly, without considering current knowledge about their potential rewards. A simple example of this is the Epsilon-Greedy Algorithm. In this approach, with probability \(1 - \epsilon\), the agent selects the best-known action (exploitation), and with a small probability \(\epsilon\), it selects a random action (exploration). This strategy allows for a balance between exploration and exploitation by introducing some randomness into the decision-making process.

- Advantages and limitations: It ensures that all actions (arms) are occasionally tested, but the exploration is undirected: it happens uniformly at random rather than being driven by uncertainty about each action.

- Probability Matching (Thompson Sampling): This strategy, exemplified by Thompson Sampling, selects each action with a probability equal to the current belief that it is the best one. Instead of always choosing the action with the highest estimated reward, the agent samples from a distribution that reflects its belief about each action being optimal.

- Advantages: Thompson Sampling is a well-balanced exploration-exploitation strategy that tends to perform well in practice, especially when the environment is highly uncertain. By sampling from the probability distribution, it ensures both high-performing and uncertain actions are tested.

- Optimism in the Face of Uncertainty (UCB): This approach adds optimism to actions with less information, effectively favoring exploration of uncertain or unexplored options. For example, the Upper Confidence Bound (UCB) algorithm selects actions based not only on their estimated rewards but also on the uncertainty or variance in those estimates. Actions with high uncertainty are given an optimistic estimate, encouraging the agent to explore them further.

- Advantages: UCB balances exploration and exploitation by giving preference to actions where the potential reward is uncertain, thus focusing exploration efforts on areas that could lead to significant improvements in understanding.

Balancing Exploration and Exploitation

- Achieving the right balance between exploration and exploitation depends on the specific context of the problem and the desired outcomes. In dynamic environments, where user preferences or system states change over time, more exploration may be necessary. In contrast, in more static environments, exploitation may be more beneficial, as the system’s understanding of the optimal actions is likely to remain accurate.

Real-World Implications

- Recommendation Systems: In practice, many modern systems dynamically adjust the exploration-exploitation balance. For instance, streaming services like Netflix might employ more exploration for new users to quickly gather data on their preferences, but focus more on exploitation for returning users where data is plentiful and accurate.

- Advertising Systems: Online advertisers also face the exploration-exploitation trade-off. Exploring new advertising strategies or placements can be costly in the short term but might lead to discovering more effective campaigns. Exploiting known high-performance ads ensures immediate revenue but may lead to stagnation if new strategies are not explored.

Types of Bandit Problems

- Bandit algorithms have numerous variations based on the specific characteristics of the environment:

- Stochastic Bandits: In this classic setting, each arm has a fixed but unknown reward distribution, and the goal is to identify the best arm over time.

- Contextual Bandits: Here, the learner observes additional context (e.g., user features) before selecting an action. The challenge is to learn a policy that adapts the action to the context to maximize rewards.

- Adversarial Bandits: In this setting, the environment can change over time, and rewards are not necessarily drawn from fixed distributions. The learner must adapt to dynamic or adversarial environments.

Algorithms

- Bandit algorithms address the fundamental trade-off between exploration, which involves gathering more information about the different options or “arms,” and exploitation, which focuses on capitalizing on the option that has performed well so far.

- Algorithms like Epsilon-Greedy, Thompson Sampling, and Upper Confidence Bound (UCB) each provide different strategies for balancing this trade-off. The choice of algorithm depends on the specific application and problem characteristics. Thompson Sampling often performs well in practice due to its Bayesian approach, while UCB is theoretically optimal in many settings under certain assumptions. The Epsilon-Greedy algorithm is simple and effective, particularly when combined with a decaying schedule for \(\epsilon\).

- Below, we detail these commonly used algorithms, each of which addresses the exploration-exploitation trade-off in a different way.

Epsilon-Greedy Algorithm

- The Epsilon-Greedy algorithm is a simple yet powerful strategy for solving the MAB problem. It dynamically balances between exploiting the best-known arm and exploring potentially better arms.

- Exploitation: With probability \(1 - \epsilon\), the algorithm selects the arm with the highest average reward. This is the “greedy” choice, as it seeks to exploit the current knowledge to maximize short-term reward.

- Exploration: With probability \(\epsilon\), the algorithm selects a random arm, regardless of its past performance. This ensures that even suboptimal arms are occasionally tested, preventing the algorithm from prematurely converging on a suboptimal solution.

- The parameter \(\epsilon\) controls the balance between exploration and exploitation. A larger \(\epsilon\) results in more exploration, while a smaller \(\epsilon\) results in more exploitation. The challenge lies in choosing an appropriate value for \(\epsilon\). Typically, \(\epsilon\) is decayed over time (e.g., \(\epsilon = \frac{1}{t}\), where \(t\) is the current timestep) to gradually favor exploitation as more information is gained.
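The decay schedule \(\epsilon = \frac{1}{t}\) mentioned above can be sketched as follows. The helper names `decayed_epsilon` and `select_arm`, and the floor `eps_min` (which keeps a small amount of exploration alive), are assumptions made for illustration and are not part of the original description.

```python
import numpy as np

def decayed_epsilon(t, eps_min=0.01):
    """Exploration rate 1/t at timestep t (1-indexed), floored at eps_min (assumed floor)."""
    return max(1.0 / t, eps_min)

def select_arm(estimates, t, rng):
    """Epsilon-greedy choice at timestep t using the decayed exploration rate."""
    if rng.random() < decayed_epsilon(t):
        return int(rng.integers(len(estimates)))  # explore: uniform random arm
    return int(np.argmax(estimates))              # exploit: best current estimate

rng = np.random.default_rng(0)
schedule = [decayed_epsilon(t) for t in (1, 10, 100, 1000)]
print(schedule)  # [1.0, 0.1, 0.01, 0.01]
```

With this schedule the first decision is always exploratory, and the exploration rate drops quickly; whether a floor is appropriate depends on how much ongoing exploration the application can afford.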

Example: Ad Placement Optimization

Scenario

- Imagine you’re managing a platform that displays ads from various advertisers. Each ad has a different click-through rate (CTR), but you don’t know which one performs the best. Your goal is to maximize revenue by learning which ads get clicked the most. This is a classic MAB problem, where each “arm” is an ad, and pulling an arm corresponds to showing a particular ad to a user. You want to balance exploring new ads and exploiting the ads with the highest click rates so far. The Epsilon-Greedy algorithm is an effective way to solve this problem.

- Ads (Arms): You have 3 different ads: Ad A, Ad B, and Ad C.

- Reward: A reward of 1 is assigned if the user clicks the ad and 0 otherwise.

- Objective: Maximize the overall click-through rate by selecting the most effective ad over time.

Code Example with Numerical Data

- Here’s an implementation of the Epsilon-Greedy algorithm for this ad placement optimization scenario, with numerical data and a plot showing how the estimated probability of success (CTR) for each ad evolves over time.

```python
import numpy as np
import matplotlib.pyplot as plt

# Ads CTR simulation (actual click-through rates, unknown to the algorithm)
actual_ctr = [0.05, 0.03, 0.08]  # Ad A, Ad B, Ad C

# Epsilon-Greedy Parameters
epsilon = 0.1            # Probability of exploration
n_trials = 1000          # Total number of trials or rounds
n_ads = len(actual_ctr)  # Number of available ads (arms)

# Tracking number of times each ad was selected and rewards collected
num_plays = np.zeros(n_ads)     # Counts the number of times each ad has been played
total_reward = np.zeros(n_ads)  # Tracks cumulative reward for each ad

# Function to simulate playing an ad and receiving a reward
def play_ad(ad_idx):
    return np.random.rand() < actual_ctr[ad_idx]  # Returns True (click) with probability = CTR

# Epsilon-Greedy Algorithm Implementation
avg_rewards = np.zeros((n_trials, n_ads))  # Store estimated CTR for each ad over time

for t in range(n_trials):
    x = np.random.rand()  # Generate a uniformly distributed random number between 0 and 1
    if x < epsilon:  # Exploration phase: with probability epsilon, select a random ad
        chosen_ad = np.random.randint(0, n_ads)
    else:  # Exploitation phase: with probability 1 - epsilon, select the best-performing ad
        chosen_ad = np.argmax(total_reward / (num_plays + 1e-10))  # Avoid division by zero

    # Play the chosen ad and receive a reward (click or no click)
    reward = play_ad(chosen_ad)
    num_plays[chosen_ad] += 1          # Update play count for the selected ad
    total_reward[chosen_ad] += reward  # Update total reward (click count) for the selected ad

    # Store the average reward estimates (CTR) for plotting purposes
    avg_rewards[t] = total_reward / (num_plays + 1e-10)  # Estimated CTR for each ad

# Plotting the estimated CTR for each ad
plt.plot(avg_rewards[:, 0], label='Ad A (True CTR=0.05)')
plt.plot(avg_rewards[:, 1], label='Ad B (True CTR=0.03)')
plt.plot(avg_rewards[:, 2], label='Ad C (True CTR=0.08)')
plt.xlabel('Trial')
plt.ylabel('Estimated CTR')
plt.legend()
plt.title('Estimated CTR of Ads Over Time (Epsilon-Greedy)')
plt.show()
```

Output Example

- In this code:

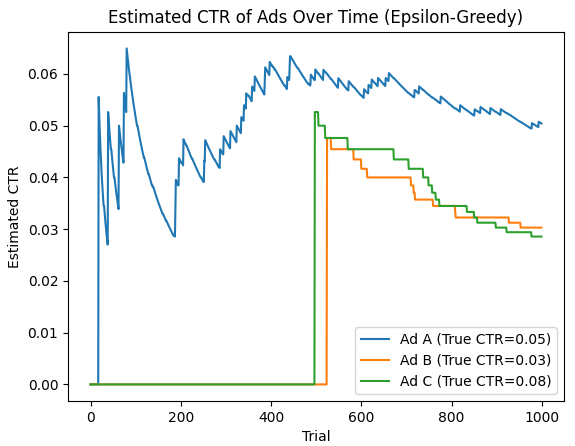

- Ad A has a true CTR of 0.05, Ad B has 0.03, and Ad C has 0.08.

- The algorithm will explore different ads, but over time, it should gravitate toward Ad C because it has the highest true CTR.

Plot Analysis

- The plot shows how the estimated CTR for each ad changes over time, with Ad C’s estimated CTR converging toward its true value.

- The initial part of the plot fluctuates heavily: with only a handful of impressions per ad, a single click moves an estimate substantially.

- As trials progress, the estimated CTR of Ad C should stabilize around 0.08, its true CTR, because it is shown most often and therefore accumulates the most data.

- The estimated CTRs of Ad A and Ad B drift toward their true values (0.05 and 0.03) but remain noisier, since these ads are shown far less often once the algorithm begins favoring Ad C.

- Because \(\epsilon\) is fixed at 0.1 in this code, the algorithm keeps exploring on roughly 10% of trials. What changes over time is that the greedy choice on the remaining 90% of trials settles on Ad C as the estimates become reliable.

Why This Works

- Exploitation vs. Exploration: The Epsilon-Greedy algorithm balances exploration (trying different ads to learn their CTRs) and exploitation (showing the ad with the best-known CTR to maximize clicks). Early on, the estimates are unreliable, so the exploration steps matter most: they gather data on every ad and prevent the algorithm from prematurely settling on a suboptimal one. As the estimates improve, the exploitation steps increasingly select the ad with the truly best performance (Ad C).

- Adjustable Exploration: The parameter \(\epsilon\) provides a tunable balance between exploration and exploitation. A fixed \(\epsilon\) (as in the code above) keeps exploring at a constant rate, while a decayed \(\epsilon\) explores heavily early on and then increasingly favors exploitation, letting the algorithm settle on the best-performing ad as more data is gathered.

- Long-Term Improvement: By maintaining a level of exploration, the Epsilon-Greedy algorithm avoids getting stuck on an ad that merely looked best early on. In the long run, it improves the overall reward (CTR) by continuing to learn from random explorations while exploiting the ads that have proven to yield the highest reward.

Thompson Sampling (Bayesian Inference)

- Thompson Sampling is a stochastic, Bayesian approach to the MAB problem that uses random sampling from posterior distributions to guide decision-making. It maintains a posterior distribution over the reward probabilities of each arm and stochastically samples from these distributions to balance exploration and exploitation. As more data is collected, the posterior distributions narrow, leading to more confident and informed decisions over time.

- Bayesian Updating: For each arm, Thompson Sampling keeps track of the number of successes and failures. The reward of each arm is modeled as a Bernoulli distribution with an unknown parameter (the probability of success). Using a Beta distribution as a conjugate prior for the Bernoulli likelihood, the posterior distribution can be updated efficiently with observed data.

- Arm Selection: In each round, the algorithm samples a reward probability for each arm from its respective posterior distribution. The arm with the highest sampled reward is selected. This strategy implicitly balances exploration and exploitation: arms with uncertain estimates (wide posteriors) are explored more often, while arms with confident estimates (narrow posteriors) are exploited.

Mathematical Formulation

- Let \(\theta_i\) represent the unknown reward probability of arm \(i\). Initially, assume that \(\theta_i\) follows a Beta distribution \(\text{Beta}(\alpha_i, \beta_i)\). Starting from the uniform prior \(\text{Beta}(1, 1)\), the parameters track the observed data: \(\alpha_i - 1\) is the number of successes and \(\beta_i - 1\) is the number of failures seen so far. After observing a success or failure, the posterior distribution is updated as follows:

- If the chosen arm returns a success: \(\alpha_i \gets \alpha_i + 1\)

- If the chosen arm returns a failure: \(\beta_i \gets \beta_i + 1\)
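The update rule above can be traced by hand for a single arm. This is a small worked sketch; the uniform starting point `Beta(1, 1)` and the observation sequence are assumptions chosen for illustration.

```python
# Uniform prior Beta(1, 1) for a single arm (assumed starting point).
alpha, beta = 1, 1

# Hypothetical observations for this arm: 1 = click (success), 0 = no click (failure).
observations = [1, 0, 0, 1, 0]

for reward in observations:
    if reward == 1:
        alpha += 1  # success: alpha <- alpha + 1
    else:
        beta += 1   # failure: beta <- beta + 1

# The mean of Beta(alpha, beta) is alpha / (alpha + beta).
posterior_mean = alpha / (alpha + beta)
print(alpha, beta, round(posterior_mean, 4))  # 3 4 0.4286
```

After two successes and three failures the posterior is \(\text{Beta}(3, 4)\), whose mean \(3/7\) sits slightly above the raw success rate of \(2/5\) because the prior still contributes one pseudo-success and one pseudo-failure.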

Example: Ad Placement Optimization

Scenario

- Imagine you’re running an online platform where you need to decide which of five different ads to show to users. Each ad has an unknown click-through rate (CTR), and your goal is to maximize user engagement by choosing the best-performing ad over time. Initially, you don’t know which ad performs best, so you must balance testing (exploration) with showing the most promising ad (exploitation).

- Environment: The platform where users view ads.

- Context: There’s no direct user-specific context in this basic example, but user behavior influences which ads are more successful over time.

- Arms: Each ad is considered an arm that you can “pull” (i.e., show to users).

- Agent: The Thompson Sampling algorithm selects which ad to show based on sampling from the posterior distributions.

- Policy: The algorithm uses Bayesian updating to refine the estimates for each ad’s CTR, balancing between exploration (testing ads with uncertain performance) and exploitation (showing ads with high expected performance).

- Reward: The reward is whether the user clicks on the ad.

- Over time, Thompson Sampling improves its estimates of each ad’s CTR and begins to focus on the ads that are most likely to generate clicks.

Code Example

- In this example, we simulate the click-through rates (CTR) for five ads, and we will observe how Thompson Sampling updates its belief over time to estimate the true CTR for each ad.

```python
import numpy as np
import matplotlib.pyplot as plt

# Number of arms (ads)
n_arms = 5

# True probability of success (CTR) for each arm (unknown to the algorithm)
true_probs = np.array([0.05, 0.1, 0.15, 0.2, 0.25])

# Number of rounds (decisions)
n_rounds = 1000

# Initialize counters for each arm: number of pulls and successes
n_pulls = np.zeros(n_arms)
n_successes = np.zeros(n_arms)

# Store history for analysis
history = {'rounds': [], 'chosen_arm': [], 'reward': [], 'estimated_probs': []}

# Thompson Sampling main loop
for t in range(n_rounds):
    # Sample from the posterior Beta distribution for each arm
    sampled_probs = np.random.beta(n_successes + 1, n_pulls - n_successes + 1)

    # Choose the arm with the highest sampled probability
    chosen_arm = np.argmax(sampled_probs)

    # Simulate pulling the chosen arm and observe the reward (click or no click)
    reward = np.random.binomial(1, true_probs[chosen_arm])

    # Update counters for the chosen arm
    n_pulls[chosen_arm] += 1
    n_successes[chosen_arm] += reward

    # Store history for analysis
    history['rounds'].append(t)
    history['chosen_arm'].append(chosen_arm)
    history['reward'].append(reward)
    history['estimated_probs'].append(n_successes / np.where(n_pulls == 0, 1, n_pulls))  # Avoid division by zero

# Calculate final estimated probabilities of success (CTR) for each arm
estimated_probs = n_successes / np.where(n_pulls == 0, 1, n_pulls)

print("True CTRs:", true_probs)
print("Estimated CTRs after 1000 rounds:", estimated_probs)

# Plot estimated probabilities over time
plt.figure(figsize=(10, 6))
for i in range(n_arms):
    estimates = [est[i] for est in history['estimated_probs']]
    plt.plot(estimates, label=f'Arm {i} (True CTR={true_probs[i]})')

plt.title('Thompson Sampling: Estimated CTRs Over Time')
plt.xlabel('Rounds')
plt.ylabel('Estimated Probability of Success (CTR)')
plt.legend()
plt.show()
```

Output Example

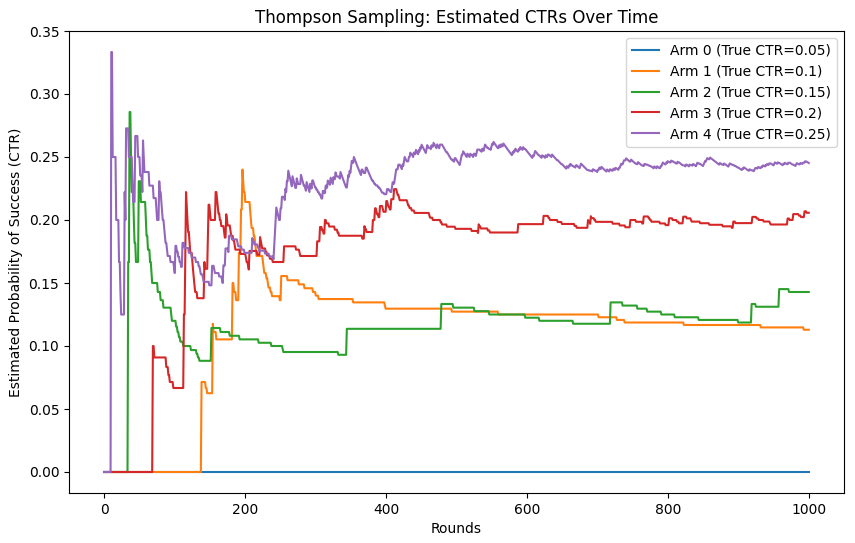

True CTRs: [0.05 0.1 0.15 0.2 0.25]

Estimated CTRs after 1000 rounds: [0. 0.11290323 0.14285714 0.20571429 0.24522761]

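- In the final output, the estimated CTRs after 1,000 rounds closely match the true CTRs for the ads that were shown often (for example, about 0.245 versus 0.25 for the best ad). The estimate for the weakest ad (0 versus a true CTR of 0.05) is less accurate, most likely because Thompson Sampling stopped showing it early and collected very few observations for it. This is the intended behavior: the algorithm spends its impressions homing in on the most promising ads rather than estimating every ad precisely.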

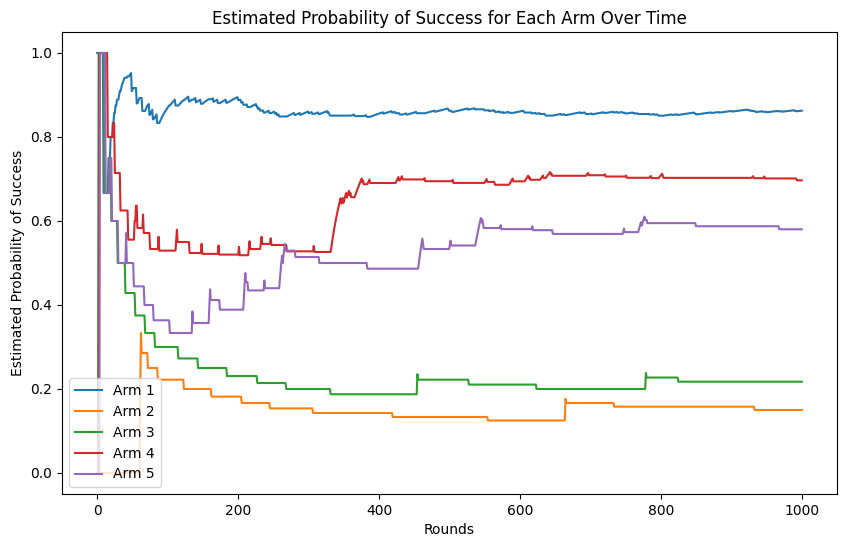

Plot Analysis

- The plot shows how the estimated CTRs for each ad change over time. In the beginning, the estimates fluctuate significantly as the algorithm explores different arms. Over time, as more data is collected, the estimates for the frequently shown ads stabilize around their true CTRs.

Why This Works

- Exploration and Exploitation Balance: Thompson Sampling uses the uncertainty in its posterior estimates to explore uncertain arms (ads) and exploit those with higher sampled success probabilities. This balance allows the algorithm to test new options while focusing on the most promising ones.

- Bayesian Updating Efficiency: The Beta distribution allows for simple and effective updates based on observed successes and failures, adapting the model as more data is collected. The algorithm quickly learns which ads perform better without completely abandoning less-explored options.

- Thompson sampling helps identify the best-performing ads over time, optimizing for long-term rewards by making better choices with increasing confidence.

Upper Confidence Bound (UCB)

- Upper Confidence Bound (UCB) algorithms follow the principle of optimism in the face of uncertainty. This means that the algorithm not only considers the average reward of each arm but also factors in the uncertainty of that estimate. By doing so, it encourages exploration of arms with high uncertainty.

- Exploration-Exploitation Balance: UCB constructs an optimistic estimate of the reward for each arm by adding a confidence term to the estimated reward. The confidence term for an arm shrinks as that arm is played more often (since uncertainty reduces with more data). Therefore, early in the process, the algorithm explores arms more aggressively, while over time, it shifts towards exploitation.

- Mathematical Formulation: Let \(\hat{\mu}_i\) represent the estimated mean reward for arm \(i\) after \(T_i\) plays. UCB chooses the arm with the highest upper confidence bound: \(\text{UCB}_i(t) = \hat{\mu}_i + \sqrt{\frac{2 \log t}{T_i}}\)

- where \(t\) is the total number of plays so far and \(T_i\) is the number of times arm \(i\) has been played. The square-root term is the exploration bonus (the half-width of the confidence interval): it is large for rarely played arms and shrinks as \(T_i\) grows, which steers exploration toward less-tested arms.
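To make the formula concrete, here is a small worked sketch that evaluates \(\text{UCB}_i(t)\) for three arms. The means and play counts are hypothetical values chosen for illustration.

```python
import numpy as np

# Hypothetical statistics after t = 100 total plays across three arms.
t = 100
mean_reward = np.array([0.50, 0.40, 0.45])  # estimated mean reward per arm
plays = np.array([60, 10, 30])              # T_i: number of times each arm was played

bonus = np.sqrt(2 * np.log(t) / plays)      # exploration bonus, larger for rarely played arms
ucb = mean_reward + bonus
chosen_arm = int(np.argmax(ucb))

print("Bonus:", np.round(bonus, 3))
print("UCB:  ", np.round(ucb, 3))
print("Chosen arm:", chosen_arm)
```

In this example the arm at index 1 is selected even though it has the lowest estimated mean: it has been played only 10 times, so its exploration bonus outweighs the gap in estimated reward.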

Example: Ad Placement Optimization

Scenario

- Imagine you are optimizing ad placement across five different websites. Each website has a different, unknown probability of a user clicking the ad. Your goal is to figure out which website generates the highest click-through rate (CTR) over time while balancing exploration (trying different websites) and exploitation (continuing to show ads on websites that perform well). The UCB algorithm helps you make this decision by considering both the estimated performance of each website (arm) and the uncertainty about that estimate.

Code Example

```python
import numpy as np
import matplotlib.pyplot as plt

# Number of arms (websites in this case)
n_arms = 5

# True click-through rates for each website (unknown to the algorithm)
true_probs = np.random.rand(n_arms)

# Number of rounds (ad impressions)
n_rounds = 1000

# Initialize counters for each arm
n_pulls = np.zeros(n_arms)
n_successes = np.zeros(n_arms)
estimated_probs_over_time = []

# UCB main loop
for t in range(1, n_rounds + 1):
    # Calculate the upper confidence bound for each arm
    # (the small constant avoids division by zero for arms not yet pulled)
    ucb_values = n_successes / (n_pulls + 1e-6) + np.sqrt(2 * np.log(t) / (n_pulls + 1e-6))

    # Choose the arm with the highest UCB
    chosen_arm = np.argmax(ucb_values)

    # Simulate the reward (click or no click) for the chosen arm
    reward = np.random.binomial(1, true_probs[chosen_arm])

    # Update the counters for the chosen arm
    n_pulls[chosen_arm] += 1
    n_successes[chosen_arm] += reward

    # Store estimated probabilities for plotting
    estimated_probs_over_time.append(n_successes / (n_pulls + 1e-6))

# Calculate final estimated probabilities of success for each arm
estimated_probs = n_successes / n_pulls
print("Estimated Probabilities of Success:", estimated_probs)

# Plot the evolution of the estimated probabilities of success over time
plt.figure(figsize=(10, 6))
for i in range(n_arms):
    plt.plot([est[i] for est in estimated_probs_over_time], label=f"Arm {i + 1}")

plt.xlabel("Rounds")
plt.ylabel("Estimated Probability of Success")
plt.title("Estimated Probability of Success for Each Arm Over Time")
plt.legend()
plt.show()
```

Output Example

- After running the code, you will see the final estimated probabilities for each arm (website) printed out:

Estimated Probabilities of Success: [0.86269071 0.15 0.2173913 0.69677419 0.58024691]

- The output shows the algorithm’s estimates of the click-through rate (CTR) for each website after 1,000 rounds of ad impressions.

Plot Analysis

- The plot below shows the estimated probability of success for each arm (website) over time. Initially, the algorithm explores all arms, so the estimated probabilities fluctuate significantly. As more rounds occur, the UCB algorithm becomes more confident about the true performance of each arm, and the estimates stabilize. Over time, arms with lower estimated CTRs are pulled less frequently, while arms with higher CTRs are pulled more often. The arms’ probabilities begin to converge towards their true values as more data is gathered.

Why This Works

- The UCB algorithm works in this scenario because it balances exploration and exploitation effectively. Early on, it explores different arms to learn about their performance, pulling arms with higher uncertainty more often. As the algorithm gathers more data, it shifts toward exploiting the best-performing arms while still occasionally exploring others to avoid getting stuck in suboptimal decisions. This makes UCB well-suited for ad placement optimization, where you need to quickly identify which placements are most effective. Note that this standard form of UCB assumes each arm’s click probability stays fixed; it is not designed to track changes in user behavior over time.

Comparative Analysis of Multi-Armed Bandit Algorithms

- MAB algorithms address a fundamental challenge in decision-making problems: the exploration-exploitation trade-off. In this setting, a learner repeatedly chooses from a set of actions (or arms) and receives rewards from the environment in response to those actions. The learner’s goal is to maximize cumulative rewards over time. However, the learner faces the dilemma of whether to explore—gather more information about less-tested actions—or to exploit—capitalize on the actions that are known to yield high rewards based on past experience.

- Several well-known strategies have been developed to address this challenge, each balancing exploration and exploitation differently. The most prominent algorithms include Epsilon-Greedy, Thompson Sampling, and Upper Confidence Bound (UCB). Selecting the right MAB algorithm is crucial to achieving good outcomes, and the choice largely depends on the specific application and environment. Relevant factors include the complexity of the environment and how frequently and how promptly feedback arrives (immediate versus delayed).

- As explained in Performance Comparison, while Epsilon-Greedy is favored for its simplicity and ease of implementation, it often struggles in more complex environments. Thompson Sampling is particularly powerful in environments with delayed or uncertain feedback, allowing for continued exploration and typically achieving better long-term performance. On the other hand, UCB offers a more structured approach, excelling in cases where feedback is timely and frequent.

Epsilon-Greedy

- The Epsilon-Greedy Algorithm is a simple yet effective method for managing the exploration-exploitation trade-off. It works by choosing an action randomly with a probability of \(\epsilon\) (exploration) and selecting the action with the highest observed reward with a probability of \(1 - \epsilon\) (exploitation). The parameter \(\epsilon\) controls how much the algorithm explores versus exploits, and it can be fixed or decayed over time. A higher value of \(\epsilon\) leads to more exploration, whereas a lower value promotes exploitation.

While straightforward, the epsilon-greedy approach is often unguided in its exploration since it selects actions uniformly at random during exploration phases, which can lead to suboptimal performance compared to more sophisticated methods such as Thompson Sampling and UCB. It is, therefore, also referred to as a “naive exploration” algorithm.

Thompson Sampling

- Thompson Sampling is a Bayesian approach to the MAB problem. It models the reward distribution of each action probabilistically and samples from the posterior distribution to select actions. Actions with higher posterior reward estimates are more likely to be chosen, which creates a balance between exploration and exploitation based on the uncertainty of the model.

Thompson Sampling is particularly adept at handling uncertain environments because it samples actions based on the estimated likelihood of them being optimal. This allows it to explore efficiently while also maximizing exploitation based on learned information. It is, therefore, also referred to as a “Bayesian probability matching” algorithm.

Upper Confidence Bound

- UCB is a more advanced technique that selects actions based on an optimism-in-the-face-of-uncertainty principle. In this approach, the learner maintains confidence intervals around the estimated rewards of each action, reflecting the uncertainty of those estimates. UCB chooses the action with the highest upper confidence bound, thus favoring actions with less-known or under-explored reward distributions.

UCB encourages exploration of arms with higher uncertainty while still promoting exploitation of arms that have demonstrated high rewards, leading to better-guided exploration than the epsilon-greedy method. UCB is particularly effective because the exploration bonus for an action diminishes as that action is tried more often, making it a robust approach for many scenarios.

Performance Comparison

- According to a comparative analysis by Eugene Yan in his blog post “Bandits for Recommender Systems” and supported by research from audited studies, UCB and Thompson Sampling generally outperform epsilon-greedy. The primary shortcoming of epsilon-greedy is its unguided exploration, which, while effective in simple environments, becomes inefficient in more complex settings. In contrast, UCB and Thompson Sampling use more intelligent mechanisms to explore actions with higher uncertainty, resulting in lower regret over time.

- When considering delayed feedback, a common occurrence in real-world applications such as recommender systems, Thompson Sampling shows superior performance over UCB. Delayed feedback occurs when user interactions are not immediately processed due to resource or runtime constraints. In these cases, UCB’s deterministic selection of actions may lead to repeated choices of the same action until new feedback is incorporated, which limits exploration. Thompson Sampling, on the other hand, continues to explore because it selects actions stochastically, even without immediate reward updates. Studies by Yahoo and Deezer demonstrated that this wider exploration leads to better outcomes in delayed feedback scenarios.

- In Yahoo’s simulations with varying update delays of 10, 30, and 60 minutes, Thompson Sampling remained competitive across all delays. While UCB performed better with short delays (e.g., 10 minutes), its performance deteriorated with longer delays, being outpaced by Thompson Sampling at 30- and 60-minute delays. These findings suggest that stochastic policies like Thompson Sampling are more robust in environments where feedback is delayed because they maintain a degree of exploration, ensuring that learning continues even without timely updates.
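The delayed-feedback effect described above can be illustrated with a small sketch. It is a simplified illustration, not a reproduction of the Yahoo or Deezer experiments: the counts are hypothetical, and the statistics (including the round counter `t`) are assumed to stay frozen for a whole batch of decisions because no feedback has been processed yet.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical frozen statistics: feedback is delayed, so these values do not
# change during the batch of decisions below.
successes = np.array([8, 10, 9])
pulls = np.array([40, 40, 40])
t = int(pulls.sum())
batch_size = 200

# UCB is deterministic given fixed statistics: the same arm wins every time.
ucb = successes / pulls + np.sqrt(2 * np.log(t) / pulls)
ucb_choices = np.full(batch_size, np.argmax(ucb))

# Thompson Sampling redraws from each arm's Beta posterior at every decision,
# so its choices vary even though the statistics are frozen.
samples = rng.beta(successes + 1, pulls - successes + 1, size=(batch_size, 3))
ts_choices = samples.argmax(axis=1)

print("Distinct arms chosen by UCB:", np.unique(ucb_choices).size)
print("Distinct arms chosen by TS: ", np.unique(ts_choices).size)
```

Under these assumptions UCB sends the entire batch to one arm, while Thompson Sampling spreads the batch across arms roughly in proportion to how likely each is to be the best, which is why it keeps learning between updates.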

Advantages

- MABs offer significant advantages over traditional batch machine learning and A/B testing methods, particularly in decision-making scenarios where optimizing choices based on limited feedback is crucial. MAB algorithms excel at balancing the trade-off between exploration (gathering new information) and exploitation (leveraging known information to maximize rewards). Unlike A/B testing, which requires extensive data collection and waiting for test results, MAB continuously learns and adapts its recommendations in real-time, minimizing regret by optimizing decisions as data comes in.

- This makes MAB especially effective in situations with limited data, such as long-tail or cold-start scenarios, where traditional batch recommenders might favor popular items over lesser-known yet potentially relevant options. By continuously adapting, MAB can deliver better performance in dynamic environments, ensuring that recommendations remain optimal even with evolving user preferences and behaviors.

- Here are the key advantages of MAB, specifically focusing on personalization and online testing.

Personalization

- One of the most significant advantages of MAB is its ability to dynamically adjust decisions in real-time, making it particularly useful for personalized experiences. This adaptability allows systems to tailor outcomes for individual users or segments of users based on their unique behaviors and preferences.

Real-Time Adaptation

- MAB algorithms adapt as new data arrives, which is crucial for personalization in dynamic environments. Unlike traditional A/B testing, where changes are made only after a full testing cycle, MAB can update preferences continuously. For example, in a personalized recommendation system (e.g., Netflix, Amazon), a MAB approach adjusts which recommendations to present to a user based on what has previously been successful for that specific user, making the system more responsive and personal.

User-Specific Targeting

- Personalization requires systems to understand users’ diverse preferences and behaviors. With MAB, each user’s actions provide feedback that is fed into the algorithm, which then adjusts the rewards for different options (e.g., recommendations, ads, or content delivery). Over time, the system can become increasingly accurate at predicting which choices will yield the highest engagement or satisfaction for each user, as it learns from individual feedback instead of using broad averages.

Avoidance of Segmentation Bias

- In traditional methods, users are often segmented into predefined groups for personalization purposes, which can introduce biases or lead to over-generalization. MAB allows personalization without requiring these rigid segments. By treating each user interaction as a unique opportunity for learning, MAB algorithms personalize decisions at the individual level without assuming that all users within a segment will behave the same way.

Online Testing

- MAB excels at online testing because it continually balances the exploration of new options with the exploitation of options already known to perform well. This leads to faster convergence on the best-performing option, which is particularly advantageous in environments where rapid iteration and real-time feedback are essential, such as web optimization or online advertising.

Faster Experimentation

- In traditional A/B testing, each variation is tested over a fixed period, after which statistical analysis is performed to determine the winner. This method can be slow and resource-intensive, especially if many variations need to be tested. MAB, on the other hand, dynamically reallocates traffic to the better-performing options during the testing process. As soon as one option shows superiority, more traffic is directed towards it, reducing the time needed to reach conclusive results. This leads to faster iteration cycles and quicker optimization of online experiments.
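
- The traffic reallocation described above can be sketched with a small simulation. This is a minimal illustration, assuming Bernoulli (convert / no convert) rewards and Beta-Bernoulli Thompson Sampling as the bandit policy; the conversion rates, the seed, and the function name `thompson_allocation` are hypothetical values chosen for the example, not taken from any real experiment.

```python
import random

def thompson_allocation(true_rates, rounds, seed=0):
    """Simulate Beta-Bernoulli Thompson Sampling and return pulls per variant."""
    rng = random.Random(seed)
    n_arms = len(true_rates)
    successes = [0] * n_arms
    failures = [0] * n_arms
    pulls = [0] * n_arms
    for _ in range(rounds):
        # Draw one plausible conversion rate per variant from its Beta posterior
        # (uniform Beta(1, 1) prior, hence the +1 on both parameters).
        samples = [
            rng.betavariate(successes[a] + 1, failures[a] + 1)
            for a in range(n_arms)
        ]
        arm = samples.index(max(samples))
        # Simulated user response for the variant that was shown.
        converted = rng.random() < true_rates[arm]
        pulls[arm] += 1
        if converted:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls

# Hypothetical conversion rates for three variants.
TRUE_RATES = [0.02, 0.05, 0.15]
pulls = thompson_allocation(TRUE_RATES, rounds=5000)
print(pulls)
```

- In a run like this, most of the 5,000 impressions should end up on the third variant, whereas a fixed three-way A/B/C split would send roughly a third of the traffic to each variant for the whole test.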

Improved Resource Allocation

- MAB ensures that the majority of resources are allocated to the best-performing choices during the testing phase. Instead of waiting until the end of a test cycle to determine which option is superior (as in A/B testing), MAB continuously shifts resources away from poorly performing options. This means that even during the testing phase, the system is optimizing performance rather than merely collecting data, which can lead to better overall outcomes.

Reduction in Opportunity Cost

- A/B tests can sometimes lock a system into a lengthy exploration phase where a suboptimal variant is displayed to users for a long period. With MAB, the algorithm reduces this opportunity cost by minimizing the time that suboptimal choices are tested. Because MAB adapts dynamically, underperforming options are shown less frequently, reducing the potential negative impact on business performance (e.g., fewer clicks, lower conversion rates) compared to traditional A/B tests.
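
- The opportunity cost above can be made concrete with a small calculation. The sketch below assumes two variants with known conversion rates and compares an even A/B split against a hypothetical adaptive split; the rates and the 1,000 / 9,000 allocation are illustrative assumptions, not measured results.

```python
def expected_regret(pulls, rates):
    """Expected conversions lost versus always showing the best variant."""
    best = max(rates)
    return sum(n * (best - r) for n, r in zip(pulls, rates))

# Hypothetical conversion rates for variants A and B.
RATES = [0.10, 0.12]

ab_split = [5000, 5000]        # fixed 50/50 A/B test over 10,000 users
adaptive_split = [1000, 9000]  # assumed allocation after a bandit shifts traffic

ab_cost = expected_regret(ab_split, RATES)
adaptive_cost = expected_regret(adaptive_split, RATES)
print(ab_cost, adaptive_cost)
```

- Under these assumptions the even split gives up about 100 expected conversions, while the adaptive split gives up about 20, because far fewer users see the weaker variant.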

Multivariate and Multi-Objective Optimization

- MAB is also well-suited for multivariate testing (testing multiple factors simultaneously) and can be adapted to scenarios with multiple competing objectives (e.g., optimizing for both click-through rates and user satisfaction), typically by combining those objectives into a single reward signal. While traditional A/B testing is often limited to a binary choice between two versions, MAB can handle many different variations simultaneously, adjusting their probabilities of being shown based on real-time feedback. This allows businesses to optimize for more complex goals in a more flexible and granular way.

Summary

The advantages of MAB can be boiled down to the following:

- Personalization:

- Real-Time Adaptation: MAB adjusts decisions dynamically based on real-time feedback, which is critical for personalization.

- User-Specific Targeting: It allows for more accurate user-level targeting by continuously learning from each user’s behavior.

- Avoidance of Segmentation Bias: MAB can personalize without the need for broad, predefined user segments, leading to more accurate and individualized experiences.

- Online Testing:

- Faster Experimentation: MAB accelerates the testing process by shifting traffic towards better-performing options as soon as they show superiority.

- Improved Resource Allocation: By dynamically reallocating resources away from poor-performing options, MAB ensures that the testing phase is more efficient.

- Reduction in Opportunity Cost: The algorithm minimizes the exposure to suboptimal choices, reducing the potential negative impact on business performance.

- Multivariate and Multi-Objective Optimization: MAB excels in environments where multiple factors or goals are being tested and optimized simultaneously.

In conclusion, MAB is a flexible and powerful approach, particularly suited for applications where personalization and online testing are critical. It enables better, faster, and more adaptive decision-making than traditional methods, making it an ideal solution for dynamic environments such as e-commerce, digital advertising, content recommendation, and more.

Disadvantages

- While MABs are a powerful tool for decision-making across various contexts, they come with notable disadvantages and limitations, especially in complex, dynamic, or large-scale environments. These models often struggle to incorporate context, adapt to changing environments, manage delayed or sequential rewards, or balance multiple objectives, which limits their applicability in many real-world scenarios. Additionally, scalability issues and challenges related to exploration hinder their effectiveness when faced with a vast number of possible actions or arms. These limitations necessitate careful consideration and adaptation to specific applications, as outlined in more detail below.

Simplicity and Assumptions

- Limited Contextual Information: Traditional MAB algorithms assume that each arm's reward is drawn from its own distribution, independent of the other arms and of any side information. This approach does not take into account context or other variables that might affect the outcome, making these algorithms unsuitable for situations where rewards are influenced by external factors (e.g., user characteristics in online recommendation systems). This limitation does not apply to contextual MABs, which are specifically designed to incorporate external factors such as user characteristics or environmental conditions into the decision-making process. In contextual MABs, the reward depends on both the selected action and the context, allowing for more tailored and effective recommendations.

- Fixed Arms Assumption: The classical MAB framework assumes that the set of arms is fixed. In many real-world applications, the set of available actions may change over time (e.g., new products in an e-commerce setting). MAB algorithms may struggle with dynamically evolving action spaces.

Exploration vs. Exploitation Balance

- Delayed Exploration Payoff: While MAB algorithms try to balance exploration (testing new arms) and exploitation (using known, rewarding arms), they often struggle to explore effectively in situations where exploration does not yield immediate rewards. In some cases, the optimal arm may not be discovered until late in the game because exploration wasn’t aggressive enough.

- Over-Exploitation Risk: MAB algorithms may become overly exploitative, especially with greedy strategies (e.g., pure greedy selection, or epsilon-greedy with a very small or rapidly decaying epsilon). Once a seemingly good arm is found, the algorithm may over-exploit it, neglecting other potentially more rewarding arms that have not been sufficiently explored.
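
- The over-exploitation risk can be illustrated with a small epsilon-greedy simulation. This is a sketch under stated assumptions: Bernoulli rewards, all value estimates initialized to zero, ties broken in favor of the lowest arm index, and hypothetical reward rates. With `epsilon=0.0` the policy is purely greedy.

```python
import random

def epsilon_greedy(true_rates, rounds, epsilon, seed=0):
    """Run epsilon-greedy on Bernoulli arms; return (pull counts, value estimates)."""
    rng = random.Random(seed)
    n_arms = len(true_rates)
    counts = [0] * n_arms
    values = [0.0] * n_arms  # running mean reward per arm
    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: uniform random arm
        else:
            # Exploit: highest estimate, lowest index wins ties.
            arm = max(range(n_arms), key=lambda a: values[a])
        reward = 1.0 if rng.random() < true_rates[arm] else 0.0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]
    return counts, values

# Hypothetical reward rates; the last arm is the best one.
TRUE_RATES = [0.05, 0.10, 0.20]
greedy_counts, _ = epsilon_greedy(TRUE_RATES, rounds=2000, epsilon=0.0)
eps_counts, _ = epsilon_greedy(TRUE_RATES, rounds=2000, epsilon=0.1)
print(greedy_counts, eps_counts)
```

- With these initial estimates and this tie-breaking rule, the purely greedy run never leaves the first arm, even though it is the worst of the three, while the run with `epsilon=0.1` tries every arm. Other initializations (for example, optimistic initial values) would change the greedy behavior, so this is one failure mode rather than a general result.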

Suboptimal Performance in Complex Environments

- Non-Stationary Environments: Classic MAB assumes that the reward distribution of each arm is stationary, meaning that the probabilities of rewards do not change over time. In real-world applications (e.g., advertising or user preferences), this assumption is often violated as environments can be dynamic. MAB algorithms may fail to adapt to these changing conditions, leading to suboptimal decision-making. This assumption is not applicable to adversarial MABs, where the rewards are controlled by an adversary and can change arbitrarily over time. In adversarial settings, there is no underlying stationary distribution, so algorithms designed for this scenario (like EXP3) focus on minimizing regret against the worst-case outcomes, rather than learning from stationary or predictable patterns.

- Delayed Rewards: In many scenarios, rewards are not immediate and may be delayed (e.g., in advertising campaigns or medical trials). Traditional MAB algorithms are not designed to handle delayed feedback, which can make it hard for them to attribute which arm led to which outcome.
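
- To see why the stationarity assumption matters, the sketch below compares two ways of estimating a single arm's value when its reward rate drops abruptly. The constant step-size (recency-weighted) update is a commonly used mitigation that is not described in this article; the reward sequence and the `step_size` value are illustrative assumptions.

```python
def sample_average(rewards):
    """Plain running mean: every past reward carries equal weight."""
    estimate, n = 0.0, 0
    for r in rewards:
        n += 1
        estimate += (r - estimate) / n
    return estimate

def recency_weighted(rewards, step_size=0.2):
    """Constant step-size update: recent rewards carry more weight."""
    estimate = 0.0
    for r in rewards:
        estimate += step_size * (r - estimate)
    return estimate

# Hypothetical arm that pays out for 100 rounds, then stops paying.
rewards = [1.0] * 100 + [0.0] * 20

avg = sample_average(rewards)
recent = recency_weighted(rewards)
print(avg, recent)
```

- After the change, the plain average still rates the arm at roughly 0.83, while the recency-weighted estimate has dropped close to 0.01. An algorithm relying on the plain average would keep favoring an arm that no longer pays.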

Scalability Issues

- High Number of Arms: As the number of arms increases, especially in high-dimensional action spaces, MAB algorithms can suffer from scalability issues. With a large number of arms, the exploration phase may take a prohibitively long time, causing the algorithm to perform poorly in the short term before identifying the best arms. This is particularly problematic in settings like online recommendations or personalized treatments, where the number of options (arms) can be vast.

- Computational Complexity: Some advanced MAB algorithms, such as those involving Bayesian updates (e.g., Thompson Sampling), can become computationally expensive as the number of arms and the complexity of the problem increase, particularly when the posterior has no closed form and must be approximated. This limits their practical applicability in large-scale problems. UCB (Upper Confidence Bound) algorithms, on the other hand, tend to have lower computational cost since they do not rely on Bayesian posterior updates. Instead, UCB balances exploration and exploitation using simple confidence bounds computed from each arm's pull count and observed mean reward, making it more scalable for large-scale problems where computational efficiency is critical.
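
- As an illustration of how lightweight the UCB computation is, here is a minimal sketch of the standard UCB1 index, which needs only each arm's mean reward, its pull count, and the total number of pulls. The function names and the example numbers are hypothetical.

```python
import math

def ucb1_index(mean, pulls, total_pulls):
    """UCB1 score: empirical mean plus an exploration bonus."""
    if pulls == 0:
        return math.inf  # force every arm to be tried at least once
    return mean + math.sqrt(2.0 * math.log(total_pulls) / pulls)

def select_arm(means, counts):
    """Pick the arm with the highest UCB1 score (lowest index wins ties)."""
    total = sum(counts)
    scores = [ucb1_index(m, n, total) for m, n in zip(means, counts)]
    return scores.index(max(scores))

# Arm 0 looks better on average but has been pulled 100 times;
# arm 1 has been pulled only once, so its confidence bound is much wider.
chosen = select_arm(means=[0.5, 0.4], counts=[100, 1])
print(chosen)
```

- Here the rarely pulled arm is selected because its exploration bonus outweighs the small gap in mean reward. Each decision costs a constant amount of arithmetic per arm, with no sampling step.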

Cold Start Problem

- Insufficient Initial Data: MAB algorithms require some initial exploration to learn which arms are the most promising. In situations where you start with no information (cold start), the initial phase of learning can be inefficient, resulting in suboptimal performance until enough data has been gathered. This can be problematic in environments where poor initial decisions can have significant consequences (e.g., medical or financial settings).

Poor Handling of Variability

- High Variance in Rewards: If the reward distributions of different arms have high variance, traditional MAB algorithms may be slow to learn the true value of each arm. For example, in environments where some arms produce occasional, but very high rewards (high variance), MAB algorithms might struggle to differentiate between genuinely good arms and those that provide high rewards only sporadically, potentially leading to poor decisions. In adversarial bandits, where the reward structure can change dynamically due to an adversary, this high variance makes it even harder for the algorithm to adapt, as the adversary could exploit the uncertainty in high-variance arms to mislead the learning process.

- Reward Skewness: Similarly, MAB algorithms may struggle when reward distributions are heavily skewed. For example, if some arms provide rewards that are often small but occasionally large, algorithms that focus too much on average rewards may miss out on arms with long-tail payoffs. In an adversarial bandit setting, skewed rewards can be particularly problematic because an adversary could manipulate the distribution to appear deceptively unpromising in the short term, encouraging the algorithm to abandon potentially high-reward arms, leading to suboptimal long-term performance.

Incompatibility with Complex Reward Structures

- Multi-Objective Optimization: MAB algorithms are typically designed to optimize a single reward metric. However, in many real-world problems, there may be multiple, potentially conflicting objectives (e.g., maximizing user engagement while minimizing costs). Standard MAB formulations are not well-suited to handle these multi-objective problems.

- Delayed or Sequential Rewards: In many real-world applications, rewards may come in sequences or with delays. For instance, actions taken now may influence future rewards in complex ways, such as in reinforcement learning scenarios. Traditional MAB models, which assume that actions lead to immediate rewards, struggle with these more complex, temporally dependent reward structures. However, stochastic policies like Thompson Sampling are more robust in environments where feedback is delayed because they maintain a degree of exploration, ensuring that learning continues even without timely updates. This allows the system to keep trying new actions while awaiting feedback, reducing the risk of prematurely converging on suboptimal choices.
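
- The robustness argument for stochastic policies under delayed feedback can be sketched as follows. The example assumes that no reward updates arrive during a batch of 200 decisions, so the arm statistics stay frozen. It then compares Thompson Sampling with a simple greedy posterior-mean policy, used here as a stand-in for a deterministic policy. The success and failure counts are hypothetical.

```python
import random

def thompson_batch(successes, failures, batch_size, rng):
    """Choose arms for a whole batch by sampling from frozen Beta posteriors."""
    choices = []
    for _ in range(batch_size):
        samples = [
            rng.betavariate(s + 1, f + 1)
            for s, f in zip(successes, failures)
        ]
        choices.append(samples.index(max(samples)))
    return choices

def greedy_batch(successes, failures, batch_size):
    """Deterministic stand-in: always pick the highest posterior mean."""
    means = [(s + 1) / (s + f + 2) for s, f in zip(successes, failures)]
    best = means.index(max(means))
    return [best] * batch_size

rng = random.Random(0)
# Hypothetical statistics observed before feedback stopped arriving.
successes = [3, 2, 1]
failures = [7, 8, 9]

ts_choices = thompson_batch(successes, failures, 200, rng)
greedy_choices = greedy_batch(successes, failures, 200)
print(sorted(set(ts_choices)), sorted(set(greedy_choices)))
```

- With frozen statistics, the deterministic policy repeats the same arm for the entire batch, whereas Thompson Sampling keeps spreading some traffic across arms whose posteriors still overlap. That is why it continues to gather useful data while updates are pending.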

Suboptimal Performance in Collaborative Environments