Meta

- Overview

- GenAI 1point3acres

- GenAI 1point3acres

- Interview questions from earlier

- Experience

- Technical Breadth

- People management

- Agility

- Motivation

- Questions for the manager

- HOld

- RAG

- Onsite

- dig Meta

- AI coding and onsite:

- Yashar Topics

Overview

This chat focuses on your past experience there’s not much you need to do to prepare. However, it would be advisable to pull your thoughts together ahead of time, and here’s the structure you can expect:

- Experience - covering the type of roles you’ve held, the breadth and depth of responsibility, and size of projects managed. This is also your opportunity to showcase your content expertise in your field of work

- Technical breadth and depth - At FB, we emphasize collaboration across multiple teams and individuals, so be able to talk about how your work has spanned multiple teams and/or iterations

- TL (project management) skills - including technical mentoring – think about your role in the setup, execution and delivery of a project

- People management skills - including upward influencing, mentorship, empathy, people growth etc.

- Agility - Indicator of your growth opportunities, as measured through your capability and willingness to absorb knowledge from your experience, and use these lessons to become even more effective in new and different situations

- Motivation - What would you be interested in working on at Facebook, or why are you interested in working to make Facebook better? Do you exhibit a strong general drive and desire to progress as a leader?

-

Meta/Barlas things: IR, DPR, Llama 2, GenAI, LoRA

- Also, don’t be afraid to be vulnerable and talk about difficult subjects, show senior level leadership qualities as this is a senior role.

GenAI 1point3acres

Certainly! Here’s the text with added spaces for readability:

GenAI 1point3acres

- Handwritten logistic/linear regression forward and backward calculation + common optimizer

- Manual data manipulation Hand-made simple machine learning pipeline (data + model + verification) - baidu 1point3acres Hand-made simple model (LR forward and reverse)

- Design a personalized location recommendation system

- Testing regularization, evaluation metrics

- Basically, it is to explain the process of recommendation from candidate generation to ranking which is e5

- What I encountered was to write an online code to calculate the mean and variance and a calculation about average precision AUC questions Hello! The content hidden in this post requires a score higher than 188 to be viewed. Your current score is 0. Use VIP to instantly unlock reading rights or view other ways to earn points.

- The first question is to give you a list such as and find the sum. For each number in this array when calculating the sum, it must be multiplied by the series of the array. For example, the sum of the example array = 11 + 21.

- Design a harmful content detection system. Can you share the focus process? Thanks (check dataset).

- The meta research scientist in the interview machine learning store: Likou wants a long time logistics. The first question used BFS and later the interviewer was asked to use DFS. VO: a total of four rounds 2 coding + ML design + BQ advertise. In the first round of ml design, a Korean girl designed a Facebook event predictor. I asked how to collect data, how to do feature engineering, what model to use, how to evaluate, how to improve, and some basic ML questions. In the second round of coding, I encountered an unreliable person.

- Frequently asked questions: Write K-means, write knn, implement a conv2d, write code to calculate precision, recall.

- If it is difficult, look at the pseudo code of the paper and write a python code to implement it. It simply allows you to write a linear regression and knn or something. I have seen similar ones in the field. You can search for them.

- Implement batch norm.

- The two questions I encountered are: 1. Top K frequent words (file reading and writing, a common follow-up is what if the file is too large and cannot be read at once. Since I have already considered it when stating the solution. So the interviewer didn’t ask about follow-up and asked me to analyze the complexity and correctness). 2. Generate mines, follow-up is minesweeping (the first question is very simple, the second question is lc529 classic BFS).

- Yi Erjiu Liu Shenliu (Follow up: how to detect invalid begin/end time) notification pass in the morning and afternoon.

- ML design: Design a classifier for bad ads.

- BQ: A time you had conflict with others, most challenging.

- The focus is on how to train a speech translator. It is probably the questions that a general train model will ask: data, model, loss, evaluation. But I am not familiar with this field so I feel that I can’t answer it well. Then I read the interviewer’s questions. Through experience, I found that the researcher from FAIR is doing research in this area. I guess NLP is very interested in the background of the interviewers, so if you know the name of the interviewer, it is best to look for it and have a preparation direction. I interviewed E5 but was down-leveled. I’m looking to see if I can earn it back.

- They were basically simple ML foundation things such as which metric evaluation to choose, the reasons for dropout in NNet, etc.

- The Indian lady was more than ten minutes late, so she only took one question: merge intervals from two sorted arrays O(n) time complexity. VO: ML system design: Design a simplified ads ranking system; 1 billion daily active users, 2 ads shown to customer. It involves how the specific system pipeline is set up and what components are there. ML research: For Facebook marketplace, how to categorize customers’ posts based on the image and text information.

- Supplementary round of extra noodles ML design: Instagram newsfeed ranking system.

- My understanding is that this user scale needs to use load balancer or Kubernetes to handle user requests. 2 ads shown per user should not care much about pairwise ranking order and need to take the two with the highest absolute value. Ads candidates are also large scale modeling wise, definitely use the funnel approach. Basically, we asked about everything from system pipeline to model to feature and data sample. ML research is about how to process image and text data for classification, not user content recommendation.

Interview questions from earlier

- How to design LLM end to end, query -> song return

- Read Eugene Yan LLM

- Gen AI text diffusion

- prompt, what metadata

- Equation hallucination

- Fine tuning Bert two losses

- Losses evaluation

- Mean average precision at k

- Papers personalizatiom recommender system

- Amazon llm articles

- How do you measure diversity

- do you finetune the LLM or not

- Hallucination vs CT^2

Experience

Amazon Music

- Currently, at Amazon, I lead the Music team for query understanding (map to standard functions, play Music) (finetuned an LLM input text, output is the API call. Trained base LLM, finetuned with API data with human annotations) and personalization.

- So as a user interacts with an Alexa device, say they say “play music”, we need to help understand the request and personalize it with the customers detail.

- Read LLM + Recsys

Alternative use Gorilla esque for LLM to output API

- User prompts

- document with API documentation retrieved via BM25

- Added with the prompt

- outputs the API

Finetuning LLM process

- Data Collection:

- Gather labeled data: Assemble a dataset where each input represents a user’s music-related query, and the corresponding output is the appropriate API function, such as

playMusic()orplayLatestMusic(). - Data augmentation: Increase the variety of your dataset by rephrasing music requests, introducing typical misspellings, or using diverse ways to request music. Fine-tuning a Large Language Model (LLM) like GPT-3 or GPT-4 to emit or generate APIs would require a dataset that contains descriptions or prompts along with their corresponding API structures or responses. The dataset should cover a wide range of use cases, contexts, and scenarios to ensure that the LLM can generalize well to different API requirements. Here’s an illustrative breakdown of what such a dataset might look like:

- Gather labeled data: Assemble a dataset where each input represents a user’s music-related query, and the corresponding output is the appropriate API function, such as

-

Dataset Structure:

The dataset would ideally be structured with input-output pairs, where the input is a description or a task, and the output is the corresponding API structure or response.

{ "input": "Create a REST API endpoint to retrieve user information based on user ID.", "output": "GET /users/:id" }, { "input": "Design an API that allows updating a product's price in an e-commerce system.", "output": "PUT /products/:productId/price" }, ... -

API Types:

Different types of APIs should be covered:

- RESTful APIs: Emphasizing different HTTP methods like GET, POST, PUT, DELETE.

- GraphQL: Queries, mutations, and subscriptions.

- Websockets: How to set up a connection or how messages are sent/received.

- RPC: Procedure calls for certain functions.

-

API Elements:

The dataset should cover different elements associated with APIs:

- Endpoints: Different paths, route parameters.

- Headers: Authorization, content type, etc.

- Parameters: Query parameters, body parameters.

- Responses: Status codes, response bodies, error messages.

-

Complex Scenarios:

It’s not just about endpoints, but also about combining different elements:

{ "input": "Design an API to upload an image, ensure it's in PNG format and the size should not exceed 5MB.", "output": "POST /upload/image\nHeaders: Content-Type: image/png\nConstraints: Max size 5MB" } -

Real-world Examples:

Include real-world scenarios and existing API documentation. This helps the model understand commonly used patterns and best practices in API design.

-

Contextual Cases:

The dataset should also contain examples that require a deeper understanding of context:

{ "input": "Create an endpoint for a blogging platform to allow authors to save drafts.", "output": "POST /blog/drafts" } -

Validation and Errors:

Incorporate examples that handle validation, error messages, and other edge cases:

{ "input": "Design an API to register a user. Ensure the email is valid.", "output": "POST /users/register\nParameters: {email: 'valid-email@example.com', password: 'string'}\nErrors: {status: 400, message: 'Invalid email format'}" } -

Versioning:

Incorporate examples that demonstrate versioning in APIs:

{ "input": "Introduce a version 2 for retrieving user data which also includes their purchase history.", "output": "GET /v2/users/:id/purchase-history" } -

Natural Language Variability:

For better generalization, include various ways of phrasing the same requirement. This ensures that the model can understand diverse prompts.

- Annotations:

For advanced fine-tuning, you can annotate the dataset to specify parts of the API, like “endpoint”, “method”, “parameter”, etc.

To fine-tune an LLM effectively, this dataset should be sufficiently large and diverse. Once the model is trained, rigorous testing and validation are necessary to ensure the generated APIs are accurate, feasible, and secure.

- Preprocessing:

- Tokenize: Break down music queries into tokens, which can be words or subwords.

- Contextualize: Take into account prior context. This might include details like the last song the user listened to or their current mood.

- Use NER: Extract specific entities like song titles, artist names, or genres from the user’s query using Named Entity Recognition. This will help in better understanding and categorizing user requests.

- Fine-tuning:

- Set up the model: Start with an LLM that has pretrained weights.

- Define a task-specific head: For this job, you’d probably want a classification layer where each class matches a different API function.

- Train: Use your music dataset to adapt the model. Adjust settings, like learning rates and batch sizes, when needed.

- Evaluation:

- Validation: Throughout training, check how well the model is doing using a separate validation set.

- Testing: After the fine-tuning is done, evaluate how well the model understands music-related queries using a distinct test set.

- Deployment:

- Once you’re sure the model is reliable, add it to your system. Now, it will figure out user’s music wishes and trigger the right API calls, like

playMusic()orplayLatestMusic().

- Once you’re sure the model is reliable, add it to your system. Now, it will figure out user’s music wishes and trigger the right API calls, like

- Feedback Loop:

- Regularly get feedback on the model’s real-world performance in interpreting music requests.

- Update the model using new data from time to time. This keeps its performance high and helps it stay in tune with changing music tastes or user behaviors.

- Important Points to Remember:

- Compute and Storage Costs: Think about the amount of computer power and storage you’ll need, both for training and for using the LLM.

- Ethical Matters: Make sure your data respects privacy rules. And aim to reduce any biases in the model, even those related to music.

- Versioning: When you make updates, keep track of model versions. This way, you can go back to an older one if a new version causes problems.

- With an LLM that’s been fine-tuned this way, users can tell the system about their music choices in a more natural way. In turn, the system can figure out what they mean and play songs or offer a music experience that fits them just right.

- Validation

- Validate against a schema

- Confidence score

Finer details

In building a music recommendation and playback system:

-

Entity Recognition: The system identifies key details like song names, artist names, and genre to decide the appropriate playlist or station, ensuring a range of songs rather than just one.

-

Intent Classification: It determines user’s request type, e.g., general music playback like “play songs by Adele” versus specific requests such as “play Adele’s latest music.”

-

Context Understanding: Factors such as user’s location, time, holidays, and content preferences (like explicit content) are considered.

-

Process Overview:

- Intent Recognition: Determines the primary user action, like “play music.”

- Slot Filling: Extracts details like song (“Hello”), artist (“Adele”), playback device (“living room speaker”), and volume (“60%”).

- Argument Building: Uses extracted details to form function arguments, e.g.,

track="Hello", artist="Adele". - Query Resolution: The system matches the intent and details to an API function:

playMusic(track="Hello", artist="Adele", device="living room speaker", volume=60). - Handling Incomplete Queries: If a query lacks details, the system asks follow-up questions, like clarifying the artist for a song title.

- Execution: The determined function is triggered, initiating the playback or other actions.

Evaluation

Evaluating the fine-tuned LLM for music intents requires a comprehensive approach that ensures not only its technical performance but also its usability and relevance to users. Here’s a structured plan:

- Quantitative Metrics:

- Accuracy: Calculate the percentage of user queries that the model classifies correctly into the intended API functions like

playMusic()orplayLatestMusic(). - Precision, Recall, and F1-score: Especially important if there’s a class imbalance in the API functions. For instance, if users more frequently request to play music than to play the latest music.

- Confusion Matrix: Understand which categories or intents are commonly misinterpreted.

- Accuracy: Calculate the percentage of user queries that the model classifies correctly into the intended API functions like

- Qualitative Analysis:

- User Testing: Engage a diverse group of users to interact with the model in a real-world setting. Gather feedback regarding its accuracy, relevance of music choices, and overall user satisfaction.

- Error Analysis: Manually review a subset of misclassifications to identify common themes or patterns. This might reveal, for instance, that the model struggles with recognizing certain genres or artists.

- Real-world Performance Metrics:

- Engagement Metrics: Monitor how often users engage with the music played. A decrease in skips or an increase in full song plays can be indicators of good recommendations.

- Retention Rate: Measure how often users return to use the recommendation feature. A higher return rate can indicate user satisfaction.

- Feedback Collection: Allow users to provide feedback directly (e.g., “this wasn’t what I was looking for”) and use this feedback to iteratively improve the model.

- NER Evaluation:

- Entity Recognition Accuracy: Since NER is used in preprocessing, measure how accurately the model identifies and categorizes entities like song titles, artist names, or genres.

- Coverage: Determine the range of entities the model can recognize. It should ideally recognize a wide array of songs, artists, and genres without significant gaps.

- Usability Testing:

- Intuitiveness: Gauge how easily users can formulate queries and if the system’s responses align with their expectations.

- Response Time: Since it’s a real-time recommendation system, the model’s response time should be quick to ensure a seamless user experience.

- A/B Testing (if possible):

- Comparison with Baseline: Compare the LLM’s performance against a baseline system (perhaps the current system in use or a simpler recommendation model). By randomly assigning users to interact with either system, you can measure differences in user engagement and satisfaction.

- In essence, using the LLM, you’re dynamically translating natural language instructions into structured function calls that the system can understand and act upon. This approach makes interactions intuitive for users while ensuring precise actions on the backend.

- It’s about gauging both the implicit and explicit needs and delivering a seamless music experience.

- Our team’s focus is around customer growth so we serve recommendations that will help grow our customer base

- This includes, Next Best Action via Multi-armed bandit, where we look to educate inactive users by giving them 3 personalized push notifications, prompting them to perform different actions on the app.

- The number 3 was decided after several experimentation where we didn’t want to bombard the user but still educate them

- We also have a partnership with Amazon.com retail where we find correlation between retail products and music latent factors and have it on the Amazon.com page item to item

- This includes, Next Best Action via Multi-armed bandit, where we look to educate inactive users by giving them 3 personalized push notifications, prompting them to perform different actions on the app.

NuAIg

- Spinoff from Oracle in the healthcare domain automating administrative and operational task

- Creating a Clinical Documentation Tool:

- Named Entity Recognition (NER): To identify specific entities in the text, such as patient names, medication names, diseases, procedures, dates, and other relevant medical terms.

- Information Extraction: Beyond just recognizing entities, this task involves extracting relationships and attributes associated with these entities. For instance, understanding that a specific drug was prescribed for a particular symptom or disease.

- Text Classification: To categorize different parts of a clinical note (e.g., diagnosis section, treatment section, patient history).

- Topic Modeling: To automatically identify the main topics covered in a clinical note, aiding in quick summarization.

- Designing an Information Retrieval System: –> FAISS

- Document Indexing: Efficiently indexing medical guidelines, patient data, and treatment options for rapid retrieval.

- Query Understanding: Interpreting what a user (possibly a healthcare professional) is looking for, even if their query is in natural, conversational language.

- Document Ranking: Sorting the retrieved documents by relevance based on the user’s query and possibly other factors like patient specifics.

- Semantic Search: Using embeddings and other advanced techniques to ensure the retrieval system understands the meaning and context, not just keyword matches.

- Automating Claims Processing:

- Named Entity Recognition (NER): As mentioned earlier, this would be used to identify specific entities like patient names, diseases, treatments, amounts, dates, etc.

- Text Classification: To categorize different sections of the claim form or to determine if a particular document is, in fact, a claim.

- Relationship Extraction: To understand the relationships between entities. For instance, connecting a diagnosis with a specific treatment or procedure.

- Automated Form Filling: Once relevant information is extracted, populating standardized forms or databases using the extracted data.

- Error Detection: Using NLP to spot inconsistencies or errors in claims, ensuring higher accuracy.

Oracle

- Modeling Server Capacity Data to Predict Outages:

- ML Techniques:

- Time Series Analysis & Forecasting: Methods like ARIMA, Prophet, or LSTM (Long Short-Term Memory networks) to predict server capacity based on historical data.

- Regression Models: For predicting capacity, techniques like Linear Regression or Support Vector Regression might be relevant.

- Random Forest & Gradient Boosting: Ensemble methods that can predict server outages based on a multitude of factors and historical data.

- ML Techniques:

- Predicting Server Health Using LogBERT to Understand Anomalies:

- NLP Techniques:

- Transfer Learning: Using a pre-trained model like BERT (in this case, a variant called LogBERT) and fine-tuning it to analyze server logs.

- Semantic Embeddings: Representing server logs as vectors in a high-dimensional space using embeddings derived from models like BERT.

- ML Techniques:

- Anomaly Detection: Techniques such as One-Class SVM, Isolation Forest, or Autoencoders can be employed to detect anomalies in the log embeddings.

- Clustering: Using unsupervised algorithms like K-Means or DBSCAN to cluster similar logs and identify potential anomalous patterns.

- NLP Techniques:

- Outlier Detection for Current Latency and Storage Models:

- ML Techniques:

- Statistical Methods: Techniques like the Z-Score, Box-Plot, or IQR (Interquartile Range) for basic outlier detection.

- Isolation Forest: A tree-based method designed specifically for anomaly and outlier detection.

- Density-Based Spatial Clustering (DBSCAN): Useful for detecting clusters in data and identifying points that do not belong to any cluster as potential outliers.

- Autoencoders: Neural network-based approach where the network is trained to reproduce the input data, but anomalies produce higher reconstruction errors.

- ML Techniques:

Research

- I am a research fellow at the University of South Carolina where I collaborate on a few publications I focus mostly on NLP with a little vision and multimodality

CT2: AI-Generated Text Detection and Introduction of AI Detectability Index (ADI)

Evaluation of Current Techniques for Detecting AI-Generated Text:

- Watermarking: A method that subtly tags AI-generated text, using unpredictable word alterations.

- Shortcoming: These watermarks can be modified or removed, especially if one is aware of the watermarking method.

- Perplexity & Burstiness Estimation: Techniques examining how well a model predicts text and the occurrence of word clusters, respectively.

- Shortcoming: As AI models like GPT-3 become sophisticated, the differences in perplexity and burstiness between AI and human text are less discernible, rendering these metrics less effective.

- Negative Log-likelihood Curvature: This observes how small alterations in input affect the model’s output.

- Shortcoming: It’s not always reliable, especially for sophisticated models like GPT-3, where AI-generated content becomes hard to distinguish from human text.

- Stylometric Analysis: A method analyzing linguistic style differences.

- Shortcoming: Modern AI models like GPT-3 have become adept at mimicking human styles, making it challenging to spot AI-generated content based on stylistic nuances.

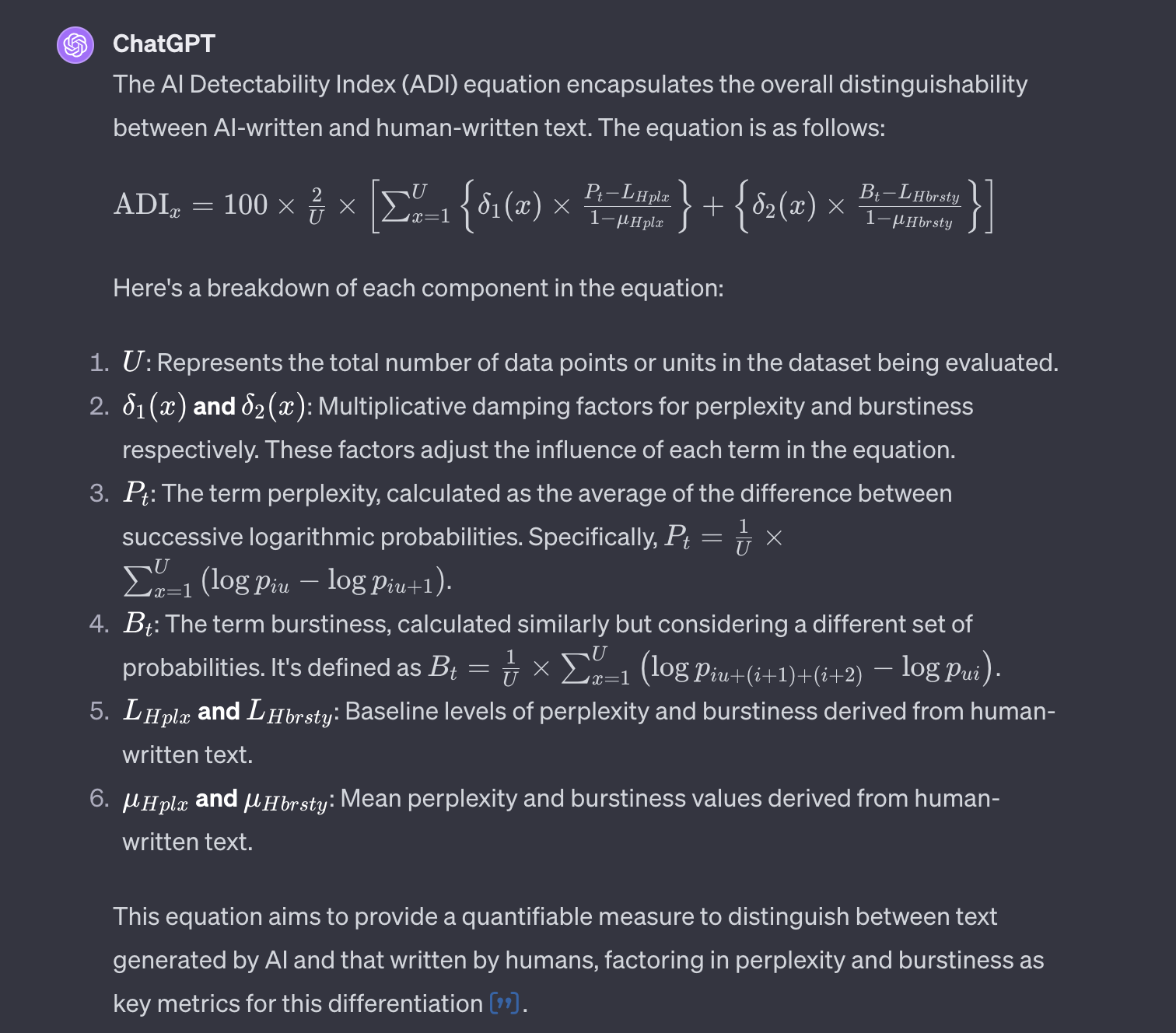

Introducing the AI Detectability Index (ADI):

-

What is ADI? It’s a metric combining perplexity (predictability of text) and burstiness (patterns in word choices).

-

Why Perplexity and Burstiness?

- Perplexity: Measures how predictable a text sequence is. Human writing is believed to have more variability in its predictability.

- Burstiness: Focuses on the repetitiveness of words or phrases. AI-generated text tends to have more repetitive word patterns.

- The ADI combines these elements, comparing the metrics against human-written standards, and ranks models based on the detectability of their generated content.

Research Findings:

- After extensive testing on 15 models, it was evident that conventional methods, including watermarking and stylometric analysis, have limitations, especially when faced with advanced models like GPT-3.

- While the ADI presents a novel way of leveraging perplexity and burstiness, it’s important to acknowledge that there are still challenges. As AI models evolve, there’s a need for continuous refinement in our detection methods.

Less refined CT2

- Overview:

- Research Focus: The paper emphasizes the importance of detecting AI-Generated Text and introduces the AI Detectability Index (ADI).

-

Achievement: This paper received the Best Paper Award for its innovative approach.

- Background on ADI:

- Definition: ADI is a composite metric that merges two linguistic measures: perplexity (syntactic) and burstiness (lexical).

- Empirical Basis: The composition of ADI is founded on empirical observations, and its formulation was influenced by the density function according to Le Cam’s Lemma.

-

Reflection & Future Work: The authors self-reflect, suggesting potential alternative features for ADI, and indicate opportunities for future research to expand and refine the ADI definition.

- Evaluation of Current AGTD Techniques:

- Overview: Various methods have recently been introduced to detect AI-generated text. However, the paper argues that most of these techniques are not robust against state-of-the-art models.

- Watermarking: Originally proposed to label AI text by switching high-entropy words, this technique is shown to be vulnerable to strategies like word replacements or paraphrasing.

- Perplexity & Burstiness Estimation: These techniques aim to identify statistical differences between AI and human-produced text. However, newer models, such as GPT-3, generate text so similar to humans that these methods become less effective.

- Negative Log-likelihood Curvature: This was introduced to identify AI text based on how perturbations influence probability. Yet, empirical evidence from the paper suggests it doesn’t offer a reliable indicator, especially for models like GPT-3.

- Stylometric Analysis: This method, aiming to discern linguistic style differences, is found to be constrained when applied to modern advanced models.

- The ADI is intended to provide a quantitative measure of how detectable an AI system’s generated text is, using current state-of-the-art detection methods. It aims to rank language models based on their detectability spectrum.

- The ADI equation incorporates two key stylometric features - perplexity and burstiness.

- Perplexity measures how predictable or expected a sequence of words is. The hypothesis is that human writing will have more variation in perplexity across sentences and passages compared to AI text. So the ADI computes the entropy or variability of perplexity scores across an excerpt of text to quantify this.

- Burstiness refers to patterns in word choice and vocabulary diversity. The hypothesis is that AI text will display more repetitive bursts of similar words or phrases compared to human writing. So again, the ADI looks at computing entropy of burstiness scores across sentences.

- The equation combines these two stylometric features, comparing the text excerpt’s perplexity and burstiness entropy to reference levels measured from human-written text. It uses weighting factors to rank the detectability.

-

Through comprehensive experiments on 15 different language models, we found that perplexity and burstiness are not reliable indicators to distinguish AI vs human text, especially for larger models. Other detection methods like watermarking, negative log curvature, and stylometrics also had limitations.

- So in summary, the ADI equation tries to quantify text detectability using perplexity and burstiness signals, but our experiments revealed fragility in current AI detection techniques, especially for more advanced language models. We hope ADI provides a rubric to guide further research and benchmarking as better methods emerge. Let me know if any part of the equation or results needs more explanation!

Certainly! Let’s delve deeper into each of these techniques:

1. Watermarking:

Concept: Watermarking involves subtly marking or tagging generated text in a manner that identifies it as AI-produced. This is akin to a digital “signature” embedded in the text. The original proposal for this technique was to label AI text by switching high-entropy (unpredictable) words.

Weakness: AI-generated text that’s been watermarked can often be “cleaned” by simply altering, paraphrasing, or replacing certain words. If a malicious actor is aware of the watermarking technique, they can take steps to remove or modify these watermarks, rendering the detection mechanism ineffective.

2. Perplexity & Burstiness Estimation:

Concept:

- Perplexity is a measure of how well a probability distribution predicts a sample. In the context of text, it gauges how well a language model anticipates the next word in a sequence. High perplexity indicates that the model finds the text unpredictable, while low perplexity means the opposite.

- Burstiness refers to the phenomenon where certain words or phrases appear in “bursts” or are clustered together more than would be expected by chance.

Weakness: As AI models like GPT-3 have improved, they generate text that’s statistically closer to human writing. This means the distinctions in perplexity and burstiness between AI and human text diminish, making these metrics less effective as discriminators.

3. Negative Log-likelihood Curvature:

Concept: This technique tries to identify AI-generated text based on how small perturbations or changes influence the likelihood or probability of generated sequences. Essentially, it looks at how sensitive the model’s output probability is to small changes in the input.

Weakness: The paper’s empirical evidence indicates that this method doesn’t consistently differentiate between AI and human text, especially when it comes to sophisticated models like GPT-3. As AI models become better, the curvature patterns become less distinguishable from those of human text.

4. Stylometric Analysis:

Concept: Stylometry is the study of linguistic style, and it’s used to attribute authorship to anonymous or disputed documents traditionally. Stylometric analysis seeks to discern differences in writing style between AI and human authors.

Weakness: As AI models have become more advanced, they’ve become better at mimicking human writing styles. This means that the subtle distinctions in style that stylometric methods rely on become less pronounced, making it harder to detect AI-generated content solely based on stylistic attributes.

In summary, while these techniques offer promising approaches to detect AI-generated text, they also come with limitations, especially when dealing with state-of-the-art models like GPT-3. This underscores the importance of continuous research in the field to keep pace with the rapid advancements in AI text generation capabilities.

1) While perplexity and burstiness may not work well in isolation, the authors believe combining them could provide a more robust signal. The ADI formula incorporates both features.

2) The ADI introduces additional factors like using human benchmark comparisons, weighting differences by model detectability, and averaging over many samples. So it enhances perplexity and burstiness in a more comprehensive metric.

3) The authors argue that other detection methods like stylometrics and classifications are ultimately dependent on core features like perplexity and burstiness. So distilling it down to these fundamental elements could offer a universal benchmark.

4) As models evolve, other detection methods may fail, but perplexity and burstiness can still indicate how close models get to mimicking human writing patterns.

- In essence, the authors are proposing a new way to leverage perplexity and burstiness as part of a more robust and adaptable detection metric in ADI. You raise a very fair point though that they are still utilizing features they demonstrated shortcomings of. More research is needed to validate the effectiveness of ADI as models continue to advance.

Hallucination

- This paper presents a detailed categorization and analysis of the hallucination phenomenon in large language models (LLMs). The key aspects are:

-

It defines two orientations of hallucination: Factual Mirage (FM) and Silver Lining (SL). FM is when the LLM distorts a factually correct prompt. SL is when the LLM generates an elaborate narrative for a incorrect prompt.

-

It further divides FM and SL into intrinsic and extrinsic sub-categories. Intrinsic is minor hallucination supplementary to the topic. Extrinsic is major hallucination deviating from the topic.

-

Six categories of hallucination are defined: Numeric Nuisance, Acronym Ambiguity, Generated Golem, Virtual Voice, Geographic Erratum, and Time Wrap. These cover common types of factual distortions in LLM outputs.

-

Three degrees of hallucination are proposed: mild, moderate and alarming - indicating the severity.

-

To quantify hallucination tendencies, the paper introduces the Hallucination Vulnerability Index (HVI):

- Where:

- U - Total sentences

- N(x) - Hallucinated sentences by LLM x

- P(EFM) - LLM’s tendency for EFM

- P(ESL) - LLM’s tendency for ESL

- δ1(x), δ2(x) - Damping factors

- The HVI provides a 0-100 comparative score of an LLM’s hallucination vulnerability. Higher HVI indicates greater hallucination tendency. The equation accounts for an LLM’s specific extrinsic hallucination biases and overall degree of hallucination.

-

The HILT dataset of 129K annotated sentences from 15 LLMs is introduced to support analysis.

-

Mitigation techniques like high entropy word replacement and textual entailment checking are proposed.

In summary, the paper provides a comprehensive framework to characterize and quantify hallucination tendencies in LLMs - defining categories, degrees and introducing the HVI metric. The insights can inform research into mitigation strategies.

Equation

- If asked to explain the Hallucination Vulnerability Index (HVI) equation in an interview, I would describe it this way:

- The HVI gives us a score from 0 to 100 that indicates how likely an AI model is to hallucinate or generate false information. A higher score means the model is more prone to hallucinating.

- The equation works by looking at two main factors - the model’s tendency for extrinsic factual mirage (EFM) and extrinsic silver lining (ESL) hallucinations. EFM is when the model distorts factual information and ESL is when it fabricates details for an incorrect prompt.

- First, we calculate the number of EFM and ESL hallucinations the model generates out of the total sentences. This gives us the error rates - P(EFM) and P(ESL).

- Next, we look at how many total hallucinated sentences there are, and compare that to the EFM and ESL rates. The bigger the difference, the more other types of hallucinations there are.

- We weight those differences by the error rates - so if EFM rate is high, more weight is given to the EFM-related difference.

- Finally, we factor in the damping parameters which help scale the scores across different models. This gives us the final HVI score.

- So in essence, the equation accounts for both the specific EFM and ESL tendencies, and the overall degree of hallucination of an AI model. The higher the score, the more likely the model is to hallucinate. It provides a standardized metric to compare different models’ hallucination vulnerabilities.

CONFLATOR: Code Mixing:

Background:

- Topic: Code-mixing in multilingual societies.

- Challenge: Traditional Transformers struggle with code-mixed text like “Hinglish” (Hindi-English mix).

- Key Difficulty: “Switching points” where languages change, making context hard to learn.

The CONFLATOR Model:

- Core Idea: Emphasize the switching points between languages for better understanding.

- Innovation: Uses Switching Point Rotatory Matrix (SPRM) to adjust positional encodings when a language switch is detected.

- Granularity: This technique is applied at two levels - for individual words (unigrams) and word pairs (bigrams).

- Architecture: Merges both unigram and bigram token representations.

Training & Data:

- Data Source: Trained on code-mixed Hindi-English tweets and news comments.

- Training Depth: Base models underwent 100k training steps, while larger models with SPRM underwent 250k steps.

Key Findings:

- Performance: CONFLATOR surpassed other models in understanding and analyzing mixed-language text.

- Understanding: The model grasped mixing patterns and recognized switching points better than other methods.

- Optimal Approach: For sentiment analysis, both unigram and bigram models worked well. However, for translation, the unigram SPRM model was the most effective.

In Summary: CONFLATOR, a novel neural model, introduces a unique approach to decode mixed-language content by focusing on the “switching points” between languages. By integrating this perspective into the positional encoding, it effectively captures the intricacies of mixed languages, making it a potential benchmark in the field of code-mixed language modeling.

Less clear CONFLATOR

- Certainly, I’ll explain the given passage in simpler terms:

- Switching-Point Based Rotary Positional Encoding:

- The authors introduce a new way to handle positional encoding in neural networks. Positional encoding is a technique used in Transformer architectures (a popular neural network model) to understand the position or order of words in a sentence.

- The new technique revolves around the idea of “switching points.” Whenever a switch from one language to another occurs in a code-mixed sentence, they change the rotation (or tweak the positional encoding). This helps the model learn when and how languages are mixed within a sentence.

- CONFLATOR:

- This is a new neural network model designed specifically for languages that are code-mixed, like Hinglish.

- The primary innovation in CONFLATOR is its use of the aforementioned switching-point based rotary positional encoding. Initially, the model looks at each word individually to determine if a switch has occurred. Then, it examines pairs of words (bigrams) to refine its understanding.

- Empirical Evidence:

- The authors claim to have evidence that CONFLATOR successfully learns the patterns of how languages are mixed together in Hinglish. They compare its performance to other models that use different methods to understand the order of words. Their findings suggest that CONFLATOR does a better job at this than other models, as depicted in “Figure 5” (which we don’t have access to in the given text). - In a nutshell, this paper is about introducing a new technique and model for understanding and processing sentences where two languages are mixed together, with a specific focus on the mix of Hindi and English known as “Hinglish.” - Textual Diffusion with Hallucination - Where we’re looking to incorporate factual ground truth during the denoising process to see if that can help mitigate hallucination.

This paper introduces a novel neural language modeling approach called CONFLATOR for code-mixed languages like Hinglish (Hindi-English). Here is a summary explaining the key aspects in an interview-style:

Background:

-

Code-mixing is prevalent in multilingual societies but modeling it is challenging due to data scarcity and unique properties like switching points between languages.

-

Transformers have set benchmarks in language modeling but still struggle on code-mixed text.

-

Switching points are a major bottleneck as the language changes, making it difficult to learn context.

Approach:

-

CONFLATOR hypothesizes that emphasizing switching points is key for code-mixed language modeling.

-

It incorporates positional information of switching points into Rotatory Positional Encodings (RoPE) which capture relative positions.

-

A Switching Point Rotatory Matrix (SPRM) is introduced that changes rotation when a switch point is encountered.

-

This is applied at both unigram and bigram level tokenizations.

-

The architecture merges unigram and bigram attention heads with learnable weights.

Loss Function:

-

Cross-entropy loss is used for the language modeling objective.

-

No specific custom loss function is introduced. The novelty is in the positional encodings.

Training:

-

CONFLATOR is trained from scratch on code-mixed Hindi-English tweets and news comments data.

-

Different configurations with unigram, bigram, SPRM are trained for comparison.

-

Around 100k steps of training is done for base models and 250k for bigger SPRM models.

Key Findings:

-

CONFLATOR outperforms baselines and prior state-of-the-art on intrinsic perplexity and extrinsic sentiment analysis and machine translation tasks.

-

Analysis shows it learns mixing patterns and differentiates switch points better than other positional encodings.

-

Bigram modeling helps for sentiment but not translation. Unigram SPRM achieves best results.

In summary, the key novelty is the switching point based rotatory positional encoding incorporated in a code-mixed neural language model, which shows promise in capturing nuances of mixed languages.

Let me know if you would like me to expand or clarify any part of the explanation! I’m happy to go into more details.

You raise some great points - let me explain the model architecture, inputs/outputs, and objectives in more detail:

Input:

-

The input to CONFLATOR is code-mixed text, such as Hinglish sentences mixing Hindi and English words.

-

For example: “mujhe yeh gaana bahut pasand hai” (I like this song very much).

-

The input text is tokenized into unigrams (individual words) and bigrams (pairs of words).

Architecture:

-

The tokenized input is passed through an embedding layer to get vector representations for each unigram/bigram.

-

These then go through the encoder layers equipped with the proposed Switching Point Rotatory Positional Encoding (SPRoPE).

-

SPRoPE modifies the standard Rotatory PE (RoPE) to change rotation when a language switch point is encountered, helping differentiate switch positions.

-

The unigram and bigram encoder outputs are merged with learnable weights and passed to the decoder.

Objectives:

-

CONFLATOR was trained for language modeling - to predict the next word/token given the previous context. This helps build robust representations.

-

It was also evaluated on downstream tasks:

- Sentiment analysis of code-mixed text

- Machine translation from Hinglish to English

Key Outcomes:

-

CONFLATOR outperformed baselines on perplexity (language modeling metric) showing its representations capture code-mixing better.

-

It achieved new state-of-the-art results on the extrinsic tasks, demonstrating the usefulness of emphasizing switch points.

-

The unigram model performed best overall, while bigram helped for sentiment analysis.

In summary, CONFLATOR takes code-mixed text as input, models it using SPRoPE for switch points, and is trained for language modeling and downstream tasks, outperforming previous benchmarks. The key contributions are around representing and incorporating switch points. Let me know if you need any part of the architecture or results clarified!

Deception Detection

- This paper focuses on detecting lies of omission specifically in textual data from news articles and headlines. The background is that prior work in deception detection has mostly looked at outright lies (lies of commission), while lies of omission have received comparatively little focus.

- Lies of omission are when someone deliberately leaves out key information, twisting the narrative. For example, a headline could read “New study shows vaccine is dangerous” but omit mentioning that the study was retracted.

- To explore lies of omission, the authors created a multi-layered dataset called SEPSIS using real news headlines from major outlets as well as existing fake news data. The lies in this dataset are omissions manually introduced based on real-world observations.

- So in summary, the SEPSIS dataset contains textual news statements and headlines that have different types of deliberate omissions introduced. The paper focuses on detecting these synthetically generated lies of omission, with the goal of gaining insights into how such deception manifests in textual language.

- The models are not detecting omissions in general text generated by LLMs. Rather, the dataset curation, augmentation and models focus specifically on understanding and detecting lies of omission in the textual news domain. This is an interesting area since such “twisted truth” via omission is hard to pinpoint yet can significantly alter narrative. I hope this helps explain the background and objective behind the paper! Let me know if you need any clarification or have additional questions.

- This paper presents an interesting study on lies of omission and introduces a novel framework called SEPSIS for detecting deception. Here are the key points about the paper:

Architecture:

-

SEPSIS uses a multi-task learning (MTL) architecture with 4 tasks corresponding to the 4 layers of deception: type of omission, color of lie, intent of lie, and topic of lie.

-

It first obtains word embeddings from a merged language model created by finetuning and dataless merging of multiple T5 models.

-

These embeddings are fed to a transformer encoder to create a latent representation.

-

This representation is passed through 4 multilabel classification heads, one for each task.

Training:

-

Dataset: The SEPSIS dataset contains over 876k data points with multilabel annotations across the 4 deception layers.

-

Augmentation: Paraphrasing and 5W masking were used for data augmentation.

-

Loss functions: A tailored loss function was used for each task head - distribution balanced loss for type, cross entropy for color, focal loss for intent, and dice loss for topic.

-

Model merging helped leverage shared information across tasks and improved performance.

Key Findings:

-

The MTL model achieved an F1 score of 0.87 on SEPSIS, demonstrating effectiveness across all deception layers and categories.

-

Analysis revealed correlations between propaganda techniques and lies of omission, e.g. loaded language correlates with speculation and black lies.

- Public release of the dataset and code will facilitate more research on deception detection.

- In summary, the paper presents a novel MTL framework for deception detection using a multilabel dataset, tailored loss functions, data augmentation and model merging techniques. The analysis provides new insights into lies of omission and their connection to propaganda.

Projects mentioned LoRA

Of course! I’ll explain the procedures and intentions behind each of these tasks:

-

Few-Shot Learning with Pre-trained Language Models:

-

Performed few-shot learning with pre-trained LLM: This means that a small amount of data was used to adapt (“fine-tune”) pre-existing language models (likely designed for broad tasks) to perform a more specific task. The fact that the models are pre-trained indicates that they already have a good grasp of the language due to previous training on large datasets.

-

such as GPT and BERT from HuggingFace’s libraries: The pre-trained models used were GPT and BERT, which are prominent models for understanding language context. These models were sourced from HuggingFace, a leading provider of state-of-the-art language models.

-

Experimented with more sophisticated fine-tuning methods such as LoRA: After starting with basic fine-tuning, more advanced methods were employed. LoRA (Localized Re-adaptation) is one such method that provides a sophisticated way to adapt a pre-trained model to a new task using a limited amount of data.

-

Used PyTorch framework: All the experiments and model training were done using PyTorch, which is a popular deep learning framework. This gives information about the tools and libraries that might have been employed during the procedure.

-

-

Multitask Training for Recommender Systems:

-

Implemented a multi-task movie recommender system: A recommender system was developed that can handle multiple tasks simultaneously. In this context, it might mean recommending various types of content or handling different aspects of recommendations concurrently.

-

based on the classic Matrix Factorization and Neural Collaborative Filtering algorithms: The foundational techniques used for this recommender system are:

- Matrix Factorization: It’s a technique where user-item interactions are represented as a matrix, and then this matrix is decomposed into multiple matrices representing latent factors. This is a traditional technique often used in recommendation systems.

- Neural Collaborative Filtering: This is a more modern technique that uses neural networks to predict user-item interactions, thus providing recommendations.

-

In summary, the first task involved adapting large, general-purpose language models for specific tasks using a small amount of data, while the second task was about building a multi-task recommendation system using traditional and neural techniques.

Technical Breadth

- I love collaboration and thinking outside the box. Amazon devices, the primary goal was for users to shop.

- So what I’ve been trying to do is, find correlation between retail items and songs, both for the website and Alexa as well.

- Item to item recommendations are bread and butter of Amazon

People management

- I like to lead with empathy

- Mentorship: make sure everyone has a mentor, helping them find one if not

- people growth

- upward influencing: offerring solutions, understanding the perspectives and goals

Agility

Motivation

- The research coming out of Meta is an inspiration in itself, Meta is a trailblaizer in so many domains:

- Text to speech: Voicebox where its able to do speech generation tasks it was not necessarily trained on

- Pure NLP: No language left behind project with translations between 200 languages and including the work with low-resource languages is something I really connect with.

- Recommender system: embedding based retrieval and so many more

- And I imagine Smart glasses org to be a culmination of all of this research and so, to be given the opportunity to work there would be a true joy.

Questions for the manager

- Team structure, I assume since it’s lenses, theres collaboration with a vision team. Are there other modalities at play?

- Hallucination is the biggest problem with LLMs

- Smart Glasses (SG) Language AI

-

We focus on Conversational AI, SG Input AI, Knowledge-enriched Discovery AI, Privacy ML and AI Efficiency. Our system powers critical SG launches thrusted by the Meta leadership. We have strong scientists and engineers, solving challenging AI problems with both Cloud based large models and On-Device ML models. Join us if you are passionate about AI-centric next-gen computing platforms and pushing advanced AI at production scale!

- Our team: Smart input team’s mission is to enhance input and messaging experience on these smart glasses. Imagine being able to receive your whatsapp messages, and being able to get more context (summary) and respond in a natural way just like how you would have a conversation with a human, all while wearing your glasses and not taking your attention off the things you are doing (like biking, cooking, walking with your grocery bags).

- Our tech: We want to bring ChatGPT capabilities on-device. We build the capabilities similar to what ChatGPT can do for messaging but with a model that is much smaller to be able to fit on the glasses. This is a niche tech space with big opportunities to innovate on LLMs, on-device ML, privacy ML such as Federated learning, on-device personalization. This team aims to ship these cool technologies to drive end user value.

-

While the rest of the world is going after making LLMs work on the servers, we are taking a bigger challenge to make LLMs work on-device.

- In a more constrained use case, such as using natural language processing (NLP) to interpret voice commands for Amazon Music, the problem of hallucination might be less prominent. In this case, the system is less likely to “make things up” because it’s not primarily generating content but rather interpreting and executing commands based on user input. If the NLP system doesn’t understand a command, it’s more likely to ask for clarification or fall back to a default action rather than inventing an inappropriate response.

- However, hallucination could still be an issue if the system uses language models to generate responses or explanations. For example, if you ask Alexa for information about a particular artist or song, and it uses a language model to generate the response, it could potentially hallucinate details that aren’t true.

- In any case, whether hallucination is a problem depends on the specific application and how the system is designed and used. It’s an active area of research in AI to find ways to mitigate this issue, especially as language models are being used in more diverse and impactful applications. Techniques like fine-tuning the model on specific tasks or data, utilizing structured data sources to ground the responses, or using model validation to check the outputs could help to limit hallucination.

HOld

RAG

- Implementing RAG in an AI-driven application entails the subsequent procedures:

- The user submits a query.

- The application scans for pertinent documents that could potentially address the query from a document index, which usually comprises proprietary information.

- The application crafts an LLM prompt by merging the user’s query, the identified documents, and directives for the LLM to respond using the given documents.

- This constructed prompt is then dispatched to the LLM.

- Based on the provided context, the LLM produces a response to the user’s query, which serves as the system’s final output.

Onsite

- Round 1 in-domain design: Actually just talk about the research you have done

- Round 2 AI coding: Leetcode 1570. Find a local minimum and return index

- Round 3 AI research design: Apply the model you made in previous research to another scene

- Round 4 AI research design: explain your recent paper or project

- Round 5 BQ:

- Describe a project you are most proud of.

- Describe a time when you have to make a decision with insufficient information or conflict with others.

-

Describe a time when you insisted on doing something and it turned out to be wrong.

- a total of four rounds, 2coding+ML design+BQ

- first round ml design, Korean girl, design facebook event predictor. I asked how to collect data, how to do feature engineering, what model to use, how to evaluate,

- how to improve, and some basic ML questions.

-

In the second round of coding, I met an unreliable interviewer. The interviewer was almost 20 minutes late.

- I just interviewed for the Meta Reality Labs 2023 summer research intern last week, and I’m still waiting for the results. I’d like to share my feelings and experiences here. I have interviewed the original poster a few years ago, so the following is a summary of several interviews.

- Summer internship for Meta Reality Labs research position with CV emphasis. The Meta Careers website will list the positions of different teams in great detail. Just choose the one that matches your background and apply. You can apply for multiple positions at the same time. Research positions are sometimes divided into research scientists and research engineers, but personally I feel there is not much difference. Most research internships only recruit PhDs, but there are also MS/PhDs, which will be written in the title.

- After submitting the application, if there is a match, HR will contact you via email to ask some basic questions and then schedule an interview. Generally, there are two interviews, one is the research interview and the other is technical (coding), both of which are 45 minutes. If you are invited to interview for multiple positions you applied for, you will only have to interview once for coding, but you will probably have separate interviews with different teams for research. Results are generally given 1-2 weeks after the interview.

- Research interview: Interview with an on-the-job research scientist. The interviewer may be the person who will guide you in the future. The interviewer will first introduce what his team does roughly, and then ask you to introduce your research. Then he will ask about some knowledge related to this position or the problems he wants to solve for this project, and ask you what you think. Five minutes will be left at the end for you to ask him questions. .

- Technical (coding): On-site programming on the website they provide, usually two questions. The website can only be coded and cannot be run, but the interviewer may ask you to simulate the operation of a set of data on the whiteboard of the website. Then ask about the time and space complexity of the algorithm you wrote and whether it can be optimized, and observe whether you can handle the corner case properly. Unlike SDE, the focus of research intern is to solve research problems, so the coding ability may not be too high. I have seen two types of questions, one is Leetcode style, and the other is AI-related coding. We PhD students may rarely do LeetCode, but we still need to prepare a little before the interview. I got a Breadth-First Search question in the first interview. Although it is not very difficult, because I learned it during my undergraduate studies, it was so long ago that I would not be able to write it if I was not prepared. The other is AI-related coding, such as writing some data processing, augmentation, calculating some metrics, etc.

- Personally, I felt that my interviews were pretty good (everyone I interviewed in the past few years ended up with an offer), and the atmosphere was very good. It didn’t feel like they were interviewing me, but more like chatting about research. So let me briefly talk about my feelings and what I think I did better.

- The interviewer’s first name and last initial will be informed in the email. Combined with the team information, you can usually Google this person, so you can prepare in advance to see what he does, and then you can make targeted adjustments when introducing yourself for research. For example, I know that he should be very familiar with a certain background, so there is no need to introduce it in detail, or I can predict which work he has done before that he will be interested in. At the same time, you can roughly estimate the interviewer’s level (his title is included in the email) to have a rough prediction of the interview style: a high-level interviewer will care about higher-level questions and the practical application prospects of your research, while The interviewer with a lower level is likely to be the person who will directly interview you in the future, so he will ask more specific and project-related questions. I have encountered both types of interviewers above, and their styles are quite different. . Waral di, 2. At the beginning, you will be asked to introduce your research, mainly to know what you generally do, without going into too much detail. It is recommended to prepare a 5-min slides to roughly introduce your research direction and give a broad framework context. It is best to string together your previous work into a complete story, but do not introduce specific work. After the introduction, you can ask him to give more details if he is interested in a specific job. advertise

- Be familiar with where the things you have done are (on the computer or online), especially the various pictures. If you are asked a relevant question and need to be able to quickly pull up the picture to share the screen, pointing to the picture to answer the question is much more effective than just talking. And many questions are asked around resumes, so you

- There will be a few minutes left for you to ask questions at the end of the interview, so prepare some questions to avoid not thinking of good questions on the spot. Generally, you ask about project-related questions, such as expected outcome (publish a paper? Implement a certain system? There is a big gap between groups in this area), for example, ask what background the interviewer thinks you still lack (if you actually don’t lack it) You can add that background to him at this time; otherwise, thank him and say that he can add it as soon as possible), such as location (if the team has many offices), or think of other valuable issues. Don’t ask about interns’ benefits and benefits. You should ask HR about such questions. (Personally, I feel that Meta’s intern benefits are very good, so don’t worry too much)

- When sharing experiences in the forum, I also want to find friends who share the same direction and share the same goals with me!

- The full name of the position is Research Engineer Intern, CV, AI and Machine Perception (PhD/Master) - Redmond, WA. It actually does SLAM related things. Under FRL, I was very excited when I saw the recruitment of master. FRL should be There are many dream places in this field, and many great gods are in them. Being rejected is indeed because my skills are inferior to others, and my interview performance is very poor…

- 11.1 After knowing this position, I asked my friends to recommend again (I was rejected once when I applied for a general position before with my resume)

- 11.29 VO two rounds, the first round Project interview (similar to team match), the second round is coding, this position is like this, the

- first round of direct VO : I was asked about the details of lidar calibration at my previous company, and without saying much, I started to directly ask about domain knowledge. 1.

- Vector operations, Eigen, are very basic, just dot product and cross product

- Homography, I haven’t done vSLAM for a long time. I asked if I could talk about epipolar constraints. He asked me to calculate the formula on the spot. The formula was also listed in the drawing. After it came out, I finally forgot how to draw it in the form of essential matrix.

- Asked me if I knew about Linear Programming. At that time, the algorithm class had not yet reached this part, and I didn’t realize that LP is linear programming. Let’s see if I don’t change it again. Cheng asked me about the solution of linear equations, epic ancient knowledge, but he bit the bullet and said nothing.

- Image rotation, I mentioned bilinear interpolation, but I answered this question well.

- Second round :1 . The first question is to find the median of a given array. There should be this question in the tag. I forgot the question number. I directly used min_heap to do 2. followup gives you a two-dimensional matrix, and then gives you the kernel size k, and then asks you do something like

-

technology stack, some internally focus on lidar, some focus on vSLAM, and some focus on 3D reconstruction. The details of each company are very different, and it is quite difficult to prepare. Review some very basic and mathematical things again. This Meta interview was the most difficult one I encountered. It involved pushing formulas on the spot and asking questions about ancient linear algebra. My past projects basically didn’t ask any questions. The coding interview was a confusing followup. I was not as good as others, but I accepted it even if I failed. I only have an intern offer in Tucson, so it seems like the only option is to go to Tucson. I hope I can go to a better place full-time next year, so that I can live up to my trip to the United States.

- research round:

- each talks about his own project, I talk about my own research direction, then he introduces the idea that the team wants to do, and then I make some assumptions based on this idea. .

- When talking about the project, he will talk about the end-to-end process. From the beginning of the project, sometimes he wants to hear the technical details, and sometimes he wants to hear what [I] did in the project. Even if they are similar, everyone is analyzing the needs. Do literature reviews and other routines. . .

- (If you think about it carefully, this might be closest to bq?

- (Or is it like taking a speaking test?

- Coding wheel:

- sum of the cropped_image

- image = [[0 1 0 1 0]

- leftTop = [1 1]

- rightBottom = [2 3]

- At this moment, cropped_image = [[0 0 0],

dig Meta

-

- Storefront: Two questions, original title of Li Kou Er Umbrella Liu; variant of Li Kou Er San (no constraint on subarray with size at least two), Chinese guy, amiable; 2. Onsite: Round1(coding)

- three Questions, the original question of Likou San Umbrella Wine; the original question of Likou Yao Umbrella; the original question of Likou Er Erqi (it is a bonus, there is no space o(1) requirement, but I still gave one, and the interviewer felt that it was quite good Surprise), white guy, approachable;

- Round 2 (coding): two questions, Likou Liuwu variant (no constraints are given at all, only a few examples are given, so you need to keep clarifying with the other person, it seems that this is what he wants to see in the question) part of , no sign char); Likou Liuqi Ling (gave a solution of o(n^2), and then the other party chased for a better solution, but did not give it, so the other party gave a solution of o(nlogn), which seemed to be OK There are o(n)), black brother, gentle and elegant;

- Round3(ML): MLsystem design, the case is about the Facebook Event recommendation system, the target is to determine whether the user will participate in these events, the difficulty is to determine whether they are physically present, there is nothing special in other places), the Indian guy, the attitude is okay; Round4 (BQ)

- : constructive feedback, collaboration with different

- , but I don’t feel that the overall interview process reflects personal professional abilities.

- Timeline:

- I was hooked up by HR at the August meeting, but my student was postponed to November because I didn’t pass the exam at all; the

- store in mid-November will be updated one day later;

-

the onsite one week before Christmas will be completed in three days. Then because of the holiday season, I waited until the evening of January 4 to get an appointment, and the next day I called for an oral offer.

- The first round of chatting with the person in charge of the group was mainly to talk about the research I did and the papers I published, as well as the problems I would like to solve if I get an internship. In fact, the research direction is not particularly suitable, but the chat was quite pleasant. I heard there might be a return in the group.

- The second round of AI coding feels completely different from the common questions in the field…

- The first question is to give a matrix and determine whether the values of each diagonal (upper left to lower right) are the same. For example, [[0,1,2],[3,0,1],[4,3,0]] is satisfied. After I finish writing a method, I analyze it

- Turn it upside down (the title is byte swap). I wrote a simple algorithm, but I don’t know if I ran out of time and didn’t let me optimize it further.

- Generally speaking, coding is a bit difficult, but it’s really great without asking BQ.

AI coding and onsite:

- Write K means, write knn, implement a conv 2d, write code to calculate precision recall

- I was tested to achieve the target detection iou, but there are similar questions in lc, and I was also tested.

- AUC , RUc curve. Cross entropy loss. Tensor manipulation. My guess

- Round 1 in-domain design: Actually just talk about the research you have done

- Round 2 AI coding: Leetcode 1570. Find a local minimum and return index

- Round 3 AI research design: Apply the model you made in previous research to another scene

- Round 4 AI research design: explain your recent paper or project

- Round 5 BQ:

- Describe a project you are most proud of.

- Describe a time when you have to make a decision with insufficient information or conflict with others.

- Describe a time when you insisted on doing something and it turned out to be wrong.

- Negative feedbacks from your managers or peers.

- First give a presentation to introduce your research, so that people in the group understand what direction you are working on, a 45-minute presentation and a 15-minute QA.

- Then there are two rounds of research interviews. Basically, people from FAIR come to interview. They mainly focus on their own research. They will ask some questions about the current SOTA insights and the development direction of the field. Generally, the research aspect is the easiest and most interesting part. Each round is 45 minutes.

- followed bysystem designThere is basically no need to prepare for the interview. The questions are generally about all aspects involved in putting some tasks on the meta platform for users to use, such as Youtube video recommendation, and AR glass for smart purchases in stores, etc. Although the topic It will change, but after all, they are all CV-related tasks. As long as you are familiar with the conventional methods of these tasks, there is basically no big problem.

- Next is the coding interview. Fortunately, the coding side of researchers seems to have been reformed. In principle, it does not answer Leetcode questions, but the research coding side. What I encountered was to write an

- online code to calculate the mean and variance, and a calculation about average precision.

- AUC questions. The complexity of calculating AP’s requirements is O(n), so I didn’t finish it and finally just talked about the idea. But different aspects

- Almost medium is the classic sparse vector dot product question that Meta often takes.

- Provide a dp. The two rounds I went through were the same. I asked about research at the beginning, which was very detailed, and various follow ups. In the last 10 minutes, coding was not a sharp question, but it was about implementing a variant of a certain operation in the AI algorithm. Pure implementation, not API adjustment

- LZ started overseas investment around January. I received the interview invitation at the end of February and the interview at the beginning of March. There are two rounds of interviews, one is Team Match and the other is AI conding.

- The first round of chatting with the person in charge of the group was mainly to talk about the research I did and the papers I published, as well as the problems I would like to solve if I get an internship. In fact, the research direction is not particularly suitable, but the chat was quite pleasant. I heard there might be a return in the group.

- The second round of AI coding feels completely different from the common questions in the field… .google и

- The first question is to give a matrix and determine whether the values of each diagonal (upper left to lower right) are the same. For example, [[0,1,2],[3,0,1],[4,3,0]] is satisfied. After I wrote a method and analyzed it

- Turn it upside down (the title is byte swap). I wrote a simple algorithm, but I don’t know if I ran out of time and didn’t let me optimize it . 1point3acres Generally speaking, coding is a bit difficult, but it’s really great without asking BQ.

- I just interviewed for the Meta Reality Labs 2023 summer research intern last week, and I’m still waiting for the results. I’d like to share my feelings and experiences here. I have interviewed the original poster a few years ago, so the following is a summary of several interviews.

- [Interview Position] Summer internship for Meta Reality Labs research position with CV focus. The Meta Careers website will list the positions of different teams in great detail. Just choose the one that matches your background and apply. You can apply for multiple positions at the same time. Research positions are sometimes divided into research scientists and research engineers, but personally I feel there is not much difference. Most research internships only recruit PhDs, but there are also MS/PhDs, which will be written in the title. . Waral dи,

- [Interview Process] After submitting the application, if there is a match, HR will contact you via email to ask some basic questions and then schedule an interview. Generally, there are two interviews, one is the research interview and the other is technical (coding), both of which are 45 minutes. If you are invited to interview for multiple positions you applied for, you will only have to interview once for coding, but you will probably have separate interviews with different teams for research. Results are generally given 1-2 weeks after the interview.

- Research interview: Interview with an on-the-job research scientist. The interviewer may be the person who will guide you in the future. The interviewer will first introduce what his team does roughly, and then ask you to introduce your research. Then he will ask about some knowledge related to this position or the problems he wants to solve for this project, and ask you what you think. Five minutes will be left at the end for you to ask him questions.

- Technical (coding): Live programming on the website they provide, usually two questions. The website can only be coded and cannot be run, but the interviewer may ask you to simulate the operation of a set of data on the whiteboard of the website. Then ask about the time and space complexity of the algorithm you wrote and whether it can be optimized, and observe whether you can handle the corner case properly. Unlike SDE, the focus of research intern is to solve research problems, so the coding ability may not be too high. I have seen two types of questions, one is Leetcode style, and the other is AI-related coding. We PhD students may rarely do LeetCode, but we still need to prepare a little before the interview. I got a Breadth-First Search question in the first interview. Although it is not very difficult, because I learned it during my undergraduate studies, it was so long ago that I would not be able to write it if I was not prepared. The other is AI-related coding, such as writing some data processing, augmentation, calculating some metrics, etc.

- [Interview experience] Personally, I felt that my interviews were pretty good (everyone I interviewed in the past few years ended up with an offer), and the atmosphere was very good. It didn’t feel like they were interviewing me, but more like chatting about research. So let me briefly talk about my feelings and what I think I did better. . 1point3acres