Primers • Diffusion Models

- Background

- Overview

- Introduction

- Advantages

- Definitions

- Diffusion Models: The Theory

- Diffusion models: A Deep Dive

- Types of Diffusion Models

- Denoising Diffusion Probabilistic Models (DDPMs) / Discrete-Time Diffusion Models

- Denoising Diffusion Implicit Models (DDIMs)

- Score-Based Generative Models (SGMs) / Continuous-Time Diffusion Models

- Variational Diffusion Models (VDMs)

- Stochastic Differential Equation (SDE)-Based Models

- Comparative Analysis

- Training

- Model Choices

- Network Architecture: U-Net and Diffusion Transformer (DiT)

- Conditional Diffusion Models

- Classifier-Free Guidance

- Prompting Guidance

- Diffusion Models in PyTorch

- HuggingFace Diffusers

- Implementations

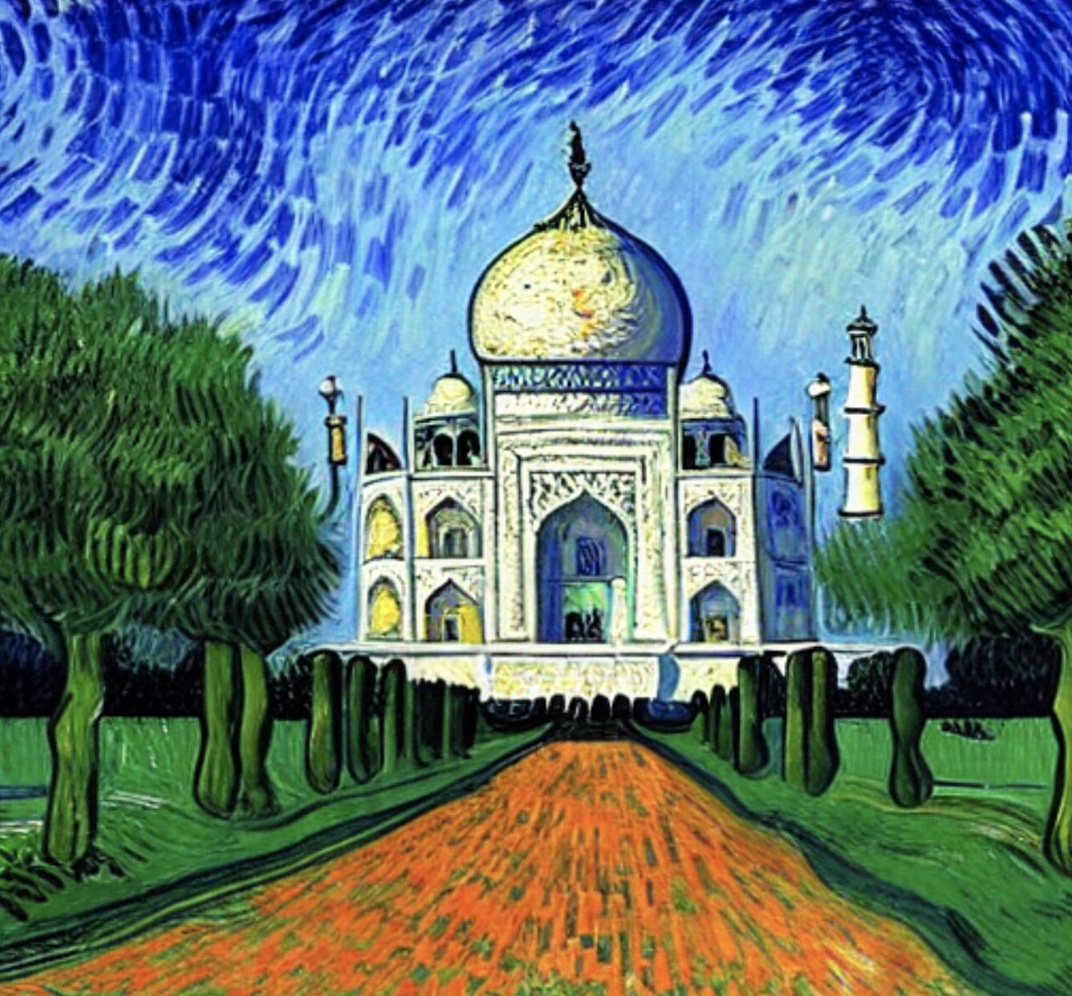

- Gallery

- FAQ

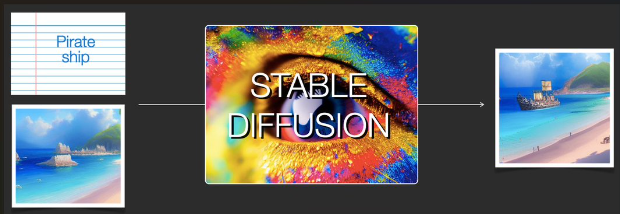

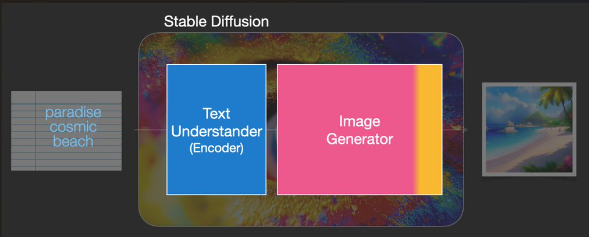

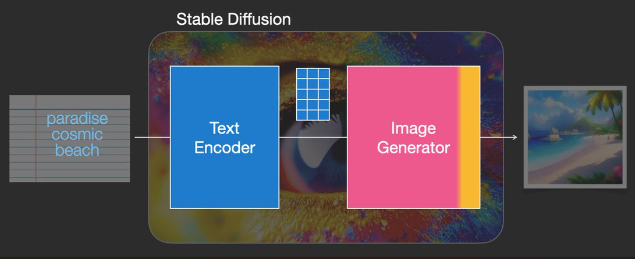

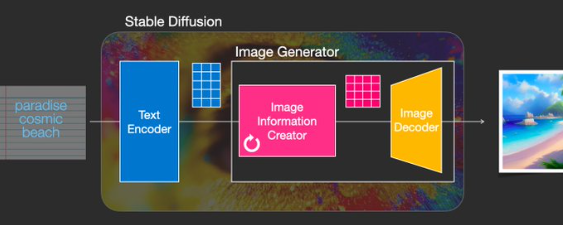

- At a high level, how do diffusion models work? What are some other models that are useful for image generation, and how do they compare to diffusion models?

- What is the difference between DDPM and DDIMs models?

- In diffusion models, there is a forward diffusion process and a reverse diffusion/denoising process. When do you use which during training and inference?

- What are the loss functions used in Diffusion Models?

- Integration with MSE

- What is the Denoising Score Matching Loss in Diffusion models? Provide equation and intuition.

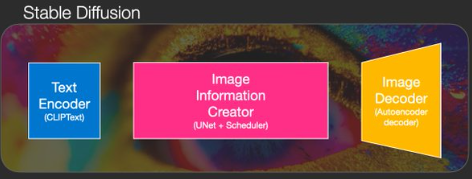

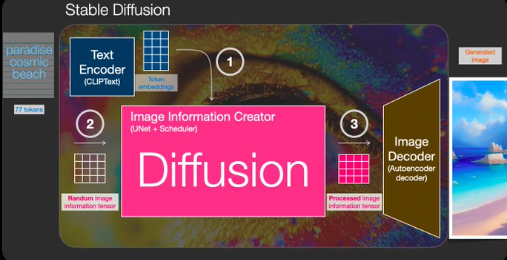

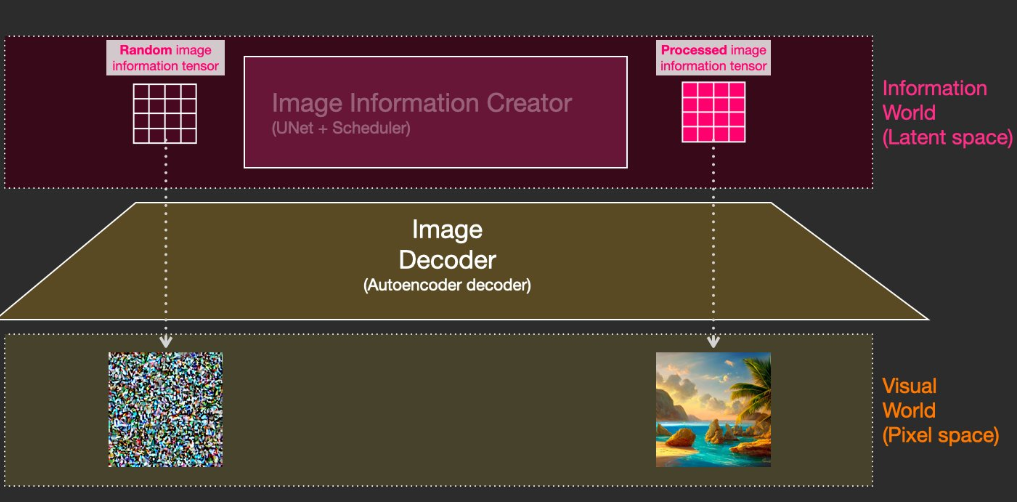

- What does the “stable” in stable diffusion refer to?

- How do you condition a diffusion model to the textual input prompt?

- In the context of diffusion models, what role does cross attention play? How are the \(Q\), \(K\), and \(V\) abstractions modeled for diffusion models?

- How is randomness in the outputs induced in a diffusion model?

- How does the noise schedule work in diffusion models? What are some standard noise schedules?

- Choosing a Noise Schedule

- Recent Papers

- High-Resolution Image Synthesis with Latent Diffusion Models

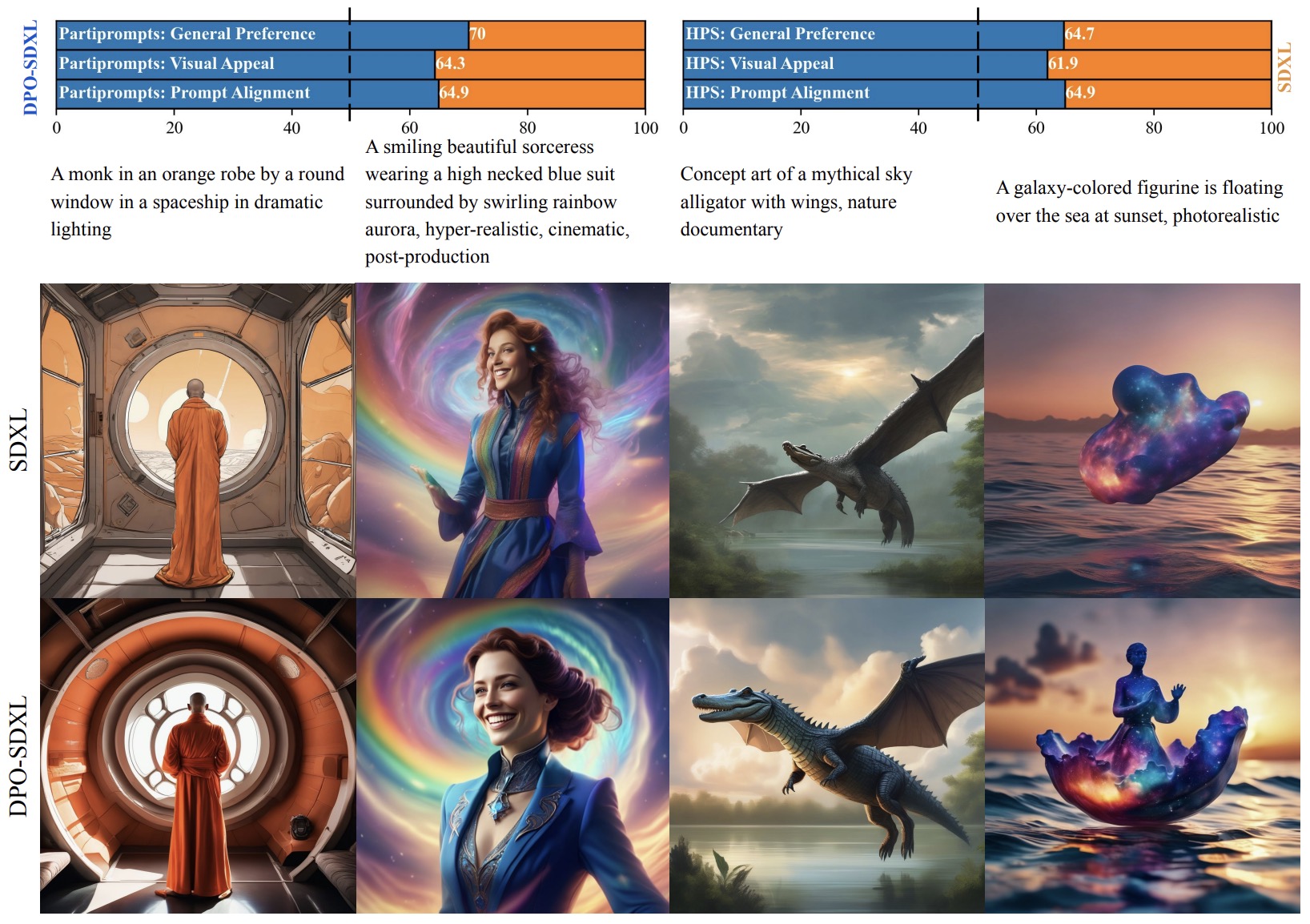

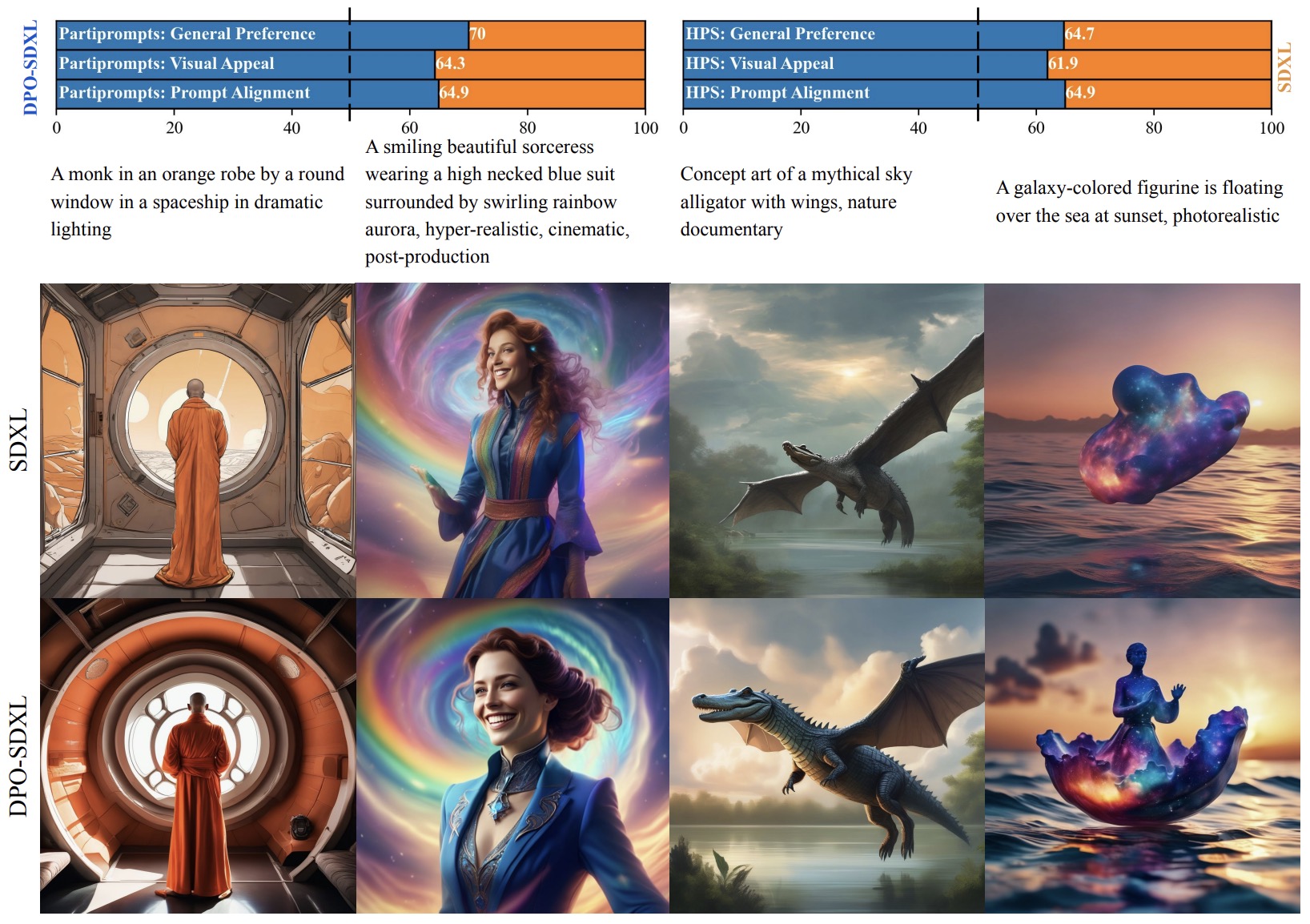

- Diffusion Model Alignment Using Direct Preference Optimization

- Scalable Diffusion Models with Transformers

- DeepFloyd IF

- PIXART-\(\alpha\): Fast Training of Diffusion Transformer for Photorealistic Text-to-Image Synthesis

- RAPHAEL: Text-to-Image Generation via Large Mixture of Diffusion Paths

- ERNIE-ViLG 2.0: Improving Text-to-Image Diffusion Model with Knowledge-Enhanced Mixture-of-Denoising-Experts

- Imagen Video: High Definition Video Generation with Diffusion Models

- Patch n’ Pack: NaViT, a Vision Transformer for any Aspect Ratio and Resolution

- SDXL: Improving Latent Diffusion Models for High-Resolution Image Synthesis

- Dreamix: Video Diffusion Models are General Video Editors

- Stable Video Diffusion: Scaling Latent Video Diffusion Models to Large Datasets

- Fine-tuning Diffusion Models

- Diffusion Model Alignment

- Further Reading

- The Illustrated Stable Diffusion

- Understanding Diffusion Models: A Unified Perspective

- The Annotated Diffusion Model

- Lilian Weng: What are Diffusion Models?

- Stable Diffusion - What, Why, How?

- How does Stable Diffusion work? – Latent Diffusion Models Explained

- Diffusion Explainer

- Jupyter notebook on the theoretical and implementation aspects of Score-based Generative Models (SGMs)

- References

- Citation

Background

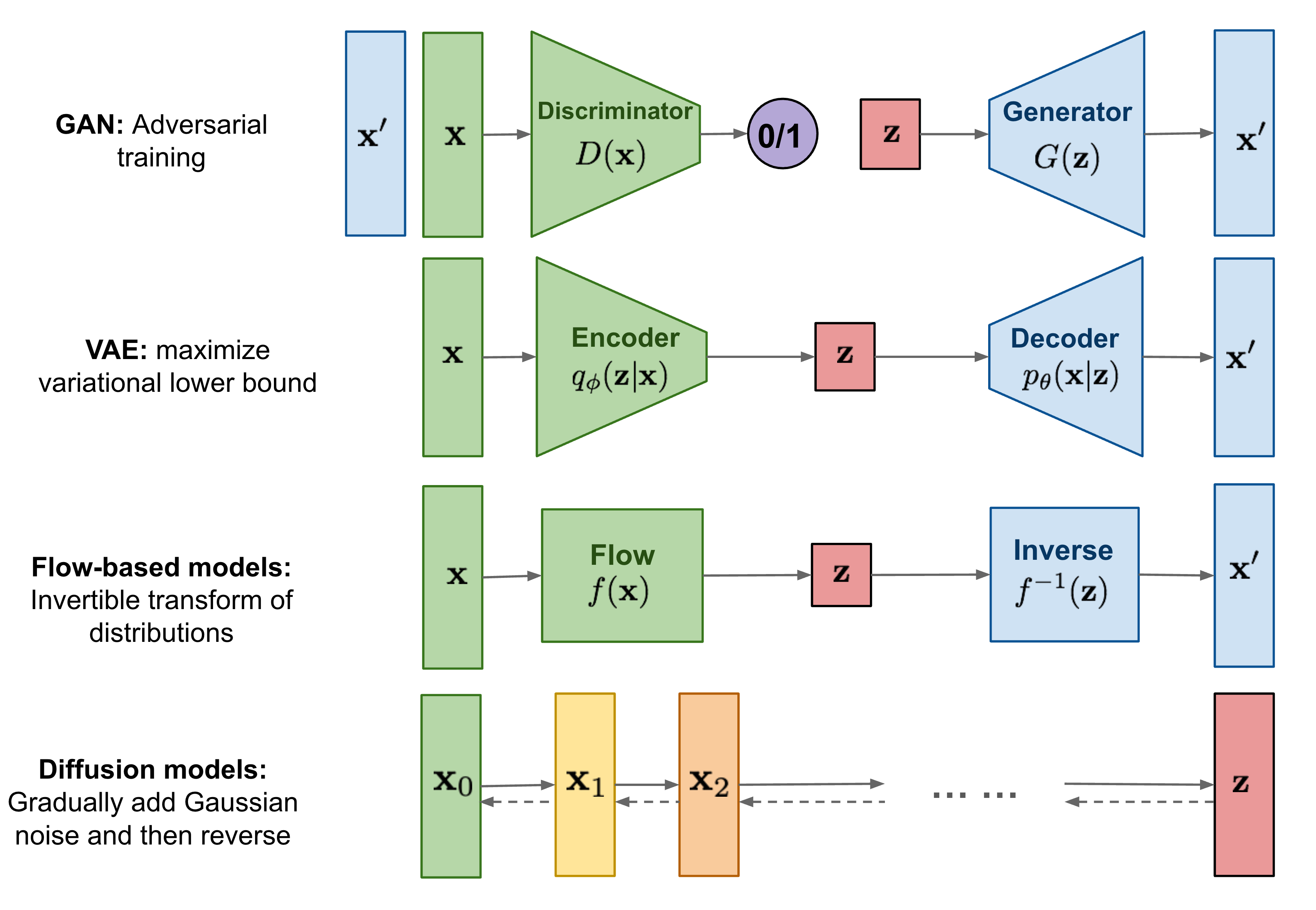

- Generative models have achieved remarkable success in creating high-quality data samples, with three prominent types being Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Flow-based models. Each of these approaches has distinct advantages and limitations. GANs, known for their adversarial training framework, often face challenges such as unstable training and reduced diversity in generated samples. VAEs rely on surrogate loss functions, which may limit the fidelity of outputs. Flow-based models require specialized reversible architectures, adding complexity to their design.

- Diffusion models present a compelling alternative to these traditional methods. Inspired by non-equilibrium thermodynamics, they define a Markov chain to incrementally add random noise to data in a forward process. The model then learns to reverse this diffusion process, reconstructing data samples from the noise. Unlike VAEs or Flow-based models, diffusion models follow a fixed learning procedure and utilize high-dimensional latent variables, matching the dimensionality of the original data.

- The concept of diffusion models for generative tasks was first introduced in Sohl-Dickstein et al., 2015. Significant advancements came later with the contributions of Song et al., 2019 at Stanford University and Ho et al., 2020 at Google Brain, who independently improved the approach and expanded its applicability.

- A helpful visual overview of the different types of generative models, including diffusion models, can be found in this diagram from Lilian Weng’s blog:

- Diffusion models are conceptually simple yet powerful. Their state-of-the-art results, coupled with the absence of adversarial training, have propelled them to prominence. They have proven essential in cutting-edge models for conditional and unconditional generation across various modalities such as images, audio, and video. Examples include GLIDE and DALL-E 2 by OpenAI, Latent Diffusion by the University of Heidelberg, Imagen by Google Brain, and Stable Diffusion by Stability.ai.

- As a relatively new paradigm, diffusion models hold immense potential for further refinement and innovation. Their current success underscores their growing importance in the field of generative modeling.

Overview

- The meteoric rise of diffusion models is one of the biggest developments in Machine Learning in the past several years.

- Diffusion models are generative models which have been gaining significant popularity in the past several years, and for good reason. A handful of seminal papers released in the 2020s alone have shown the world what Diffusion models are capable of, such as beating GANs on image synthesis. Most recently, Diffusion models were used in DALL-E 2, OpenAI’s image generation model (image below generated using DALL-E 2).

- Given the recent wave of success by Diffusion models, many Machine Learning practitioners are surely interested in their inner workings.

- In this primer, we will examine the theoretical foundations of diffusion models, and then demonstrate how to generate images with a diffusion model in PyTorch.

Introduction

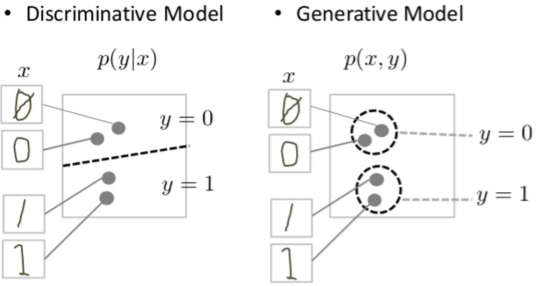

- Diffusion probabilistic models (also simply called diffusion models) are generative models, meaning that they are used to generate data similar to the data on which they are trained. As the name suggests, generative models are used to generate new data, for e.g., they can generate new photos of animals that look like real animals whereas discriminative models could tell apart a cat from a dog.

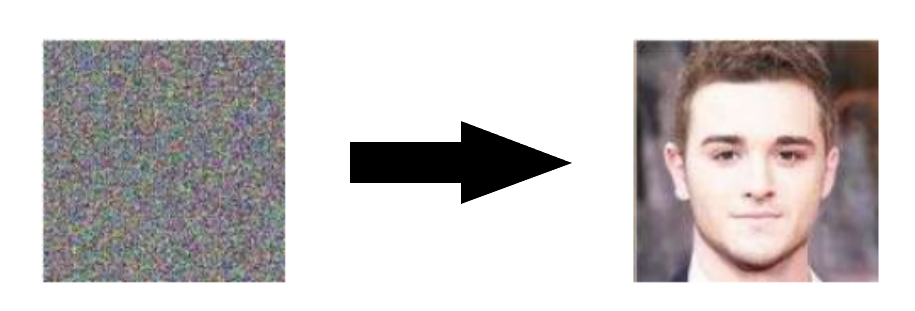

- Fundamentally, diffusion models work by destroying training data through the successive addition of Gaussian noise, and then learning to recover the data by reversing this noising process. In other words, diffusion models are parameterized Markov chains models trained to gradually denoise data. After training, we can use the diffusion model to generate data by simply passing randomly sampled noise through the learned denoising process. In other words, diffusion models undergo the process of transforming a random collection of numbers (the “latents tensor”) into a processed collection of numbers containing the right image information.

- Diffusion Models also go by Diffusion Probabilistic Models, score-based generative models or in some contexts, have been compared to denoising autoencoders owing to similarity in behavior.

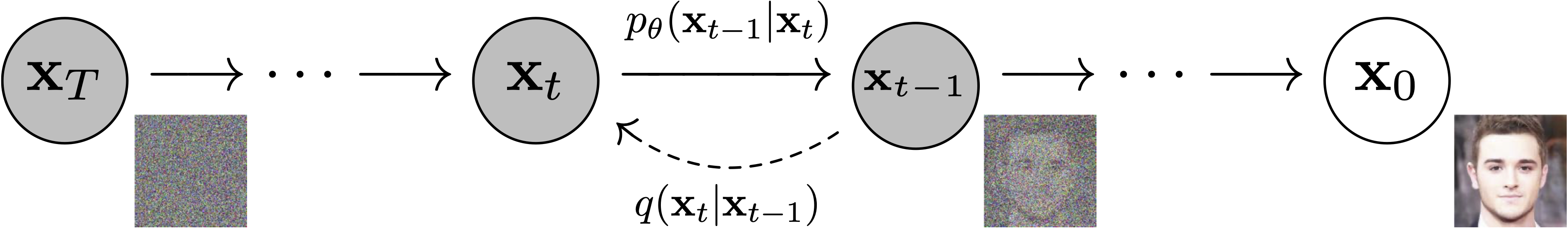

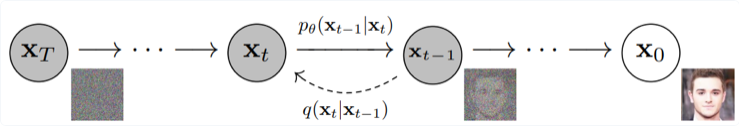

- The following diagram shows that diffusion models can be used to generate images from noise (figure modified from source):

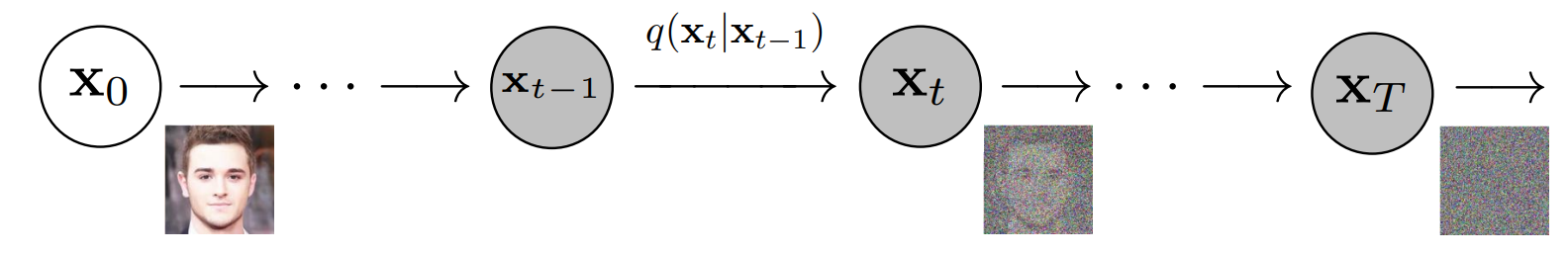

- More specifically, a diffusion model is a latent variable model which maps to the latent space using a fixed Markov chain. This chain gradually adds noise to the data in order to obtain the approximate posterior \(q\left(\mathbf{x} 1: T \mid \mathbf{x}_{0}\right)\), where \(\mathbf{x} 1, \ldots, \mathbf{x} T\) are the latent variables with the same dimensionality as \(\mathbf{x}_{0}\). In the figure below, we see such a Markov chain manifested for image data.

- The following diagram (figure modified from source):

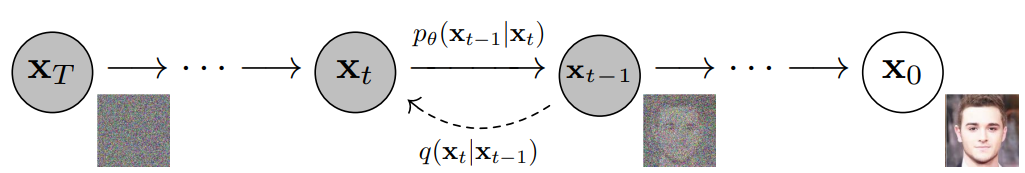

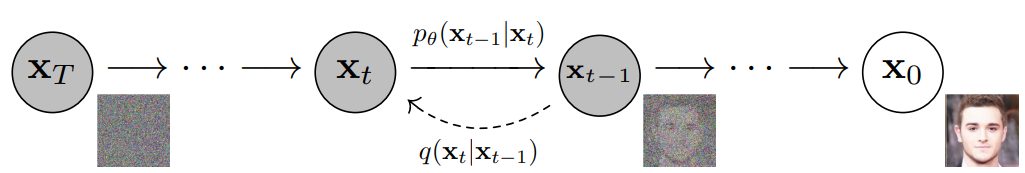

- Ultimately, the image is asymptotically transformed to pure Gaussian noise. The goal of training a diffusion model is to learn the reverse process - i.e. training \(p_{\theta}\left(x_{t-1} \mid x_{t}\right)\). By traversing backwards along this chain, we can generate new data, as shown below (figure modified from source).

- Under-the-hood, diffusion Models define a Markov chain of diffusion steps that add random noise to the data and then learn to reverse the diffusion process in order to create the desired data output from the noise. This can be seen in the image below:

- Recall that a Markov chain is a stochastic model that describes a sequence of possible events where the probability of each event only depends on the state of the previous event. Markov chains are used to calculate the probability of an event occurring by considering it as a state transitioning to another state or a state transitioning to the same state as before. The defining characteristic of a Markov chain is that no matter how the process arrived at its present state, the possible future states are fixed.

- Key takeaway

- In a nutshell, diffusion models are constructed by first describing a procedure for gradually turning data into noise, and then training a neural network that learns to invert this procedure step-by-step. Each of these steps consists of taking a noisy input and making it slightly less noisy, by filling in some of the information obscured by the noise. If you start from pure noise and do this enough times, it turns out you can generate data this way! (source)

Advantages

- Diffusion probabilistic models are latent variable models capable to synthesize high quality images. As mentioned above, research into diffusion models has exploded in recent years. Inspired by non-equilibrium thermodynamics, diffusion models currently produce State-of-the-Art image quality, examples of which can be seen below (figure adapted from source):

- Beyond cutting-edge image quality, diffusion models come with a host of other benefits, including not requiring adversarial training. The difficulties of adversarial training are well-documented; and, in cases where non-adversarial alternatives exist with comparable performance and training efficiency, it is usually best to utilize them. On the topic of training efficiency, diffusion models also have the added benefits of scalability and parallelizability.

- While diffusion models almost seem to be producing results out of thin air, there are a lot of careful and interesting mathematical choices and details that provide the foundation for these results, and best practices are still evolving in the literature. Let’s take a look at the mathematical theory underpinning diffusion models in more detail now.

- Their performance is, allegedly, superior to recent state-of-the-art generative models like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) in most cases.

Definitions

Diffusion Models

- Diffusion models are neural models that model \(p_{\theta}\left(\mathbf{x}_{t-1} \mid \mathbf{x}_{t}\right)\) and are trained end-to-end to denoise a noisy input to a continuous output such as an image/audio (similar to how GANs generate continuous outputs). Examples: UNet, Conditioned UNet, 3D UNet, Transformer UNet.

- The following figure from the DDPM paper shows the process of a diffusion model:

Schedulers

- Algorithm class for both inference and training. The class provides functionality to compute previous image according to alpha, beta schedule as well as predict noise for training. Examples: DDPM, DDIMs, PNDM, DEIS.

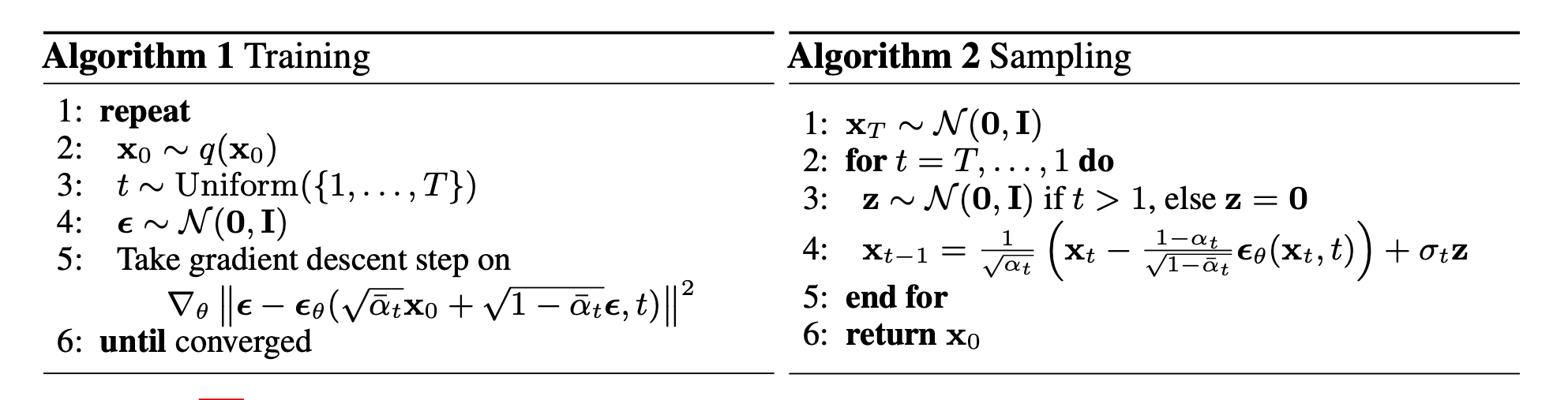

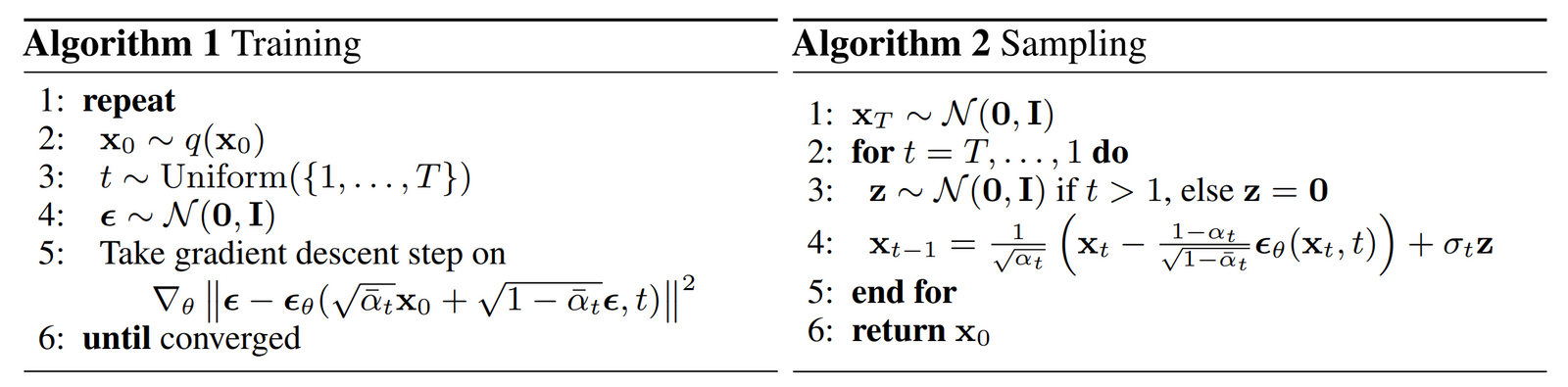

- The figure below from the DDPM paper shows the sampling and training algorithms:

Sampling and training algorithms

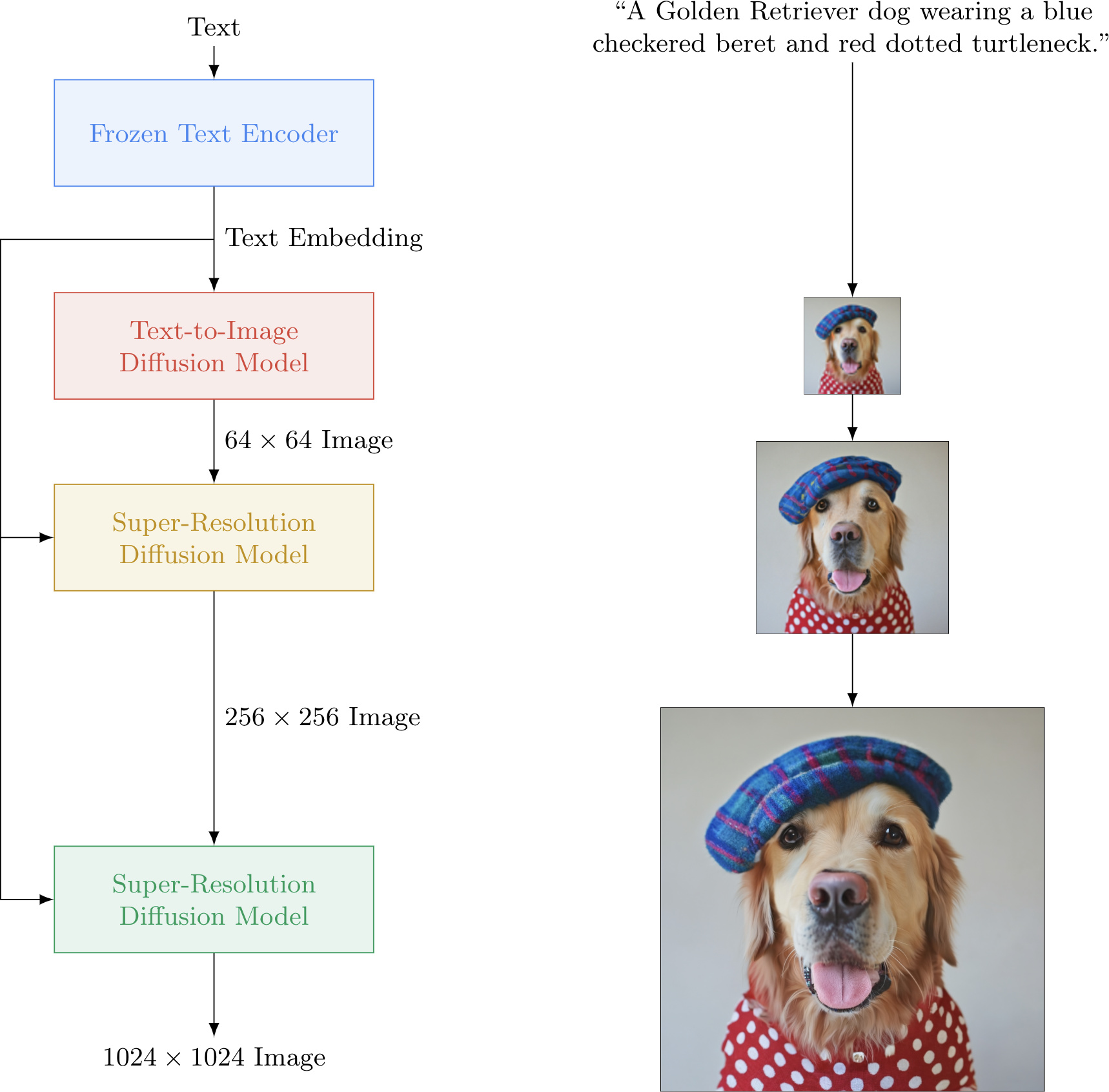

- Diffusion Pipeline: End-to-end pipeline that includes multiple diffusion models, possible text encoders, super-resolution model (for high-res image generation, in case of Imagen), etc.

- Examples: GLIDE, Latent-Diffusion, Imagen, DALL-E 2.

- The figure below from the Imagen paper shows the overall flow of the model.

Diffusion Models: The Theory

-

In this section, we explore the theoretical foundation of diffusion models:

-

Markov Chain Representation: A diffusion model is parameterized as a Markov chain, implying that the latent variables \(x_1, \ldots, x_T\) depend solely on the adjacent time-step (preceding or following).

-

Gaussian Transition Distributions: The transitions in the Markov chain are modeled as Gaussian distributions. The forward process employs a variance schedule, while the parameters for the reverse process are learned.

-

Asymptotic Distribution: The diffusion process ensures that \(x_T\) converges asymptotically to an isotropic Gaussian distribution for sufficiently large \(T\).

-

Variance Scheduling: While the variance schedule in our implementation is fixed, it can also be learned. For fixed schedules, using a geometric progression often yields better results compared to a linear progression. Regardless of the approach, the variances typically increase over time within the series (\(\beta_i < \beta_j\) for \(i < j\)).

-

Architectural Flexibility: Diffusion models are highly versatile, accommodating any architecture with matching input and output dimensionalities. Commonly, U-Net-like architectures are utilized in implementations.

-

Training Objective: The training goal is to maximize the likelihood of the data, which involves optimizing model parameters to minimize the variational upper bound of the negative log likelihood.

-

Role of KL Divergences: Owing to the Markov assumption, most terms in the objective function can be expressed as KL divergences. These are tractable for computation because the model assumes Gaussian distributions, thereby avoiding the need for Monte Carlo approximations.

-

Simplified Objective: Training is most stable and effective when a simplified objective function is used to predict the noise component of a given latent variable.

-

Discrete Decoding: In the final step of the reverse diffusion process, a discrete decoder is employed to compute log likelihoods across pixel values.

-

Diffusion models: A Deep Dive

General Overview

- Diffusion Models are a latent variable model that maps the latent space using the Markov chain. They essentially are made up of neural networks that learn to gradually de-noise data.

- Note: Latent variable models aim to model the probability distribution with latent variables. Latent variables are a transformation of the data points into a continuous lower-dimensional space. (source)

- Additionally, the latent space is simply a representation of compressed data in which similar data points are closer together in space.

- Latent space is useful for learning data features and for finding simpler representations of data for analysis.

- Diffusion models consist of two processes: a predefined forward diffusion and a learned reverse de-noising diffusion process.

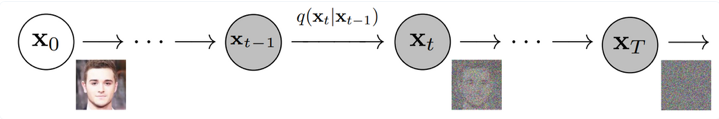

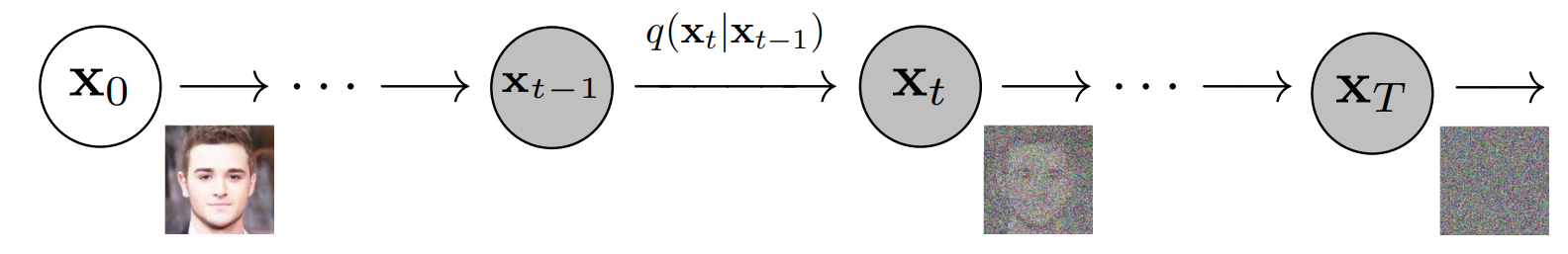

- In the image below, we can see the Markov chain working towards image generation. It represents the first process of forward diffusion.

- The forward diffusion process \(q\) of our choosing, gradually adds Gaussian noise to an image, until you end up with pure noise.

- Next, the image is asymptotically transformed to just Gaussian noise. The goal of training a diffusion model is to learn the reverse process.

- The second process below is the learned reverse de-noising diffusion process \(p_\theta\). Here, a neural network is trained to gradually de-noise an image starting from pure noise, until you end up with an actual image.

- Thus, we traverse backwards along this chain to generate the new data as seen below:

- Both the forward and reverse process (both indexed with \(t\)) continue for a duration of finite time steps \(T\) (the DDPM authors use \(T\) =1000).

- You will start off with \(t = 0\) where you will sample a real image \(x_0\) from your data distribution.

- A quick example is say you have an image of a cat from ImageNet dataset.

- You will then continue with the forward process and sample some noise from a Gaussian distribution at each time step \(t\).

- This will be added to the image of the previous time step.

- Given a sufficiently large \(T\) and a continuous process of adding noise at each time step, you will end up with \(t = T\).

-

This is also called an isotropic Gaussian distribution.

- Below is the high-level overview of how everything runs under the hood:

- we take a random sample \(x_0\) from the real unknown and complex data distribution \(q(x_0)\)

- we sample a noise level \(t\) uniformly between \(1\) and \(T\) (i.e., a random time step)

- we sample some noise from a Gaussian distribution and corrupt the input by this noise at level \(t\) (using the nice property defined above)

- the neural network is trained to predict this noise based on the corrupted image \(x_t\) (i.e. noise applied on \(x_0\) based on known schedule \(\beta_t\)) In reality, all of this is done on batches of data, as one uses stochastic gradient descent to optimize neural networks.

The Math Under-the-hood

-

As mentioned above, a diffusion model consists of a forward process (or diffusion process), in which a datum (generally an image) is progressively noised, and a reverse process (or reverse diffusion process), in which noise is transformed back into a sample from the target distribution.

-

The sampling chain transitions in the forward process can be set to conditional Gaussians when the noise level is sufficiently low. Combining this fact with the Markov assumption leads to a simple parameterization of the forward process:

\[q\left(\mathbf{x}_{1: T} \mid \mathbf{x}_{0}\right):=\prod_{t=1}^{T} q\left(\mathbf{x}_{t} \mid \mathbf{x}_{t-1}\right):=\prod_{t=1}^{T} \mathcal{N}\left(\mathbf{x}_{t} ; \sqrt{1-\beta_{t}} \mathbf{x}_{t-1}, \beta_{t} \mathbf{I}\right)\]- where \(\beta_1, \ldots, \beta_T\) is a variance schedule (either learned or fixed) which, if well-behaved, ensures that \(x_T\) is nearly an isotropic Gaussian for sufficiently large \(T\).

-

Given the Markov assumption, the joint distribution of the latent variables is the product of the Gaussian conditional chain transitions (figure modified from source).

-

As mentioned previously, the “magic” of diffusion models comes in the reverse process. During training, the model learns to reverse this diffusion process in order to generate new data. Starting with the pure Gaussian noise \(p(\mathbf{x} T):=\mathcal{N}\left(\mathbf{x}_{T}, \mathbf{0}, \mathbf{I}\right)\), the model learns the joint distribution \(p \theta(\mathbf{x} 0: T)\) as,

\[p_{\theta}\left(\mathbf{x}_{0: T}\right):=p\left(\mathbf{x}_{T}\right) \prod_{t=1}^{T} p_{\theta}\left(\mathbf{x}_{t-1} \mid \mathbf{x}_{t}\right):=p\left(\mathbf{x}_{T}\right) \prod_{t=1}^{T} \mathcal{N}\left(\mathbf{x}_{t-1} ; \boldsymbol{\mu}_{\theta}\left(\mathbf{x}_{t}, t\right), \boldsymbol{\Sigma}_{\theta}\left(\mathbf{x}_{t}, t\right)\right)\]- where the time-dependent parameters of the Gaussian transitions are learned. Note in particular that the Markov formulation asserts that a given reverse diffusion transition distribution depends only on the previous timestep (or following timestep, depending on how you look at it):

Diffusion models are a class of generative models that define a forward process that gradually adds noise to data, and a reverse process that learns to denoise it step-by-step to generate new data. Below is an enhanced explanation of key types of diffusion models, including DDPM and DDIMs, with implementation details and references to the original papers where each model type was proposed.

Types of Diffusion Models

- Diffusion models are a class of generative models that define a forward process that gradually adds noise to data, and a reverse process that learns to denoise it step-by-step to generate new data. Below is an enhanced explanation of key types of diffusion models, including DDPM, DDIMs, SGMs, VDMs, and SDE-based models, with implementation details and their pros and cons.

Denoising Diffusion Probabilistic Models (DDPMs) / Discrete-Time Diffusion Models

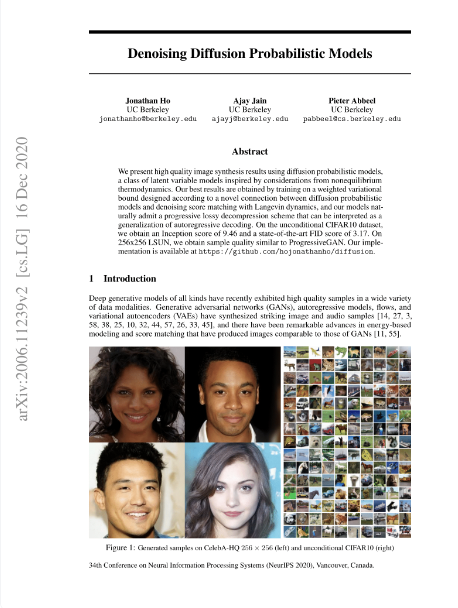

- Denoising Diffusion Probabilistic Models (DDPMs) were proposed in the paper “Denoising Diffusion Probabilistic Models” by Jonathan Ho, Ajay Jain, and Pieter Abbeel (2020).

- DDPMs are a foundational type of diffusion model introduced by Jonathan Ho, Ajay Jain, and Pieter Abbeel in 2020. They consist of two processes: a forward process (diffusion) that adds noise to data, and a reverse process (denoising) that learns to remove this noise to generate new data samples.

Implementation Details

-

Forward Process:

- The forward process gradually corrupts the data by adding Gaussian noise over a series of timesteps \(t = 1, \ldots, T\). Given data \(x_0\), the noisy version at timestep \(t\) is given by:

- where \(\beta_t\) are variance schedule parameters controlling the noise level at each timestep. This process ensures that as \(t\) increases, the data becomes increasingly noisy, eventually resembling pure Gaussian noise.

-

Reverse Process:

The reverse process aims to denoise the data by learning to reverse the forward process. The model \(p_\theta(x_{t-1} \| x_t)\) is trained to approximate:

\[p_\theta(x_{t-1} \| x_t) = \mathcal{N}(x_{t-1}; \mu_\theta(x_t, t), \sigma_t^2 I)\]- where \(\mu_\theta\) is the mean predicted by a neural network, and \(\sigma_t^2\) is a fixed variance. The objective is to minimize the variational bound, typically using a simplified loss function:

- where \(\epsilon\) is sampled from a standard normal distribution, and \(\epsilon_\theta\) is the noise predicted by the model. This loss function encourages the model to accurately predict the noise added during the forward process, facilitating effective denoising during sampling.

-

Sampling:

- The denoising process starts from pure noise \(x_T \sim \mathcal{N}(0, I)\) and gradually generates a sample \(x_0\) by reversing the noise addition steps. This iterative process involves sampling from the learned reverse distributions \(p_\theta(x_{t-1} \| x_t)\) for \(t = T, \ldots, 1\), ultimately producing a data sample that resembles the original data distribution.

Pros

- Strong theoretical foundation with a clear probabilistic framework.

- Capable of generating high-quality samples.

Cons

- Sampling can be computationally intensive due to the large number of required steps.

Denoising Diffusion Implicit Models (DDIMs)

- Denoising Diffusion Implicit Models (DDIMs) were proposed in the paper “Denoising Diffusion Implicit Models” by Jiaming Song, Chenlin Meng, and Stefano Ermon (2020). DDIMs are an extension of DDPM that allow for deterministic sampling, which can significantly speed up the generation process.

Implementation Details

- Forward Process:

- Similar to DDPMs, the forward process in DDIMs involves adding noise to the data. However, the key difference lies in the parameterization of the reverse process, which allows for a deterministic mapping during sampling.

- Reverse Process:

- DDIMs modifies the reverse process to make it deterministic. Instead of learning the reverse transition probabilities, DDIMs defines a deterministic mapping:

- where \(\alpha_t\) is a function of the noise schedule \(\beta_t\). This deterministic approach allows for a fixed trajectory in the latent space, making the process faster and more efficient.

- Sampling:

- The sampling process in DDIMs can be interpreted as a non-Markovian process that generates samples in fewer steps compared to DDPM, offering a trade-off between sample quality and computational efficiency. By eliminating the stochasticity in the reverse process, DDIMs enables faster sampling while maintaining high-quality sample generation.

Pros

- Faster sampling compared to DDPM due to deterministic processes.

- Offers a trade-off between sample quality and computational efficiency.

Cons

- May require careful tuning to balance speed and sample quality.

Score-Based Generative Models (SGMs) / Continuous-Time Diffusion Models

- Score-Based Generative Models (SGMs) leverage the score function of the data distribution to guide the denoising process, rather than modeling the direct transition probabilities between noisy and clean samples. This approach was introduced in the paper “Generative Modeling by Estimating Gradients of the Data Distribution” by Yang Song and Stefano Ermon (2019).

Implementation Details

- Neural Network Architecture:

- SGMs typically employ U-Net architectures due to their efficacy in capturing multi-scale features, which is crucial for modeling the score function across different noise levels.

- Noise Conditional Score Network (NCSN):

-

The neural network is trained to predict the score function conditioned on the noise level, denoted as \(s_\theta(x_t, \sigma_t)\), where:

\[s_\theta(x_t, \sigma_t) = \nabla_{x_t} \log p(x_t)\] -

This conditioning allows the model to handle varying degrees of noise during the denoising process.

-

- Training Objective:

-

The model is trained using a denoising score matching objective, which minimizes:

\[L = \mathbb{E}_{x_0, t, \epsilon} \left[ \lambda(t) \| s_\theta(x_t, t) - \nabla_{x_t} \log p(x_t \| x_0) \|^2 \right]\]- where \(\lambda(t)\) is a weighting function controlling the importance of different noise levels.

-

- Sampling Procedure:

-

Sampling from SGMs involves simulating the reverse diffusion process using Langevin dynamics:

\[x_{t-1} = x_t + \eta s_\theta(x_t, t) + \sqrt{2\eta} z_t\]- where \(\eta\) is the step size and \(z_t \sim \mathcal{N}(0, I)\) is Gaussian noise.

-

Pros

- SGMs can offer flexible sampling methods, including deterministic and stochastic options.

- These models effectively capture complex data distributions.

- They generalize well across different data types.

Cons

- The sampling process can be slow due to iterative Langevin steps.

- Training requires accurate estimation of the score function across different noise levels.

Variational Diffusion Models (VDMs)

- Variational Diffusion Models (VDMs) integrate variational inference with diffusion processes to enhance flexibility and sample quality. This approach was introduced in the paper “High-Resolution Image Synthesis with Latent Diffusion Models” by Robin Rombach et al. (2022).

Implementation Details

-

Latent Variable Introduction:

- VDMs integrate latent variables \(z\) into the diffusion framework, combining the strengths of Variational Autoencoders (VAEs) and diffusion processes. The generative process includes:

- Variational Inference:

- To approximate the intractable posterior \(p(z \| x)\), VDMs employ a variational approximation \(q_\phi(z \| x)\), and the model is trained by maximizing the Evidence Lower Bound (ELBO):

- Diffusion Process in Latent Space:

- The forward diffusion process in VDMs gradually corrupts the latent variables:

- The reverse process then learns to denoise these latent variables, effectively modeling the data distribution in the latent space.

-

Combining VAE and Diffusion:

- By integrating the VAE framework with diffusion processes, VDMs leverage the latent variable structure to guide the denoising process. This combination enhances the flexibility and expressiveness of the model, allowing it to capture complex data distributions more effectively.

Pros

- Latent diffusion significantly reduces computational costs compared to pixel-based diffusion.

- Combines the advantages of VAEs and diffusion models for improved representation learning.

- More efficient in generating high-resolution images.

Cons

- Training requires careful balancing of the latent space structure.

- Requires additional encoding-decoding steps.

Stochastic Differential Equation (SDE)-Based Models

- Stochastic Differential Equation (SDE)-based diffusion models generalize the diffusion process to a continuous-time framework. This approach was introduced in the paper “Score-Based Generative Modeling through Stochastic Differential Equations” by Yang Song et al. (2021).

Implementation Details

- Continuous-Time Formulation:

- SDE-based models generalize the discrete-time diffusion process to continuous time using an SDE:

- where \(W\) is a Wiener process, and \(f(x, t)\) and \(g(t)\) are drift and diffusion coefficients, respectively.

- Forward and Reverse SDEs:

- The forward SDE describes the noise addition:

- The reverse-time SDE is used for sampling:

- Training via Score Matching:

- The score function \(s_\theta(x, t)\) is learned via score matching:

- Sampling with Numerical Solvers:

- Sampling from SDE-based models involves solving the reverse-time SDE using numerical methods such as Euler-Maruyama:

- where \(z_t \sim \mathcal{N}(0, I)\).

Pros

- SDE-based models provide a continuous-time formulation, leading to more flexibility in training and sampling.

- They can generate high-quality samples efficiently.

- The framework is theoretically robust and generalizes other diffusion-based methods.

Cons

- Requires specialized solvers for numerical integration.

- Computational cost can be high for complex models.

Comparative Analysis

- The table below offers a detailed comparison highlights the strengths and trade-offs among different diffusion model variants, offering insights into their practical applications and limitations.

| Model Type | Strengths | Weaknesses | Notable Features |

|---|---|---|---|

| DDPM | Strong theoretical foundation, high-quality samples | Slow sampling due to large step count | Probabilistic denoising framework |

| DDIMs | Faster sampling than DDPM, deterministic | Requires careful tuning | Reduces step count for efficient generation |

| SGMs | Flexible sampling, well-suited for complex data | Slow due to iterative Langevin steps | Uses score function for denoising |

| VDMs | Efficient high-resolution image generation | Complexity in training and inference | Integrates VAEs with diffusion |

| SDE-Based | Continuous-time, flexible framework | Requires numerical solvers, computationally expensive | Extends diffusion models into continuous-time |

Training

- A diffusion model is trained by finding the reverse Markov transitions that maximize the likelihood of the training data. In practice, training equivalently consists of minimizing the variational upper bound on the negative log likelihood.

-

As a notation detail, note that \(L_{v l b}\) is technically an upper bound (the negative of the ELBO) which we are trying to minimize, but we refer to it as \(L_{v l b}\) for consistency with the literature.

-

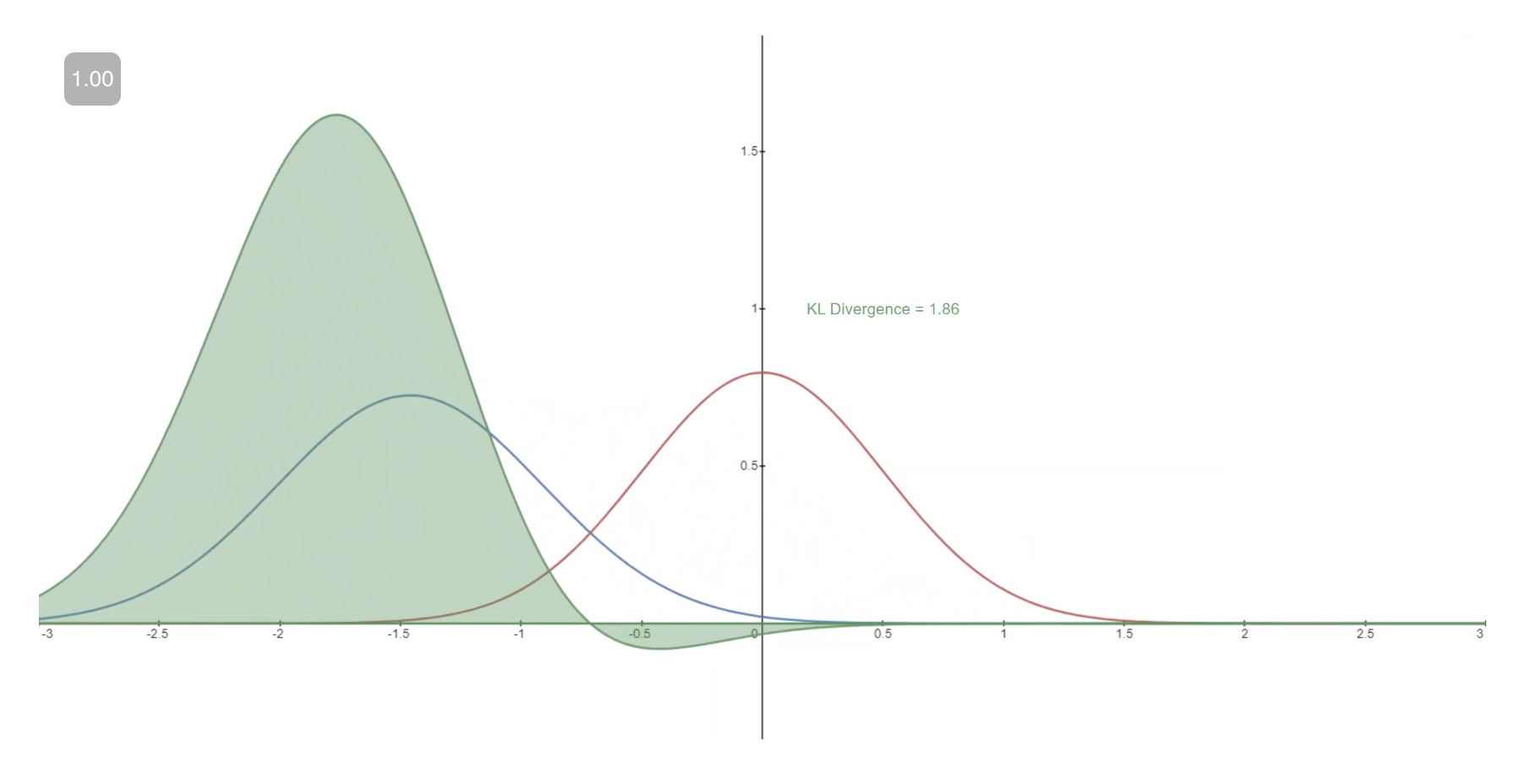

We seek to rewrite the \(L_{v l b}\) in terms of Kullback-Leibler (KL) Divergences. The KL Divergence is an asymmetric statistical distance measure of how much one probability distribution \(P\) differs from a reference distribution \(Q\). We are interested in formulating \(L_{v l b}\) in terms of \(KL\) divergences because the transition distributions in our Markov chain are Gaussians, and the KL divergence between Gaussians has a closed form.

Recap: KL Divergence

-

The mathematical form of the \(\mathrm{KL}\) divergence for continuous distributions is,

\[D_{\mathrm{KL}}(P \| Q)=\int_{-\infty}^{\infty} p(x) \log \left(\frac{p(x)}{q(x)}\right) d x\]- Note that the double bars in the above equation indicate that the function is not symmetric with respect to its arguments.

-

Below you can see the \(K L\) divergence of a varying distribution \(P\) (blue) from a reference distribution \(Q\) (red). The green curve indicates the function within the integral in the definition for the \(\mathrm{KL}\) divergence above, and the total area under the curve represents the value of the KL divergence of \(P\) from \(Q\) at any given moment, a value which is also displayed numerically.

Casting \(L_{v l b}\) in Terms of KL Divergences

-

As mentioned previously, it is possible[1] to rewrite \(L v l b\) almost completely in terms of KL divergences:

\[L_{v l b}=L_{0}+L_{1}+\ldots+L_{T-1}+L_{T}\]- where, \(\begin{gathered} L_{0}=-\log p_{\theta}\left(x_{0} \mid x_{1}\right) \\ L_{t-1}=D_{K L}\left(q\left(x_{t-1} \mid x_{t}, x_{0}\right) \| p_{\theta}\left(x_{t-1} \mid x_{t}\right)\right) \\ L_{T}=D_{K L}\left(q\left(x_{T} \mid x_{0}\right) \| p\left(x_{T}\right)\right) \end{gathered}\)

-

Conditioning the forward process posterior on \(x_{0}\) in \(L_{t-1}\) results in a tractable form that leads to all KL divergences being comparisons between Gaussians. This means that the divergences can be exactly calculated with closed-form expressions rather than with Monte Carlo estimates.

Model Choices

- With the mathematical foundation for our objective function established, we now need to make several choices regarding how our diffusion model will be implemented. For the forward process, the only choice required is defining the variance schedule, the values of which are generally increasing during the forward process.

- For the reverse process, we much choose the Gaussian distribution parameterization / model architecture(s). Note the high degree of flexibility that Diffusion models afford - the only requirement on our architecture is that its input and output have the same dimensionality. We will explore the details of these choices in more detail below.

Forward Process and \(L_{T}\)

- As noted above, regarding the forward process, we must define the variance schedule. In particular, we set them to be time-dependent constants, ignoring the fact that they can be learned. For example, per Denoising Diffusion Probabilistic models, a linear schedule from \(\beta_{1}=10^{-4}\) to \(\beta_{T}=0.2\) might be used, or perhaps a geometric series. Regardless of the particular values chosen, the fact that the variance schedule is fixed results in \(L_{T}\) becoming a constant with respect to our set of learnable parameters, allowing us to ignore it as far as training is concerned.

Reverse Process and \(L_{1: T-1}\)

- Now we discuss the choices required in defining the reverse process. Recall from above we defined the reverse Markov transitions as a Gaussian:

- We must now define the functional forms of \(\mu_{\theta}\) or \(\Sigma_{\theta}\). While there are more complicated ways to parameterize \(\boldsymbol{\Sigma}_\theta\), we simply set,

- That is, we assume that the multivariate Gaussian is a product of independent gaussians with identical variance, a variance value which can change with time. We set these variances to be equivalent to our forward process variance schedule.

-

Given this new formulation of \(\Sigma_{\theta_{1}}\), we have

\[p_{\theta}\left(\mathbf{x}_{t-1} \mid \mathbf{x}_{t}\right):=\mathcal{N}\left(\mathbf{x}_{t-1} ; \boldsymbol{\mu}_{\theta}\left(\mathbf{x}_{t}, t\right), \mathbf{\Sigma}_{\theta}\left(\mathbf{x}_{t}, t\right):=\mathcal{N}\left(\mathbf{x}_{t-1} ; \boldsymbol{\mu}_{\theta}\left(\mathbf{x}_{t}, t\right), \sigma_{t}^{2} \mathbf{I}\right)\right.\]- which allows us to transform,

- to,

- where the first term in the difference is a linear combination of \(x t\) and \(x_{0}\) that depends on the variance schedule \(\beta_{t}\). The exact form of this function is not relevant for our purposes, but it can be found in Denoising Diffusion Probabilistic models. The significance of the above proportion is that the most straightforward parameterization of \(\mu_{\theta}\) simply predicts the diffusion posterior mean. Importantly, the authors of Denoising Diffusion Probabilistic models actually found that training \(\mu \theta\) to predict the noise component at any given timestep yields better results. In particular, let

- where, \(\alpha_{t}:=1-\beta_{t} \quad\) and \(\quad \bar{\alpha}_{t}:=\prod_{s=1}^{t} \alpha_{s}\).

- This leads to the following alternative loss function, which the authors of Denoising Diffusion Probabilistic models found to lead to more stable training and better results:

- The authors of Denoising Diffusion Probabilistic models also note connections of this formulation of Diffusion models to score-matching generative models based on Langevin dynamics. Indeed, it appears that Diffusion models and ScoreBased models may be two sides of the same coin, akin to the independent and concurrent development of wave-based quantum mechanics and matrix-based quantum mechanics revealing two equivalent formulations of the same phenomena.

Network Architecture: U-Net and Diffusion Transformer (DiT)

- Let’s dive deep into the network architecture of a diffusion model. Note that the only requirement for the model is that its input and output dimensionality are identical. Specifically, the neural network needs to take in a noised image at a particular time step and return the predicted noise. The predicted noise here is a tensor that has the same size and resolution as the input image. Thus, these neural networks take in tensors and return tensors of the same shape.

- Given this restriction, it is perhaps unsurprising that image diffusion models are commonly implemented with U-Net-like architectures. Put simply, U-Net-based diffusion models are the most prevalent type of diffusion models.

- There are primarily two types of architectures used in diffusion models: U-Net and Diffusion Transformer (DiT). U-Net-based architectures are highly effective for image-related tasks due to their spatially structured convolutional operations, while Diffusion Transformers leverage self-attention mechanisms to capture long-range dependencies, making them versatile for various data types.

U-Net-Based Diffusion Models

-

The neural net architecture that the authors of DDPM used was U-Net. Here are the key aspects of the U-Net architecture:

- Encoder-Decoder Structure:

- The network consists of a “bottleneck” layer in the middle of its architecture between the encoder and decoder.

- The encoder first encodes an image into a smaller hidden representation called the “bottleneck”.

- The decoder then decodes that hidden representation back into an actual image.

- Bottleneck Layer:

- This bottleneck ensures the network learns only the most important information.

- It compresses the input data to focus on essential features, facilitating effective denoising and reconstruction.

- Skip Connections:

- U-Net architecture includes skip connections between corresponding layers of the encoder and decoder.

- These connections help retain fine-grained details lost during downsampling in the encoder and reintroduce them during upsampling in the decoder.

- Encoder-Decoder Structure:

Diffusion Transformer (DiT)

-

In contrast to U-Net-based diffusion models, Diffusion Transformers (DiT), proposed in Scalable Diffusion Models with Transformers, employ a transformer-based architecture, characterized by self-attention mechanisms. Here are the key features of DiT:

- Transformer-Based Architecture:

- Utilizes layers of multi-head self-attention and feed-forward networks.

- Captures long-range dependencies and complex interactions within the data.

- Sequential and Attention Mechanisms:

- Handles data as sequences, leveraging self-attention to weigh the importance of different parts of the input dynamically.

- Well-suited for tasks requiring the understanding of relationships over long distances in the input data.

- Can be adapted for various data types, not just images, due to its flexibility in data representation.

- Transformer-Based Architecture:

Comparison

Model Complexity and Parameters

- DiT:

- Generally has a higher model complexity due to the quadratic scaling of self-attention with the input size.

- Requires significant computational resources for handling large sequences or high-resolution data.

- U-Net:

- Model complexity is more manageable, as convolutional operations scale linearly with input size.

- Easier to train and requires fewer computational resources compared to transformers, especially for high-resolution image data.

Training and Optimization

- DiT:

- Training can be more challenging due to the complexity of the attention mechanisms and the need for large-scale data to fully leverage its capabilities.

- Optimization techniques like learning rate schedules, gradient clipping, and advanced initialization methods are often necessary.

- U-Net:

- Training is generally more straightforward due to the well-understood nature of convolutional operations and spatial processing.

- Beneficial for applications with limited computational resources or where rapid prototyping and iteration are required.

Reverse Process of U-Net-based Diffusion Models

- The path along the reverse process consists of many transformations under continuous conditional Gaussian distributions. At the end of the reverse process, recall that we are trying to produce an image, which is composed of integer pixel values. Therefore, we must devise a way to obtain discrete (log) likelihoods for each possible pixel value across all pixels.

-

The way that this is done is by setting the last transition in the reverse diffusion chain to an independent discrete decoder. To determine the likelihood of a given image \(x_0\) given \(x_{1}\), we first impose independence between the data dimensions:

\[p_{\theta}\left(x_{0} \mid x_{1}\right)=\prod_{i=1}^{D} p_{\theta}\left(x_{0}^{i} \mid x_{1}^{i}\right)\]- where \(D\) is the dimensionality of the data and the superscript \(i\) indicates the extraction of one coordinate. The goal now is to determine how likely each integer value is for a given pixel given the distribution across possible values for the corresponding pixel in the slightly noised image at time \(t=1\) :

- where the pixel distributions for \(t=1\) are derived from the below multivariate Gaussian whose diagonal covariance matrix allows us to split the distribution into a product of univariate Gaussians, one for each dimension of the data:

-

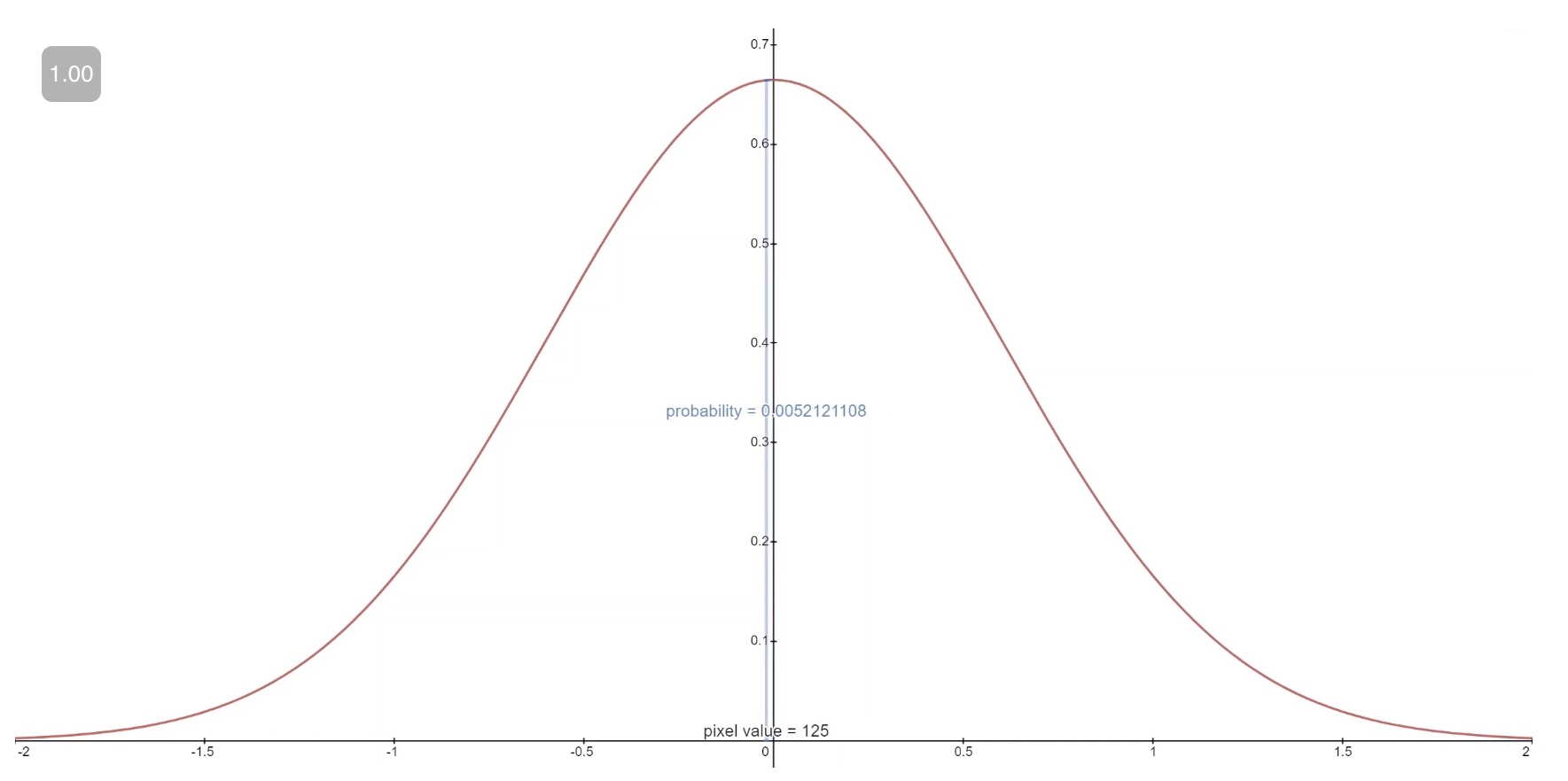

We assume that the images consist of integers in \(0,1, \ldots, 255\) (as standard RGB images do) which have been scaled linearly to \([-1,1]\). We then break down the real line into small “buckets”, where, for a given scaled pixel value \(x\), the bucket for that range is \([x-1 / 255, x+1 / 255]\). The probability of a pixel value \(x\), given the univariate Gaussian distribution of the corresponding pixel in \(x_1\), is the area under that univariate Gaussian distribution within the bucket centered at \(x\).

- The figure below shows the area for each of these buckets with their probabilities for a mean-0 Gaussian which, in this context, corresponds to a distribution with an average pixel value of \(\frac{255}{2}\) (half brightness). The red curve represents the distribution of a specific pixel in the \(t=1\) image, and the areas give the probability of the corresponding pixel value in the \(t=0\) image.

-

Technical Note: The first and final buckets extend out to -inf and +inf to preserve total probability.

-

Given a \(t=0\) pixel value for each pixel, the value of \(p_{\theta}\left(x_{0} \mid x_{1}\right)\) is simply their product. Succinctly, this process is succinctly encapsulated by the following equation:

\[p_{\theta}\left(x_{0} \mid x_{1}\right)=\prod_{i=1}^{D} p_{\theta}\left(x_{0}^{i} \mid x_{1}^{i}\right)=\prod_{i=1}^{D} \int_{\delta_{-}\left(x_{0}^{i}\right)}^{\delta_{+}\left(x_{0}^{i}\right)} \mathcal{N}\left(x ; \mu_{\theta}^{i}\left(x_{1}, 1\right), \sigma_{1}^{2}\right) d x\]- where,

- and

-

Given this equation for \(p_{\theta}\left(x_{0} \mid x_{1}\right)\), we can calculate the final term of \(L_{v l b}\) which is not formulated as a \(\mathrm{KL}\) Divergence:

\[L_{0}=-\log p_{\theta}\left(x_{0} \mid x_{1}\right)\]

Reverse Process of DiT-based Diffusion Models

-

In DiT-based diffusion models, the reverse process involves a series of denoising steps utilizing transformer architectures, specifically designed to capture the long-range dependencies in the data. Unlike U-Net-based models, which primarily leverage convolutional operations, DiT-based models employ self-attention mechanisms to model the interactions between different parts of the image more effectively.

-

The reverse process starts with a highly noisy image and progressively refines it through a sequence of transformer blocks. At each timestep, the model predicts the noise component that needs to be subtracted from the current noisy image to move closer to the original image distribution. This iterative process can be described as follows:

-

Input Representation: The noisy image at each timestep is first encoded into a latent representation using an initial linear projection layer. This encoded representation serves as the input to the transformer blocks.

-

Transformer Blocks: Each transformer block consists of multi-head self-attention layers followed by feed-forward networks. The self-attention mechanism allows the model to focus on different parts of the image, capturing global context and dependencies. The feed-forward networks further process this information to refine the noise prediction.

-

Noise Prediction: At each timestep, the output of the transformer blocks is used to predict the noise present in the current image. This predicted noise is then subtracted from the current noisy image to obtain a less noisy version of the image.

-

Iterative Denoising: This process is repeated iteratively, with each step producing a progressively denoised image. The final image, after all the steps, is expected to closely match the original image distribution.

-

-

The objective function for DiT-based models is similar to that of U-Net-based models, aiming to minimize the difference between the predicted and actual noise components. The overall loss function can be expressed as:

\[L_{\text {DiT}}(\theta):=\mathbb{E}_{t, \mathbf{x}_{0}, \epsilon}\left[\left\|\epsilon-\epsilon_{\theta}\left(\mathbf{x}_{t}, t\right)\right\|^{2}\right]\] -

Here, \(\mathbf{x}_{t}\) represents the noisy image at timestep \(t\), and \(\epsilon_{\theta}\) denotes the noise predicted by the transformer-based model. By minimizing this loss, the model learns to accurately predict the noise at each timestep, leading to high-quality image generation through the reverse diffusion process.

Final Objective

- As mentioned in the last section, the authors of Denoising Diffusion Probabilistic models found that predicting the noise component of an image at a given timestep produced the best results. Ultimately, they use the following objective:

- The training and sampling algorithms for our diffusion model therefore can be succinctly captured in the below table (from source):

Summary

- In summary, U-Net-based diffusion models are the most prevalent type of diffusion models, particularly effective for image-related tasks due to their spatially structured convolutional architecture. They are simpler to train and computationally more efficient. The reverse process in U-Net-based models involves many transformations under continuous conditional Gaussian distributions and concludes with an independent discrete decoder to determine pixel values.

- On the other hand, Diffusion Transformers (DiT) leverage the power of transformers to handle a variety of data types, capturing long-range dependencies through attention mechanisms. They utilize a series of denoising steps with transformer blocks, employing self-attention mechanisms to effectively model interactions within the data. However, DiT models are more complex and resource-intensive. The reverse process in DiT-based models involves iterative denoising steps using transformer blocks to progressively refine the noisy image.

- The choice between these models depends on the specific requirements of the task, the nature of the data, and the available computational resources.

Conditional Diffusion Models

- Conditional Diffusion Models (CDMs) are an extension of diffusion probabilistic models, where the generation process is conditioned on auxiliary information. This conditioning allows for more structured and controlled synthesis, enabling models to produce outputs that adhere to specific constraints or descriptions.

- Conditioning in diffusion models can be applied using various inputs, such as text (e.g., CLIP embeddings, transformers) or visual data (e.g., images, segmentation maps, depth maps). These inputs influence both the theoretical underpinnings and practical implementations of the models, enhancing their ability to generate outputs aligned with user-defined specifications.

- Early diffusion models relied on simple concatenation techniques for conditioning. However, modern architectures have adopted more sophisticated methods like cross-attention mechanisms, which significantly improve guidance effectiveness. Additionally, techniques such as classifier-free guidance and feature modulation further refine controllability, allowing models to better interpret conditioning signals. These advancements make CDMs powerful tools for diverse tasks, including text-to-image synthesis and guided image manipulation.

Conditioning Mechanisms

-

Diffusion models, which iteratively denoise a Gaussian noise sample to generate an image, can be conditioned by modifying either the forward diffusion process, the reverse process, or both. Below are the primary methods used for conditioning:

- Concatenation: Directly concatenating conditioning information to the input (e.g., concatenating a text embedding or image feature map to the input image tensor). This was widely used in earlier models such as SR3 (Saharia et al., 2021) and Palette (Saharia et al., 2022).

- Cross-Attention: Using a transformer-based cross-attention mechanism to modulate the noise prediction process. This is commonly used in modern models like Imagen (Saharia et al., 2022) and Stable Diffusion (Rombach et al., 2022).

- Adaptive Normalization (AdaGN, AdaIN): Using conditioning information to modulate the mean and variance of intermediate activations.

- Classifier Guidance: Using an external classifier to guide the reverse diffusion process.

- Score-Based Guidance: Modifying the score function based on conditioning information.

-

Below, we describe how these approaches work mathematically and their implementations.

Text Conditioning in Diffusion Models

- Text conditioning in diffusion models typically involves leveraging text encoders such as CLIP, T5, or BERT to obtain a text embedding, which is then integrated into the diffusion model’s denoising network.

Encoding Textual Information

-

A text encoder extracts a fixed-length embedding from an input text description. Suppose the input text is denoted as \(T\), the text encoder \(E_{text}\) produces an embedding:

\[z_T = E_{text}(T) \in \mathbb{R}^{d_{text}}\]- where \(z_T\) is the resulting embedding vector.

Concatenation vs. Cross-Attention Conditioning

- Earlier models such as SR3 (Saharia et al., 2021) and Palette (Saharia et al., 2022) used direct concatenation of conditioning inputs with noise latents. However, modern models like Stable Diffusion and Imagen rely on cross-attention for more expressive conditioning.

Cross-Attention

-

A common method for integrating \(z_T\) into the U-Net-based denoiser is via cross-attention. If \(f_l\) represents the feature map at layer \(l\) of the U-Net, attention-modulated features are computed as:

\[\text{Attention}(Q, K, V) = \text{softmax}\left( \frac{Q K^T}{\sqrt{d}} \right) V,\]- where \(Q = W_Q f_l, \quad K = W_K z_T, \quad V = W_V z_T.\)

-

This allows the model to attend to relevant text features while generating an image.

Implementation Details (PyTorch)

class CrossAttention(nn.Module):

def __init__(self, dim, context_dim):

super().__init__()

self.to_q = nn.Linear(dim, dim)

self.to_k = nn.Linear(context_dim, dim)

self.to_v = nn.Linear(context_dim, dim)

self.scale = dim ** -0.5

def forward(self, x, context):

q = self.to_q(x)

k = self.to_k(context)

v = self.to_v(context)

attn = torch.einsum('b i d, b j d -> b i j', q, k) * self.scale

attn = attn.softmax(dim=-1)

out = torch.einsum('b i j, b j d -> b i d', attn, v)

return out

Visual Conditioning in Diffusion Models

- Visual conditioning can be applied using images, segmentation maps, edge maps, or depth maps as conditioning inputs.

Concatenation-Based Conditioning

- A simple way to condition on an image is by concatenating it with the noise input at each timestep:

\(x_t' = \text{concat}(x_t, C)\)

- where \(x_t\) is the noisy image and \(C\) is the conditioning image. This method was prevalent in early models like SR3.

Feature Map Injection via Cross-Attention

- More advanced methods use feature injection via cross-attention, as seen in Stable Diffusion and Imagen. Instead of concatenation, this method extracts feature maps from a pretrained encoder \(E_{img}\):

- and injects these features at various U-Net layers via FiLM (Feature-wise Linear Modulation):

Implementation Details (PyTorch)

class FiLM(nn.Module):

def __init__(self, in_channels, conditioning_dim):

super().__init__()

self.gamma = nn.Linear(conditioning_dim, in_channels)

self.beta = nn.Linear(conditioning_dim, in_channels)

def forward(self, x, conditioning):

gamma = self.gamma(conditioning).unsqueeze(-1).unsqueeze(-1)

beta = self.beta(conditioning).unsqueeze(-1).unsqueeze(-1)

return gamma * x + beta

Classifier-Free Guidance

Background: Why Are External Classifiers Needed for Text-to-Image Synthesis Using Diffusion Models?

- Diffusion models when used for text-to-image synthesis produce high-quality and coherent images from textual descriptions. However, early implementations of diffusion-based text-to-image models often struggled with aligning generated images precisely with their corresponding textual descriptions. One method to improve this alignment is through classifier guidance, where an external classifier is used to steer the diffusion process. The introduction of an external classifier provided an initial improvement in text-to-image synthesis by guiding diffusion models towards more accurate outputs.

The Need for External Classifiers

- Conditional Control: Early diffusion models generated images by iteratively refining a noise vector but lacked a robust mechanism to ensure strict adherence to the input text description.

- Gradient-Based Steering: External classifiers enabled gradient-based guidance by evaluating intermediate diffusion steps and providing directional corrections to better match the conditioning input.

- Enhancing Specificity: Without an external classifier, models sometimes produced images that, while visually plausible, did not accurately capture the semantics of the input text. The classifier provided a corrective mechanism to reinforce textual consistency.

- Limitations of Pure Unconditional Diffusion Models: Unconditional diffusion models trained without any conditioning struggled to generate diverse yet accurate samples aligned with a given input prompt. External classifiers were introduced to bridge this gap by explicitly providing additional constraints during inference.

Key Papers Introducing External Classifiers for Text-to-Image Synthesis Using Diffusion Models

-

Several papers introduced and explored the use of external classifiers for guiding text-to-image synthesis in diffusion models:

- Dhariwal and Nichol (2021): “Diffusion Models Beat GANs on Image Synthesis”

- This paper introduced classifier guidance as a mechanism to improve the fidelity and control of image generation in diffusion models.

- The approach leveraged an external classifier trained to predict image labels, which was then used to modify the sampling process by influencing the reverse diffusion steps.

- Mathematically, the classifier-based guidance modifies the score function as: \(\nabla_x \log p(y \| x) \approx \frac{\partial f_y(x)}{\partial x},\) where \(f_y(x)\) represents the classifier’s output logits for class \(y\) given an image \(x\).

- Ho et al. (2021): “Classifier-Free Diffusion Guidance”

- This work proposed classifier-free guidance as an alternative to classifier-based guidance, enabling the model to learn both conditioned and unconditioned paths internally without requiring an external classifier.

- It showed that classifier-free guidance could achieve competitive or superior results compared to classifier-based methods while reducing architectural complexity.

- Ramesh et al. (2022): “Hierarchical Text-Conditional Image Generation with CLIP Latents (DALL·E 2)”

- This paper incorporated a CLIP-based approach to improve text-to-image alignment without directly using an external classifier.

- Instead of an explicit classifier, a pretrained CLIP model was used to guide the image generation by matching textual and visual embeddings.

- Dhariwal and Nichol (2021): “Diffusion Models Beat GANs on Image Synthesis”

How Classifier-Free Guidance Works

- Compared to using external classifiers, classifier-free guidance has since emerged as a more efficient and flexible alternative, eliminating the need for additional classifiers while maintaining or exceeding the performance of classifier-based methods. Put simply, classifier-free guidance provides an alternative to external classifier-based guidance by training the model to handle both conditioned and unconditioned paths internally.

- By incorporating a dual-path training approach and an adjustable guidance scale, classifier-free guidance enhances fidelity, efficiency, and control in text-to-image synthesis, making it a preferred choice in modern generative models.

Dual Training Path

- Conditioned and Unconditioned Paths: During training, the model learns two distinct paths:

- A conditioned path, where the model is trained to generate outputs aligned with a given text description.

- An unconditioned path, where the model generates outputs without any guidance.

- Random Conditioning Dropout: To encourage robustness, the model is trained with random conditioning dropout, where a fraction of inputs are deliberately trained without text guidance.

- Self-Guidance Mechanism: By learning both paths simultaneously, the model can interpolate between conditioned and unconditioned generations, allowing it to effectively control guidance strength during inference.

Equations

- Training Objective:

- The model learns two score functions:

\(\epsilon_\theta(x_t, y) \text{ and } \epsilon_\theta(x_t, \varnothing),\)

- where:

- \(x_t\) is the noised image at time step \(t\),

- \(y\) represents the conditioning input (e.g., text prompt), and

- \(\varnothing\) represents the unconditioned input.

- where:

- The model learns two score functions:

\(\epsilon_\theta(x_t, y) \text{ and } \epsilon_\theta(x_t, \varnothing),\)

- Guidance During Inference:

- Classifier-free guidance is implemented as:

\(\tilde{\epsilon}_\theta(x_t, y) = (1 + \gamma) \epsilon_\theta(x_t, y) - \gamma \epsilon_\theta(x_t, \varnothing),\)

- where \(\gamma\) is the guidance scale controlling adherence to the conditioning input.

- Classifier-free guidance is implemented as:

\(\tilde{\epsilon}_\theta(x_t, y) = (1 + \gamma) \epsilon_\theta(x_t, y) - \gamma \epsilon_\theta(x_t, \varnothing),\)

- Effect of Guidance Scale:

- When \(\gamma = 0\), the model behaves as an unconditional generator.

- When \(\gamma\) is increased, the generated output aligns more closely with the text condition.

Benefits of Classifier-Free Guidance

- Eliminates the Need for an External Classifier

- Traditional classifier-based guidance requires a separately trained classifier, adding complexity to both training and inference.

- Classifier-free guidance removes this dependency, simplifying the overall architecture while maintaining strong performance.

- Improved Sample Quality

- External classifiers introduce additional noise and potential misalignment between the classifier and the generative model.

- Classifier-free guidance directly integrates the conditioning within the diffusion process, leading to more natural and coherent outputs.

- Reduced Computational Cost

- Training and utilizing an external classifier increases the computational burden.

- Classifier-free guidance eliminates the need for additional model components, streamlining both training and inference.

- Enhanced Generalization and Robustness

- Classifier-based methods can be prone to adversarial vulnerabilities and overfitting to specific datasets.

- Classifier-free approaches allow the diffusion model to generalize better across different conditioning signals and input variations.

- Flexibility and Real-Time Control

- Classifier-free guidance allows for dynamic adjustment of the guidance scale \(\gamma\) at inference time, providing fine-tuned control over generation quality and diversity.

- Users can experiment with different \(\gamma\) values without retraining the model, unlike classifier-based methods where the external classifier’s influence is fixed.

Prompting Guidance

- Crafting effective prompts is crucial for generating high-quality and relevant outputs using diffusion models. This guide is divided into two main sections: (i) Prompting for Text-to-Image models and (ii) Prompting for Text-to-Video models.

Prompting Text-to-Image Models

- Text-to-image models, such as Stable Diffusion, DALL-E, and Imagen, translate textual descriptions into visual outputs. The success of a prompt depends on how well it describes the desired image in a structured, caption-like format. Below are the key considerations and techniques for crafting effective prompts for text-to-image generation.

Key Prompting Guidelines

- Phrase Your Prompt as an Image Caption:

- Avoid conversational language or commands. Instead, describe the desired image with concise, clear details as you would in an image caption.

- Example: “Realistic photo of a snowy mountain range under a clear blue sky, with sunlight casting long shadows.”

- Structure Your Prompt Using the Formula:

- [Subject] in [Environment], [Optional Pose/Position], [Optional Lighting], [Optional Camera Position/Framing], [Optional Style/Medium].

- Example: “A golden retriever playing in a grassy park during sunset, photorealistic, warm lighting.”

- Character Limit:

- Prompts must not exceed 1024 characters. Place less important details near the end.

- Avoid Negation Words:

- Do not use words like “no,” “not,” or “without.” For example, the prompt “a fruit basket with no bananas” may result in bananas being included. Instead, use negative prompts:

- Example:

Prompt: A fruit basket with apples and oranges.

Negative Prompt: Bananas.

- Example:

- Do not use words like “no,” “not,” or “without.” For example, the prompt “a fruit basket with no bananas” may result in bananas being included. Instead, use negative prompts:

- Refinement Techniques:

- Use a consistent seed value to test prompt variations, iterating with small changes to understand how each affects the output.

- Once satisfied with a prompt, generate variations by running the same prompt with different seed values.

Example Prompts for Text-to-Image Models

| Use Case | Prompt | Negative Prompt |

|---|---|---|

| Stock Photo | "Realistic editorial photo of a teacher standing at a blackboard with a warm smile." | "Crossed arms." |

| Story Illustration | "Whimsical storybook illustration: a knight in armor kneeling before a glowing sword." | "Cartoonish style." |

| Cinematic Landscape | "Drone view of a dark river winding through a stark Icelandic landscape, cinematic quality." | None |

Prompting Text-to-Video Models

- Text-to-video models extend text-to-image capabilities to temporal domains, generating coherent sequences of frames based on textual prompts. These models use additional techniques, such as temporal embeddings, to capture motion and transitions over time.

Key Prompting Guidelines

- Phrase Your Prompt as a Video Summary:

- Describe the video sequence as if summarizing its content, focusing on the subject, action, and environment.

- Example: “A time-lapse of a sunflower blooming in a sunny garden. Vibrant colors, cinematic lighting.”

- Include Camera Movement for Dynamic Outputs:

- Add camera movement descriptions (e.g., dolly shot, aerial view) at the start or end of the prompt for optimal results.

- Example: “Arc shot of a basketball spinning on a finger in slow motion. Cinematic, sharp focus, 4K resolution.”

- Character Limit:

- Like text-to-image prompts, video prompts must not exceed 1024 characters.

- Avoid Negation Words:

- Use negative prompts to exclude unwanted elements, similar to text-to-image generation.

- Refinement Techniques:

- Experiment with different camera movements, action descriptions, or lighting effects to improve output consistency and realism.

Camera Movements

- In video prompts, describing camera motion adds dynamic perspectives to the generated sequence. Below is a reference table of common camera movements and their suggested keywords:

| Camera Movement | Suggested Keywords | Definition |

|---|---|---|

| Aerial Shot | aerial shot, drone shot, first-person view (FPV) | A shot taken from above, often from a drone or aircraft. |

| Arc Shot | arc shot, 360-degree shot, orbit shot | Camera moves in a circular path around a central point/object. |

| Clockwise Rotation | camera rotates clockwise, clockwise rolling shot | Camera rotates in a clockwise direction. |

| Dolly In | dolly in, camera moves forward | Camera moves forward. |

Example Prompts for Text-to-Video Models

| Use Case | Prompt | Negative Prompt |

|---|---|---|

| Food Advertisement | "Cinematic dolly shot of a juicy cheeseburger with melting cheese, fries, and a cola on a diner table." | "Messy table." |

| Product Showcase | "Arc shot of a luxury wristwatch on a glass display, under studio lighting, with a blurred background." | "Low resolution." |

| Nature Scene | "Aerial shot of a waterfall cascading through a dense forest. Soft lighting, 4K resolution." | None |

Summary

Text-to-Image

- For text-to-image tasks, focus on describing the subject and its environment with optional details like lighting, style, and camera position. Use clear, concise descriptions structured like image captions.

Text-to-Video

-

For text-to-video tasks, describe the sequence as a whole, including subject actions, camera movements, and temporal transitions. Camera motion plays a critical role in adding dynamic elements to the video output.

-

Both types of prompting require careful attention to phrasing and refinement to achieve optimal results. By iterating and experimenting with different seeds and negative prompts, you can generate visually stunning and contextually accurate outputs tailored to your needs.

Diffusion Models in PyTorch

Implementing the original paper

- Let’s go over the original Denoising Diffusion Probabilistic Models (DDPMs) paper by Ho et al.,2020 and implement it step by step based on Phil Wang’s implementation and The Annotated Diffusion by Hugging Face which are both based off the original implementation.

Pre-requisites: Setup and Importing Libraries

- Let’s start with the setup and importing all the required libraries:

from IPython.display import Image

Image(filename='assets/78_annotated-diffusion/ddpm_paper.png')

!pip install -q -U einops datasets matplotlib tqdm

import math

from inspect import isfunction

from functools import partial

%matplotlib inline

import matplotlib.pyplot as plt

from tqdm.auto import tqdm

from einops import rearrange

import torch

from torch import nn, einsum

import torch.nn.functional as F

Helper functions

- Now let’s implement the neural network we have looked at earlier. First we start with a few helper functions.

- Most notably, we define

Residualclass which will add the input to the output of a particular function. That is, it adds a residual connection to a particular function.

def exists(x):

return x is not None

def default(val, d):

if exists(val):

return val

return d() if isfunction(d) else d

class Residual(nn.Module):

def __init__(self, fn):

super().__init__()

self.fn = fn

def forward(self, x, *args, **kwargs):

return self.fn(x, *args, **kwargs) + x

def Upsample(dim):

return nn.ConvTranspose2d(dim, dim, 4, 2, 1)

def Downsample(dim):

return nn.Conv2d(dim, dim, 4, 2, 1)

- Note: the parameters of the neural network are shared across time (noise level).

- Thus, for the neural network to keep track of which time step (noise level) it is on, the authors used sinusoidal position embeddings to encode \(t\).

- The

SinusoidalPositionEmbeddingsclass, that we have defined below, takes a tensor of shape(batch_size,1)as input or the noise levels in a batch. - It will then turn this input tensor into a tensor of shape

(batch_size, dim)wheredim$is the dimensionality of the position embeddings.

class SinusoidalPositionEmbeddings(nn.Module):

def __init__(self, dim):

super().__init__()

self.dim = dim

def forward(self, time):

device = time.device

half_dim = self.dim // 2

embeddings = math.log(10000) / (half_dim - 1)

embeddings = torch.exp(torch.arange(half_dim, device=device) * -embeddings)

embeddings = time[:, None] * embeddings[None, :]

embeddings = torch.cat((embeddings.sin(), embeddings.cos()), dim=-1)

return embeddings

Model Core: ResNet or ConvNeXT

- Now we will look at the meat or the core part of our U-Net model. The original DDPM authors employed a Wide ResNet block via Zagoruyko et al., 2016, however Phil Wang has also introduced support for ConvNeXT block via Liu et al., 2022.

- You are free to choose either or in your final U-Net architecture but both are provided below:

class Block(nn.Module):

def __init__(self, dim, dim_out, groups = 8):

super().__init__()

self.proj = nn.Conv2d(dim, dim_out, 3, padding = 1)

self.norm = nn.GroupNorm(groups, dim_out)

self.act = nn.SiLU()

def forward(self, x, scale_shift = None):

x = self.proj(x)

x = self.norm(x)

if exists(scale_shift):

scale, shift = scale_shift

x = x * (scale + 1) + shift

x = self.act(x)

return x

class ResnetBlock(nn.Module):

"""https://arxiv.org/abs/1512.03385"""

def __init__(self, dim, dim_out, *, time_emb_dim=None, groups=8):

super().__init__()

self.mlp = (

nn.Sequential(nn.SiLU(), nn.Linear(time_emb_dim, dim_out))

if exists(time_emb_dim)

else None

)

self.block1 = Block(dim, dim_out, groups=groups)

self.block2 = Block(dim_out, dim_out, groups=groups)

self.res_conv = nn.Conv2d(dim, dim_out, 1) if dim != dim_out else nn.Identity()

def forward(self, x, time_emb=None):

h = self.block1(x)

if exists(self.mlp) and exists(time_emb):

time_emb = self.mlp(time_emb)

h = rearrange(time_emb, "b c -> b c 1 1") + h

h = self.block2(h)

return h + self.res_conv(x)

class ConvNextBlock(nn.Module):

"""https://arxiv.org/abs/2201.03545"""

def __init__(self, dim, dim_out, *, time_emb_dim=None, mult=2, norm=True):

super().__init__()

self.mlp = (

nn.Sequential(nn.GELU(), nn.Linear(time_emb_dim, dim))

if exists(time_emb_dim)

else None

)

self.ds_conv = nn.Conv2d(dim, dim, 7, padding=3, groups=dim)

self.net = nn.Sequential(

nn.GroupNorm(1, dim) if norm else nn.Identity(),

nn.Conv2d(dim, dim_out * mult, 3, padding=1),

nn.GELU(),

nn.GroupNorm(1, dim_out * mult),

nn.Conv2d(dim_out * mult, dim_out, 3, padding=1),

)

self.res_conv = nn.Conv2d(dim, dim_out, 1) if dim != dim_out else nn.Identity()

def forward(self, x, time_emb=None):

h = self.ds_conv(x)

if exists(self.mlp) and exists(time_emb):

condition = self.mlp(time_emb)

h = h + rearrange(condition, "b c -> b c 1 1")

h = self.net(h)

return h + self.res_conv(x)

Attention

- Next, we will look into defining the attention module which was added between the convolutional blocks in DDPM.

- Phil Wang added two variants of attention, a normal multi-headed self-attention from the original Transformer paper (Vaswani et al.,2017), and linear attention variant (Shen et al., 2018).

- Linear attention variant’s time and memory requirements scale linear in the sequence length, as opposed to quadratic for regular attention.

class Attention(nn.Module):

def __init__(self, dim, heads=4, dim_head=32):

super().__init__()

self.scale = dim_head**-0.5

self.heads = heads

hidden_dim = dim_head * heads

self.to_qkv = nn.Conv2d(dim, hidden_dim * 3, 1, bias=False)

self.to_out = nn.Conv2d(hidden_dim, dim, 1)

def forward(self, x):

b, c, h, w = x.shape

qkv = self.to_qkv(x).chunk(3, dim=1)

q, k, v = map(

lambda t: rearrange(t, "b (h c) x y -> b h c (x y)", h=self.heads), qkv

)

q = q * self.scale

sim = einsum("b h d i, b h d j -> b h i j", q, k)

sim = sim - sim.amax(dim=-1, keepdim=True).detach()

attn = sim.softmax(dim=-1)

out = einsum("b h i j, b h d j -> b h i d", attn, v)

out = rearrange(out, "b h (x y) d -> b (h d) x y", x=h, y=w)

return self.to_out(out)

class LinearAttention(nn.Module):

def __init__(self, dim, heads=4, dim_head=32):

super().__init__()

self.scale = dim_head**-0.5

self.heads = heads

hidden_dim = dim_head * heads

self.to_qkv = nn.Conv2d(dim, hidden_dim * 3, 1, bias=False)

self.to_out = nn.Sequential(nn.Conv2d(hidden_dim, dim, 1),

nn.GroupNorm(1, dim))

def forward(self, x):

b, c, h, w = x.shape

qkv = self.to_qkv(x).chunk(3, dim=1)

q, k, v = map(

lambda t: rearrange(t, "b (h c) x y -> b h c (x y)", h=self.heads), qkv

)

q = q.softmax(dim=-2)

k = k.softmax(dim=-1)

q = q * self.scale

context = torch.einsum("b h d n, b h e n -> b h d e", k, v)

out = torch.einsum("b h d e, b h d n -> b h e n", context, q)

out = rearrange(out, "b h c (x y) -> b (h c) x y", h=self.heads, x=h, y=w)

return self.to_out(out)

- DDPM then adds group normalization to interleave the convolutional/attention layers of the U-Net architecture.

- Below, the

PreNormclass will apply group normalization before the attention layer.- Note, there has been a debate about whether groupnorm is better to be applied before or after attention in Transformers.

class PreNorm(nn.Module):

def __init__(self, dim, fn):

super().__init__()

self.fn = fn

self.norm = nn.GroupNorm(1, dim)

def forward(self, x):

x = self.norm(x)

return self.fn(x)

Overall network

- Now that we have all the building blocks of the neural network (ResNet/ConvNeXT blocks, attention, positional embeddings, group norm), lets define our entire neural network.

- The task of this neural network is to take in a batch of noisy images and their noise levels and then to output the noise added to the input.

- The network takes a batch of noisy images of shape

(batch_size, num_channels, height, width)and a batch of noise levels of shape(batch_size, 1)as input, and returns a tensor of shape(batch_size, num_channels, height, width). - The network is built up as follows: (source)

- first, a convolutional layer is applied on the batch of noisy images, and position embeddings are computed for the noise levels

- next, a sequence of downsampling stages are applied.

- Each downsampling stage consists of two ResNet/ConvNeXT blocks + groupnorm + attention + residual connection + a downsample operation

- at the middle of the network, again ResNet or ConvNeXT blocks are applied, interleaved with attention

- next, a sequence of upsampling stages are applied.

- Each upsampling stage consists of two ResNet/ConvNeXT blocks + groupnorm + attention + residual connection + an upsample operation

- finally, a ResNet/ConvNeXT block followed by a convolutional layer is applied.

class Unet(nn.Module):

def __init__(

self,

dim,

init_dim=None,

out_dim=None,

dim_mults=(1, 2, 4, 8),

channels=3,

with_time_emb=True,

resnet_block_groups=8,

use_convnext=True,

convnext_mult=2,

):

super().__init__()

# determine dimensions

self.channels = channels

init_dim = default(init_dim, dim // 3 * 2)